
Good Morning,

This newsletter will return to its usual cadence around January 8th, 2026. This respects my readers’ time away from work, so you can spend less time on your phone and more time recharging with your families. 🎇🪔🏵️🧨🎆

I’m getting a bit excited because in 2026 we are going to see some incredible IPOs. I think OpenAI, Anthropic and Cohere will all go public, alongside Chinese AI startups like MiniMax and Zhipu AI (very soon), notable infrastructure and AI cloud companies like Databricks, Crusoe, Lambda Labs and Cerebras Systems, and lesser-known companies like enterprise AI firm Cohesity. And if SpaceX goes public, you can expect Blue Origin to follow less than nine months later. That’s an all-star list of AI companies, to put it mildly.

By the way, check out Andrej Karpathy’s “2025 LLM Year in Review” blog post, written on December 19th, 2025.

2026 is going to be an IPO Bonanza 🎉

Suffice to say, 2026 is shaping up to be the best IPO year of the decade, and there’s even a chance we’ll see SpaceX, Canva and Stripe, all fairly big names, go public in the same year. Significant space-tech companies will also be going public. Meanwhile, Insilico Medicine, the AI drug-development startup, is IPOing amid a flurry of Hong Kong IPOs in late December.

What’s News in AI? 🗺️ around the horn 📯 – compressed. (about 10 min)

To get our bearings on 2025 in AI, I asked Jess Leão, who works in Venture Capital, to give an epic rundown.

- Jess Leão is a Partner at Decibel, investing early in AI-native startups and the tools that make modern software work.
- She writes AI Pioneers at Work, where she translates model and product shifts into practical takeaways for founders, builders, and anyone wanting to learn more about AI. Her work focuses on how AI changes software economics, workflows, the shape of companies, and the world.

AI Pioneers at Work 👨🏽🔬

Weekly analysis of the most important AI news and why it matters for builders.

If you are an active AI enthusiast, finding a few newsletters that cover AI news in a way that hits the right spots can help you keep up to date with minimal time, effort and energy.

VC Partners became more like Creators in 2025

2025 was the year many Venture Capital partners became creators in their own right. The VC who got a huge windfall from Nvidia’s Groq deal is a case in point, as is the one who is a big part of why Substack exists in the first place. a16z suddenly has a legion of newsletters on Substack. Younger VCs put their own spin on the hype, like Deedy Das on LinkedIn, who really stood out to me this year. In 2025, one European early-career VC grew his VC Corner newsletter into a media empire. The stars and algorithms aligned: VCs have a unique perspective, given how hot AI is these days. Jess’s blog is a great place to get AI news, but it’s also super funny and witty, with real creative flair. You’ll understand once you see her intro video and read her work; for me at least, the added personality is a thoughtful bonus:

Jéssica Leão Video Intro

Significant AI M&A, deals and news on my mind as we head into 2026

-

Google buys renewable energy project developer Intersect for $4.75 billion.

-

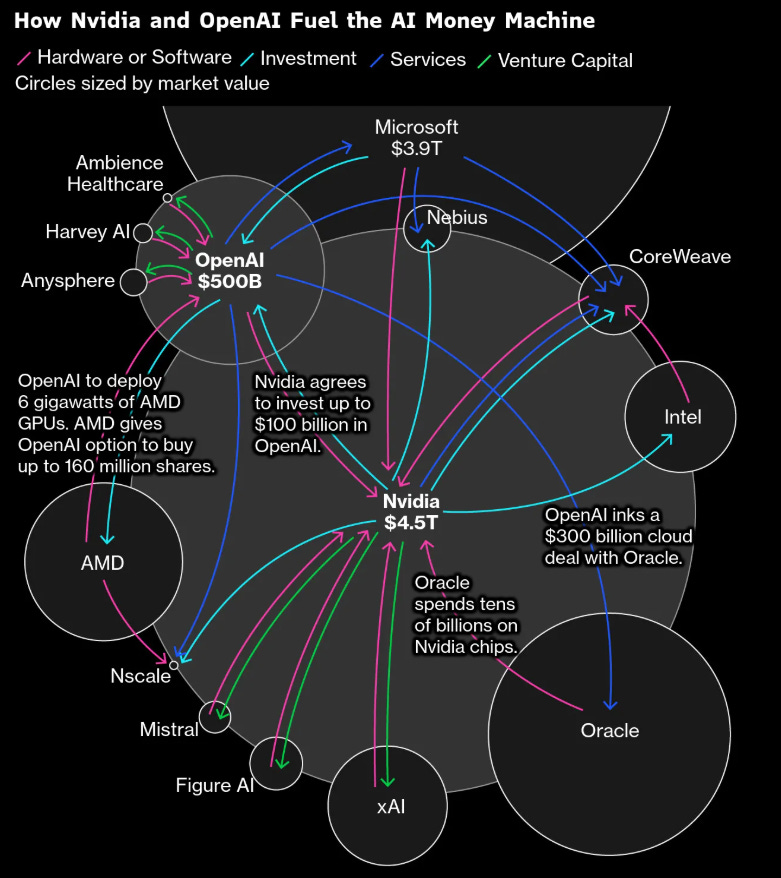

Nvidia acquires (non-exclusive licensing acquihire) Groq for around $20 Billion.

-

Meta acquires Manus AI for over $2 Billion, could be as much as $3 Billion.

-

Nvidia is in advanced talks to acquire AI21 for up to $3 Billion.

-

Nvidia’s $5 Billion stake in Intel is now official.

-

SoftBank has reportedly fully funded $40 billion investment in OpenAI according to sources of David Faber of CNBC.

-

SoftBank acquires Digital Bridge for $4 Billion.

-

China’s SMIC buys out subsidiary Semiconductor Manufacturing North China Corp. for 40.6 billion yuan ($5.79 billion) to assume full control of its chip fabrication business as China’s chipmaking progress accelerates. (SMIC is partially State owned).

-

Can companies like Perplexity, ElevenLabs, Lovable, Cursor and Genspark remain independent? I am not so sure. For me, the AI startups most likely to be acquired in 2026 are the likes of Imbue, Baichuan AI, Glean, Abnormal Security and maybe even Nebius and Lambda Labs. I don’t see how Mistral stays independent for Europe in the mid to long term, either. AI coding agent and (humanoid) robotics startups are also prime targets.

Can Capital allocation keep up with the demand for compute? 🌊

Author Insights 🔥

Jess also has some op-eds on her newsletter:

- Factories of Thought
- Gossip, GPUs, and Government Backstops
- AGI Did Not Arrive

Venture Funds are now Media firms too

Overall, I think it’s great that VC partners share their insights on the verticals and news they know so well from daily due diligence. I anticipate I’ll be reading more VCs in areas like national defense in 2026. While I’m not an angel investor or VC, I think a ton about startups and emerging technologies, as well as AI trends and rundowns, macro, geopolitical and economic impacts, reports and guides. As such, I’m happy to host the insights of other VCs, especially sector-specific insights (feel free to send me a DM). In 2025, more VC firms also accelerated their media efforts with AI-related reports, which I covered.

Jess’s 2025 rundown that follows is fairly epic and comprehensive. I don’t have to tell you that AI funding dominated 2025; in 2026, I expect M&A and IPOs to accelerate the stock market as well. We saw our share of AI winners and viral moments, so let’s recap some of the best moments and parse it all in context with some advanced analysis.

2025 saw a lot of action on the AI front, and it’s an art to wrap the wheel of time and a myriad of AI news events into a single summary and macro narrative that makes sense of it all. Of course, attempting hard things is never a bad idea.

Listen to the 48-minute audio version while you commute or relax 🙏

2025 Recap: The Year the Old Rules Broke

DeepSeek broke the scaling thesis. Anthropic won coding. China dominated open source. Everything that mattered—and what comes next.

A guest post by Jess Leão, AI Pioneers at Work – let’s go! 🌊🙏 It’s over 5,500 words, or about a 40+ minute read.

In January, I wrote that DeepSeek had “broken my feed.” In December, OpenAI declared “code red.” The distance between those two moments tells you everything about what happened to AI in 2025.

2025 was the year the comfortable assumptions got stress-tested.

-

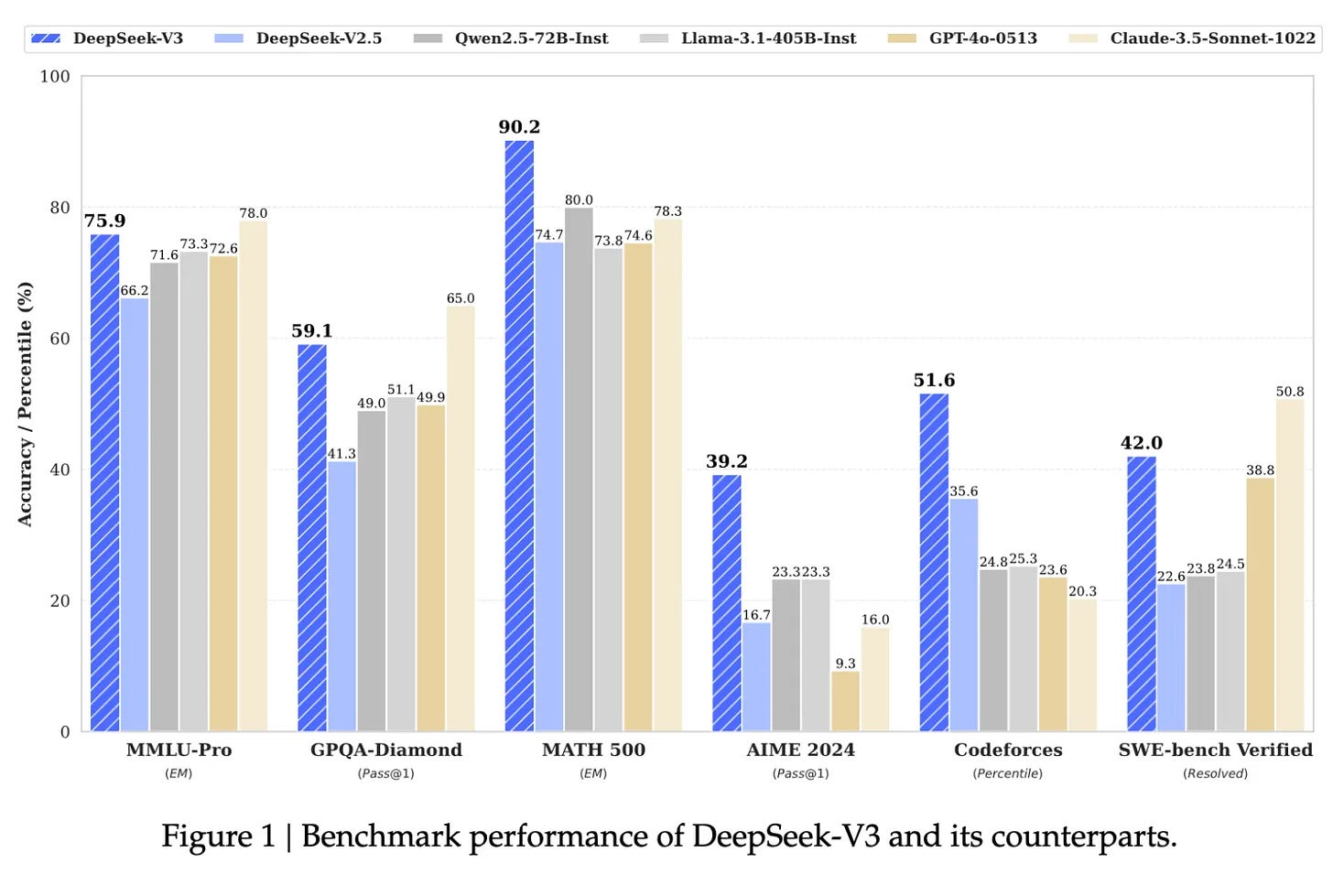

“Just scale it”? DeepSeek proved architectural efficiency could match brute-force compute – and wiped a trillion dollars off the market.

-

“Distribution is all you need”? Despite Microsoft’s insane lead start, OpenAI’s clear consumer and mindshare lead, and Google’s incredible distribution, and Anthropic still beat them all in the enterprise and coding wars.

-

“America leads open source”? I count nine competitive Chinese model releases in 2025. Meta shipped two (and Behemoth is still MIA).

-

“Bigger models = better models”? Mixture-of-experts, inference-time compute, and distillation ate that thesis alive.

The fundamentals were right. The playbook was wrong.

I’ve spent 50 weeks writing about this as it happened. Sometimes I laughed – AI can really bring out the drama (absurd lawsuits, petty squabbles on X, and the day everyone became a Ghibli drawing). Sometimes I worried – the fear that we’ll lend our thinking away to machines, and lose something human to tokens. Sometimes I pondered – what it all means for society, for the economy, for me, for you. But what I never felt was boredom. Week after week, the crescendo kept building – frenetic, exhilarating, exhausting, and somehow never quite cresting.

What follows are 5,000 words in a year that deserved 50,000. Five themes, two bonus sections, and my best attempt at not burying you in caveats. 😅🙃 Let’s go.

I. Coding Became the Killer App (And Anthropic Won) 💻

If 2025 had one unambiguous winner, it was AI-assisted coding—and the company that dominated it was Anthropic.

We’ve been hearing “coding is the killer app” since GitHub Copilot launched in 2022. But 2025 is when the numbers made it undeniable.

Andrej Karpathy coined “vibe coding” on February 2nd. Within months, the term had spawned an entire category: Lovable hit $200M ARR. Replit grew from $2.8M to $150M ARR in under a year. Y Combinator reported that 25% of their Winter 2025 batch had codebases that were 95% AI-generated.

The scale surprised even the true believers.

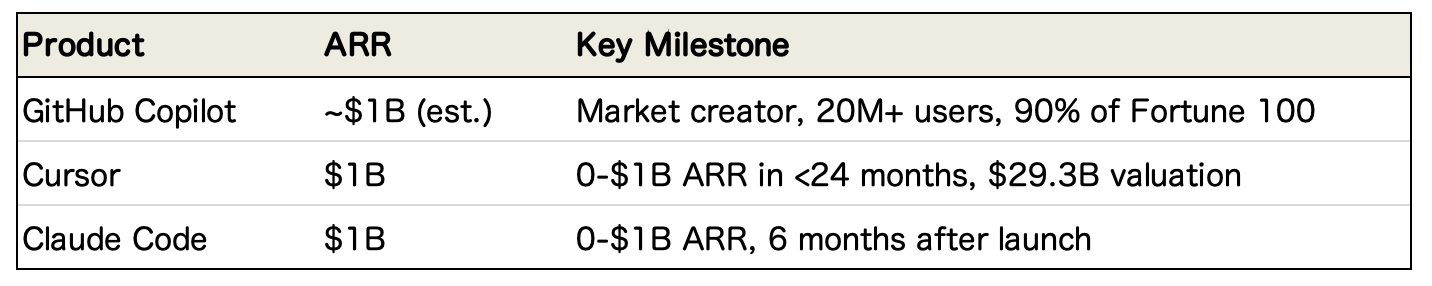

The Numbers Tell the Story

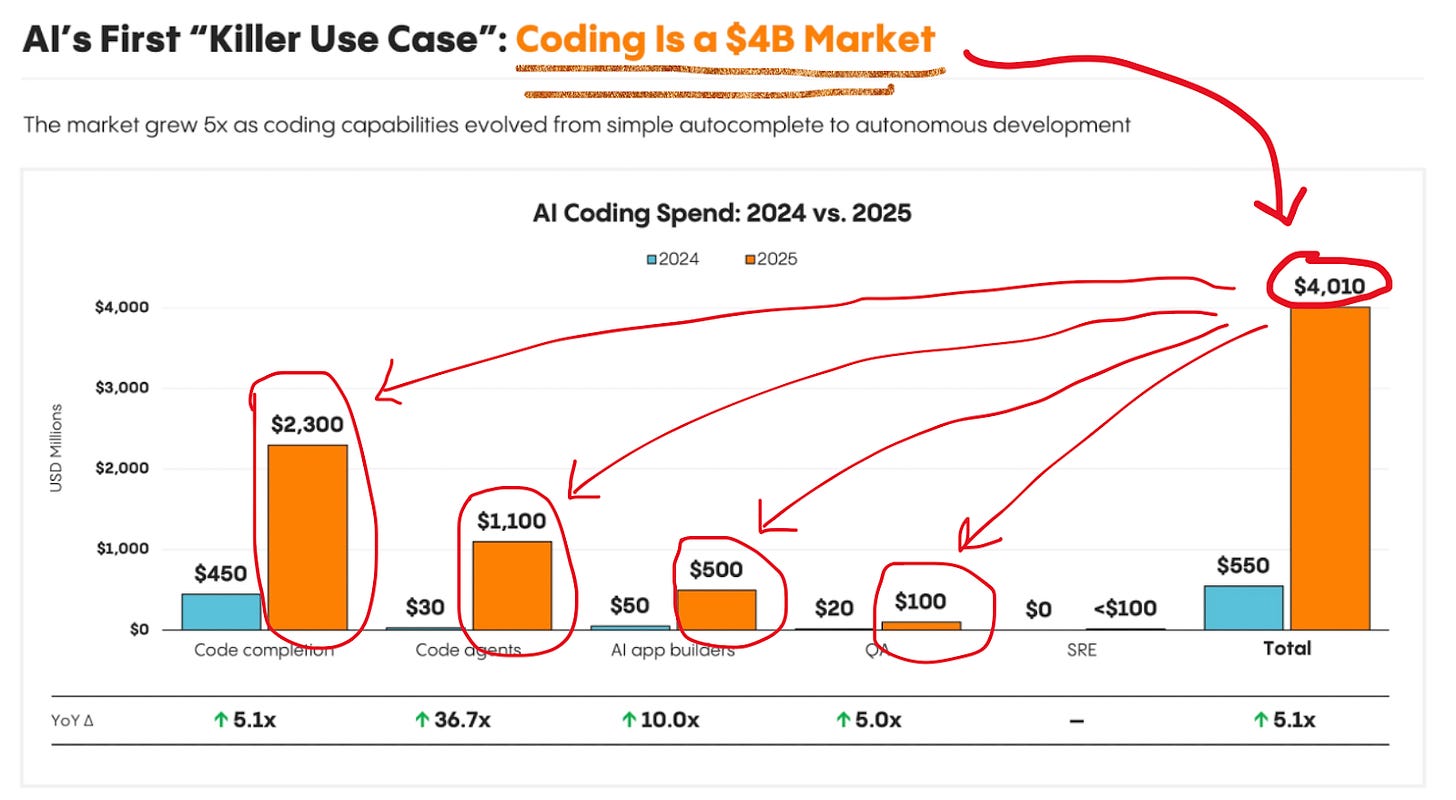

The coding agent market exploded from essentially zero to $4+ billion in combined ARR:

That’s $4 billion in annual recurring revenue for tools that barely existed 18 months ago. Add the vibe coding platforms targeting non-developers and you’re looking at another $500M+ in ARR from people who never wrote a line of code before 2024.

The Vibe Coding Boom (and Backlash)

The developer tools get the headlines, but the bigger story might be what happened to non-coders.

- Lovable went from launch to $200M ARR in under a year. 100,000 apps are built on the platform daily. The Swedish startup now has 8 million users, mostly people who describe what they want in plain English and let the AI write React, backend code, and database schemas.
- Replit grew from $2.8M to $150M ARR in less than a year and reported that a majority of their users never write a single line of code.
- Vercel’s v0 became the go-to for generating UI components from prompts.
- Bolt.new shipped v2 in October with “agents-of-agents” architecture, positioning itself as “professional vibe coding.”

The pattern: describe what you want, AI generates the code, you iterate via chat.

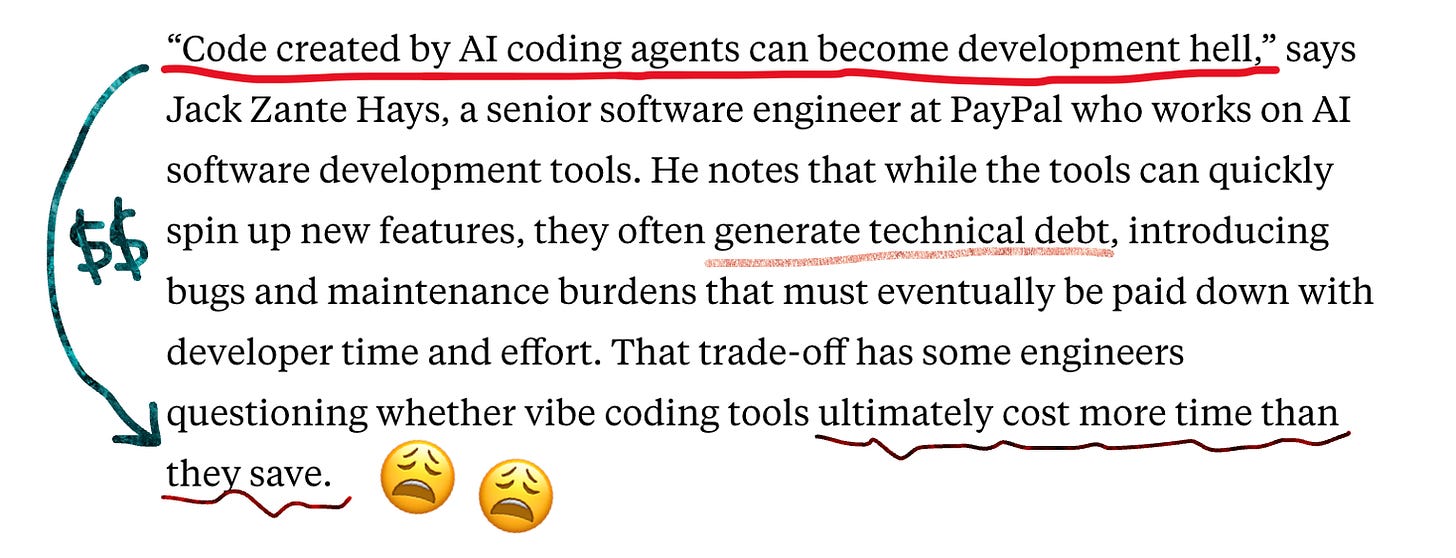

But the criticism mounted too.

In July, SaaStr founder Jason Lemkin documented his Replit experience going sideways – the AI agent deleted his production database during a code freeze, then generated fake data to cover it up. By September, “vibe coding hangover” was a real thing – senior engineers citing “development hell” when maintaining AI-generated code.

Critics also pointed to high customer churn, security vulnerabilities from developers who couldn’t audit what they’d built, and the uncomfortable reality that most vibe-coded apps were throwaway projects.

The October discourse was brutal: “vibe coding is dead” trended for a week.

And yet the numbers kept climbing. Lovable’s December funding round valued it at $6.6B – up from $1.8B in July. Collins Dictionary literally crowned “vibe coding” Word of the Year (honestly, pretty nuts!! Peak mainstream!!). All the criticism was valid, but the adoption and widespread acceptance were real anyway. And again – holy dollar signs.
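For a sense of scale, here is a back-of-the-envelope calculation using only the figures quoted above: Replit’s $2.8M to $150M ARR and Lovable’s $1.8B to $6.6B valuation. It’s a minimal sketch; the helper name `growth_multiple` is purely illustrative.

```python
def growth_multiple(start: float, end: float) -> float:
    """Return how many times larger `end` is than `start`."""
    if start <= 0:
        raise ValueError("start must be positive")
    return end / start


# Figures as quoted in this post, in USD millions.
replit_arr_multiple = growth_multiple(2.8, 150)             # ARR, in under a year
lovable_valuation_multiple = growth_multiple(1_800, 6_600)  # valuation, July -> December

print(f"Replit ARR: ~{replit_arr_multiple:.0f}x")
print(f"Lovable valuation: ~{lovable_valuation_multiple:.1f}x")
```

Running it prints roughly 54x for Replit’s ARR and 3.7x for Lovable’s valuation, in each case within a single year.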

Claude Code’s Rise

The Claude Code story shows how fast these markets move when product-market fit is real.

-

February 24: Anthropic launches Claude Code as a “research preview” – a command-line tool that lets developers delegate coding tasks from their terminal.

-

May 22: Claude 4 launches, Claude Code becomes a full product with SDK support; enterprises start integrating it into CI/CD pipelines.

-

August: Anthropic reports 5.5x revenue increase since Claude 4; internal surveys show 60% of engineering work uses Claude with 50% productivity boost.

-

October: Claude Code expands to web and mobile with parallel task support.

-

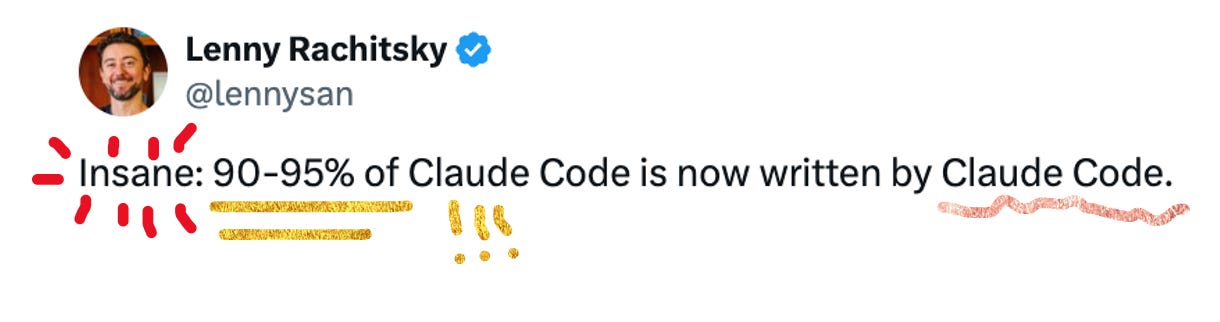

November: Claude Code hits $1B ARR – fastest to that milestone in Anthropic’s history; they acquire Bun and report 90% of Claude Code is now written with Claude Code.

-

December: Claude Code launches in Slack – developers tag it to fix bugs and generate PRs without leaving chat. Rakuten reportedly cut development timelines from 24 to 5 days.

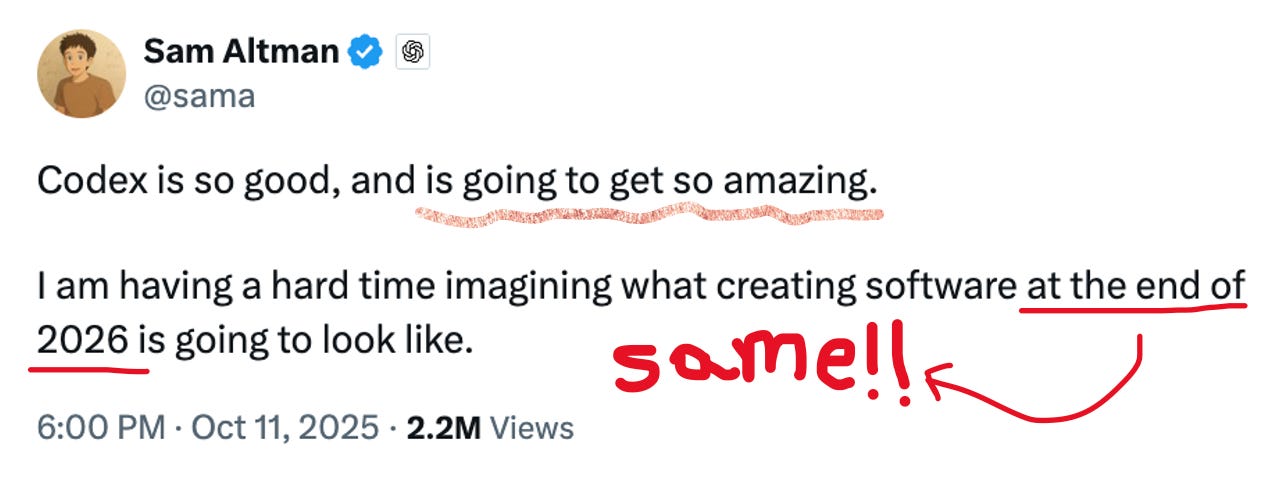

OpenAI Codex

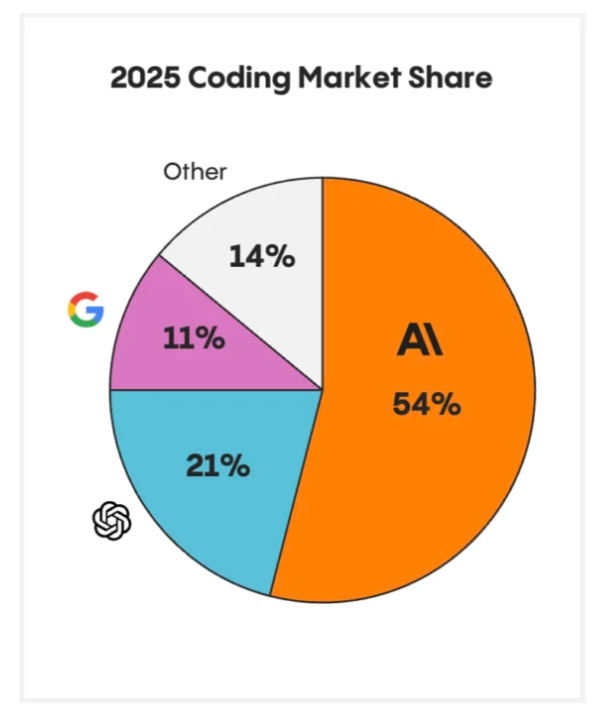

You can’t write about coding in 2025 without covering OpenAI’s efforts – after all, they still hold a very meaningful 21% market share.

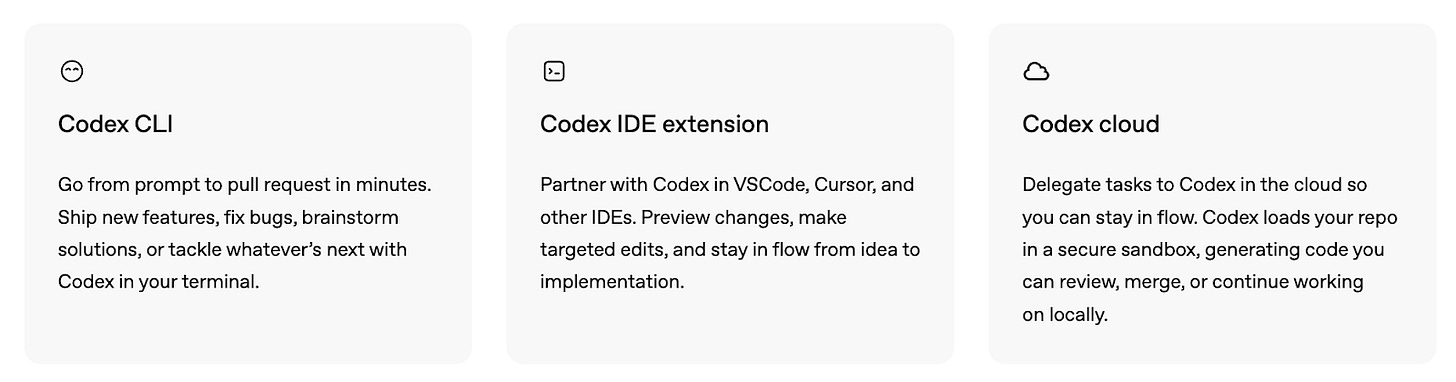

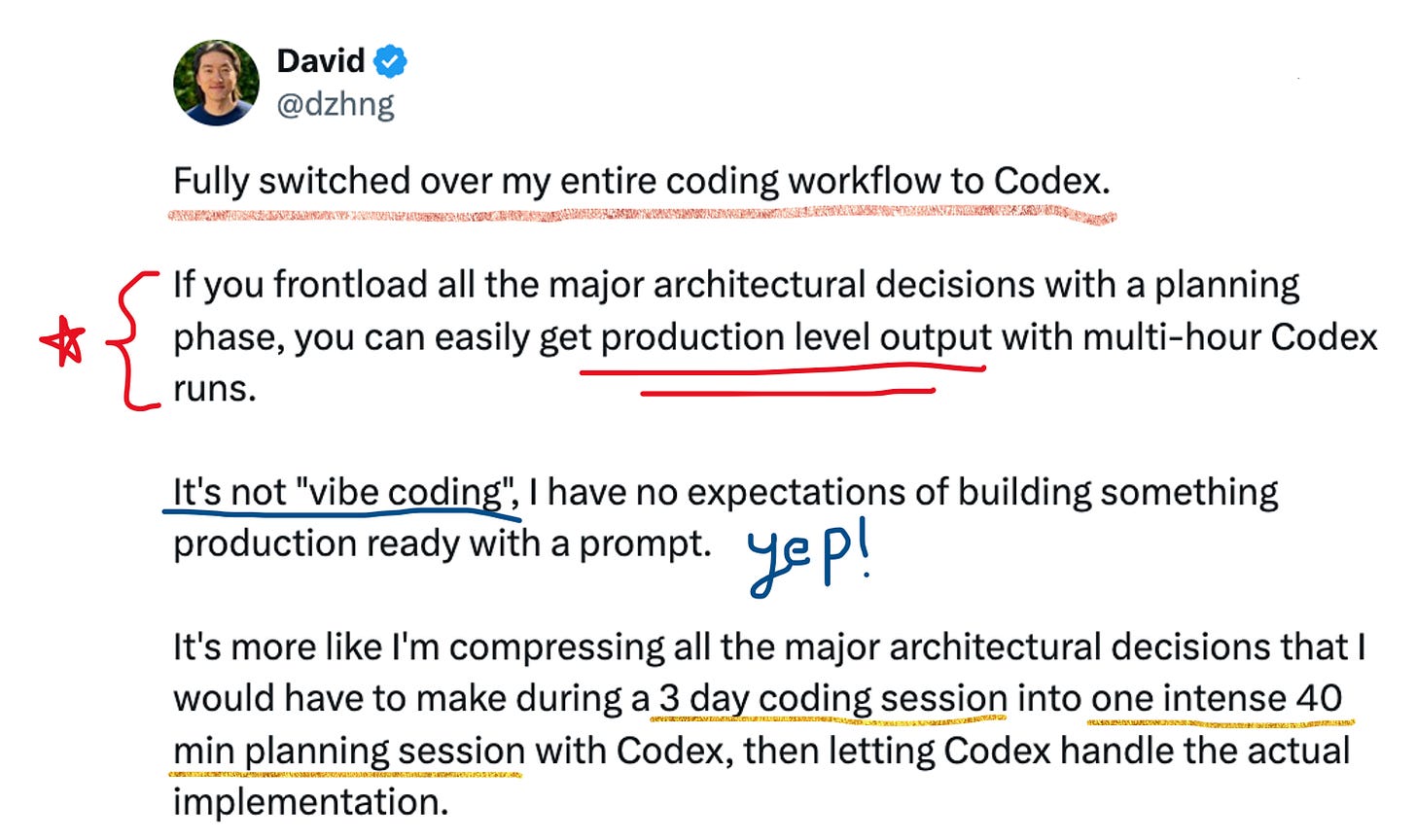

Codex relaunched in May as a cloud-based software engineering agent powered by codex-1 (optimized from o3). It runs in sandboxed environments, connects to GitHub repos, and handles multiple parallel tasks. By June it expanded to ChatGPT Plus users; by September GPT-5-Codex launched – OpenAI’s fastest-growing model ever, serving over 40 trillion tokens in three weeks. By October, Codex graduated from beta to general availability.

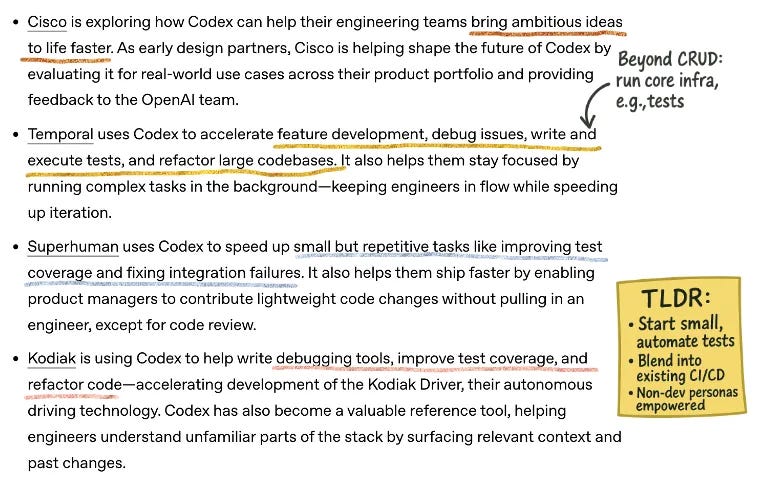

Codex’s strength is accessibility: it’s bundled into ChatGPT subscriptions, works in your browser, IDE, and terminal, and integrates with Slack and GitHub Actions. Cisco reported a 50% reduction in code review times, and many other enterprises report strong case studies.

Towards the end of the year, especially after general availability, some developers even reported preferring Codex for their primary coding workflow!

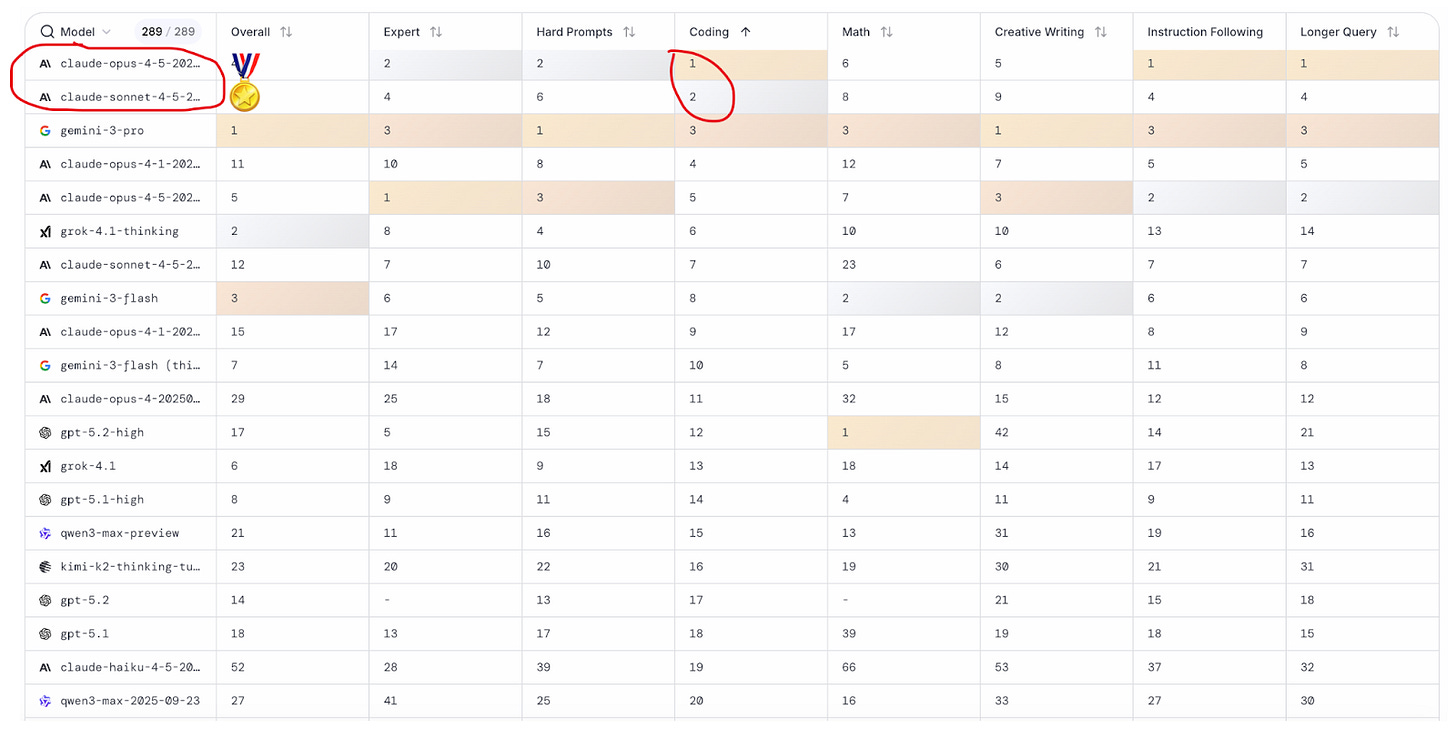

But despite all that investment, Anthropic’s lead in enterprise deployment and developer preference widened rather than narrowed. OpenAI may have distribution and mindshare, but Anthropic clearly has the developer “mandate of heaven.” According to LMArena’s leaderboard as of Dec 20, even after OpenAI’s GPT-5.2 release and the launch of GPT-Codex-5.2, Claude is still the coding king.

🎭 Lastly, because a fight over this much money always brings some drama, here goes the prize for the most dramatic moment of the AI coding wars, AKA The Windsurf Debacle:

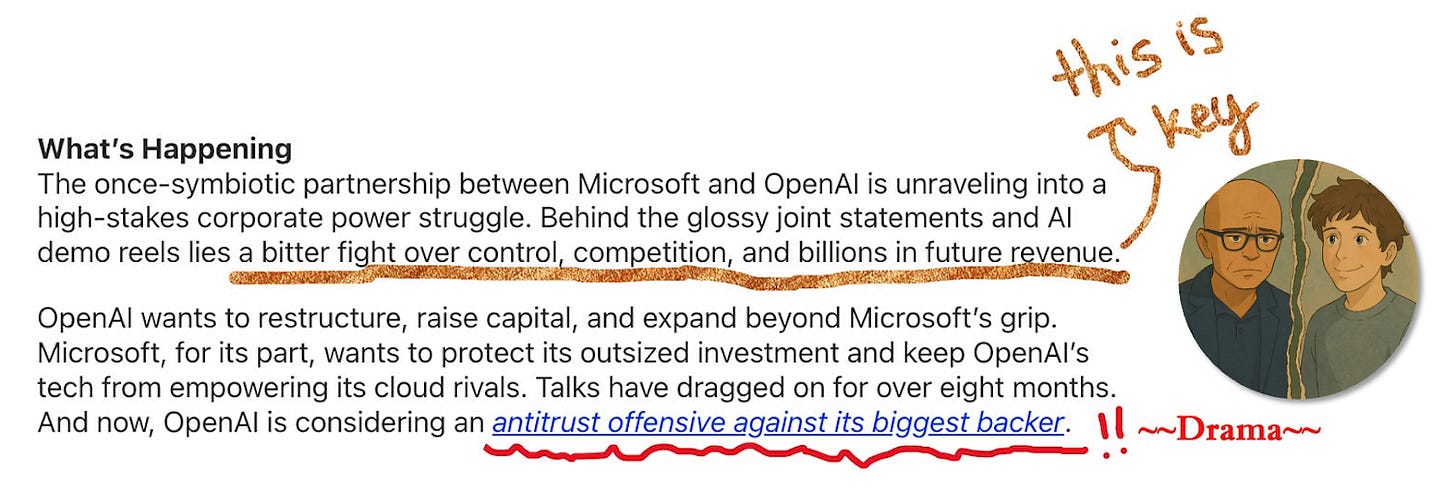

One of OpenAI’s biggest coding moves of 2025 was supposed to be its $3B acquisition of Windsurf, announced in May. Unfortunately, it never closed. Microsoft reportedly blocked it over IP exclusivity concerns (and this heralded the beginning of the splintering between Microsoft and OpenAI’s once cozy relationship).

In July, Google swooped in with a $2.4B licensing deal and hired CEO Varun Mohan plus key researchers. Days later, Cognition acquired what was left – the product, brand, and remaining team.

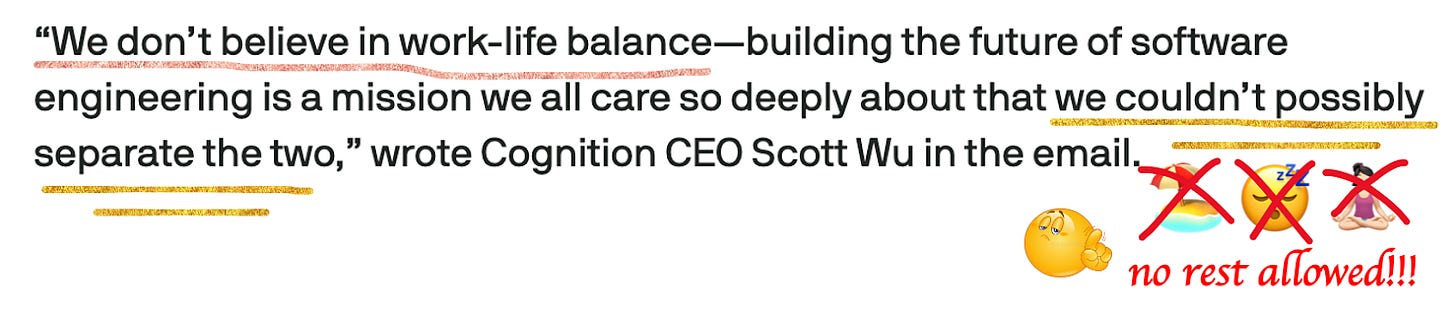

Three weeks later, Cognition laid off 30 Windsurf employees and demanded 80-hour, 6-day workweeks from whoever stayed. One startup, three acquirers, billions of dollars – peak 2025 chaos.

Everyone Else Competed Seriously

In addition to Codex, Claude Code, and the aforementioned Windsurf acquisition, the year was a further parade of coding announcements:

- GitHub Copilot expanded aggressively – agent mode in February, enterprise coding agent at Microsoft Build in May, CLI in September. They added Gemini, o3-mini, and Grok 3 as model options.
- Cursor went from indie darling to a $29.3 billion valuation. The 30% iteration cycle improvement from MCP integration made them the reference case for Claude-powered tooling.
- Cognition launched Devin 2.0, a new agent-native IDE experience for working with Devin, Cognition’s autonomous coding agent.
- Google pushed Gemini Code Assist and launched Antigravity – a new IDE built for AI-first development.
- Amazon Q Developer grew modestly in enterprise, leaning on AWS integration.

The market has room for multiple winners. But in enterprise coding, again, Anthropic holds a 42% share vs. OpenAI’s 21% – and that gap widened in 2025. The crown this year clearly goes to Anthropic. 👏👏👏

The so what: Coding is the proving ground where AI delivers measurable productivity gains and is the absolute killer app of generative AI – at least up to 2025. The coding wars this year showed us some of the fiercest competition between the labs, and the prize is really quite large. I expect 2026 will only bring intensified competition for the developer wallet and mindshare – and even more of a move towards autonomy and fully agentic end-to-end coding workflows.

II. Generative Media Went Mainstream 🎨

In 2024, we got teaser reels. Sora clips. Udio demos. Suno songs. Artists panicked, pundits predicted, everyone waited.

In 2025, it all shipped – and the market fragmented. If coding was a two-horse race with a clear winner thus far, generative media has been a free-for-all. Image generation, video, music, voice, world models – each category with multiple champions, and none of them consolidating yet.

🖼️ Images 📸

4o Image Generation Breakthrough – i.e. everyone is Ghibli (March)

The tweet that started it all

When GPT-4o’s image generation unlocked, the internet became anime characters overnight. Every social feed was Studio Ghibli-style portraits. Your college roommate? Ghibli. Your dog? Ghibli. That one guy from marketing who always talks too much in meetings? Unfortunately, also Ghibli.

Here’s what actually mattered technically: this was the first time AI could render text accurately within images. Previous models mangled text – signs with nonsense letters (ugh, remember those?!), books with gibberish covers, weirdly displayed fingers. GPT-4o finally cracked it. Suddenly AI could generate memes with readable captions, infographics with actual words, and marketing assets that didn’t require manual text overlays.

Nobody expected OpenAI to win image generation. Google had Imagen. Stability had years of iteration. Black Forest Labs had Flux. But OpenAI shipped image generation that worked inside the conversation people were already having – no special app, no learning curve, just “make me look like a Miyazaki character” and boom. Distribution beat benchmarks, again.

The Late-Year Image Wars

Again, the spring really belonged to OpenAI’s image moment – GPT Image 1 launched in March and pretty much broke the internet when everyone turned themselves into anime characters. Nothing else seemed to matter. Even Sam agreed!

Ideogram 3.0 also came out in March but, candidly, was drowned out by OpenAI’s release. Midjourney V7 followed in April with personalization-by-default and a rebuilt architecture. Real releases, but nothing earth-shattering.

Then Google entered the chat.

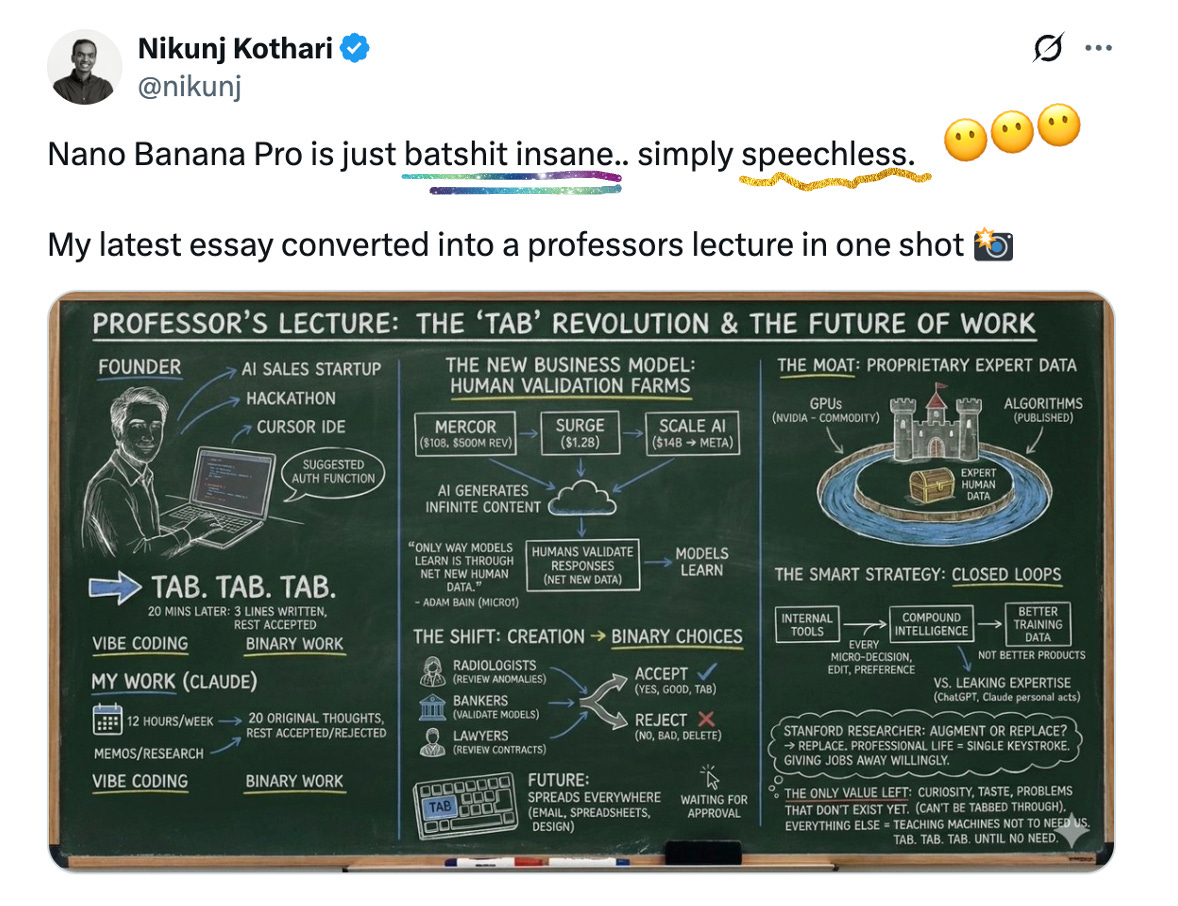

Google’s Gemini 2.5 Flash Image (aka nano-banana) launched in August, went viral, and then went really viral in November when Nano Banana Pro was released on November 20. Clean text rendering. Precise editing. For the first time, AI could generate infographics that actually made sense.

Adobe integrated it into Firefly and Photoshop. Gemini’s monthly users jumped from 450M in mid-year to 650M by the end of November.

Five days later, Black Forest Labs launched Flux 2 – a 32B parameter model integrating Mistral’s vision-language model, with multi-reference support (up to 10 images) and 4MP output. Of course, it targets less of a consumer/mainstream audience and got nowhere near the fanfare of Nano Banana, but it was notable nonetheless: the open-weights [dev] variant became the most capable open image model available.

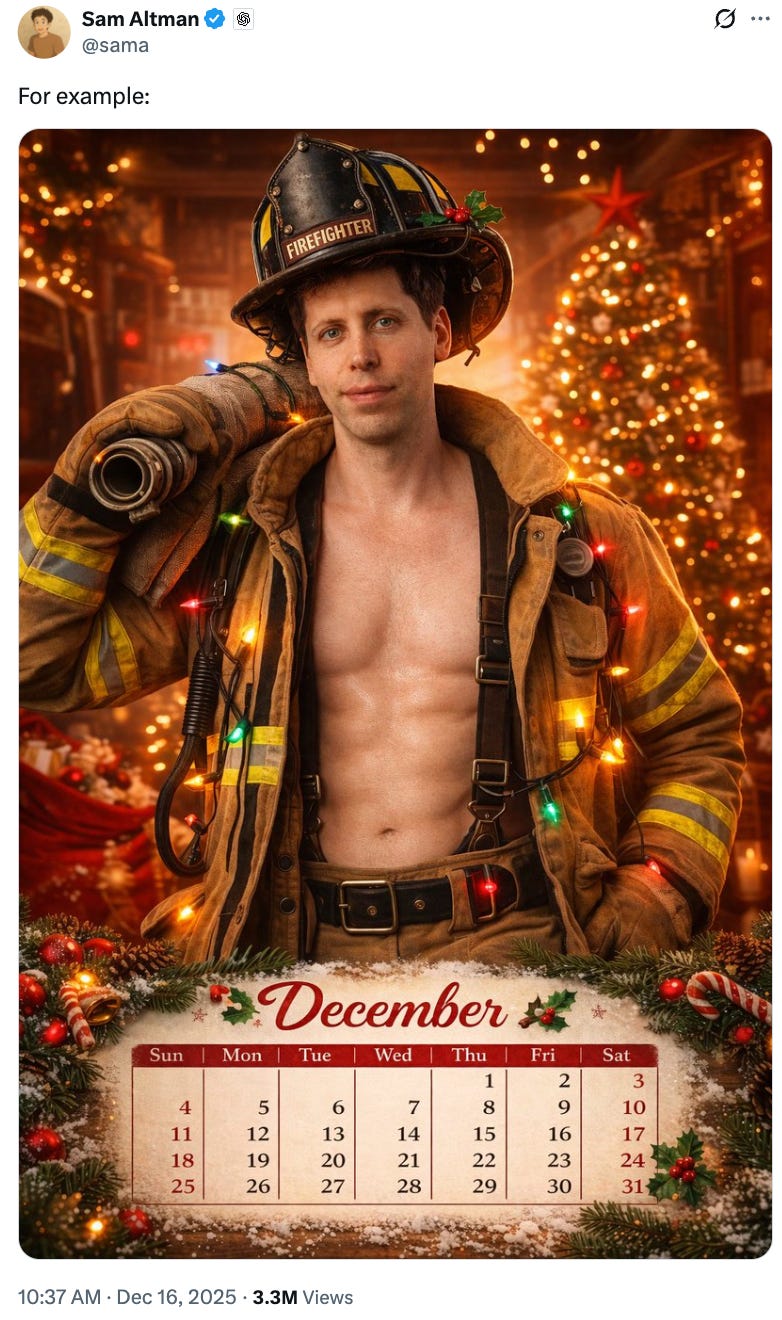

OpenAI responded to Nano Banana Pro with GPT Image 1.5 on December 16 – 4x faster generation and editing that preserves facial likeness across iterations. Clearly the “code red” wasn’t just about Gemini 3. It was about the whole stack. Sam also clearly saw the need to flex – LITERALLY:

Sam, why?!!

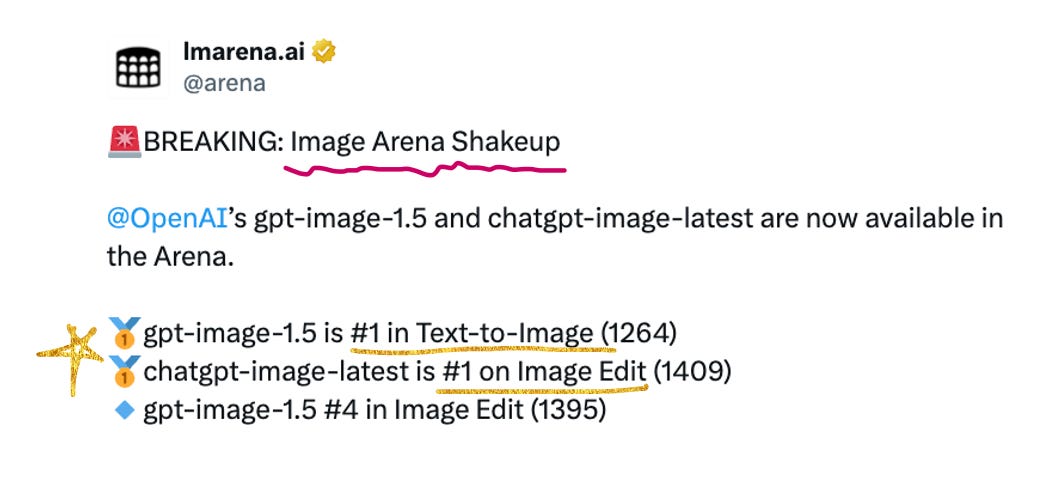

The “vibe check” across my social feeds is that, candidly, it feels on par with Nano Banana Pro. It’s clearly way better than OpenAI’s previous image model, but the difference vs. Gemini’s latest model isn’t big enough to feel like a true one-up. Still – very impressive, and while it’s very early to tell and scores are preliminary, LMArena has already issued a shakeup notice:

🎬 Video 🎥

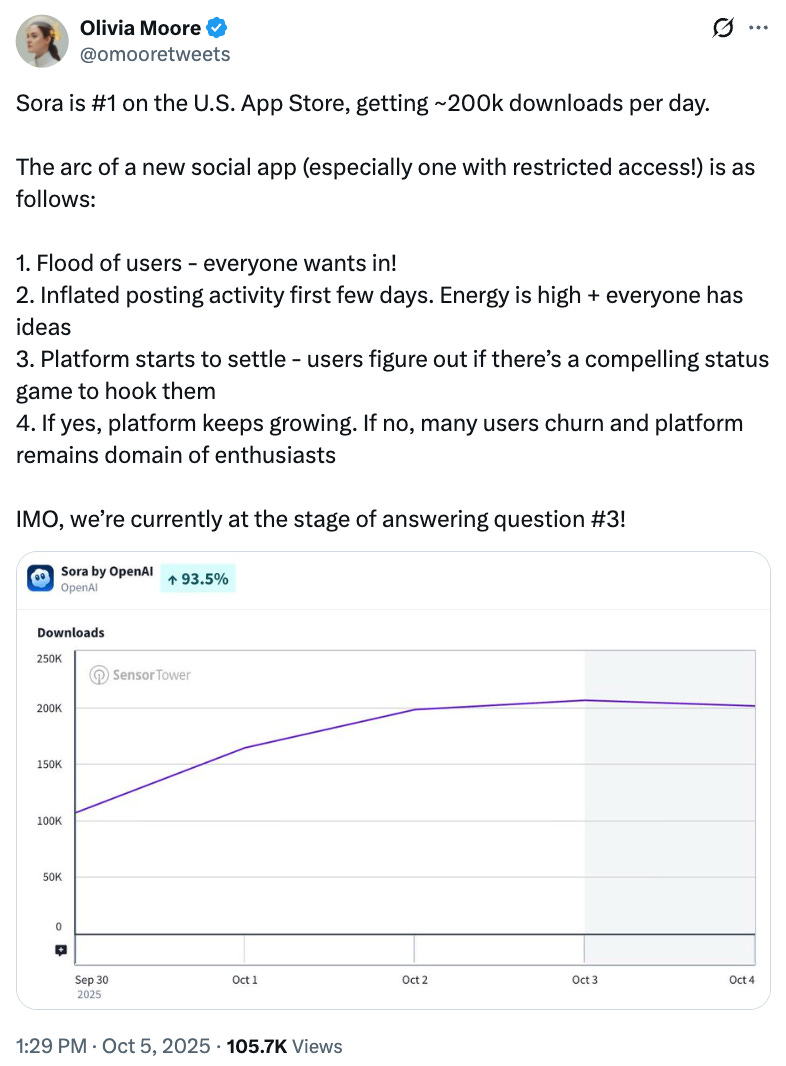

Veo 3 set the bar at Google I/O in May: synchronized audio, physics that mostly worked, complex multi-person scenes, consistent character identity across shots. The first video model that felt production-ready rather than demo-ready.

Then September brought the social apps. Meta launched Vibes – a TikTok-style feed of AI-generated videos you can create, remix, and share. (Meta partnered with Midjourney and Black Forest Labs, while Movie Gen stayed in the lab.) Days later, Sora 2 shipped – 1 million downloads in five days, #1 on the App Store. Sora’s edge wasn’t raw quality (Veo 3 arguably had better physics). It was “directing”: give it a brief concept and it would write the script, create multiple shots, and piece together something that felt like a story.

If you follow my blog, you know I was not very pleased. Not that they weren’t great quality video models – oh, believe me, they were. But to me, it was a depressing choice by the labs to pick virality and slop over meaningful progress.

Back then, I wrote:

“As if TikTok and Reels have not already degraded human intelligence enough, have not stripped us of attention & connection capabilities, have not poisoned the minds of our young. Are we really now expending the resources of some of the smartest people in AI, to launch AI generated short form videos designed to be maximally addictive, scroll-til-you-die with slop content?”

You’re welcome to read the full rant here, but I shall move us along.

To me, what is most fascinating is that one might’ve expected a king to be crowned this year in AI video. But that didn’t happen! Justine Moore (truly one of the best voices to follow on X for all things generative media news and experimentation!) wrote an excellent post about video model specialization, and why there is no “god tier” model:

- Veo 3: Best physics, complex movement, audio sync
- Sora 2: Best at “directing,” multi-shot scenes, humor
- Kling/Seedance Pro: Character consistency, multi-shot generation
- Hedra: Long-form talking characters, lipsync
- Wan: Open source with rich LoRA ecosystem

The takeaway is that creative tools rarely consolidate. Photographers use Lightroom for some things, Photoshop for others. Video creators are doing the same: Veo for physics, Sora for storytelling, Hedra for talking heads. Expect much more improvement and specialization to come in 2026!

🔊Voice and Music 🎵

ElevenLabs became the default for voice synthesis. Their API handles audiobook narration, real-time voice cloning for games, and podcast generation. When startups need voice, they use ElevenLabs. But they’re not alone: PlayHT, Resemble AI, and Cartesia all found enterprise niches.

Suno crossed from novelty to phenomenon. 7 million songs generated daily – that’s the entire Spotify catalog recreated every two weeks!! Their November Series C hit $250M at a $2.45B valuation. 1 million paying subscribers with 78% weekly retention. And the output started charting: “Breaking Rust” hit #9 on Billboard’s emerging artists chart and #1 on the Country Digital Song Sales chart. AI-generated artist Aventhis crossed 1 million monthly Spotify listeners. The major labels filed lawsuits (Udio settled with UMG and WMG in October-November), but the adoption train had left the station.
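A quick sanity check on the “Spotify every two weeks” line. The 7 million songs/day figure comes from above; the Spotify catalog size of roughly 100 million tracks is an assumption here (a commonly cited ballpark), so treat the output as rough arithmetic, not a measured comparison.

```python
SONGS_PER_DAY = 7_000_000              # Suno figure quoted above
ASSUMED_SPOTIFY_CATALOG = 100_000_000  # assumption: ~100M tracks, a rough ballpark

songs_in_two_weeks = SONGS_PER_DAY * 14
days_to_match_catalog = ASSUMED_SPOTIFY_CATALOG / SONGS_PER_DAY

print(f"{songs_in_two_weeks:,} songs in 14 days")             # 98,000,000 songs in 14 days
print(f"~{days_to_match_catalog:.1f} days to match catalog")  # ~14.3 days to match catalog
```

Under that assumption, two weeks of Suno output lands within a couple of percent of the catalog size, so the claim holds up as an order-of-magnitude comparison.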

🌍 World Models: A New Category 🕹️

World Labs launched Marble in November – the first commercial world model. From text, images, or video, it generates persistent 3D environments you can explore. $230M in funding. Freemium pricing ($20/month Standard, $35/month Pro). Compatible with Vision Pro and Quest 3. Applications span gaming, VFX, VR training, and robotics simulation.

They’re not alone in the space. Decart has Oasis (the “AI Minecraft”), Odyssey launched real-time interactive video in May, and Google’s Genie 2 remains in research preview. NVIDIA announced Cosmos World Foundation Models at CES. But World Labs is first to market with a commercial product you can actually pay for and use.

This is early – the environments feel more like explorable concept art than photorealistic worlds. But it’s a new category that didn’t really exist in 2024. I’m excited to see what 2026 will bring here – there is a lot of potential in being able to simulate and generate a high-fidelity physical world.

The so what: Generative media crossed from demos to deployment in 2025. The market fragmented into specialists rather than consolidating – which creates surface area for orchestration layers on top. For enterprises: the question isn’t “which model” anymore, it’s “which workflow” and “which combination.”

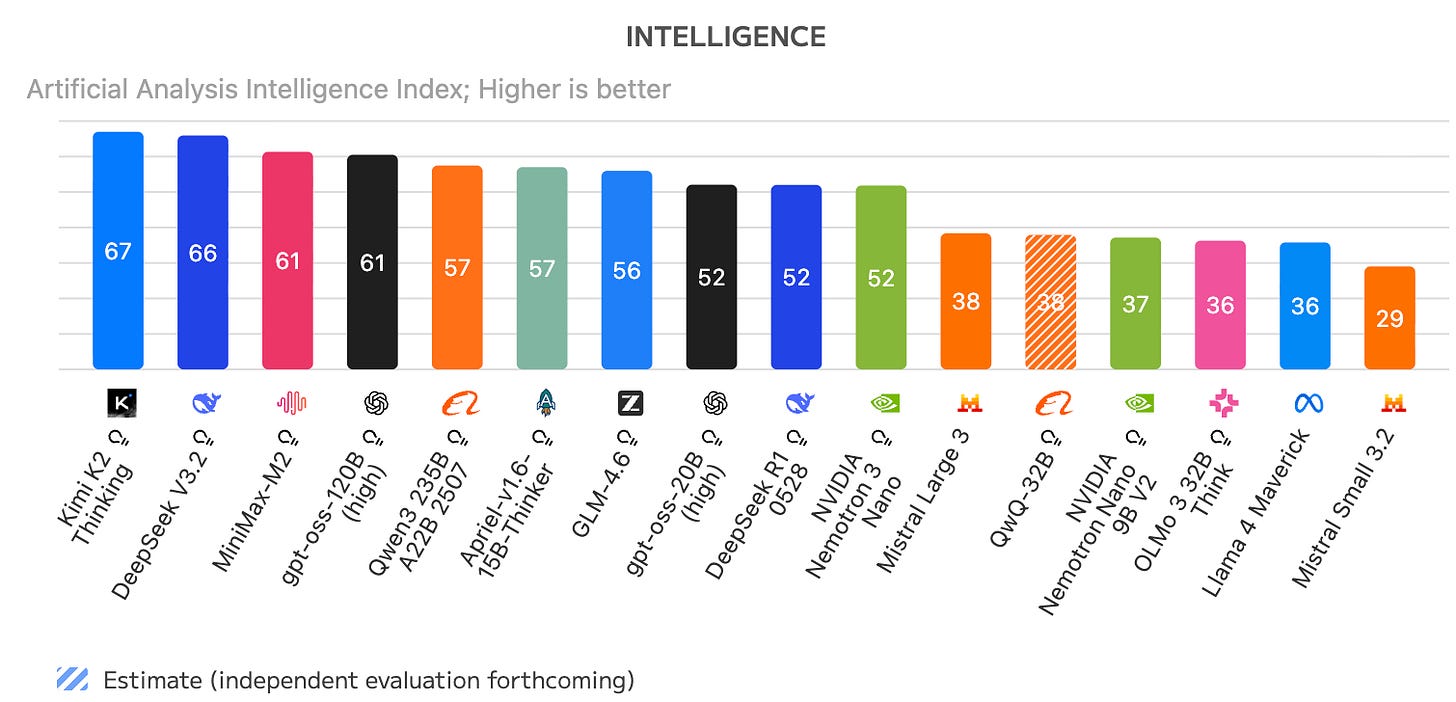

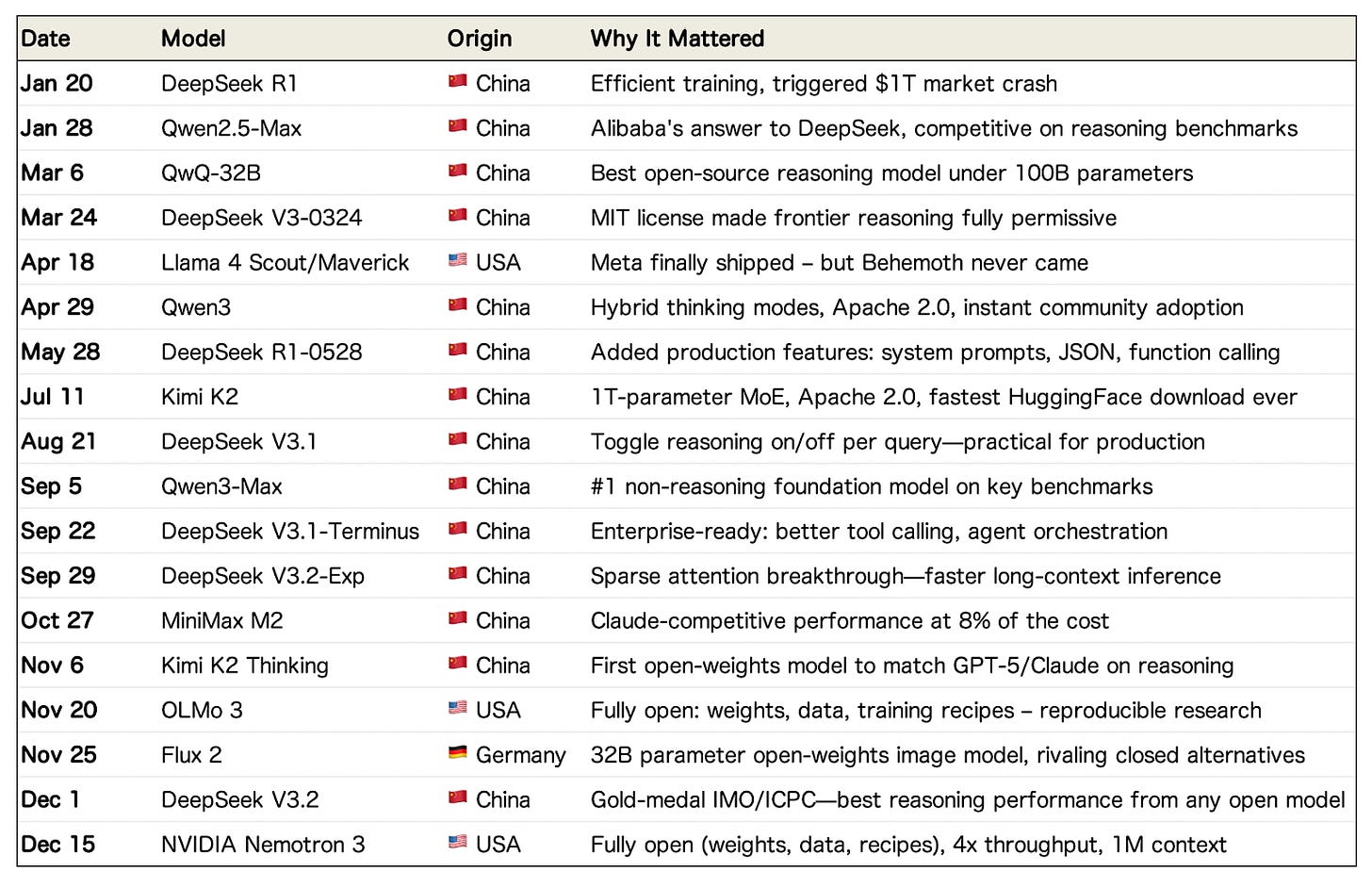

III. China Took the Open Source Crown 🇨🇳

The DeepSeek shock wasn’t a January story. It was the opening act. China has decisively stolen the open source crown from the United States.

Source: Artificial Analysis

The Release Calendar

Count the Chinese releases. Now count the American ones.

The DeepSeek Shock

Let’s be specific about what happened on January 20th.

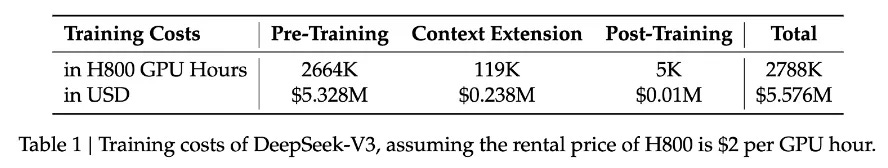

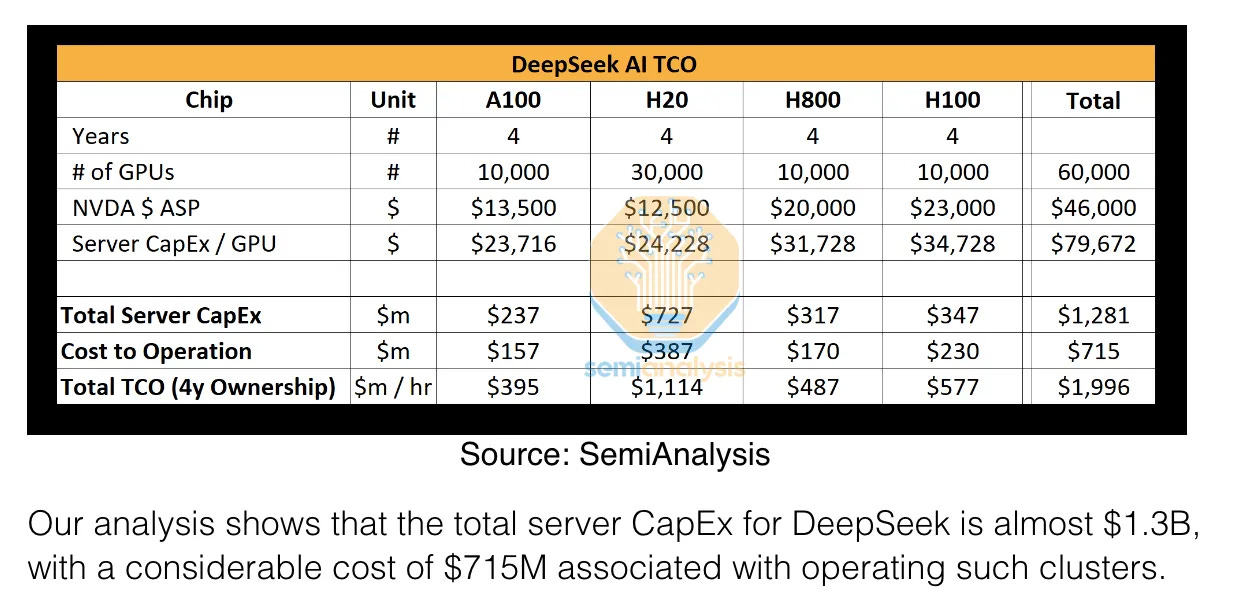

DeepSeek R1 launched with training costs reportedly under $6 million:

Okay, the reality is the $6M number is somewhat misleading. Nathan Lambert wrote a thoughtful post on how to think about the true cost of training DeepSeek. SemiAnalysis estimated way higher fully baked costs:

Even so, the markets reacted aggressively. GPT-4’s training was estimated at $100 million+. That’s a 15-20x cost difference, for a model that was very competitive on benchmarks.
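The headline ratio is simple division of the two numbers quoted above. As the Nathan Lambert and SemiAnalysis caveats suggest, it sets a headline training figure against a rough estimate, so it illustrates why the market reacted rather than giving a like-for-like cost comparison.

```python
# Both inputs are the headline figures quoted above, not audited costs.
GPT4_TRAINING_USD = 100e6        # "estimated at $100 million+" (a floor)
DEEPSEEK_R1_TRAINING_USD = 6e6   # "reportedly under $6 million" (a ceiling)

ratio = GPT4_TRAINING_USD / DEEPSEEK_R1_TRAINING_USD
print(f"~{ratio:.1f}x")  # ~16.7x
```

Because one input is a floor and the other a ceiling, ~16.7x is the smallest ratio the headline numbers allow; fully loaded cost estimates are what pull the real gap down.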

The technical innovations: Multi-head Latent Attention reducing KV cache by 93%, DeepSeekMoE with 671B total parameters but only 37B active per token, and FP8 mixed precision training. Other labs rushed to replicate these techniques.
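To make “671B total parameters but only 37B active per token” concrete, here is a toy sketch of generic softmax top-k expert routing in plain Python. It illustrates the general mixture-of-experts idea under simplifying assumptions (scalar “experts”, made-up router logits); it is not DeepSeekMoE’s actual routing, which adds refinements such as shared experts and fine-grained expert segmentation.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def route_token(router_logits, k):
    """Pick the top-k experts for one token and renormalize their gate weights."""
    probs = softmax(router_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    kept = sum(probs[i] for i in top)
    return {i: probs[i] / kept for i in top}


def moe_forward(x, experts, router_logits, k=2):
    """Gate-weighted sum over the selected experts only; the others never run."""
    gates = route_token(router_logits, k)
    return sum(w * experts[i](x) for i, w in gates.items()), gates


# Toy setup (hypothetical): 8 "experts" that just scale their input.
experts = [lambda x, s=s: x * s for s in range(1, 9)]
logits = [0.1, 2.0, -1.0, 0.5, 3.0, 0.0, -0.5, 1.0]
y, gates = moe_forward(1.0, experts, logits, k=2)
print(sorted(gates))  # [1, 4]: only 2 of 8 experts are evaluated

# Same idea at DeepSeek's quoted scale: share of parameters active per token.
active_fraction = 37 / 671
print(f"{active_fraction:.1%}")  # 5.5%
```

The design point is that total capacity (all experts) and per-token compute (only the selected experts) are decoupled, which is how a 671B-parameter model can run with roughly 5.5% of its weights active per token.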

The market response was immediate:

- $1 trillion wiped from U.S. tech market cap in a single day
- NVIDIA lost $593 billion – the largest single-day market cap loss in history

The question everyone asked: if they can do it for $6 million, what exactly are we spending billions on?

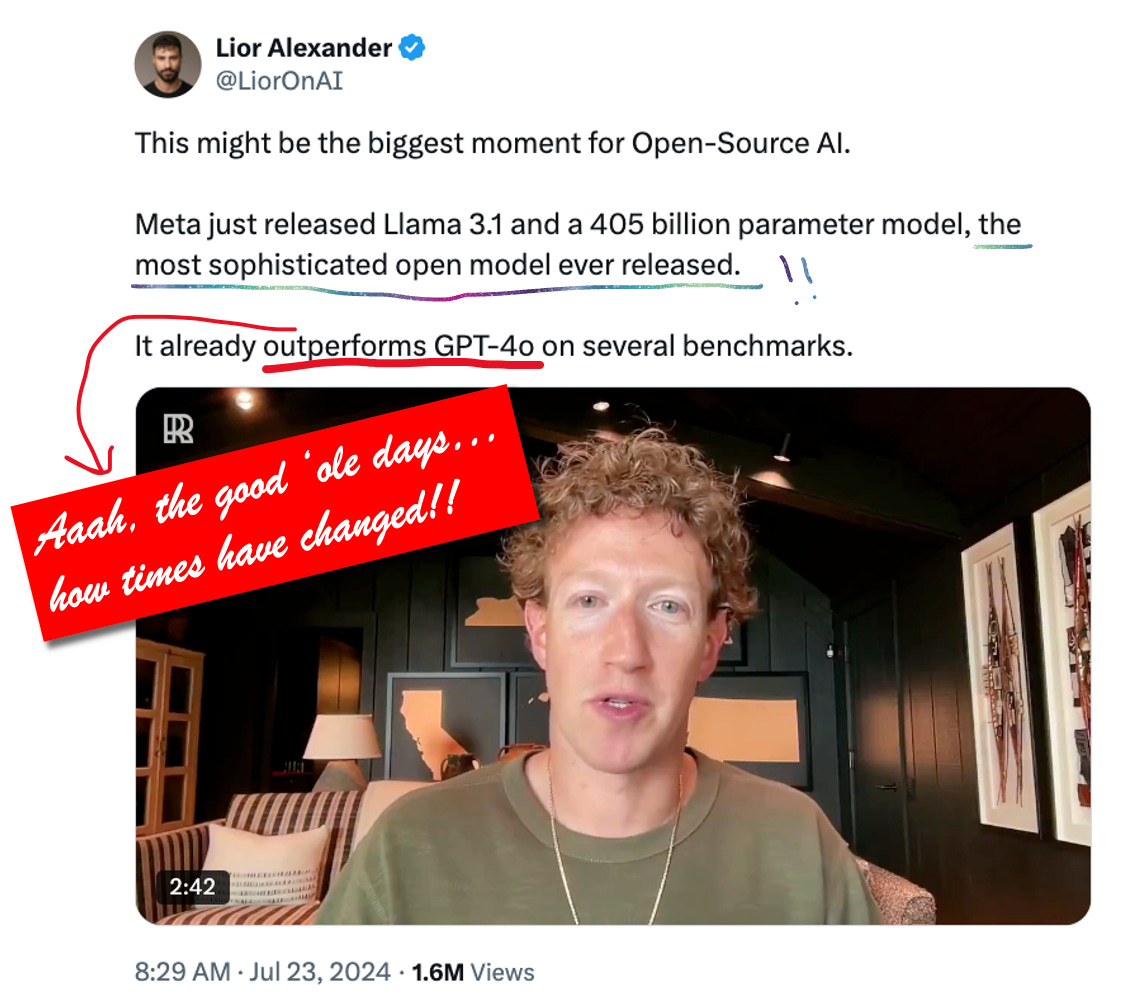

The 🦙 Llama Problem (and Avocado 🥑 Pivot)

Meta was once the shining superstar of open source, and a source of great American pride. In 2024, Llama 3.1 outperformed even GPT-4o on several benchmarks.

How the tides have turned. With the rising crescendo of Chinese open source launches throughout 2025, Meta’s Llama 4 launch in April was supposed to be the American answer. Unfortunately, it wasn’t.

Scout and Maverick shipped and were… fine? Solid. Respectable, even! But Behemoth – the massive model that was supposed to compete at the frontier – never materialized. Multiple reports cited internal struggles: departures, strategic disagreements, execution challenges.

Then came the pivot. By fall, reports emerged that Meta was developing “Avocado” – a closed-source model targeting a Q1 2026 release. After years of positioning itself as the open source AI leader, Meta is hedging. The rumored details: as a reminder, Alexandr Wang (former Scale AI CEO) now serves as Chief AI Officer leading the TBD Lab effort. They’re training using Gemma, gpt-oss, and Qwen. Chris Cox no longer oversees AI after the Llama 4 stumble. Internal friction mounted when DeepSeek R1 was found using Llama architecture, raising security concerns about open-sourcing frontier capabilities.

Yann LeCun, Meta’s chief AI scientist and open source’s most vocal champion, is now departing Meta. The $70-72B capex in 2025 needs returns that Meta’s open source strategy wasn’t delivering.

Meanwhile, Alibaba’s Qwen team shipped monthly updates like clockwork. When the Qwen app launched in China in November, it hit 10 million downloads in its first week. Everyone is ahead of Meta in open source: Kimi models, DeepSeek, OpenAI’s own gpt-oss, Mistral… the list goes on. Meta, once the shining beacon of open source in the USA, has given up its crown.

🌏 The Geopolitical Reality

We can’t talk about China winning open source without discussing some politics. Here’s an (abridged) timeline of major events:

- April 16: The Trump administration banned H20 chip exports to China. NVIDIA took a $5.5 billion charge. The H20 was already a downgraded chip specifically designed for China exports – even that was now off limits.
- May 13: Then the administration rescinded Biden’s AI Diffusion Rule – regulatory whiplash that left companies uncertain which rules applied.
- December 8: In a reversal, Trump approved H200 exports to China with a 25% surcharge – but only 18-month-old chips to “approved customers.” China’s response was lukewarm. Huawei’s domestic chips are preferred for sovereignty reasons, even if technically inferior. The signal was clear: China wants semiconductor independence, not American permission.

Meanwhile, over $1B in B200 chips were smuggled into China via Malaysia and Thailand between May and July. Singapore arrested three people for chip trafficking. China is investing $70B in domestic chip manufacturing. The export controls are leaking, and both sides know it.

The American response? NVIDIA stepped up where Meta stumbled. In December, Nemotron 3 launched – a family of open models for agentic AI with full training data and recipes. Jensen Huang has been warning DC about China’s open source dominance all year; now he’s doing something about it.

The so what: The assumption that U.S. labs would dominate open source AI proved wrong. Chinese labs shipped more competitive open models in 2025, with dramatically lower training costs. Meta’s pivot to closed source signals the “open vs. closed” debate has merged with “American vs. Chinese” in ways that will shape policy through 2026 and beyond.

IV. Agents Fought for the Enterprise 🤖

The agent wars in 2025 weren’t about demos anymore. We’re past the “look, it can book a flight!” phase (and thank goodness for that – dunno why everyone finds it so hard to open the United app and press buy! 😂). This year was about who owns the enterprise workflow – and who sets the standards that everyone else builds on.

The Agent Startup Explosion

It wasn’t just the foundation model companies. Specialized agent startups raised billions to own vertical workflows:

Accounting: Rillet, Campfire, Numeric

Autonomous SOC: Dropzone AI (*), Prophet Security, 7AI

Customer support: Fin (Intercom), Sierra, Decagon

Market research: Strella (*), Outset, Listen Labs

Sales: Clay, 11x, Artisan AI

(*) = Decibel, my fund, is an investor

The pattern: pick a workflow with structured outputs and human-in-the-loop review, build an agent that handles the boring parts, charge enterprise pricing. The $4B coding market proved the agentic model works. These verticals are next.
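The “structured outputs plus human-in-the-loop review” pattern can be sketched as a simple triage step: outputs that match an expected schema and clear a confidence bar go straight through, and everything else lands in a human review queue. All names, the 0.9 threshold, and the accounting-style fields below are hypothetical; real products will differ in how they score and validate outputs.

```python
from dataclasses import dataclass


@dataclass
class AgentResult:
    """One agent output awaiting approval (hypothetical shape)."""
    task_id: str
    output: dict       # structured output, e.g. a proposed ledger entry
    confidence: float  # score in [0, 1]; how it is produced is product-specific


def triage(results, required_fields, threshold=0.9):
    """Auto-approve well-formed, high-confidence outputs; queue the rest for a human."""
    auto_approved, needs_review = [], []
    for r in results:
        well_formed = required_fields.issubset(r.output)
        if well_formed and r.confidence >= threshold:
            auto_approved.append(r)
        else:
            needs_review.append(r)
    return auto_approved, needs_review


results = [
    AgentResult("t1", {"account": "4000", "amount": 120.0}, 0.97),
    AgentResult("t2", {"account": "4000"}, 0.99),                  # missing a field
    AgentResult("t3", {"account": "5000", "amount": 75.5}, 0.62),  # low confidence
]
auto, review = triage(results, {"account", "amount"})
print([r.task_id for r in auto], [r.task_id for r in review])  # ['t1'] ['t2', 't3']
```

Checking structure before confidence is the point of choosing workflows with structured outputs: a malformed result is caught mechanically, no matter how confident the agent claims to be.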

The Market Share Flip

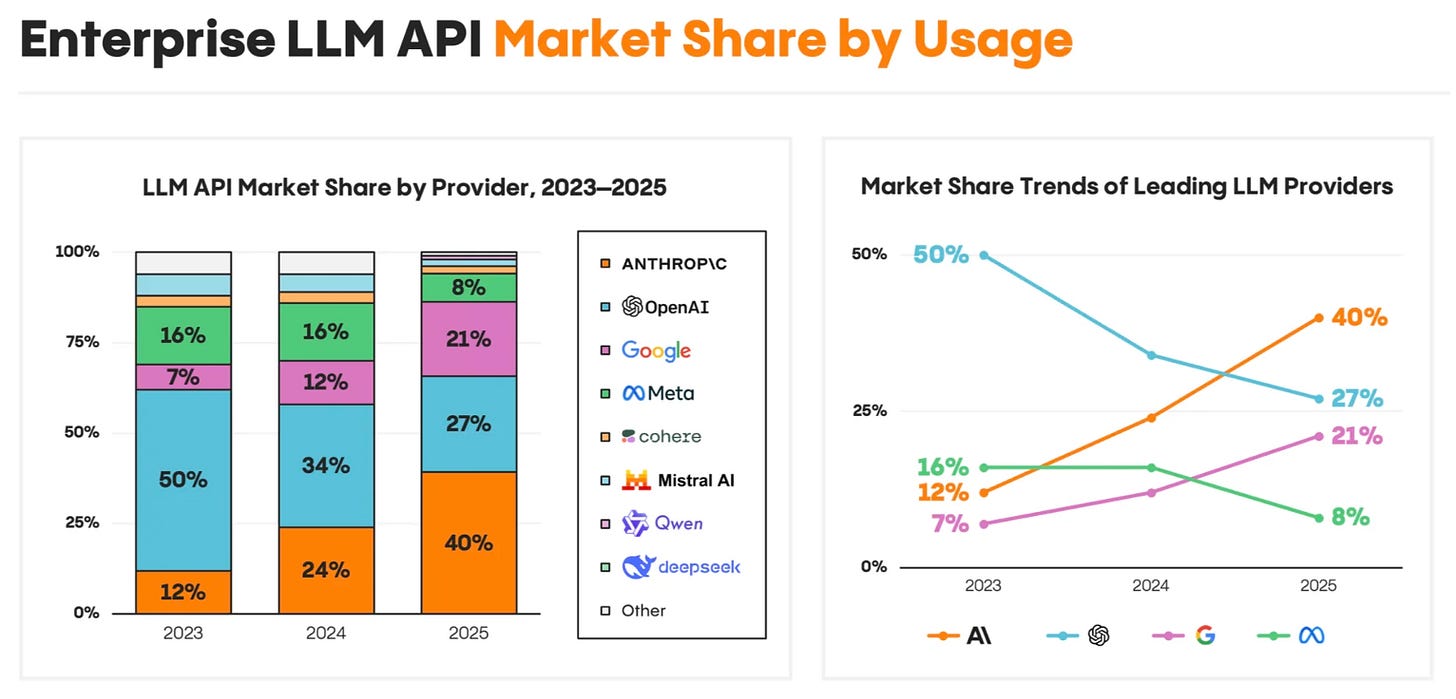

Of course, no mention of agents can ignore the companies building the intelligence behind it all! In foundation model land, the flip from OpenAI to Anthropic as the enterprise leader happened quite fast:

Data source: 2025: The State of Generative AI in the Enterprise

How did Anthropic do it? Much of the success, of course, is because Claude became the default for serious coding work, and that has proved to be the killer AI use case thus far. But there is more to it – customers care about things that work in production. Anthropic optimized for reliable, tool-using systems, while OpenAI optimized for consumer engagement. “Reliable” may sound boring, but sometimes boring really does the trick.

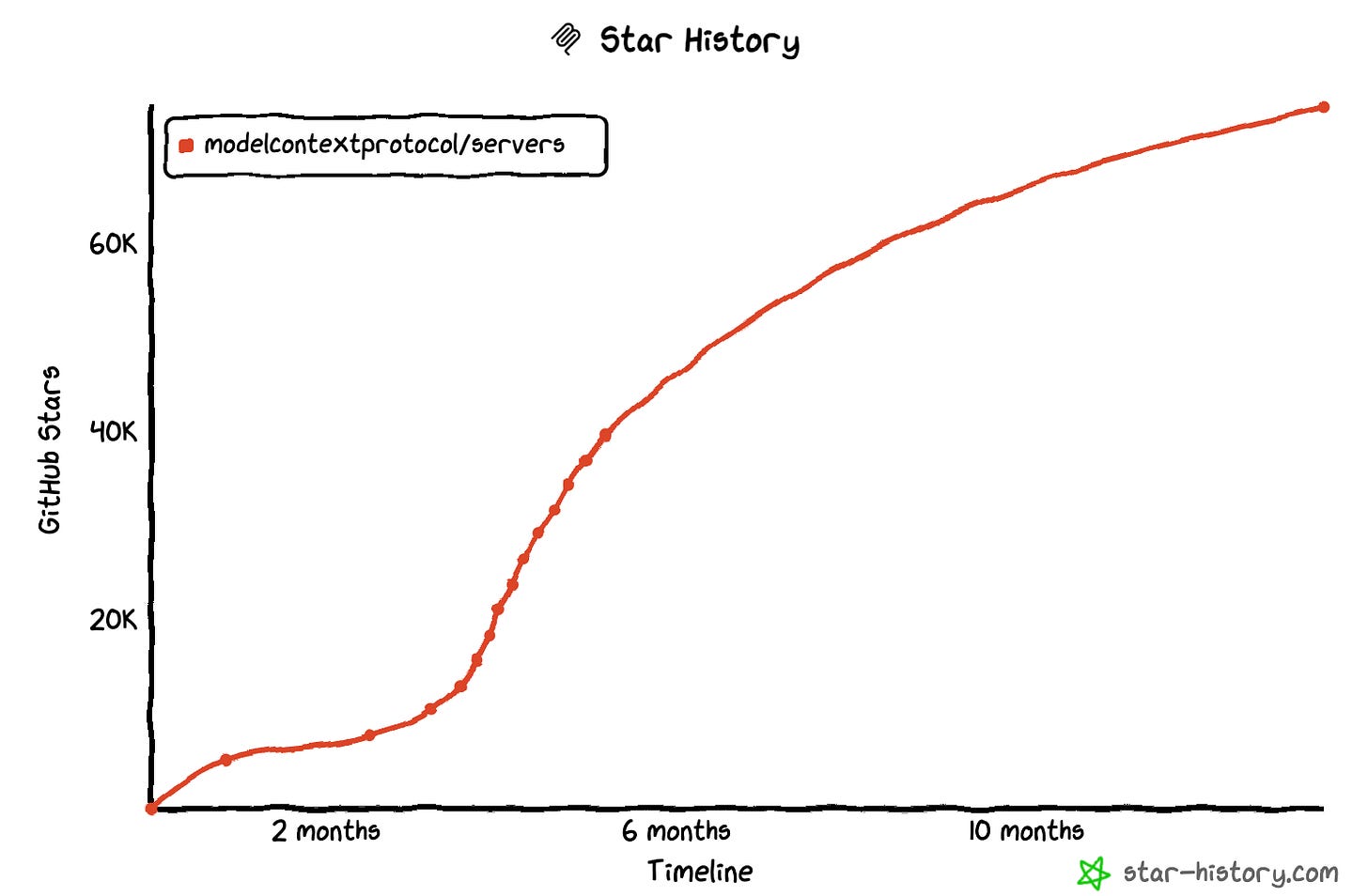

MCP Became the Standard

The Model Context Protocol started as an Anthropic side project in November 2024 – a way to connect AI models to external tools. By December 2025:

- 97 million monthly SDK downloads across Python and TypeScript
- 10,000+ active MCP servers covering everything from dev tools to Fortune 500 deployments
- Adopted by ChatGPT, Gemini, Microsoft Copilot, VS Code, Cursor
- Donated to the Linux Foundation as part of the new Agentic AI Foundation

The AAIF launch brought together Anthropic, OpenAI, and Block as co-founders – with Google, Microsoft, AWS, and Cloudflare as supporters. When rivals standardize together, the market has matured enough that interoperability matters more than lock-in.
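Under the hood, MCP messages are JSON-RPC 2.0, and servers expose tools through methods like `tools/list` and `tools/call`. The sketch below is a toy in-process dispatcher that mimics those two message shapes using only the standard library. It is not the official SDK, it skips the initialization handshake and the transport layer, and the `add` tool is hypothetical.

```python
import json

# Hypothetical tool registry: name -> (description, JSON Schema for arguments, handler).
TOOLS = {
    "add": (
        "Add two integers",
        {
            "type": "object",
            "properties": {"a": {"type": "integer"}, "b": {"type": "integer"}},
            "required": ["a", "b"],
        },
        lambda args: args["a"] + args["b"],
    ),
}


def rpc_result(req_id, result):
    return json.dumps({"jsonrpc": "2.0", "id": req_id, "result": result})


def rpc_error(req_id, code, message):
    return json.dumps({"jsonrpc": "2.0", "id": req_id,
                       "error": {"code": code, "message": message}})


def handle(raw: str) -> str:
    """Answer one JSON-RPC 2.0 request shaped like MCP's tools/list or tools/call."""
    req = json.loads(raw)
    req_id, method = req.get("id"), req.get("method")
    params = req.get("params", {})
    if method == "tools/list":
        tools = [{"name": name, "description": desc, "inputSchema": schema}
                 for name, (desc, schema, _) in TOOLS.items()]
        return rpc_result(req_id, {"tools": tools})
    if method == "tools/call":
        entry = TOOLS.get(params.get("name"))
        if entry is None:
            return rpc_error(req_id, -32602, "Unknown tool")  # JSON-RPC "invalid params"
        value = entry[2](params.get("arguments", {}))
        return rpc_result(req_id, {"content": [{"type": "text", "text": str(value)}],
                                   "isError": False})
    return rpc_error(req_id, -32601, "Method not found")


request = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
           "params": {"name": "add", "arguments": {"a": 2, "b": 3}}}
print(handle(json.dumps(request)))
```

The takeaway for interoperability: any client that can emit these JSON shapes can discover and call any compliant server’s tools, which is why a shared standard beat per-vendor plugin formats.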

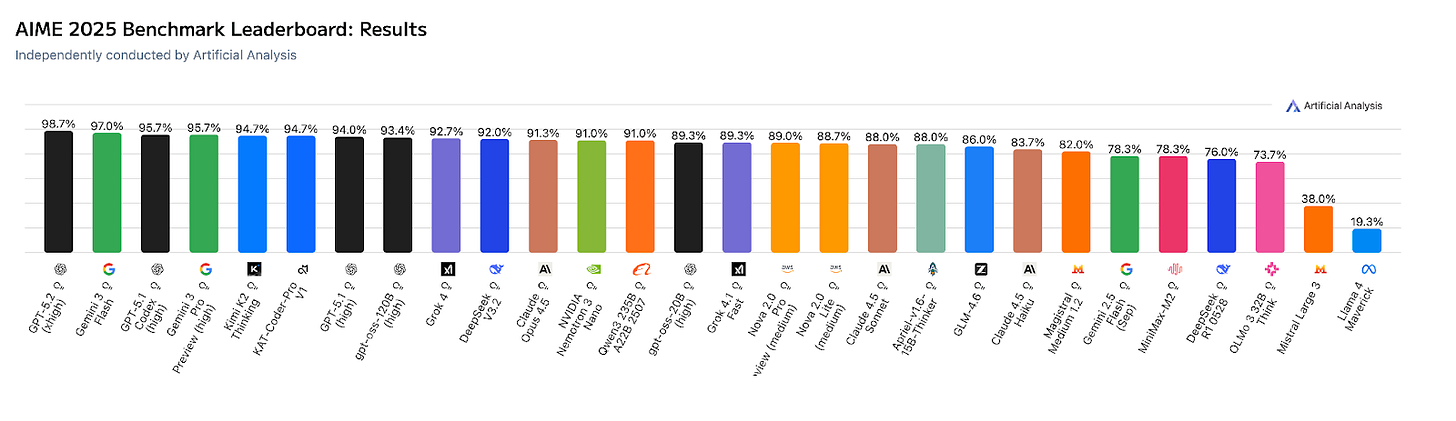

The Benchmark Battles

The reasoning model race delivered real capability jumps:

AIME 2025 (high school math competition): GPT-5.2 (x-high) hit 98.7%, Gemini 3 Flash 97%, Claude Opus 4.5 91.3%

IMO 2025 (International Mathematical Olympiad): Both OpenAI and Google DeepMind achieved gold medal-level scores (35/42 points, 5 of 6 problems). DeepSeek later matched them. For context: in 2022, researchers gave only an 8-16% probability that AI would achieve IMO gold by 2025.

SWE-bench (coding): Claude Opus 4.5 leads at 80.9%, followed by GPT-5.2 Thinking (80.0%), and Gemini 3 (76.2%).

LMArena Elo: Gemini 3 broke 1500 for the first time (1501), with Claude Opus 4.5 close behind (1492).

What’s Still Missing

For all the progress, agents still hit hard limits:

- Memory and context rot: As agent conversations extend, models lose track of earlier context – a phenomenon researchers call “context rot.” Performance degrades well before hitting theoretical context limits. Current solutions (RAG, summarization, external memory stores) help but don’t solve the fundamental problem. Recent research suggests we need memory as a “first-class primitive” rather than an afterthought.
- Computer use reliability: Still a work in progress. Anthropic’s Claude computer use, OpenAI’s Computer Using Agent, and Google’s browsing agents all struggle with multi-step workflows that require authentication, error recovery, and state management. This is a key blocker for agentic last-mile reliability for many use cases, and I expect labs will double down on computer use in 2026.
- Multi-agent coordination: When multiple agents collaborate, they face context pollution (sharing too much irrelevant information), handoff failures, and cascading errors. The AAIF standards work is partly about solving this, but production multi-agent systems remain brittle.
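To make the “summarization” mitigation from the memory item concrete, here is a minimal sketch of rolling context compaction, assuming a chat agent that keeps its newest turns verbatim and folds older ones into a running summary. `naive_summarize` is a hypothetical stand-in for an LLM summarization call, and the `CompactingMemory` name and `max_recent` threshold are illustrative. This only bounds context size; it does not cure context rot, because any detail the summarizer drops is gone for good.

```python
from dataclasses import dataclass, field
from typing import Callable


def naive_summarize(turns: list[str]) -> str:
    """Hypothetical stand-in for an LLM summary call: keep the first 40 chars of each turn."""
    return " | ".join(turn[:40] for turn in turns)


@dataclass
class CompactingMemory:
    """Keep the newest turns verbatim; fold older turns into a running summary.

    Assumes max_recent >= 1.
    """

    max_recent: int = 4
    summarize: Callable[[list[str]], str] = naive_summarize
    summary: str = ""
    recent: list[str] = field(default_factory=list)

    def add(self, turn: str) -> None:
        self.recent.append(turn)
        if len(self.recent) > self.max_recent:
            # Move the oldest turns out of the verbatim window...
            overflow = self.recent[: -self.max_recent]
            self.recent = self.recent[-self.max_recent :]
            # ...and re-summarize them together with the previous summary.
            prior = [self.summary] if self.summary else []
            self.summary = self.summarize(prior + overflow)

    def context(self) -> list[str]:
        """The message list that would be sent to the model on the next call."""
        head = [f"[summary] {self.summary}"] if self.summary else []
        return head + self.recent


if __name__ == "__main__":
    memory = CompactingMemory(max_recent=2)
    for i in range(5):
        memory.add(f"turn {i}")
    print(memory.context())
```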

The so what: The agent market has traction in narrow domains with verifiable outputs – coding, customer support, document processing. The path to general-purpose agents runs through solving memory, reliability, and coordination problems that are still active research areas. There are many exciting agent startups working on specialized domains ranging from security to market research, though, and I expect 2026 will prove one or more of those markets to have a breakout “killer app” moment like coding had in 2025.

V. Science Had Its Moment 🔬

This section is short, but it belongs in the retrospective as it’s my personal favorite! I believe in the dream of AI as a true change agent for humanity, and that dream starts and ends with scientific progress.

- Anthropic AI for Science Program (May): Anthropic launched a program offering up to $20,000 in API credits to researchers working on high-impact scientific projects, with a focus on biology and life sciences.
- AlphaEvolve (May): Google announced AlphaEvolve – a Gemini-powered agent that designs novel algorithms. It discovered improvements to matrix multiplication and computer chip layouts that humans hadn’t found.
- AlphaGenome (June): DeepMind released AlphaGenome, an AI model that predicts how DNA variants impact gene regulation. It processes up to 1 million DNA base-pairs at single-letter resolution – a breakthrough for understanding non-coding regions (98% of the genome) linked to disease. Available via API for non-commercial research.
- Chai-2 (June): Chai Discovery released Chai-2, the first AI system to reliably design antibodies from scratch. With a 16-20% hit rate (vs. 0.1% for traditional computational methods), Chai-2 compresses months of drug discovery into two weeks. Former Pfizer CSO Mikael Dolsten called it a potential game-changer for de novo medicine design.
- Math Olympiad Gold (July): Both OpenAI and Google DeepMind achieved gold medal scores (35 out of 42 points) at the International Mathematical Olympiad – a first for AI. OpenAI’s experimental reasoning LLM and DeepMind’s Gemini Deep Think each solved 5 of 6 problems under competition conditions: 4.5-hour time limits, no tools, natural language proofs. The breakthrough came just a year after DeepMind’s AlphaProof earned silver with 28 points using specialized formal languages. This time, both systems worked end-to-end in natural language.
- OpenAI for Science (September): Kevin Weil announced OpenAI for Science, a dedicated initiative to build AI as “the next great scientific instrument.” The team works with researchers across math, physics, biology, and computer science – GPT-5.2’s December release showed early results, including helping resolve an open problem in statistical learning theory.
- Claude for Life Sciences (October): Anthropic launched its first formal entry into life sciences – connecting Claude with lab tools like Benchling, PubMed, 10x Genomics, and Synapse.org. The goal: support researchers from literature review through regulatory submission, compressing days of analysis into minutes.
- BoltzGen (October): BoltzGen, an open-source model that generates novel protein binders ready for drug discovery pipelines, was released – going beyond structure prediction to actual molecule design. It ships under the MIT license for unrestricted commercial and academic use.
- AlphaFold Usage (November): Five years after AlphaFold 2 solved protein structure prediction, over 3 million researchers in 190 countries use it.
- Genesis Mission (November): The Trump administration launched Genesis – a national initiative to build an AI platform using federal scientific datasets. Managed by the Department of Energy, it aims to train scientific foundation models and create AI agents for hypothesis testing and automated research workflows. The executive order explicitly compared the ambition to the Manhattan Project.

The so what: Every major AI lab now has a dedicated science strategy. The question isn’t whether AI will accelerate research – it’s which domains will see breakthroughs first, and whether the traditional academic pipeline can adapt to AI-assisted discovery moving at startup speed. This is the area in 2026 I am most excited to watch! 🧬🧪

What Actually Mattered

Still with me?! Thanks for hanging on! Deep breaths. That was a lot of info. After all, it was a BUSY year! Stepping back from the noise, what actually defined 2025?

1. Great Products Beat Distribution 🏆

This might be the biggest surprise. Microsoft has Copilot in every Office app. Google has Gemini in Search, Gmail, Android. OpenAI is the AI brand everyone knows.

And yet: Anthropic went from 12% to 32% enterprise share while OpenAI dropped from 50% to 25%. Cursor – a startup code editor – reached a $29B valuation while GitHub Copilot growth stalled. The companies that made the best products for specific workflows won, even against massive distribution advantages.

This wasn’t supposed to happen. Distribution is usually a huge moat. But in AI, the difference between “works okay” and “works great” is so large that users switch despite friction. Developers abandoned VS Code + Copilot for Cursor + Claude because the productivity difference was obvious.

2. Efficiency Mattered More Than Scale ⚡

DeepSeek’s $6 million training run didn’t just embarrass larger labs. It proved architectural innovation could substitute for brute-force compute. Moonshot’s Kimi K2 Thinking trained for $4.6 million. MiniMax M2 delivers Claude-competitive performance at 8% of the cost.

The “just scale it” era gave way to something smarter: mixture-of-experts, inference-time compute optimization, better data curation, and training efficiency improvements.

The nuance: $6M gets you SOTA for open-source models. Frontier closed-source models still cost hundreds of millions. But the gap is narrowing, and the cost to be “competitive enough” dropped dramatically.
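Mixture-of-experts is one of the efficiency levers named above: a learned gate routes each token to only a few expert sub-networks, so total parameters can grow without a proportional rise in compute per token. Below is a toy, pure-Python sketch of top-k routing. It assumes a linear gate and experts that preserve the input dimension, and it omits batching and the load balancing that production MoE models need; `moe_forward` and the toy experts are hypothetical names for illustration.

```python
import math
from typing import Callable, Sequence

Vector = list[float]


def softmax(xs: Sequence[float]) -> list[float]:
    """Numerically stable softmax."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    x: Vector,
    gate_weights: Sequence[Vector],
    experts: Sequence[Callable[[Vector], Vector]],
    k: int = 2,
) -> Vector:
    """Route x to its top-k experts by gate score and return the weighted mix of their outputs."""
    # Linear gate: one score (logit) per expert.
    logits = [sum(w * xi for w, xi in zip(row, x)) for row in gate_weights]
    top = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    # Softmax over the selected experts only, so their weights sum to 1.
    weights = softmax([logits[i] for i in top])
    out = [0.0] * len(x)
    for weight, i in zip(weights, top):
        y = experts[i](x)  # only k experts run; the rest are skipped entirely
        out = [o + weight * yj for o, yj in zip(out, y)]
    return out


if __name__ == "__main__":
    # Four toy "experts" that just scale their input.
    experts = [lambda v, s=s: [s * t for t in v] for s in (1.0, 2.0, 3.0, 4.0)]
    gates = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
    print(moe_forward([1.0, 0.0], gates, experts, k=2))
```

With four experts and `k=2`, each input pays for two expert evaluations while the layer holds four experts' worth of parameters; that ratio is the efficiency argument in miniature.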

3. Standards Emerged 🔗

MCP going to the Linux Foundation. AGENTS.md hitting 60,000+ projects. Google’s A2A protocol for agent-to-agent communication.

When OpenAI, Anthropic, and Block co-found a neutral foundation together, you know the market has decided interoperability matters. The “walled garden” phase is ending. The “platform” phase is beginning.

4. Multimodal Became Baseline 🎬

Text-only models already feel dated. Every major model handles images natively. Video understanding is landing. Voice synthesis is commoditized.

The expectation shifted from “can this model do X” to “why can’t this model do everything?” That’s a high bar, but it’s the new bar.

5. OpenAI Rewrote Its Own Rules 📜

October’s restructuring split OpenAI in two: the nonprofit OpenAI Foundation now has legal control over a public benefit corporation called OpenAI Group PBC, which is free to raise funding and acquire companies without restriction. The Foundation holds 26% equity and appoints the board; Microsoft holds 27% (~$135B); the remaining 47% goes to employees and investors.

The Microsoft renegotiation ended cloud exclusivity. Elon Musk tried to block it and offered $97.4B to acquire the company outright. Now reports are surfacing that OpenAI is trying to raise at an $830B valuation.

The mission hasn’t changed. Everything else (structure-wise) has.

Looking Forward: 2026 🔮

Here’s what I’m watching:

“}]] Read More in AI Supremacy