The Altman Principle

“The most reliably predictable trend of the next 100 years: every year, humanity will use significantly more computing power than the previous year.” – Francois Chollet, December 22nd, 2024.

Rising Energy Costs of the AI Infrastructure Race will become a Major Problem

Recently, the AI Sustainability lead at Salesforce got me thinking about the massive dollar cost of running OpenAI's o3, and what that means for its environmental cost.

- You sure this is sustainable AI bro?

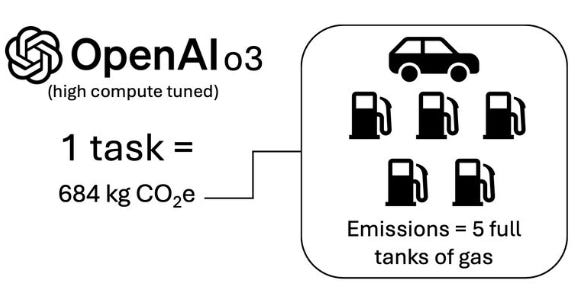

According to Boris Gamazaychikov, the AI Sustainability lead at Salesforce, the cost data in OpenAI's o3 benchmark announcement can be used to estimate the energy and carbon footprint of a single task on the ARC-AGI benchmark (for the high-compute version of o3). By his estimate, each task consumed approximately 1,785 kWh of energy, about the same amount of electricity an average U.S. household uses in two months.

Boris said this translates to 684 kg CO₂e, based on last month’s U.S. grid emissions factor. To put that into perspective, that’s equivalent to the carbon emissions from more than 5 full tanks of gas!
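The per-task figures above can be sanity-checked with a few lines of Python. This is a back-of-envelope sketch, not Boris's method: the household consumption (about 10,800 kWh per year), the gasoline factor (about 8.89 kg of CO₂ per gallon burned), and the 15-gallon tank size are assumed round numbers, not values from the source.

```python
# Back-of-envelope check of the per-task o3 figures quoted above.
# ASSUMED values (not from the source): household use, gasoline CO2 factor,
# and tank size are round illustrative numbers.

TASK_KWH = 1_785        # energy per ARC-AGI task (from the estimate above)
TASK_KG_CO2E = 684      # emissions per task (from the estimate above)

HOUSEHOLD_KWH_PER_YEAR = 10_800   # assumed average U.S. household use
KG_CO2_PER_GALLON = 8.89          # assumed CO2 from burning one gallon of gasoline
GALLONS_PER_TANK = 15             # assumed size of a "full tank"


def implied_grid_factor(kwh: float, kg_co2e: float) -> float:
    """Grid emissions factor (kg CO2e per kWh) implied by the two figures."""
    return kg_co2e / kwh


def household_months(kwh: float) -> float:
    """How many months of average household electricity use `kwh` represents."""
    return kwh / (HOUSEHOLD_KWH_PER_YEAR / 12)


def gas_tanks(kg_co2e: float) -> float:
    """Number of full gasoline tanks that emit the same amount of CO2."""
    return kg_co2e / KG_CO2_PER_GALLON / GALLONS_PER_TANK


if __name__ == "__main__":
    print(f"grid factor: {implied_grid_factor(TASK_KWH, TASK_KG_CO2E):.3f} kg/kWh")
    print(f"household months: {household_months(TASK_KWH):.1f}")
    print(f"gas tanks: {gas_tanks(TASK_KG_CO2E):.1f}")
```

Under these assumptions the numbers work out to an implied grid factor of roughly 0.38 kg CO₂e per kWh, about two months of household use, and a little over five tanks of gas, in line with the comparisons above.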

I’m not a climate scientist or an energy investor, but the energy consumption associated with the o3 model has broader implications for energy security and environmental sustainability, among other areas.

Less than five days ago at the time of writing, the Sam Altman-backed startup Oklo landed a massive data center power deal. Under the new agreement, Oklo would build enough small modular reactors (SMRs) by 2044 to generate 12 gigawatts of electricity for Switch's data centers, which today serve a wide range of companies, including Google, Nvidia, Tesla, PayPal, JP Morgan Chase, and others. It's no wonder billionaires have orchestrated efforts in recent years to dismantle ESG concerns, green technology, and zero-carbon pledges. Cloud companies are the same corporations building more and bigger data centers, and the same companies that benefit the most from ever larger and more capable models.

OpenAI is pursuing an ambitious plan to construct massive 5-gigawatt (GW) data centers across various locations in the United States. The initiative aims to support the growing demands of artificial intelligence (AI) processing, which requires substantial computational power. At what cost to the energy grid, do you suppose?
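To get a feel for the scale, here is a rough sketch converting a constant 5 GW draw into annual energy. It assumes the campus runs at full load every hour of the year, which makes the result an upper bound; the `capacity_factor` parameter is a hypothetical knob for lower utilization. The 176 TWh comparison point is the Berkeley Lab estimate for 2023 U.S. data center use cited later in this piece.

```python
# Upper-bound annual energy for a data center drawing a constant load.
# ASSUMPTION: full 5 GW draw around the clock unless a lower capacity factor is given.

HOURS_PER_YEAR = 8_760  # 365 days * 24 hours


def annual_twh(gigawatts: float, capacity_factor: float = 1.0) -> float:
    """Annual energy in TWh for `gigawatts` of load at the given capacity factor."""
    gwh = gigawatts * HOURS_PER_YEAR * capacity_factor
    return gwh / 1_000  # 1 TWh = 1,000 GWh


US_DATA_CENTERS_2023_TWH = 176  # Berkeley Lab figure for 2023

one_campus = annual_twh(5)
print(f"one 5 GW campus: {one_campus:.1f} TWh/year")
print(f"share of 2023 U.S. data center use: {one_campus / US_DATA_CENTERS_2023_TWH:.0%}")
```

At full load, a single 5 GW campus would use about 43.8 TWh a year, roughly a quarter of what all U.S. data centers consumed in 2023.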

⚡ AI Infrastructure at all costs

These gigacenters of AI compute, the data center campuses that power AI and cloud computing, are going to expand, multiply, and grow tremendously in just the next couple of years, with 2025 to 2027 among the biggest spikes in AI infrastructure our civilization has ever seen.

Major players like Amazon, Microsoft, and Alphabet are projected to have spent a combined total of over $240 billion on AI-related infrastructure in 2024. This represents a substantial increase from previous years, reflecting growing demand for AI capabilities and services. In 2025, this figure could rise considerably.

Renewable energy alone won’t be sufficient anytime soon to meet their power needs. What this will do to the cost of energy in some regions will likely be alarming. But is society ready for the energy costs and environmental factors involved in scaling AI infrastructure?

In 2025, Amazon, Google, Meta, and Microsoft alone are expected to churn through $300 billion in capex. OpenAI has reportedly engaged with the Biden administration about multiple massive 5-gigawatt (GW) data centers. Elon Musk has secured Saudi investment for xAI's massive datacenter.

Data centers are one of the key reasons US power demand is expected to jump 16% over the next five years, after decades of flat usage, Grid Strategies has forecast.

But if things continue to accelerate, it could be a lot more than that. Altman, the CEO of OpenAI, is also Oklo's chairman, and he favors leveraging money from the UAE. It's not so surprising that the oil-rich Middle East is behind funding both Altman's and Musk's datacenter ambitions. But wait, there's more.

American Big Oil also wants to help BigTech with their datacenter energy problem. Exxon Mobil and Chevron are jumping into the race to power AI data centers. Big Energy wants to be the solution, not just an old problem. Exxon plans to build a natural gas plant to power a data center and use carbon capture technology to slash the plant’s emissions. Exxon estimates decarbonizing AI data centers could represent up to 20% of its total addressable market for carbon capture and storage by 2050.

Technology companies are increasingly looking into liquid cooling for their data centers, as rising performance needs also ramp up overheating risks. Traditionally, data centers have large aisles to let cooled air circulate, since overheating can lead to equipment failure and downtime. In theory, liquid cooling should let these facilities pack more servers into their existing footprint, and it's all becoming big business. Datacenter proliferation is creating opportunities for a whole new world.

I don't see how higher future energy costs can be avoided. The Machine World will increasingly compete with people for energy. In the current paradigm, this means BigTech vs. the regions where they build their data centers. This could cause real-estate, water, and community chaos, not just a crisis from energy demand alone.

Data centers in the U.S. could consume as much electricity by 2030 as some entire industrialized economies.

The reality in 2025 is that the power needs of AI are growing so large that the tech companies are searching for sources of electricity that are more reliable than renewable energy.

Nuclear plants take a long time to build, so a turn to natural gas is highly probable. AI doesn't wait for pesky timelines, which is where Big Oil will come to the rescue. The early stage of the AI ramp-up, or what I call the great datacenter boom, is going to be a bonanza of BigTech becoming more entangled in the energy industry, and that will set a dangerous precedent.

BigTech is getting Too Powerful (🔋 get it?)

Overall, IDC expects global datacenter electricity consumption to more than double between 2023 and 2028, with a five-year CAGR of 19.5%, reaching 857 terawatt-hours (TWh) in 2028. Electricity is by far the largest ongoing expense for datacenter operators, accounting for 46% of total spending for enterprise datacenters and 60% for service provider datacenters.
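IDC's two figures imply a third: the 2023 starting point. A minimal sketch that works backwards from the 2028 projection, assuming the 19.5% CAGR applies uniformly across all five years:

```python
# Back out the starting value implied by an end value and a CAGR.
# ASSUMPTION: constant 19.5% growth every year from 2023 to 2028.

def implied_start(end_value: float, cagr: float, years: int) -> float:
    """Value that would grow to `end_value` after `years` years at rate `cagr`."""
    return end_value / (1 + cagr) ** years


TWH_2028 = 857  # IDC projection for 2028
twh_2023 = implied_start(TWH_2028, 0.195, 5)

print(f"implied 2023 consumption: {twh_2023:.0f} TWh")
print(f"growth multiple 2023->2028: {TWH_2028 / twh_2023:.2f}x")
```

This yields roughly 352 TWh for 2023 and a growth multiple of about 2.44x, consistent with consumption "more than doubling."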

Datacenter Density in the U.S., a 2024 Snapshot

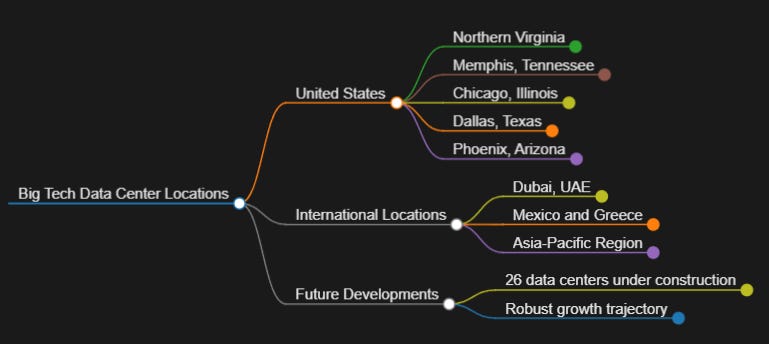

Data centers in the United States are primarily located in several key regions, driven by factors such as access to power, connectivity, and favorable economic conditions.

- Northern Virginia: over 300 data centers and approximately 3,945 megawatts of commissioned power.
- Dallas region: 150 data centers; Dallas benefits from low real estate costs and a strong economy.
- Silicon Valley (Northern California): known for its tech innovation, Silicon Valley has over 160 data centers. Despite high costs, its robust connectivity options attract many operators.
- Phoenix: with over 100 data centers and around 1,380 megawatts of power, Phoenix offers lower taxes and affordable real estate.
- Chicago: more than 110 data centers, strategically located between major markets.

Overall, global data center electricity consumption is expected to more than double between 2023 and 2028.

However, between 2028 and 2033, it could far more than double, and we are not ready.

Datacenters will soon need more Electricity than Entire Cities

🔌 BigTech's big problem: energy to power their AI greed.

Microsoft is helping to bring the Three Mile Island nuclear reactor back online by purchasing power from the plant. Amazon and Alphabet's Google unit are investing in next-generation, small nuclear reactors. Meta recently called on companies to send it proposals to build new nuclear plants. In early December 2024, Meta announced a $10 billion datacenter campus in Louisiana, spanning 4 million sq ft with 2 GW of capacity.

Entergy recently proposed to develop a 1.5GW natural gas plant in Louisiana, near Holly Ridge, for a new data center customer. Entergy’s motto is “we power life”.

According to market research company Omdia, the generative AI market, valued at $6 billion in 2023, could reach $59 billion in 2028. Meanwhile, Microsoft and Meta bought expensive, bleeding-edge GPUs from Nvidia hand over fist in 2024. Microsoft likely bought the most to feed OpenAI, more than Amazon and Google bought to feed Anthropic. All of this makes Nvidia and TSMC ridiculous monopolies.

In April 2024, Amazon Web Services (AWS) revealed plans to invest $11 billion in a new data center campus in Northern Indiana. In the press release, Amazon mostly boasted about job creation, with no mention of the energy infrastructure.

As the Cloud and the Machine World evolve, the demand for energy will likely surpass the supply. What happens then? What do Elon Musk's activities in Memphis, Tennessee, Meta's in Louisiana, or Amazon's in Indiana even mean? This is only the beginning.

Where do we expect this to lead, exactly? Nvidia has incredible AI chips, and TSMC produces world-leading semiconductors with an efficiency nearly impossible for anyone to compete with. But the majority of Generative AI revenue is accruing to the companies with the biggest earnings and growth, and to those with the most skin in the game, further enriching the planet's wealthiest one percent and augmenting their control over the future.

Meanwhile, in 2024, Microsoft and Google were very active building, and planning to build, datacenters abroad, especially in Europe and Southeast Asia. The U.S. is exporting its AI infrastructure and machine learning training to other parts of the world to maintain BigTech's monopoly power and acquire cheaper labor, a veritable AI colonialism for a new era. It's not just ChatGPT that is swallowing the competition.

If AI eats Software, who is paying for the energy? We are. We all are.

10% of all Energy for Datacenters by 2028?

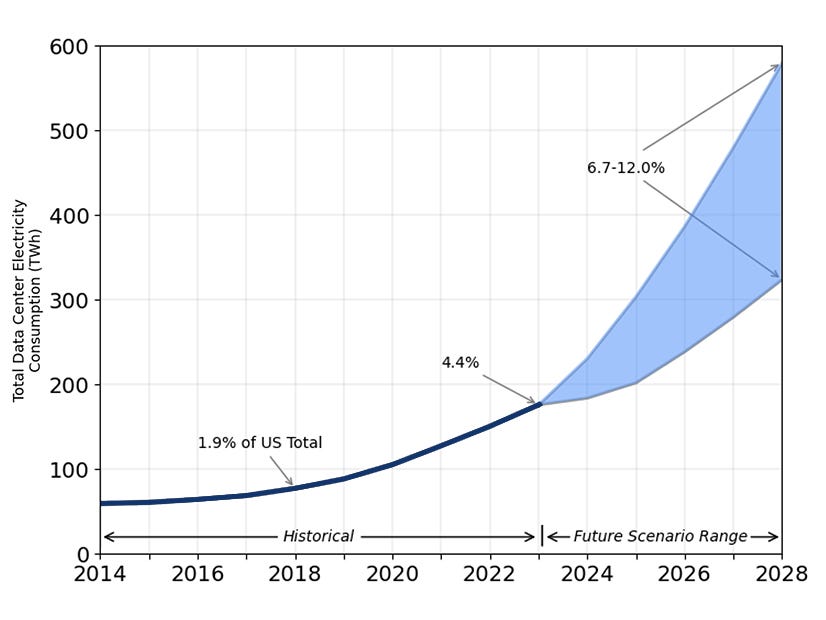

By 2028, U.S. data centers could account for 6.7% to 12% of all electricity demand nationwide, topping out at 580 TWh, according to Berkeley Lab researchers.
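The share and TWh figures can be cross-checked by dividing one by the other, which gives the total U.S. electricity demand each end of the range assumes. This sketch pairs the 6.7% share with the 325 TWh low estimate reported by Berkeley Lab (cited further down) and the 12% share with 580 TWh; that low-with-low, high-with-high pairing is my assumption, not something the report states here.

```python
# Total U.S. electricity demand implied by a data center estimate and its share.
# ASSUMPTION: low TWh estimate pairs with the low share, high with high.

def implied_total_twh(data_center_twh: float, share: float) -> float:
    """Total demand (TWh) of which `data_center_twh` is the fraction `share`."""
    return data_center_twh / share


low_total = implied_total_twh(325, 0.067)   # 325 TWh at 6.7%
high_total = implied_total_twh(580, 0.12)   # 580 TWh at 12%

print(f"low scenario implies {low_total:,.0f} TWh total")
print(f"high scenario implies {high_total:,.0f} TWh total")
```

Both ends land at roughly 4,830 to 4,850 TWh of total demand, so the range hangs together.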

We are going to move so far above historical levels so fast that we won't be able to cope. U.S. data center annual energy use remained stable from 2014 to 2016 at about 60 TWh, continuing a minimal-growth trend observed since about 2010.

So what will that future look like for energy demand and prices?

US data center demand as a percentage of total US power consumption:

- 2018: 1.9%
- 2023: 4.4%
- 2028: 6.7% to 12% (estimate)

I assume the U.S. Department of Energy (DOE) is going to be overwhelmed. Frankly, it's hard to imagine otherwise.

🎄 The New Reality

The voracious appetite of data centers for electricity could more than triple over the next four years, according to the Lawrence Berkeley National Laboratory, taking their share of U.S. power demand from 4.4% in 2023 to as high as 12% in 2028.

- The report indicates that total data center electricity usage climbed from 58 TWh in 2014 to 176 TWh in 2023, and estimates it will reach between 325 and 580 TWh by 2028.
- The compound annual growth rate would be between 13% and 27% from 2023 to 2028.
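The 13% to 27% range follows from the report's endpoints via the standard CAGR formula, (end / start)^(1 / years) - 1. A short sketch that reproduces it and, for comparison, computes the 2014 to 2023 rate implied by the 58 and 176 TWh figures:

```python
# Compound annual growth rate (CAGR) from two endpoints.
# Inputs are the Berkeley Lab TWh figures quoted above.

def cagr(start: float, end: float, years: int) -> float:
    """Constant yearly growth rate that turns `start` into `end` over `years` years."""
    return (end / start) ** (1 / years) - 1


TWH_2014, TWH_2023 = 58, 176
TWH_2028_LOW, TWH_2028_HIGH = 325, 580

low = cagr(TWH_2023, TWH_2028_LOW, 5)    # 2023 -> 2028, low scenario
high = cagr(TWH_2023, TWH_2028_HIGH, 5)  # 2023 -> 2028, high scenario
past = cagr(TWH_2014, TWH_2023, 9)       # 2014 -> 2023, historical

print(f"2023-2028 CAGR: {low:.0%} to {high:.0%}")
print(f"2028 high vs 2023: {TWH_2028_HIGH / TWH_2023:.1f}x")
print(f"2014-2023 CAGR: {past:.0%}")
```

The 2023 to 2028 range comes out at about 13% and 27%, and 580 TWh is about 3.3 times the 2023 level, which is where "more than triple" comes from. The 2014 to 2023 rate is also about 13%, so even the low forecast roughly continues the recent pace.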

The U.S. data center sector was estimated to have consumed about 61 billion kilowatt-hours (kWh) in 2006, equivalent to 1.5% of total U.S. electricity consumption. That we could hit nearly 10% just twenty years later is pretty mad, but it's what comes after that's the crazy part for civilization. These forecasts don't model far enough ahead to show where this leads.

Utilities consistently emphasize data centers, and specifically AI, as drivers of projected increases in electricity demand. Amorphous, all-American, BigTech’s AI.

A Machine World of our energy sucking overlords.

Merry Christmas! 🔔

Read More in AI Supremacy