
Welcome to another article from AI Supremacy, a newsletter about AI at the intersection of tech, business, society and the future. Welcome as well to our new readers, who are joining 170,000 others. Check the rising publications on our host platform in the Technology category here. You are also welcome to join our new community, an extension of the AI Supremacy readership where readers and other AI writers discuss the frontiers of AI and other niche topics in a more interactive setting.

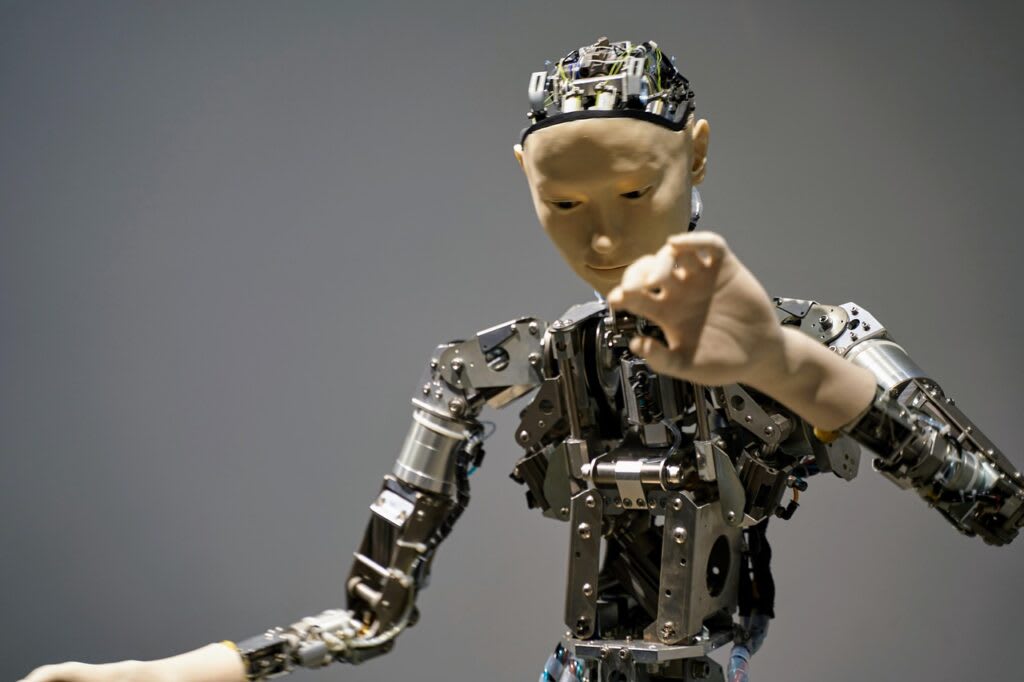

I am becoming more bullish on the intersection of AI and robotics than many others, especially those who are fairly critical of how LLMs are translating into real business value. Let me try to explain and summarize why. One of the reasons I’m more optimistic about the near future of robots is how quickly world foundation models are evolving, and are likely to keep evolving, in the 2025 to 2030 period.

- This article is going to be a premium deep-dive report, month by month, on what has occurred so far in robotics in 2025, from the Droids Newsletter.

🔴 This article is an introduction for AI enthusiasts who might want to know what’s going on in robotics, physical AI and humanoids. View our robotics section here.

What is a World Foundation Model?

World foundation models (WFMs) are neural networks that simulate real-world environments as videos and predict accurate outcomes based on text, image, or video input.

These models will accelerate how robots can learn to do tasks and work together in a variety of ways. Companies like Google, Nvidia, Meta, Physical Intelligence and Skild AI, among others, are working on this. Everyone wants to build the “software brain” for the future of robotics.

In a short span of time, Nvidia, Ai2, Google and others released their WFMs with relevance to robotics simulation.

Nvidia’s Cosmos 🌌

Earlier this week Nvidia unveiled its new world AI models, libraries, and other infrastructure for robotics developers, most notable of which is Cosmos Reason, a 7-billion-parameter “reasoning” vision language model for physical AI applications and robots.

The Omniverse libraries and Cosmos foundation models are aimed directly at speeding up robotics solutions. Cosmos WFMs, downloaded over 2 million times, let developers generate diverse data for training robots at scale using text, image and video prompts. What’s new? Nvidia’s Cosmos Transfer-2, which can accelerate synthetic data generation from 3D simulation scenes or spatial control inputs, and a distilled version of Cosmos Transfer that is optimized for speed. Cosmos Transfer-2 is essentially a fully upgraded Cosmos world model for robots.

Ai2 Announces Breakthrough

On August 12, Ai2 launched MolmoAct, a breakthrough robotics model that brings structured reasoning into the physical world, enabling robots to understand language, plan in 3D, and act with control and transparency. Physical AI, where robotics and foundation models come together, is a fast-growing space for both Big Tech and emerging startups.

Who is Ai2? The Allen Institute for AI is a Seattle-based non-profit AI research institute founded in 2014 by the late Paul Allen. It pursues foundational AI research and innovation to deliver real-world impact through large-scale open models, data, robotics, conservation, and beyond.

MolmoAct is fully open-source, reproducible, and built to unlock safer, more adaptable embodied AI, with all weights, data, and benchmarks being released publicly. The company claims MolmoAct is truly innovative: the first model able to “think” in three dimensions. I view Ai2 as an important open-weight non-profit in the emerging tech space.

MolmoAct, based on Ai2’s open source Molmo, “thinks” in three dimensions.

Watch the Launch Video on MolmoAct

2 minutes, 45 seconds.

At a high level, MolmoAct reasons through three autoregressive stages:

- Understanding the physical world.
- Planning in image space.
- Action decoding.

Ai2 classifies MolmoAct as an Action Reasoning Model, in which foundation models reason about actions within a physical, 3D space.
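To make the three stages more concrete, here is a toy Python sketch of a staged pipeline in which each stage consumes the output of the one before it. This is not Ai2’s code or API. Every name in it (`ScenePerception`, `ImagePlan`, `Action`, `perceive`, `plan_in_image_space`, `decode_actions`, `run_pipeline`) is hypothetical, and the “model” is replaced by simple arithmetic on a small depth grid. It only illustrates the idea of perceiving first, then planning in image coordinates, then decoding actions.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class ScenePerception:
    """Stage 1 output (hypothetical): (row, col, depth) tokens for the scene."""
    depth_tokens: List[Tuple[int, int, int]]


@dataclass
class ImagePlan:
    """Stage 2 output (hypothetical): waypoints in image (row, col) coordinates."""
    waypoints: List[Tuple[int, int]]


@dataclass
class Action:
    """Stage 3 output (hypothetical): one step expressed as a pixel displacement."""
    drow: int
    dcol: int


def perceive(depth_image: List[List[int]]) -> ScenePerception:
    # Stand-in for "understanding the physical world":
    # turn a toy depth grid into a flat list of (row, col, depth) tokens.
    tokens = [(r, c, d) for r, row in enumerate(depth_image) for c, d in enumerate(row)]
    return ScenePerception(depth_tokens=tokens)


def plan_in_image_space(perception: ScenePerception,
                        start: Tuple[int, int],
                        steps: int = 4) -> ImagePlan:
    # Stand-in for "planning in image space":
    # pick the nearest point (smallest depth) as the goal and
    # interpolate a straight line of waypoints from start to goal.
    goal_r, goal_c, _ = min(perception.depth_tokens, key=lambda t: t[2])
    waypoints = [
        (round(start[0] + (goal_r - start[0]) * i / steps),
         round(start[1] + (goal_c - start[1]) * i / steps))
        for i in range(steps + 1)
    ]
    return ImagePlan(waypoints=waypoints)


def decode_actions(plan: ImagePlan) -> List[Action]:
    # Stand-in for "action decoding":
    # convert consecutive waypoints into step-by-step displacements.
    return [Action(b[0] - a[0], b[1] - a[1])
            for a, b in zip(plan.waypoints, plan.waypoints[1:])]


def run_pipeline(depth_image: List[List[int]],
                 start: Tuple[int, int],
                 steps: int = 4) -> List[Action]:
    # Each stage is conditioned on the previous stage's output.
    perception = perceive(depth_image)
    plan = plan_in_image_space(perception, start, steps)
    return decode_actions(plan)
```

Because the intermediate `ImagePlan` is an explicit object, a person could inspect or edit the waypoints before any action is decoded, which is the kind of preview-and-steer workflow the article describes.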

How is MolmoAct SOTA? 🌠

NVIDIA’s GR00T-N1-2B model was trained on 600 million samples with 1,024 H100s, while Physical Intelligence trained π0 using 900 million samples and an undisclosed number of chips.

Ai2 evaluated MolmoAct’s pre-training capabilities through SimPLER, a benchmark containing a set of simulated test environments for common real robot manipulation setups. MolmoAct achieved a state-of-the-art (SOTA) out-of-distribution task success rate of 72.1%, beating models from Physical Intelligence, Google, Microsoft, NVIDIA, and others.

- Reasoning-first: Goes beyond end-to-end task execution to explain how and why actions are taken
- Language to action: Translates natural commands into spatially grounded behavior
- Transparent + safe: Users can preview and steer robot plans before they act
- Efficient + open: Trained on 18M samples in ~24 hours; outperforming larger models (71.9% on SimPLER)
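For a rough sense of the efficiency claim, the sample counts quoted in this article can be compared directly. The short Python snippet below is only back-of-the-envelope arithmetic on those three figures. The dictionary and the function name `sample_ratio` are my own illustrative choices, and the comparison assumes the sample counts are roughly comparable units, which may not hold across different datasets and model sizes.

```python
# Training sample counts as quoted in this article.
TRAINING_SAMPLES = {
    "GR00T-N1-2B": 600_000_000,
    "pi0": 900_000_000,
    "MolmoAct": 18_000_000,
}


def sample_ratio(model: str, baseline: str = "MolmoAct") -> float:
    """Return how many times more samples `model` used than `baseline`."""
    return TRAINING_SAMPLES[model] / TRAINING_SAMPLES[baseline]


for name in ("GR00T-N1-2B", "pi0"):
    print(f"{name} used about {sample_ratio(name):.1f}x the samples of MolmoAct")
```

On these figures, GR00T-N1-2B used roughly 33 times as many samples as MolmoAct and π0 used 50 times as many.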

A New Class of Model: Action Reasoning

According to Ai2, MolmoAct is the first in a new category of AI model that we can refer to as an Action Reasoning Model (ARM): a model that interprets high-level natural language instructions and reasons through a sequence of physical actions to carry them out in the real world.

What this suggests to me is that WFMs will speed up robotics training enough to make general-purpose robots possible sooner than anticipated.

🤖 Articles on Robotics and Humanoid Robotics

(see Section) For deep reports and journalism on robotics, I’m a huge fan of .

- Chinese EV Makers’ Foray into AI: Redefining the Future of Mobility


Google’s Genie 3: A new frontier for world models 🧞

Around a week ago, Google released Genie 3. While WFMs remain limited in 2025, Genie 3 offers improvements like real-time interaction and better memory.

“Genie 3 is the first real-time interactive general-purpose world model,” said Shlomi Fruchter, a research director at DeepMind.

Meta Superintelligence Lab 💎

In June 2025, before Meta went on its legendary poaching spree of AI talent, it unveiled a new AI model called V-JEPA 2 that it said can better understand the physical world.

I believe Meta’s new Meta Superintelligence Lab could further accelerate how WFMs evolve. Advances in consumer robotics and robot training that were not expected for decades could then arrive, more or less, in the late 2020s and 2030s.

You might also remember that in September last year, leading AI researcher Fei-Fei Li raised $230 million for a new startup called World Labs, which aims to create what it calls “large world models” that can better understand the structure of the physical world. So if GPT-5 is proof of a scaling bottleneck for general-purpose LLMs, the focus can now shift to fine-tuning WFMs for robotics.

Companies to Watch 🐉

-

Nvidia

-

Thinking Machine Labs (who are building open-weight models)

-

Apple (developing consumer robotics)

-

Ai2

-

Unitree Robotics

-

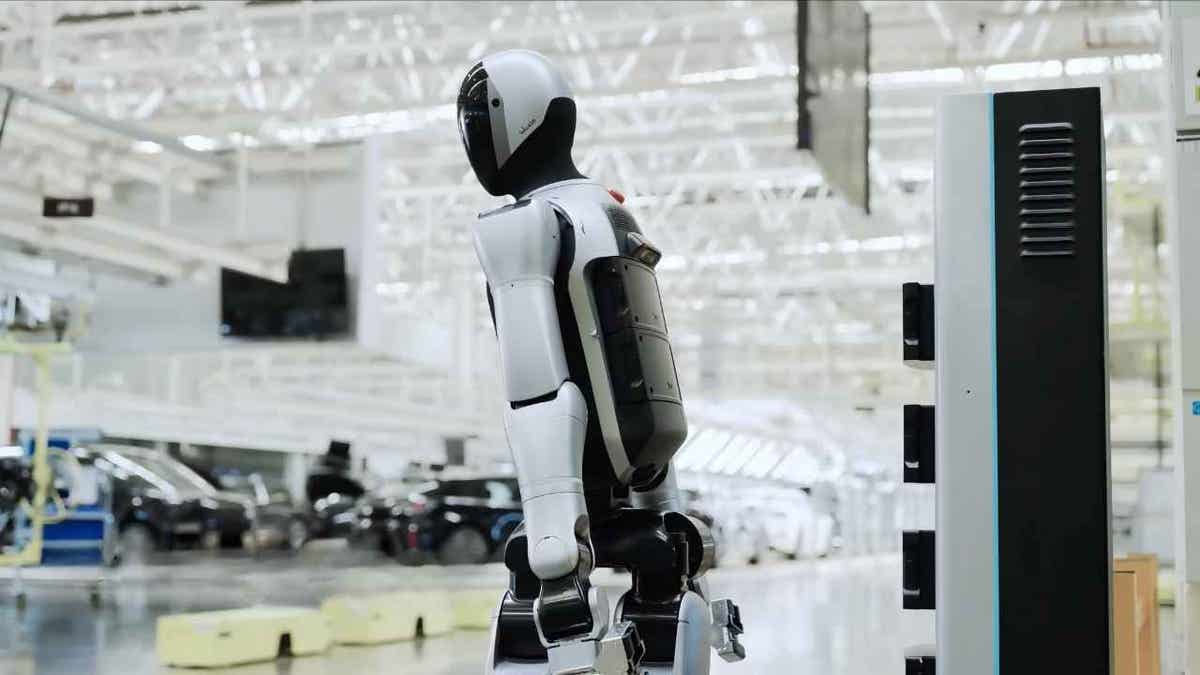

Tesla Optimus Robotics

-

Amazon

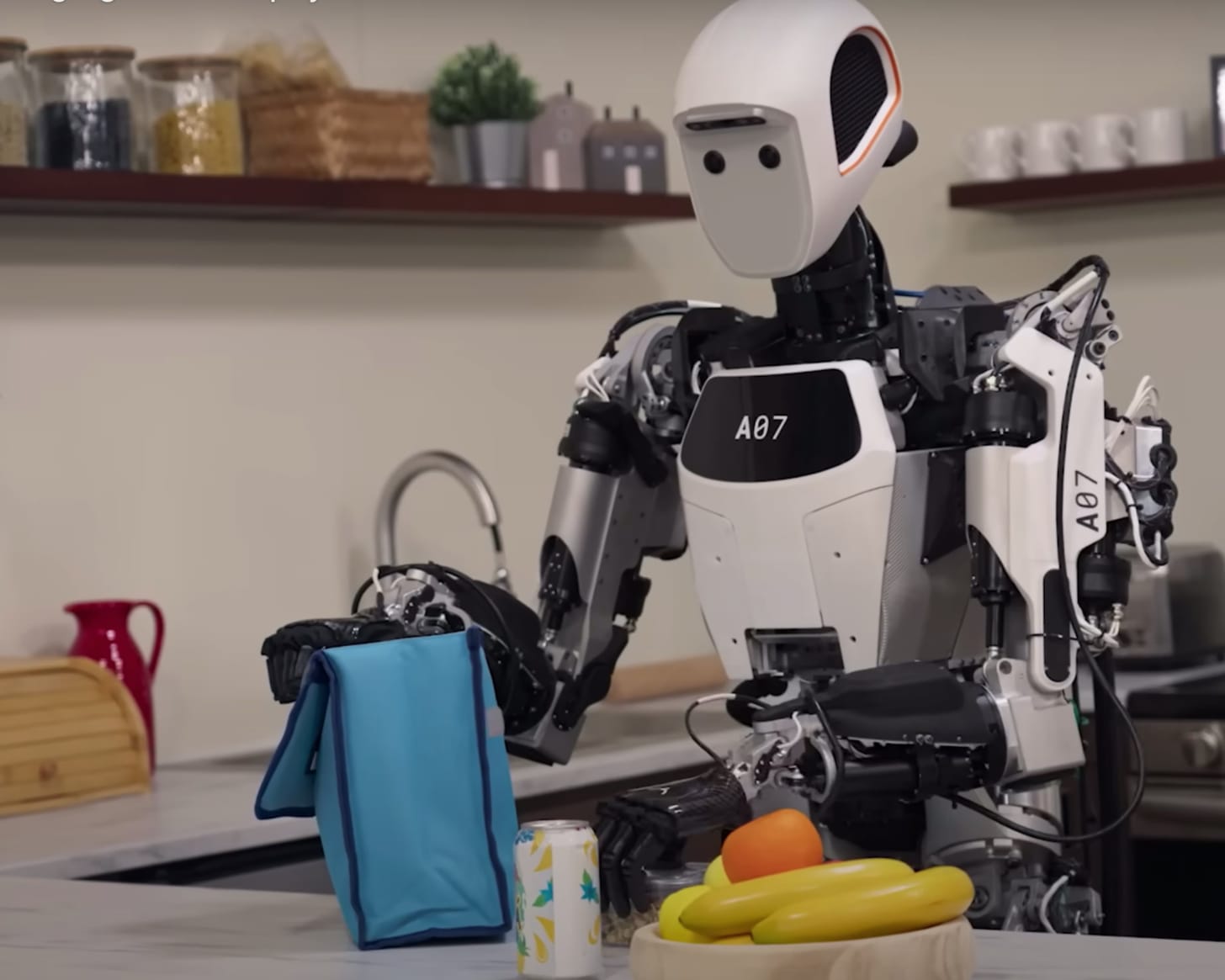

Consumer robotics will also be fairly interesting to watch in terms of the China vs. United States dynamic, given how aggressive China’s EV startups have been vs. American innovation.

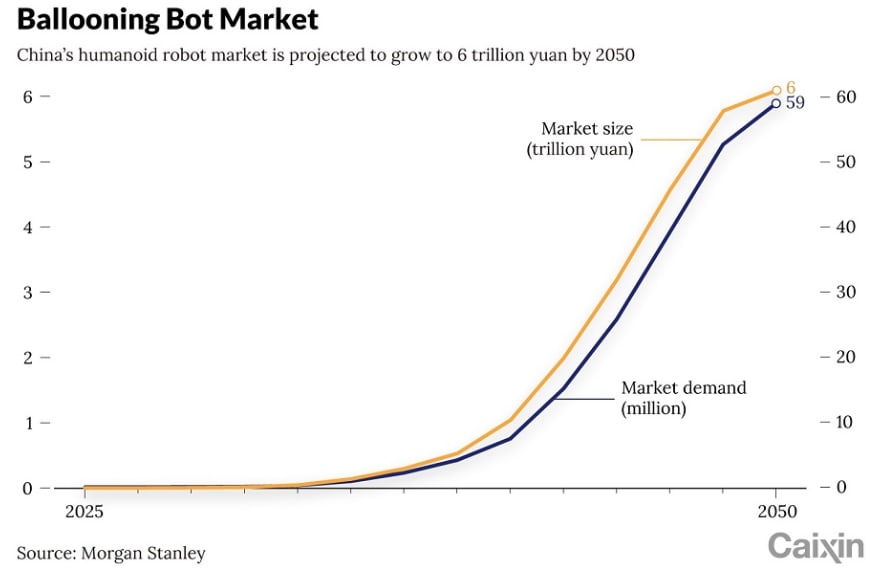

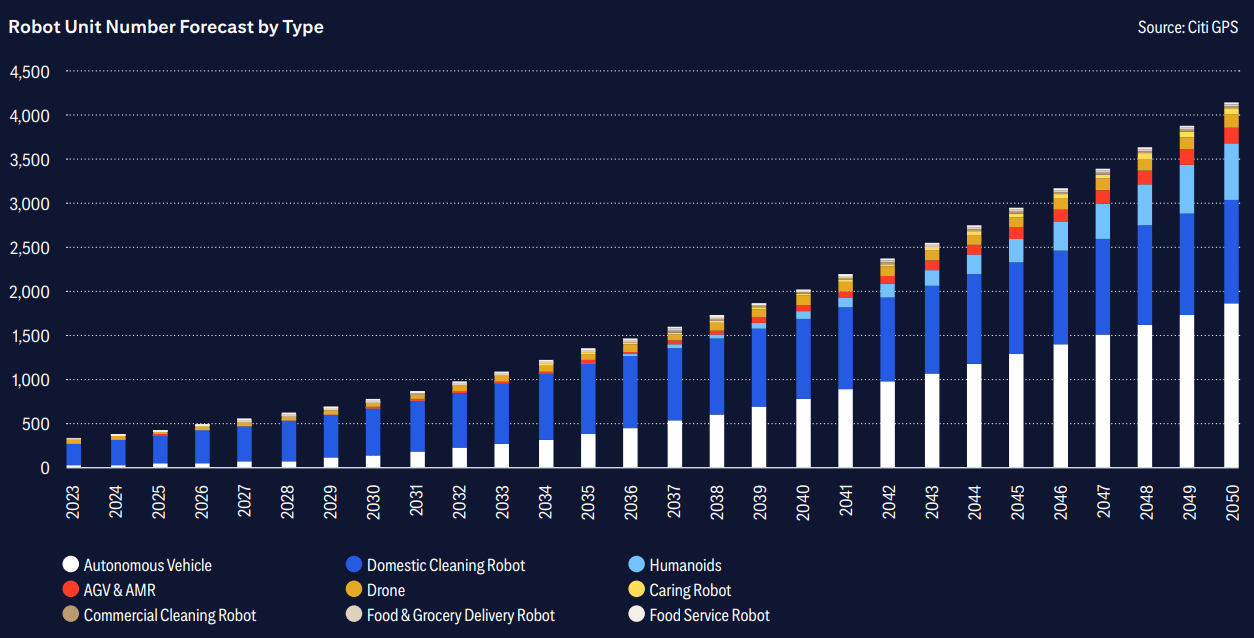

Some analysts see humanoid robotics as a $5 to $7 trillion opportunity over roughly the next 25 years.

A 25 Year Explosion of Robots in Human Civilization

- Generative AI’s impact on robotics may have been underestimated in 2023 and before, and we’re finding out that it is more impactful with WFMs and other techniques.
- AI moving in the direction of AI recursive self-improvement (ARSI) may also accelerate adapting robots to the real world in the late 2020s, in a “GPT moment for robotics” that I’m anticipating around 2027. We are already starting to see this in 2025 in the domain of AI coding.

⚓︎ From the Robot Lighthouse 🌊

Here is a list of articles I found useful for context on the robotics industry in my general reading on the topic:

- Robots can program each other’s brains with AI, scientist shows.
- Allen Institute for AI bolsters robotics leadership: UW prof Dieter Fox leaves Nvidia to lead robotics initiative at Allen Institute for AI
- OpenMind wants to be the Android operating system of humanoid robots. OpenMind is building a software layer, OM1, for humanoid robots that acts as an operating system.
- Amazon-backed Skild AI unveils general-purpose AI model for multi-purpose robots.
- New vision-based system teaches machines to understand their bodies via MIT’s Computer Science and Artificial Intelligence Laboratory (CSAIL). Read paper
- Augmentus raises Series A+ funding to reduce robot programming complexity
- Hexagon launches AEON humanoid robot for industrial applications
- Agility Robotics, Boston Dynamics see leadership changes. Aaron Saunders, chief technology officer at Boston Dynamics, announced on LinkedIn that he is stepping down from that role. And Melonee Wise, chief product officer at Agility Robotics, is leaving the company at the end of the month.
- Domino’s deploys Boston Dynamics’ robot dog to deliver pizza and fend off seagulls on beaches. 🌴
- Google says its new ‘world model’ could train AI robots in virtual warehouses.
- AI-powered exoskeletons are helping paralyzed patients walk again.
- Humanoid robot swaps its own battery to work 24/7 via UBTech: Instead of shutting down to recharge, the Walker S2 walks to a nearby swap station. When one battery starts to run low, the robot turns its torso, uses built-in tools on its arms and removes the drained battery.

Let’s get into our deep dive mid-2025 Robotics report:

The Year the Robots got up and Walked Out

A Mid-Year Chronicle of Humanoid Revolution

- Check out the notes of Diana Wolf, our lead robotics reporter.

Ai2’s advances essentially open-source ongoing WFM developments that help train robots on real-world tasks better and faster. Nvidia and all the other companies I’ve mentioned are a big part of this too.

- This article is a great primer on all that has occurred in robotics news so far in the first half of 2025.
