Hello Engineering Leaders and AI Enthusiasts!

This newsletter brings you the latest AI updates in a crisp manner! Dive in for a quick recap of everything important that happened around AI in the past two weeks.

And a huge shoutout to our amazing readers. We appreciate you😊

In today’s edition:

⚔️ Alibaba’s Qwen3 challenges AI’s biggest names

💸 Baidu slashes AI costs to take on DeepSeek

🎨 OpenAI drops image API to fuel visual AI

🗣️ Undergrads build AI voice model to rival giants

🏢 Mechanize wants AI to fully automate jobs

⚡ Google’s Gemini 2.5 Flash targets fast, smart AI

🧠 OpenAI’s o3 and o4-mini raise the bar for AI reasoning

🌐 OpenAI plans its own AI-powered social network

🧠 Knowledge Nugget: What AI Can’t Replace: Intuition, Alignment, and Taste by Jean Hsu

Let’s go!

Alibaba’s Qwen3 challenges AI’s biggest names

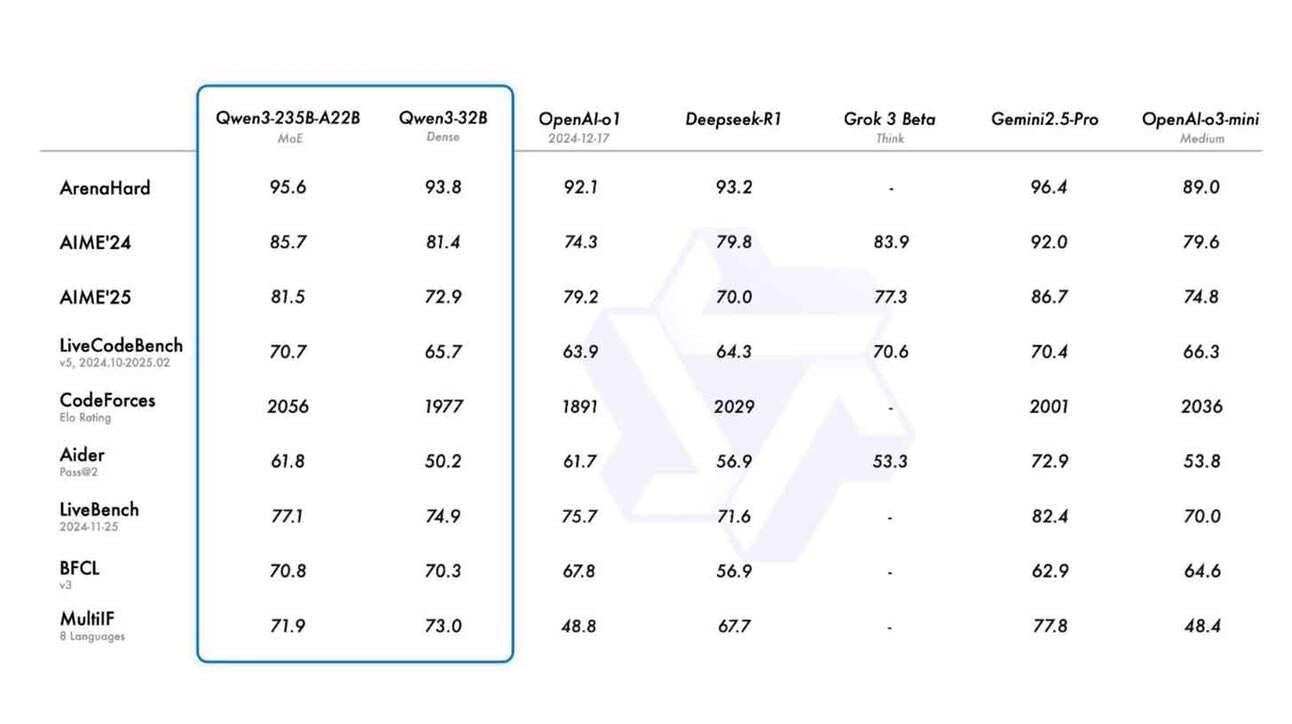

Alibaba’s Qwen AI lab has dropped Qwen3, a new family of eight open-weight language models ranging from 600M to 235B parameters, and the top-tier Qwen3-235B is benchmarking alongside models like OpenAI’s o1, DeepSeek-R1, and Grok-3. The models include a hybrid reasoning system that toggles between fast, low-latency responses and deep analytical thinking.

Qwen3 also supports 119 languages and comes with enhanced coding and agentic skills, allowing more complex task execution. All models are released under Apache 2.0, available through Hugging Face and other deployment platforms.

Why does it matter?

Qwen3’s release further signals China’s serious play in the open-weight frontier. It also adds pressure on U.S. labs to keep pace in both performance and licensing freedom, a growing factor for enterprise AI adoption.

Baidu slashes AI costs to take on DeepSeek

At Baidu Create 2025, Baidu unveiled two new AI models, Ernie 4.5 Turbo and Ernie X1 Turbo, with better multimodal reasoning, faster response times, and dramatically lower prices. Ernie 4.5 Turbo now costs just 20% of its previous version, and Ernie X1 Turbo is half the cost of its predecessor. Baidu also launched new apps like Xinxiang for task management and showcased realistic AI-powered digital humans.

Beyond cheaper models, Baidu is leaning into standards like the Model Context Protocol (MCP) to make AI integration smoother for developers, echoing trends seen with APIs and USB in tech history.

Why does it matter?

Baidu’s push to undercut DeepSeek on cost and capabilities reflects China’s AI momentum and signals that global AI competition may increasingly hinge on price, speed, and integration, not just raw model power.

OpenAI drops image API to fuel visual AI

OpenAI has rolled out GPT Image 1, its natively multimodal model, to the public via its API (gpt-image-1). Initially launched inside ChatGPT, it quickly became a hit, generating over 700 million images in just the first week. Now, businesses and developers can directly integrate text-to-image capabilities, detailed image editing, and style rendering into their platforms and tools.

Big names like Adobe, Figma, Airtable, and Quora are already using the model to speed up creative workflows, polish product visuals, and scale marketing assets.

Why does it matter?

By embedding image generation into APIs, OpenAI is turning visual AI into a background service, much like cloud storage or payments. This could reshape design, e-commerce, and marketing workflows, making AI-generated visuals the new default, not the exception.
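
For teams wondering what "directly integrate" might look like in practice, here is a minimal sketch using only the Python standard library. The model name gpt-image-1 comes from the announcement; the endpoint URL, the request fields, and the base64 response field are assumptions on our part, so check OpenAI's current Images API reference before relying on them. The helper names are ours, purely for illustration.

```python
import base64
import json
import urllib.request

# Assumed endpoint and field names (not stated in this newsletter);
# verify against the current OpenAI Images API reference.
IMAGES_URL = "https://api.openai.com/v1/images/generations"


def build_image_request(prompt: str, api_key: str, size: str = "1024x1024") -> urllib.request.Request:
    """Build (but do not send) a text-to-image request for gpt-image-1."""
    payload = {"model": "gpt-image-1", "prompt": prompt, "size": size, "n": 1}
    return urllib.request.Request(
        IMAGES_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


def decode_first_image(response_body: dict) -> bytes:
    """Return raw image bytes, assuming base64 output in data[0].b64_json."""
    return base64.b64decode(response_body["data"][0]["b64_json"])


def generate_image(prompt: str, api_key: str) -> bytes:
    """Send the request and return image bytes. Needs network access and a valid key."""
    request = build_image_request(prompt, api_key)
    with urllib.request.urlopen(request) as response:
        return decode_first_image(json.load(response))
```

Calling generate_image("a product shot of a red bicycle", api_key) would return bytes you can write to a file. Building the request separately from sending it keeps the payload easy to inspect and test without spending API credits.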

Undergrads build AI voice model to rival giants

Korean startup Nari Labs, founded by two undergrads, has launched Dia, an open-source text-to-speech model that claims to outperform ElevenLabs and Sesame in expressiveness, timing, and handling nonverbal cues like laughter and coughing. Built without outside funding but using Google’s TPU Research Cloud, it supports emotional tones and multiple speaker tags.

The team plans to turn Dia into a consumer app for social content creation and remixing, signaling how high-quality voice AI is rapidly becoming accessible to small teams.

Why does it matter?

Dia shows that high-end AI voice models are no longer the exclusive domain of big companies. Open access to computing and research tools is lowering the barrier for new entrants, much like how open-source software reshaped the early internet era.

Mechanize wants AI to fully automate jobs

Tamay Besiroglu, co-founder of AI research firm Epoch, has launched Mechanize, a startup aiming to create virtual workplaces where AI agents can train to perform complex white-collar tasks. The goal? Achieve what they call the “full automation of all work.” Mechanize plans to simulate office environments so agents can learn to handle long-term projects, interruptions, and collaboration.

Why does it matter?

Mechanize isn’t the first to bet that AI will eventually run the entire office, but pushing this vision publicly could intensify already growing concerns over AI-driven job losses. Full automation may be inevitable, but it’s unlikely to be embraced quietly.

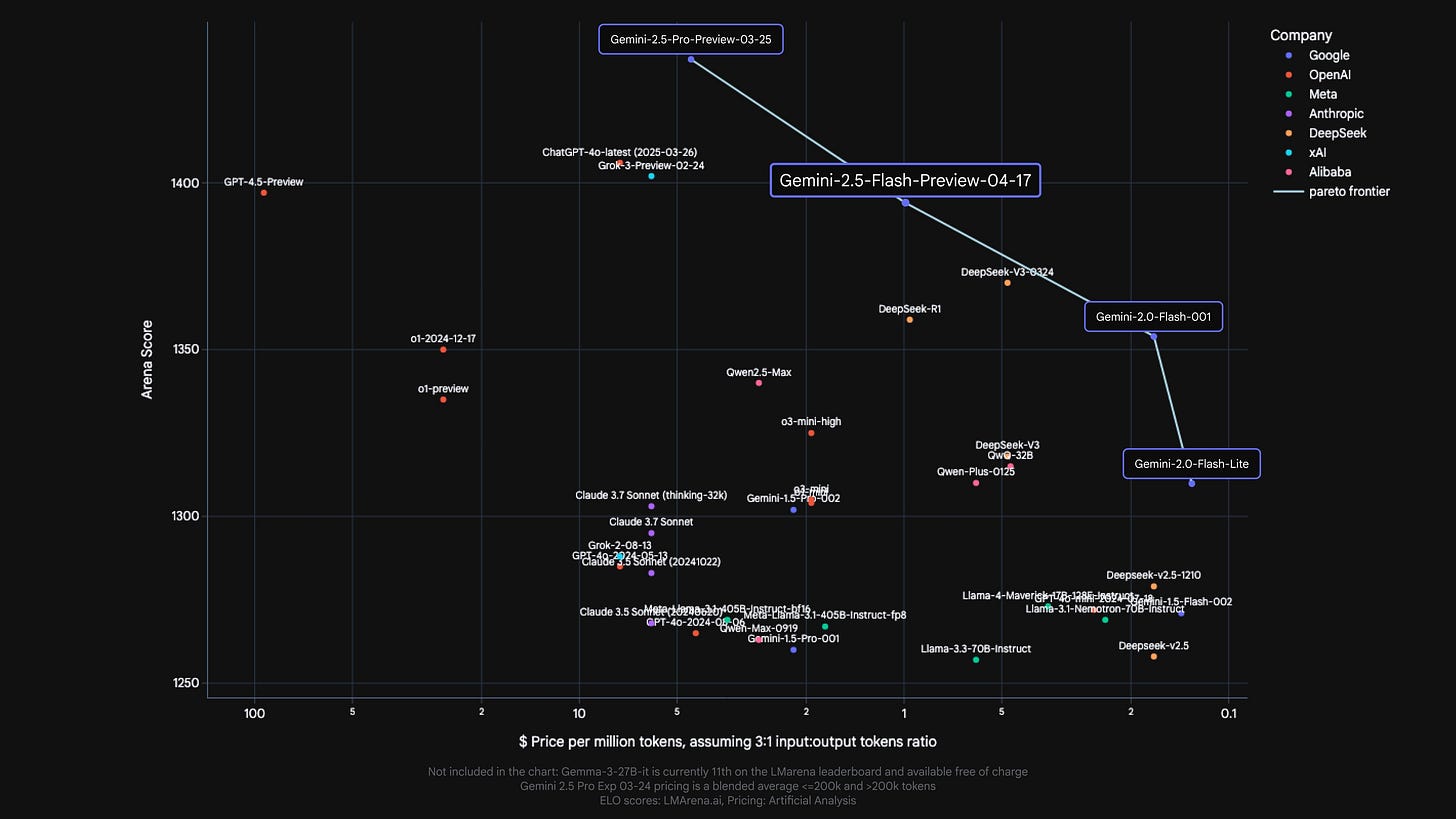

Google’s Gemini 2.5 Flash targets fast, smart AI

Google has released Gemini 2.5 Flash, a hybrid reasoning model that matches OpenAI’s o4-mini and beats Claude 3.5 Sonnet on STEM and reasoning benchmarks. It features stronger visual analysis and a new “thinking budget” that lets developers fine-tune cost vs. quality.

The model is available via API through Google AI Studio, Vertex AI, and as an experimental option in the Gemini app.

Why does it matter?

Gemini 2.5 Flash pushes AI toward smarter cost-performance tradeoffs, giving developers finer control without paying premium model prices. It’s a sign that AI design is moving past raw power to focus more on efficiency and flexibility.
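
To make the "thinking budget" idea concrete, here is a rough sketch of how a request with a capped budget might be built, using only the Python standard library. Everything specific here is an assumption rather than something from Google's announcement as quoted above: the REST path, the x-goog-api-key header, the thinkingConfig.thinkingBudget field, the 0 to 24576 token range, and the placeholder model id. Treat it as an illustration and confirm the details in the Gemini API docs.

```python
import json
import urllib.request

# Assumptions (not from this newsletter): REST path, header name,
# thinkingBudget field, and the 0-24576 token range.
BASE_URL = "https://generativelanguage.googleapis.com/v1beta/models"
MAX_THINKING_BUDGET = 24576


def build_flash_request(prompt: str, api_key: str, thinking_budget: int,
                        model: str = "gemini-2.5-flash") -> urllib.request.Request:
    """Build (but do not send) a generateContent request with a thinking budget."""
    # Clamp the budget so callers cannot exceed the assumed valid range.
    budget = max(0, min(int(thinking_budget), MAX_THINKING_BUDGET))
    payload = {
        "contents": [{"parts": [{"text": prompt}]}],
        "generationConfig": {"thinkingConfig": {"thinkingBudget": budget}},
    }
    return urllib.request.Request(
        f"{BASE_URL}/{model}:generateContent",
        data=json.dumps(payload).encode("utf-8"),
        headers={"x-goog-api-key": api_key, "Content-Type": "application/json"},
        method="POST",
    )


# A cheap request with thinking switched off, and a pricier one with room to reason.
quick = build_flash_request("Summarize this ticket in one line.", "KEY", 0)
deep = build_flash_request("Find the bug in this scheduler.", "KEY", 8192)
```

The design point is the tradeoff itself: a budget of 0 suits simple, latency-sensitive calls, while a larger budget buys more reasoning tokens (and cost) for harder problems.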

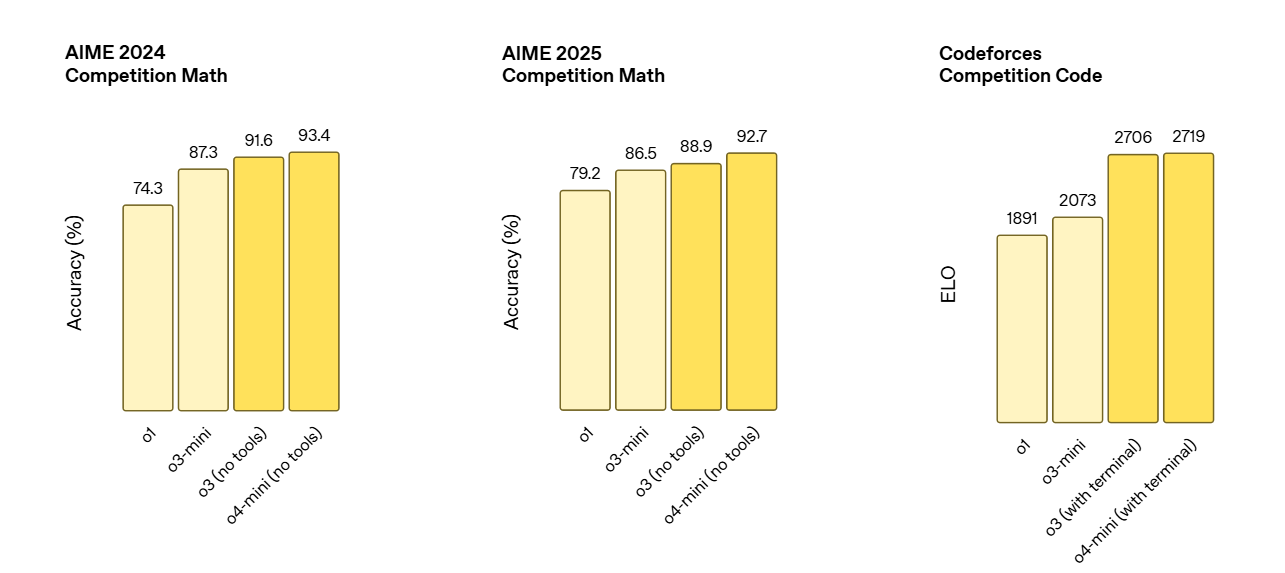

OpenAI’s o3 and o4-mini raise the bar for AI reasoning

OpenAI has unveiled o3 and o4-mini, its newest reasoning models, aimed at setting new standards across coding, math, science, and multimodal problem-solving. o3 pushes state-of-the-art performance, while o4-mini offers faster, more cost-efficient reasoning, even saturating tough benchmarks like AIME 2025 math.

Both models now use ChatGPT tools agentically and are the first to integrate “thinking with images.” OpenAI also introduced Codex CLI, an open-source terminal-based coding agent.

Why does it matter?

OpenAI’s new models blur the line between text, code, and visuals, hinting that future AI won’t just answer questions but will orchestrate tools and data across different mediums. It’s a step toward AI systems that can operate more autonomously, reasoning across domains, taking initiative, and functioning more like a human assistant than a passive tool.

OpenAI plans its own AI-powered social network

According to multiple sources, OpenAI is building a prototype of a social network that could either integrate into ChatGPT or launch as a standalone app. The early concept revolves around a social feed focused on ChatGPT’s image generation, with CEO Sam Altman quietly gathering outside feedback on the idea.

If launched, it would put OpenAI in direct competition with X and Meta, while giving it access to real-time user data that could sharpen future model training.

Why does it matter?

If past viral trends like the Ghibli-style images are any guide, OpenAI could build a massive user base fast and shift from chasing data to owning it.

Knowledge Nugget: What AI Can’t Replace: Intuition, Alignment, and Taste

In this sharp piece, Jean Hsu explains why fast AI tools won’t save early-stage product teams from building the wrong thing. While AI now drafts, prototypes, and brainstorms at lightning speed, it doesn’t help teams decide what not to build. That’s still on product leaders, and it’s where alignment, judgment, and taste come into play.

As Hsu notes, the teams that win won’t just ship faster; they’ll prune faster. AI helps with output, but only teams with a clear sense of direction can turn that output into something meaningful. Product strategy remains a human game, just with more powerful tools.

Why does it matter?

As AI takes over more execution work, the bottleneck shifts from doing to deciding. Teams that can’t align on what matters will drown in plausible-sounding output. In a world of AI-generated abundance, product taste, judgment, and shared focus become rare and therefore valuable.

What Else Is Happening❗

🛍️ OpenAI adds AI-powered shopping to ChatGPT Search, with visual product comparisons, review insights, and upcoming memory-based personalization.

🧠 Anthropic launches a “model welfare” research program to explore whether future AI systems could gain consciousness or deserve moral consideration.

🎨 Adobe expands Firefly with new high-res AI models, third-party integrations, collaborative tools, and an upcoming mobile app.

🎵 Google DeepMind upgrades its Music AI Sandbox with Lyria 2 for higher-quality generation, real-time editing, and expanded musician access.

📰 The Washington Post partners with OpenAI to bring article summaries and links directly into ChatGPT responses.

🏛️ UAE unveils plans to integrate AI into its lawmaking process, aiming to cut legislative development time by 70% through a new Regulatory Intelligence Office.

🧠 DeepMind researchers propose “streams”, real-world feedback loops for AI learning to move beyond the limits of human-generated training data.

🖥️ Microsoft’s Copilot Studio adds “computer use,” letting AI agents interact with websites and desktop apps directly through GUIs.

🔍 Anthropic upgrades Claude with autonomous web research and Google Workspace integration for deeper, context-rich answers.

New to the newsletter?

The AI Edge keeps engineering leaders & AI enthusiasts like you on the cutting edge of AI. From machine learning to ChatGPT to generative AI and large language models, we break down the latest AI developments and how you can apply them in your work.

Thanks for reading, and see you next week! 😊
