Hello Engineering Leaders and AI Enthusiasts!

This newsletter brings you the latest AI updates in just 4 minutes! Dive in for a quick summary of everything important that happened in AI over the last week.

And a huge shoutout to our amazing readers. We appreciate you 😊

In today’s edition:

📊 Anthropic’s Opus 4.5 Crushes Code Tests

💻 OpenAI’s Codex-Max takes on 24-hour coding

🚀 Google drops Gemini 3 AI upgrade

🧠 xAI’s Grok 4.1 gets a personality upgrade

🌐 Google’s Nano Banana Pro hits 4K AI visuals

💡 Knowledge Nugget: Treat AI-generated code as a draft, by Addy Osmani

Let’s go!

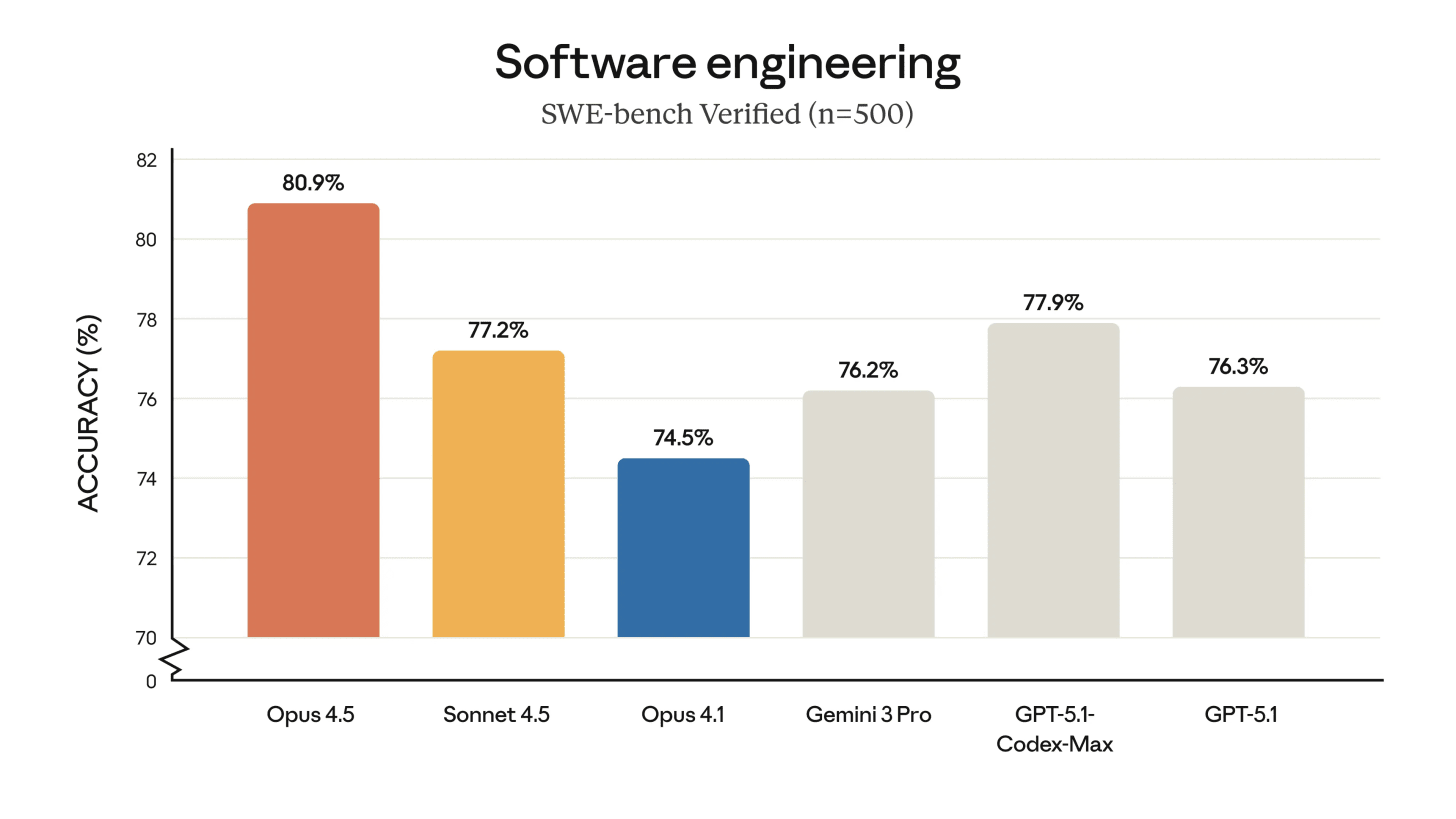

Anthropic’s Opus 4.5 Crushes Code Tests

Anthropic has dropped Claude Opus 4.5, its new flagship model, which goes head-to-head with OpenAI’s GPT-5.1 and Google’s Gemini 3. It is the first model to score above 80% on SWE-bench Verified, widely treated as the gold standard among AI coding benchmarks, and Anthropic says it also sets new records in tool use, reasoning, and problem-solving. On safety, Anthropic calls it its “most robustly aligned model” yet, and on performance it matches or beats Gemini 3 across multiple benchmarks.

Opus 4.5 comes with a 66% price cut compared to Opus 4.1 and uses tokens more efficiently. Anthropic also rolled out unlimited chat lengths, Claude Code integration in its desktop apps, and expanded access through its Chrome and Excel plugins.

Why does it matter?

Opus 4.5 lands in a crowded stretch for frontier AI, arriving right after GPT-5.1 and Gemini 3 hit the market. The price cut reads as Anthropic’s direct response to longstanding criticism that Claude costs too much, and it could pressure OpenAI and Google to revisit their own pricing strategies.

OpenAI’s Codex-Max takes on 24-hour coding

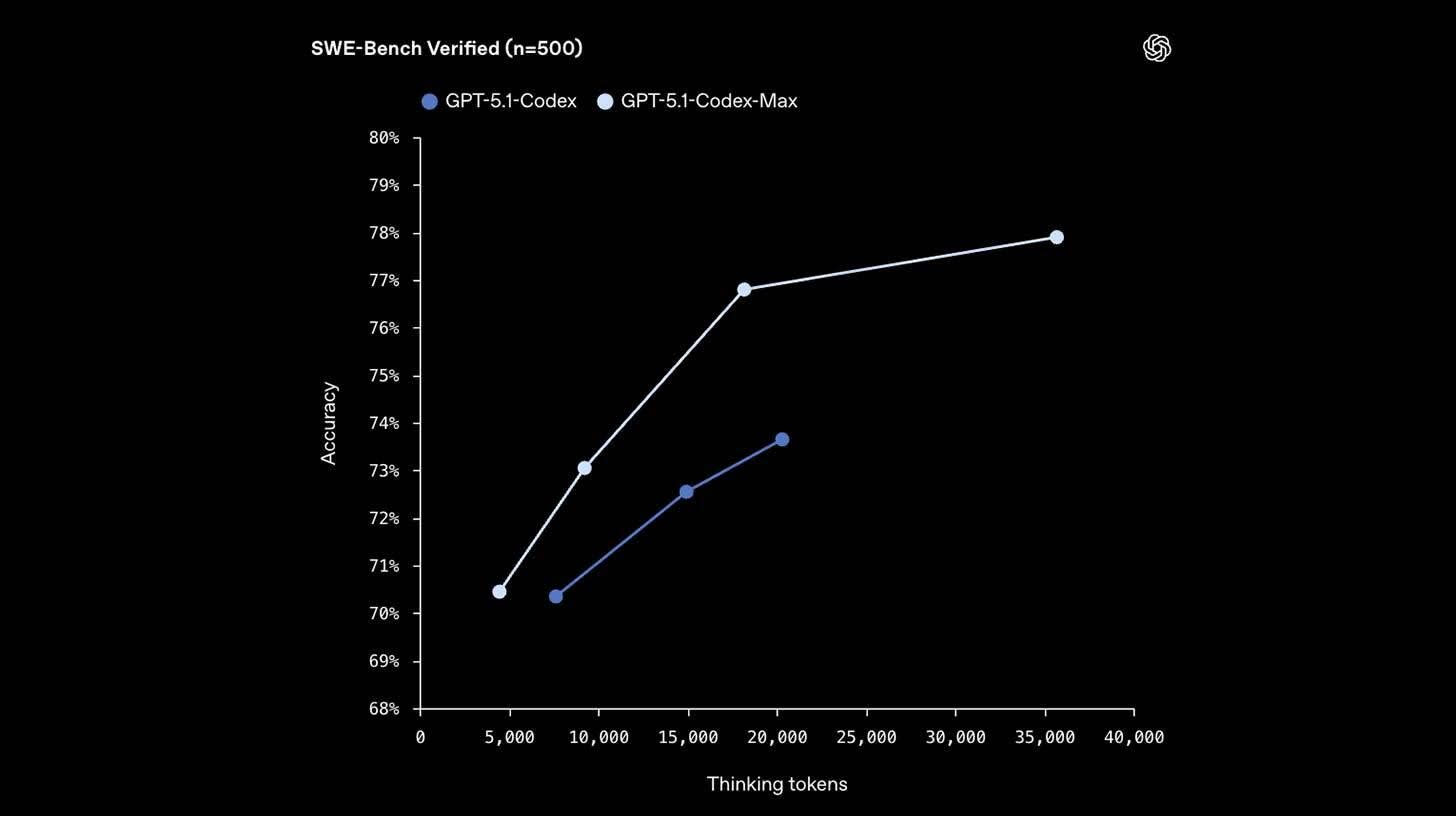

OpenAI has introduced GPT-5.1-Codex-Max, its newest agentic coding model, built for long, complex development tasks. The standout upgrade is a “compaction” system that prunes session history while preserving the important context, letting the model work across millions of tokens and keep coding for 24 hours or more without losing track of the project. OpenAI also reports sharp gains over GPT-5.1-Codex at high reasoning effort and says Codex-Max outperforms Google’s Gemini 3 Pro on key coding benchmarks.

OpenAI says the model is also faster and more efficient, using 30% fewer thinking tokens than GPT-5.1-Codex at the same reasoning effort while performing better on real-world development tasks.
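For readers curious what “compaction” can look like in practice, here is a minimal, hypothetical Python sketch. It is not OpenAI’s implementation: it assumes a simple list of chat messages, uses word counts as a stand-in for real tokens, and uses a made-up `summarize` helper in place of a model-written summary. Once the history exceeds a token budget, older turns are folded into a single summary message while the most recent turns stay verbatim.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Message:
    role: str
    text: str


def count_tokens(text: str) -> int:
    # Assumption: whitespace-separated words stand in for real tokenizer tokens.
    return len(text.split())


def summarize(messages: list[Message], words_per_message: int = 5) -> Message:
    # Hypothetical placeholder: a real agent would ask a model to write the summary.
    # Here we simply keep the first few words of each older message.
    gist = "; ".join(" ".join(m.text.split()[:words_per_message]) for m in messages)
    return Message("system", "Summary of earlier work: " + gist)


def compact(history: list[Message], budget: int, keep_recent: int = 2) -> list[Message]:
    """Fold older turns into one summary once the history exceeds `budget` tokens."""
    total = sum(count_tokens(m.text) for m in history)
    if total <= budget or len(history) <= keep_recent:
        return history  # Within budget, or nothing old enough to fold: keep as-is.
    split = len(history) - keep_recent
    older, recent = history[:split], history[split:]
    return [summarize(older)] + recent


history = [
    Message("user", "Refactor the billing module and keep tests green"),
    Message("assistant", "Read billing code and found three duplicated tax helpers"),
    Message("assistant", "Merged the helpers into one function and updated callers"),
    Message("user", "Now add retries to the payment client"),
    Message("assistant", "Added exponential backoff with a cap of five attempts"),
]
compacted = compact(history, budget=20)
for m in compacted:
    print(f"{m.role}: {m.text}")
```

The design choice here is the simplest one: keep recent turns exact (they matter most for the next step) and trade older detail for space. A production agent would presumably compact repeatedly as a session grows, with a far better summarizer.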

Why does it matter?

Gemini 3 grabbed most of the attention, but Codex-Max quietly pushes coding performance higher without a major model overhaul. Its 24-hour sessions also stretch how long top AI models can stay locked into a single task.

Google drops Gemini 3 AI upgrade

Google has launched Gemini 3, its new flagship model, which sets record scores on major reasoning benchmarks, surpassing earlier marks set by GPT-5. It performs strongly in scientific knowledge, math, multimodal reasoning, and tool use, while landing just behind Claude Sonnet 4.5 in coding. Gemini 3 also introduces generative UI capabilities, powering features like AI Mode in Search, which builds visual layouts on the fly.

Alongside the launch, Google introduced Antigravity, a free platform designed for building agentic coding workflows. It offers browser control, asynchronous execution, and orchestration across multiple agents.

Why does it matter?

Gemini 3 marks a real comeback moment for Google, with scores that finally put it ahead of OpenAI on key reasoning tests. Combined with a wide product ecosystem and a fresh push into agents, it signals that Google is ready to shape the next phase of AI.

xAI’s Grok 4.1 gets a personality upgrade

xAI has introduced Grok 4.1, an update to its flagship model that focuses heavily on creativity and emotional intelligence rather than pure logical reasoning. In early tests, it posted the highest emotional intelligence score among major models, responding with more empathy, tone awareness, and personality. It also ranked No. 1 for user preference on LMArena under the codename “quasarflux.”

Reliability improved sharply too: the hallucination rate dropped from 12% to just 4%, cutting factual mistakes by roughly two-thirds. Grok 4.1 also scored near the top on creative writing, placing right behind GPT-5.1 on the Creative Writing v3 benchmark.

Why does it matter?

While everyone watches the big model launches from Big Tech, xAI is carving out its own moment by doubling down on creativity and emotional tone: the kind of “vibe” upgrades that everyday users often notice more than raw reasoning gains.

Google’s Nano Banana Pro hits 4K AI visuals

Google just unveiled Nano Banana Pro, a next-gen image model that combines 4K output, multi-person identity preservation, and professional-level editing tools. It can juggle up to 14 visual references at once while keeping character identities consistent for up to five people, making complex compositions actually manageable.

Because it can pull live data from Google Search, the model helps keep visuals and text accurate and contextually relevant, which makes it useful for designers, educators, and developers working on high-detail projects.

Why does it matter?

Nano Banana Pro marks a major leap in visual AI, largely cracking the text-rendering problem that has long plagued image generators. Its biggest differentiator is that it acts like a design assistant that understands context, not just prompts.

Enjoying the latest AI updates?

Refer your pals to subscribe to our newsletter and get exclusive access to 400+ game-changing AI tools.

When you use the referral link or the “Share” button on any post, you’ll get credit for any new subscribers. Just send the link by text or email, or share it on social media with friends.

Knowledge Nugget: Treat AI-generated code as a draft

In this article, Addy Osmani points out that developers are shipping more AI-generated code than ever, but many are skipping the step that matters most: reading it. AI can produce clean, confident-looking snippets that pass tests yet hide subtle flaws like hallucinated functions, insecure patterns, and brittle edge-case handling. When developers stop reviewing these drafts, they lose the ability to connect the code’s behavior back to its intent, making it harder to debug, extend, or even trust their own work.

The effects spread well beyond individual developers. Code reviews are getting heavier as AI inflates diff sizes, juniors submit PRs they can’t fully explain, and teams struggle with “almost correct” code that slows debugging. Senior engineers report that over-reliance on AI also erodes core skills: developers stop reading docs, stop reasoning through problems, and come to depend on instant AI answers instead of building real understanding.
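To make “almost correct” concrete, here is a small, hypothetical Python example (not taken from Osmani’s article). `chunk_draft` is the kind of tidy helper an assistant might produce: it passes a happy-path test, but reading the `range` bounds shows it silently drops a trailing partial chunk. `chunk_draft` and `chunk_reviewed` are illustrative names only.

```python
from __future__ import annotations


def chunk_draft(items: list, size: int) -> list[list]:
    """Split items into consecutive chunks of `size` (draft: looks right, isn't)."""
    # The stop value len(items) - size + 1 skips any trailing partial chunk.
    return [items[i:i + size] for i in range(0, len(items) - size + 1, size)]


def chunk_reviewed(items: list, size: int) -> list[list]:
    """Split items into consecutive chunks of `size`, keeping a final partial chunk."""
    if size <= 0:
        raise ValueError("size must be positive")
    return [items[i:i + size] for i in range(0, len(items), size)]


# A happy-path test that both versions pass:
assert chunk_draft([1, 2, 3, 4], 2) == chunk_reviewed([1, 2, 3, 4], 2) == [[1, 2], [3, 4]]

# The edge case a careful read catches:
print(chunk_draft([1, 2, 3, 4, 5], 2))     # [[1, 2], [3, 4]]  (the 5 is silently lost)
print(chunk_reviewed([1, 2, 3, 4, 5], 2))  # [[1, 2], [3, 4], [5]]
```

The happy-path test is exactly the kind of check that lets a flaw like this through; treating the snippet as a draft and reading it line by line is what catches it.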

Why does it matter?

Treating AI output as final code creates brittle systems and weaker engineers. Teams that treat AI as a first draft (not an author) ship more reliable software, maintain accountability, and preserve the engineering intuition that keeps complex systems working.

What Else Is Happening❗

🎮 DeepMind unveiled SIMA 2, a Gemini-powered AI agent that teaches itself new skills in unseen virtual worlds, reaching up to 75% task success vs. SIMA 1’s 30%.

🎭 2wai launched “HoloAvatars,” AI avatars of deceased loved ones built from just minutes of footage, letting users interact with digital versions of relatives across life events.

🧠 Baidu released ERNIE 5, its new omnimodal model, alongside Famou, a self-evolving AI agent designed to discover optimal solutions in complex scenarios.

⛅ DeepMind unveiled WeatherNext 2, a weather forecasting AI that generates predictions 8x faster and can simulate hundreds of possible weather scenarios in a minute.

🤖 Microsoft launched Agent 365, a platform for managing, securing, and governing AI agents, with capabilities such as an agent registry and performance analytics.

🤝 Saudi Arabia’s HUMAIN partnered with xAI, Nvidia, and AWS to deploy 600K GPUs and build data centers, while backing Luma AI’s $900M raise for a 2GW supercluster.

💬 OpenAI rolled out group chat to all tiers, letting up to 20 users collaborate with ChatGPT simultaneously while keeping group sessions isolated from personal memory.

🎨 Black Forest Labs launched Flux.2, a new image model family that keeps outputs consistent across up to 10 reference images and generates 4MP images, undercutting Google’s Nano Banana Pro on pricing.

New to the newsletter?

The AI Edge keeps engineering leaders & AI enthusiasts like you on the cutting edge of AI. From machine learning to ChatGPT to generative AI and large language models, we break down the latest AI developments and how you can apply them in your work.

Thanks for reading, and see you next week! 😊
