Hello Engineering Leaders and AI Enthusiasts!

This newsletter brings you the latest AI updates in just 4 minutes! Dive in for a quick summary of everything important that happened in AI over the last week.

And a huge shoutout to our amazing readers. We appreciate you 😊

In today’s edition:

🎬 OpenAI launches Sora 2 with audio and Cameos

💸 a16z reveals where startups really spend on AI

🧠 Samsung researcher’s 7M AI beats DeepSeek and Gemini

⚖️ OpenAI says GPT-5 cuts political bias by 30%

🖼️ Microsoft debuts in-house image model

💡 Knowledge Nugget: What’s the role of trust in AI? by Marko Katavic

Let’s go!

OpenAI launches Sora 2 with audio and Cameos

OpenAI has unveiled Sora 2, the newest version of its AI video generator that now produces realistic videos with synchronized audio and dialogue. Beyond smoother physics and improved scene transitions, Sora 2 can render longer 5–10 second clips that maintain visual and narrative coherence across complex settings.

Alongside the model, the company rolled out a Sora social app that lets users create, remix, and insert themselves into AI-generated scenes through a new “Cameos” feature. Currently limited to North America, the app will expand globally, with Sora 2 Pro and API access planned for developers soon.

Why does it matter?

Sora 2 is a major leap, bridging the gap between AI generation and real cinematic storytelling. The Cameos feature, on the other hand, could fuel a wave of viral, creator-led AI content, but it also tests whether AI-native social apps can sustain engagement beyond the initial hype.

a16z reveals where startups really spend on AI

a16z’s new ‘AI Application Spending Report’ offers a rare peek into where real startup dollars are going. Drawing from transaction data of over 200,000 businesses, the study found that AI startups spend the most on OpenAI and Anthropic, followed by a rising wave of creative and coding tools capturing a meaningful share.

Platforms like ElevenLabs, Canva, and Replit are emerging as go-to picks for startups investing in generative content and developer productivity. Beyond hype, the data suggests that AI adoption is shifting from experimentation to operational integration, with startups paying for tools that offer tangible workflow gains.

Why does it matter?

OpenAI and Anthropic topping the list is expected. The real signal is the rise of creative and vibe coding tools, which suggests the AI boom is quietly shifting from infrastructure to execution and ROI.

Samsung researcher’s 7M AI beats DeepSeek and Gemini

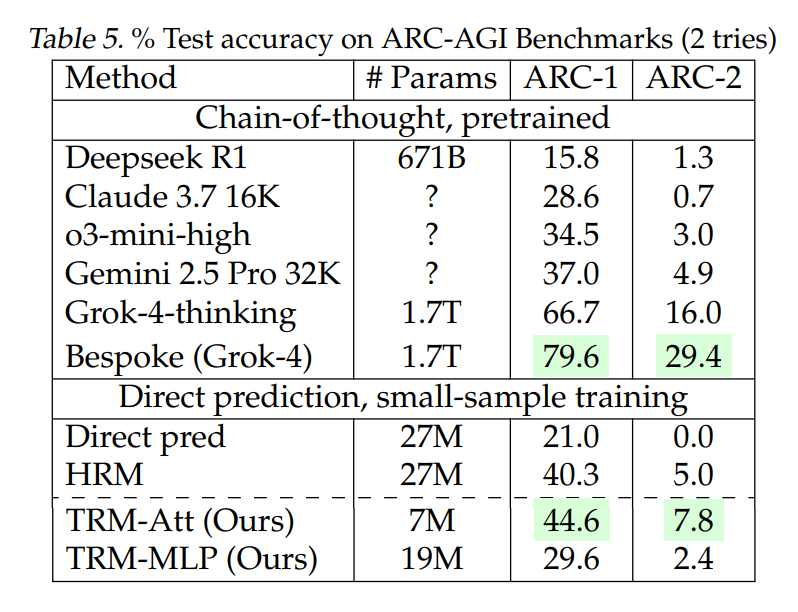

Samsung researcher Alexia Jolicoeur-Martineau unveiled the Tiny Recursion Model (TRM), a 7M-parameter system that surprisingly outperformed models thousands of times larger on complex reasoning benchmarks like ARC-AGI.

Rather than generating answers in a straight line, TRM “thinks in loops,” repeatedly drafting, critiquing, and refining its reasoning up to 16 times through an internal scratchpad process.
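To make the "thinks in loops" idea concrete, here is a toy sketch of a draft, critique, refine cycle capped at 16 passes. It is only an analogy and not TRM's actual architecture: the function name `refine_in_loops`, the `scratchpad` list, and the square-root task are all assumptions chosen for illustration, and the refine step is a plain Newton update rather than a learned network.

```python
def refine_in_loops(target, draft, max_steps=16, tolerance=1e-9):
    """Toy draft -> critique -> refine loop (illustrative only, not TRM itself)."""
    scratchpad = []  # hypothetical stand-in for an internal scratchpad
    for step in range(1, max_steps + 1):
        # Critique: how far is the draft from satisfying draft**2 == target?
        error = draft * draft - target
        scratchpad.append((step, draft, error))
        if abs(error) <= tolerance:
            break  # the draft is good enough, so stop early
        # Refine: nudge the draft using the critique (a Newton step).
        draft = draft - error / (2 * draft)
    return draft, scratchpad


answer, notes = refine_in_loops(target=2.0, draft=1.0)
print(f"answer after {len(notes)} passes: {answer:.6f}")
```

The point of the sketch is the shape of the loop: a small, fixed procedure gets reused on its own output, so quality comes from repeated passes rather than from a bigger single pass.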

Why does it matter?

As the AI race tilts toward ever-larger models, TRM shows that progress may come from rethinking how models reason, not how big they get. While its wins are limited to reasoning puzzles for now, it points to a future where iteration and self-reflection could become the real benchmarks of intelligence.

OpenAI says GPT-5 cuts political bias by 30%

OpenAI released new research measuring how its latest model, GPT-5, handles politically sensitive topics. Using 500 test prompts across 100 issues, the study found a 30% drop in bias compared to earlier models, particularly in emotionally loaded questions. The team identified three main bias types: models expressing personal opinions, highlighting one-sided perspectives, or amplifying the user’s tone.

Interestingly, analysis of live ChatGPT traffic found that less than 0.01% of its responses exhibited political bias. Still, the study notes that responses to strongly liberal prompts tend to show slightly higher bias than conservative ones across all tested models.

Why does it matter?

With millions using ChatGPT, even tiny biases can influence public understanding of political issues. OpenAI’s improvements help, but emotionally or politically charged prompts can still reinforce users’ preexisting views, so these gains need to be backed by transparency and independent audits.

Microsoft debuts in-house image model

Microsoft AI has launched MAI-Image-1, the company’s first internally developed image generation model, now ranked among the top 10 on LMArena. Trained with a focus on creative flexibility and photorealism, the model was built using curated datasets and evaluation methods shaped by feedback from professional creators.

MAI-Image-1 stands out for its lighting accuracy, scene realism, and visual diversity, while maintaining faster generation speeds than many larger competitors.

Why does it matter?

MAI-Image-1 continues Microsoft’s growing pattern of building in-house models, following MAI-Voice-1 and MAI-1-preview. These models may not rival GPT yet, but the direction is clear: Microsoft is quietly laying the groundwork to rely less on OpenAI and more on its own AI stack.

Enjoying the latest AI updates?

Refer your pals to subscribe to our newsletter and get exclusive access to 400+ game-changing AI tools.

When you use the referral link above or the “Share” button on any post, you’ll get the credit for any new subscribers. All you need to do is send the link via text or email or share it on social media with friends.

Knowledge Nugget: What’s the role of trust in AI?

In this piece, Marko Katavic explores how AI is disrupting one of humanity’s oldest social mechanisms: ‘trust’. For millennia, humans have relied on trust as a shortcut to navigate persuasion: we trust experts, institutions, and brands, so we don’t have to verify everything ourselves.

But AI breaks this mental model. People can’t easily decide whether to treat generative AI as a tool, a media source, or a thinking partner, and each requires a different kind of trust. Most users apply the wrong one, assuming mechanical reliability where subjective judgment is actually at play.

Why does it matter?

As humans delegate more decisions to AI, misplaced trust can lead to poor advice, misinformation, or exploitation. Until we evolve a new “trust heuristic” for AI, the biggest danger isn’t model bias or hallucination. It’s the illusion that these systems deserve our confidence.

What Else Is Happening❗

🔥 OpenAI will launch a more human-like ChatGPT and introduce age-gated features, including adult content, in December.

⚡ OpenAI teamed up with Broadcom to build 10GW of custom AI chips by 2029, marking its biggest step yet toward self-designed compute infrastructure.

🏢 Google launched Gemini Enterprise, a no-code AI agent platform for businesses to build, deploy, and manage workplace assistants.

🧩 Reuters found weekly AI use has nearly doubled worldwide, led by ChatGPT, but 62% of people still reject fully AI-generated news.

🖱️ Google launched Gemini 2.5 Computer Use, an API model that autonomously clicks, types, and navigates web apps, beating OpenAI and Anthropic on benchmarks.

🧬 Duke University unveiled TuNa-AI, a robotic platform that designs drug nanoparticles, boosting cancer treatment efficiency by 43% in lab tests.

⚡ OpenAI signed a multi-year deal with AMD for 6GW of compute and up to a 10% equity stake, expanding its AI infrastructure beyond Nvidia.

🚀 OpenAI’s Dev Day 2025 unveiled ChatGPT app integrations, agent-building tools, and API access to GPT-5 Pro, Sora 2, and new real-time voice models.

🎨 Google released PASTA, an AI that learns your personal aesthetic style through repeated image choices, no prompt engineering needed.

💻 Google released Jules Tools, a CLI and API that lets developers run its autonomous coding agent directly from terminals and connect it to workflows.

New to the newsletter?

The AI Edge keeps engineering leaders & AI enthusiasts like you on the cutting edge of AI. From machine learning to ChatGPT to generative AI and large language models, we break down the latest AI developments and how you can apply them in your work.

Thanks for reading, and see you next week! 😊
