
If you enjoy my effort of covering, curating and commenting on this report and think it may be useful to someone, feel free to share it:

Good Morning,

I’ve been checking out some new AI related reports and it’s already that time again: the State of AI Report 2025 has come. By the way, I share a lot of AI-news related tidbits over the week on Notes, in case you are interested in following them.

Now in its eighth year, the State of AI Report 2025 is reviewed by leading AI practitioners in industry and research. Let’s state clearly that this is a report by a Venture Capital firm that invests in AI startups. It’s been around a while and is produced by Air Street Capital’s Nathan Benaich and his team (we’ll be reviewing this report in some depth today).

- We also have their State of AI Compute Index to check out.
- Take their survey: 👋 Help shape the State of AI Report! Here.
- SemiAnalysis has devised a new benchmark, InferenceMAX, where Nvidia appears to lead.

On a recent earnings call, Nvidia CEO Jensen Huang estimated that between $3 trillion and $4 trillion will be spent on AI infrastructure by the end of the decade.

I’m hoping you’ll read this report summary/synthesis in the context of the demand for compute (surge in 2025) that’s rising exponentially due to all of these new capital allocations into datacenters. To get an idea of the scale of all of this:

Reports estimate that AI-related capital expenditures surpassed the U.S. consumer as the primary driver of economic growth in the first half of 2025, accounting for 1.1% of GDP growth. – Source.

Before we get into the main report and some of its important topics we still need to talk about the AI bull market bubble dynamics (yet again!):

This article is way too long to read in your inbox or on mobile:

AI Bubble Talk 🌊

According to Madison Mills (Axios), Goldman Sachs strategist Peter Oppenheimer lays out three hallmark bubble traits:

- Rapidly rising asset prices (check).
- Extreme stock valuations (check).
- Rising systemic risk driven by increased leverage (getting there!).

Understanding Jevons Paradox in context of AI Compute 💭

Jevons paradox, first described by economist William Stanley Jevons in the 19th century, observes that technological improvements in the efficiency of resource use often lead to increased overall consumption of that resource, rather than decreased. The classic example is the steam engine: as engines became more coal-efficient, the cost of using steam power dropped, spurring broader adoption in industry and transportation.
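To make the mechanism concrete, here is a minimal sketch under an assumed toy model: demand for useful work follows a constant-elasticity curve, and the `elasticity` and `base_demand` values are illustrative assumptions, not figures from the report. When demand is elastic (elasticity above 1), doubling efficiency raises total resource use; when it is inelastic, total use falls.

```python
def total_resource_use(efficiency: float, elasticity: float,
                       base_demand: float = 100.0) -> float:
    """Resource consumed under an assumed constant-elasticity demand curve."""
    # Each unit of useful work needs 1/efficiency units of resource,
    # so the effective price of work falls as efficiency rises.
    price_of_work = 1.0 / efficiency
    # Constant-elasticity demand: cheaper work means more work is demanded.
    work_demanded = base_demand * price_of_work ** (-elasticity)
    # Convert the demanded work back into resource units.
    return work_demanded / efficiency


baseline = total_resource_use(efficiency=1.0, elasticity=1.5)
elastic = total_resource_use(efficiency=2.0, elasticity=1.5)
inelastic = total_resource_use(efficiency=2.0, elasticity=0.5)
print(f"baseline={baseline:.1f} elastic={elastic:.1f} inelastic={inelastic:.1f}")
```

In this toy setup the elastic case ends up consuming more than the baseline even though each unit of work is twice as cheap in resource terms, which is the Jevons pattern. Whether AI compute demand is really that elastic is an empirical question the sketch does not answer.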

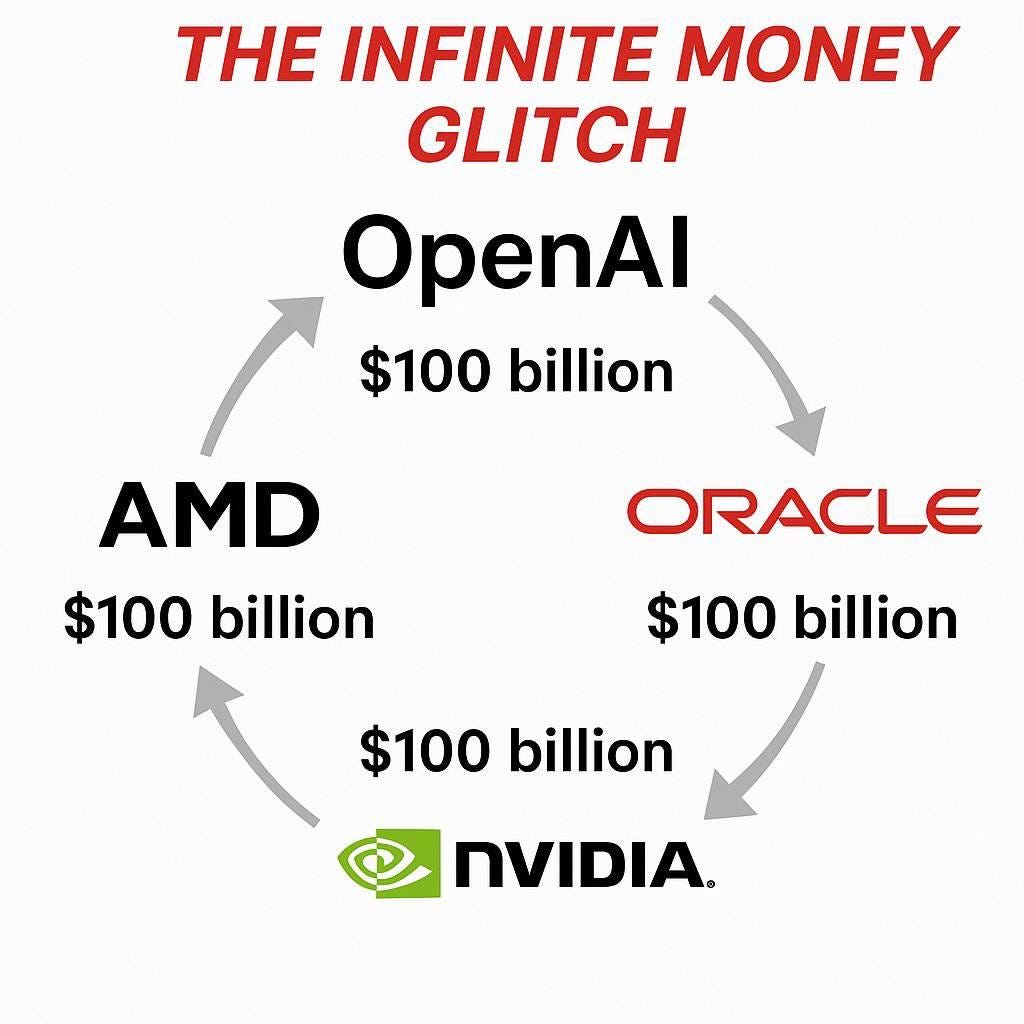

In AI Infrastructure and compute, this pattern looks set to play out over the 2025 to 2030 period. How OpenAI and Nvidia commoditize each other and their key partners is fascinating. Demand for AI compute (primarily the processing power from GPUs, TPUs, and data centers needed for training and running models) has exploded since 2024 and seems to be following this pattern.

Trajectory

Bubbles will “develop when there is a combined surge in stock prices and valuations to an extent that the aggregate value of companies associated with the innovation exceeds the future potential cash flows that they are likely to generate,” Oppenheimer says. Put another way:

EROOM’s Law

Originally from pharma R&D1, but the pattern generalises: invention accelerates, adoption drags. The faster the invention cycle, the wider the gap between what tech can do and what organisations can absorb. Most companies live on the adoption curve, not the invention curve. If we behave like it is 1999, we’ll burn money on theatre.

Tycoons view Positive Bubbles

“Positive bubbles.” Mark Zuckerberg and Jeff Bezos’ take may be useful: sometimes we over-invest in a frontier, many bets fail, and the rails remain. Think railways, electrification, and the web after 2000. The crash hurt, the infrastructure stayed. In that sense you can get a positive bubble that seeds the next S-curve. Scaling-law logic points the same way. Each wave lays foundations for the next.

- The State of AI Report 2025 is backwards looking, so it doesn’t tell us much that we don’t already know. Still, it’s always very interesting, and there is a video that walks us through it.

AI Compute is King 👑

In the context of 2025’s AI compute demands, advancements in hardware and algorithms, such as NVIDIA’s optimized architectures and cost-effective model training (e.g., DeepSeek’s R1 at $5 million), have lowered per-operation costs and made adoption easier across enterprises and research. This has amplified total resource needs: U.S. AI-related power consumption is projected to triple by 2028, and an estimated $6.7 trillion in data center investment will be needed by 2030, underscoring the need for sustainable energy sources such as nuclear to support that growth. The demand for compute now drives a lot of the narrative.

Microsoft’s $1 billion investment in OpenAI in 2019 set this off and is one of the key historical catalysts. This doesn’t happen without Microsoft. Since Generative AI increases Cloud growth and sales, BigTech provided the catalyst for the demand for AI compute that is so pervasive just six years later.

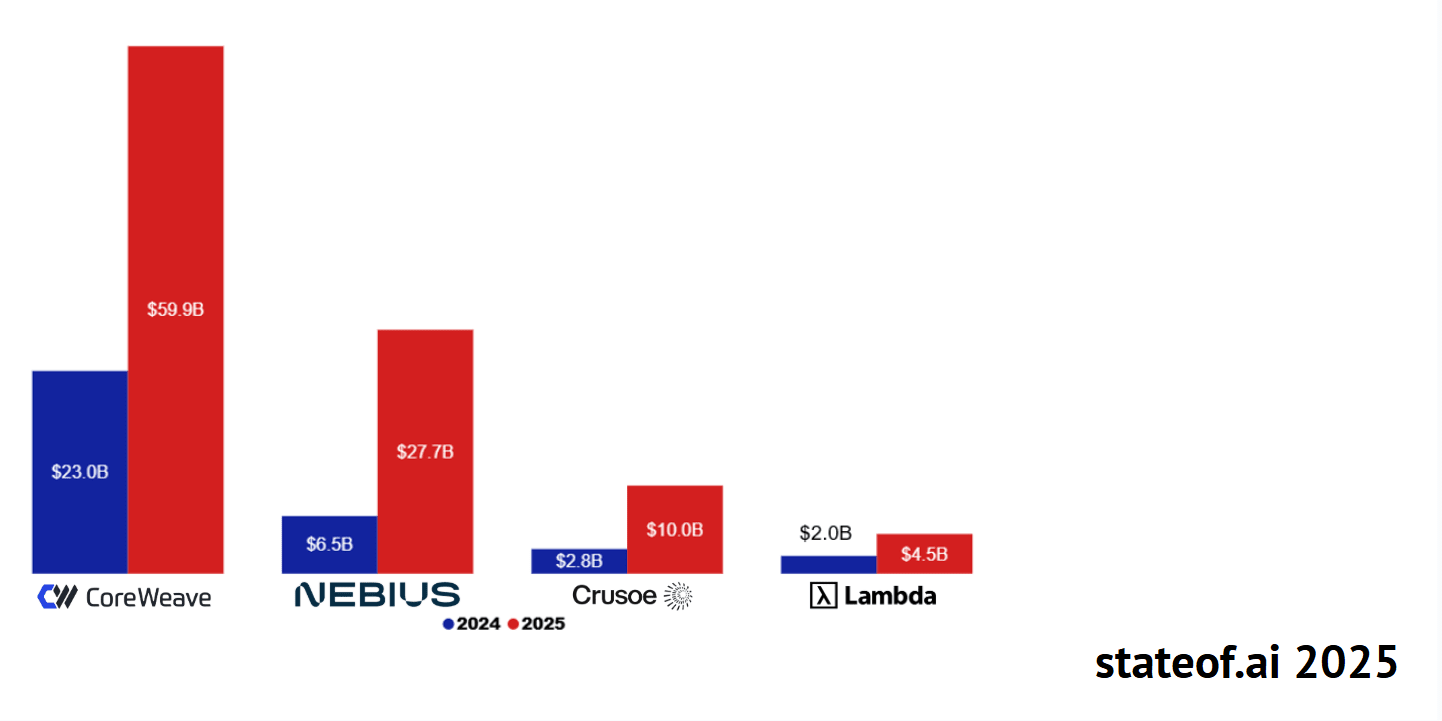

In this “AI compute is King” world, you can add Oracle, TSMC and Broadcom to the magnificent seven (which already includes Nvidia) to get the Leading Ten. Add how fast OpenAI, Anthropic and xAI are pushing AI Infrastructure and Gen AI innovation, and you have a lot of capital flowing and scaling globally, which makes the demand for AI compute insatiable in the 2025 to 2030 period. This will mean more leveraged debt. Alongside the Leading Ten and the big three of BigAI, there are other major LLM makers to watch, like Thinking Machines and Reflection AI on the open-source models front. So that’s ten BigTech companies and five major U.S. BigAI players: fifteen major companies that will spur more demand for compute, along with their various proxies like CoreWeave, Nebius, Lambda, and about ten more crypto miners pivoting to become GPU renters.

- BigTech (magnificent seven) with a semiconductor boom (Nvidia, TSMC, Broadcom).
- Rise of BigAI: OpenAI, Anthropic, xAI, Thinking Machines (who famously lost one of their co-founders to Meta recently) and Reflection AI.
- Neo Clouds, AI chip makers, Hyperscalers: Oracle, AMD, CoreWeave, Nebius, Softbank, etc. Roughly 20 companies are partnering together to push the demand for AI compute faster.

Headlines & Geopolitics

China tightened export controls on critical rare-earth metals on Thursday. On Friday, October 10th, President Donald Trump revived threats to hike tariffs against China, announcing an additional 100% tariff on goods from China, on top of the 30% tariffs already in effect, starting November 1 or sooner. So that’s 130%. Trump also said that on the same date the U.S. would impose export controls on “any and all critical software.” The U.S. and China are in heated trade negotiations. Around 70% of the global supply of rare-earth minerals comes from China.

With the demand for compute being front and center, energy is now considered part (interview with CNBC) of the AI stack.

Let’s take a look inside this report together.

The sections that most interest us for understanding the state of AI late in 2025 are:

- Research: Technology breakthroughs and their capabilities.
- Industry: Areas of commercial application for AI and its business impact.
- Politics: Regulation of AI, its economic implications and the evolving geopolitics of AI.
- Safety: Identifying and mitigating catastrophic risks that highly-capable future AI systems could pose to us.

Some Key takeaways 🌌 (looking back)

- OpenAI retains a narrow lead at the frontier, but competition has intensified: Meta relinquishes the mantle, while China’s DeepSeek, Qwen, and Kimi close the gap on reasoning and coding tasks, establishing China as a credible #2.
- Reasoning (e.g. AI reasoning models) defined the year, as frontier labs combined reinforcement learning, rubric-based rewards, and verifiable reasoning with novel environments to create models that can plan, reflect, self-correct, and work over increasingly long time horizons.
- Optimistically: AI has the potential to become a scientific collaborator, with systems like DeepMind’s Co-Scientist and Stanford’s Virtual Lab autonomously generating, testing, and validating hypotheses. In biology, Profluent’s ProGen3 showed that scaling laws now apply to proteins too.

-

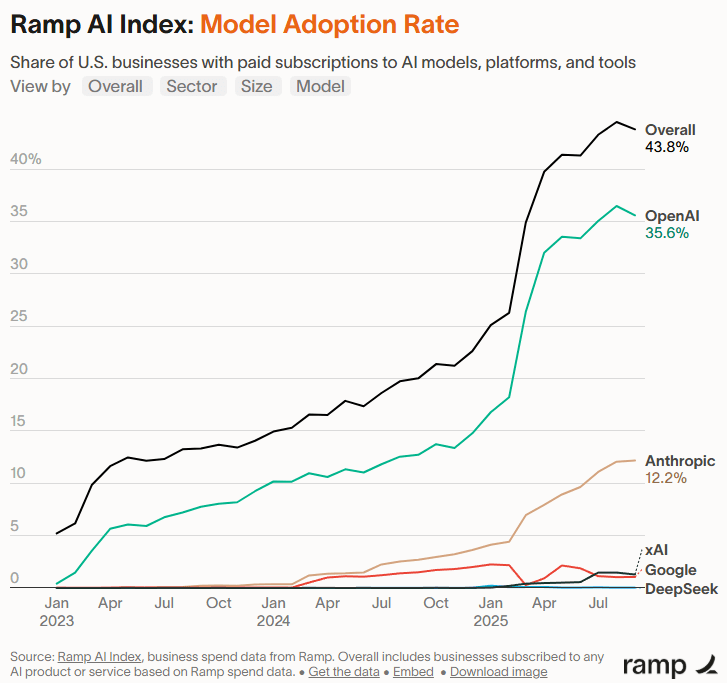

Younger companies are adopting AI more fluently with: Commercial traction accelerating sharply. Forty-four percent of U.S. businesses now pay for AI tools (up from 5% in 2023), average contracts reached $530,000, and AI-first startups grew 1.5× faster than peers, according to Ramp and Standard Metrics.

-

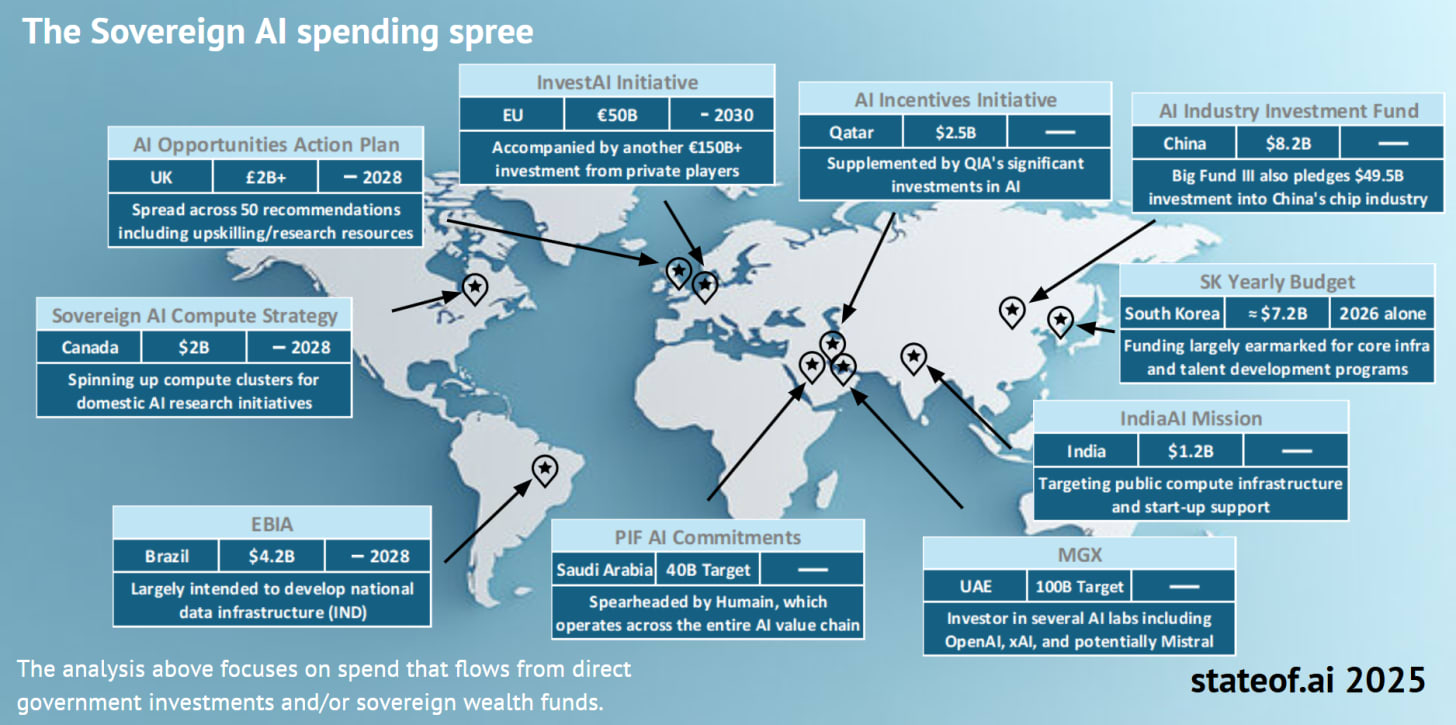

The industrial era of AI has begun. Multi-GW data centers like Stargate signal a new wave of compute infrastructure backed by sovereign funds from the U.S., UAE, and China, with power supply emerging as the new constraint. AI geopolitics is moving the needle where the U.S. has leaned (2024-2025) into “America-first AI,” Europe’s AI Act stumbled, and China expanded its open-weights ecosystem and domestic silicon ambitions.

Nathan is the General Partner of Air Street Capital, a venture capital firm investing in AI-first companies. He’s had quite a good run of late in the UK. (I’m not affiliated with either, this overview is just for my AI enthusiast readers).

Thanks to some of the people who put together the report: Zeke Gillman, Nell Norman, and Ryan Tovcimak.

The report looks back over roughly September 2024 to September 2025, so it is relevant for history of AI buffs. I do add in my own commentary, so read the source if you want the unfiltered academic tilt.

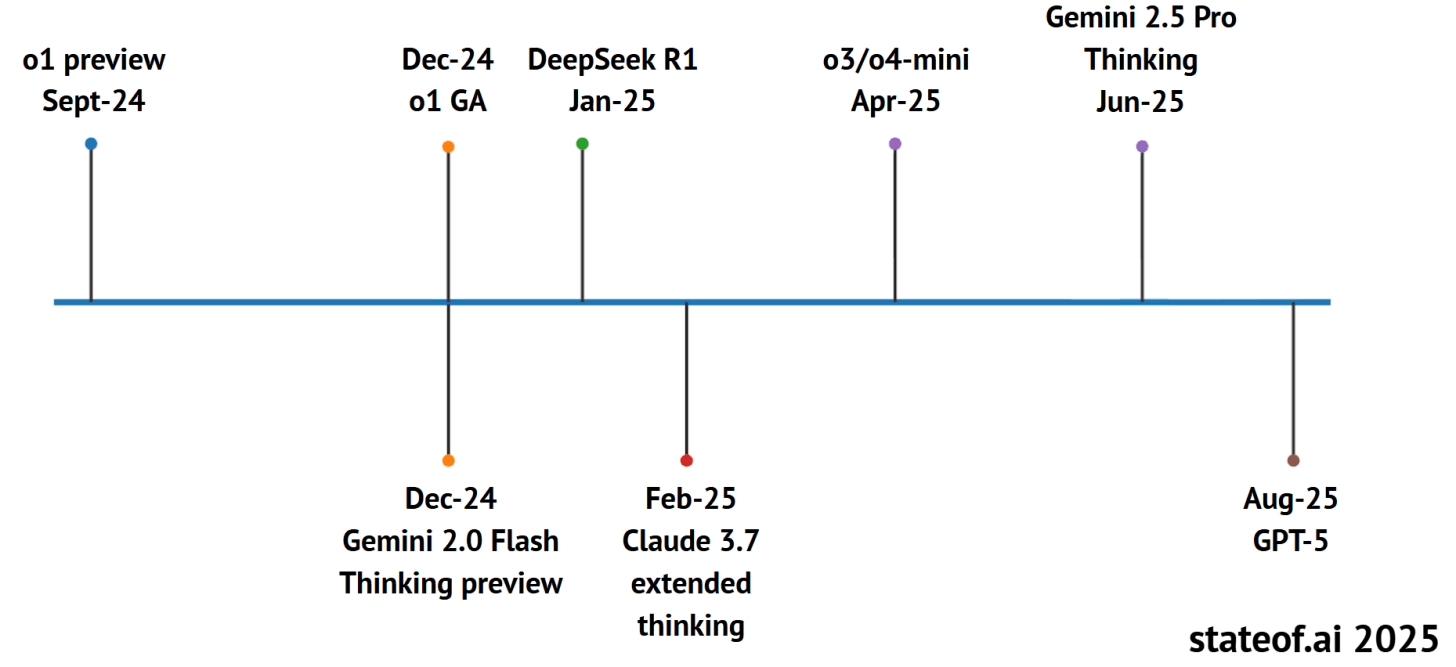

The Test-Time Compute Era begins with “Reasoning Models”

-

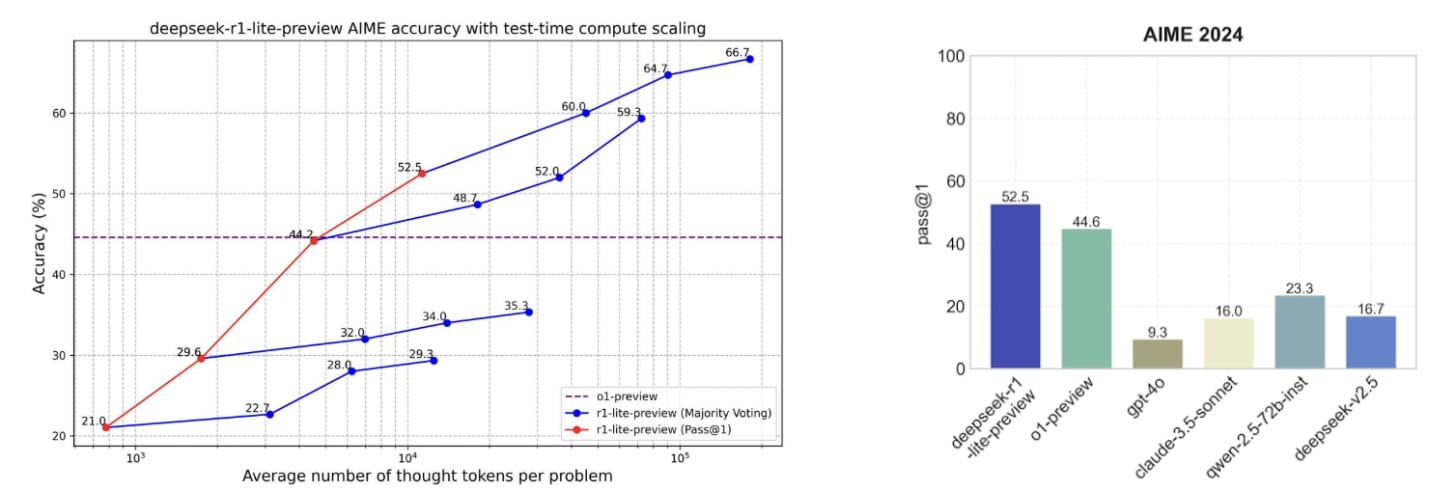

As 2024 drew to a close, OpenAI released o1-preview, the first reasoning model that demonstrated inference-time scaling with RL using its CoT as a scratch pad. This led to more robust problem solving in reasoning-heavy domains like code and science.

The “DeepSeek” Moment 🚨

-

Barely 2 months after o1-preview, DeepSeek (the Chinese upstart AI lab spun out of a high-frequency quant firm) released their first reasoning model, R1-lite-preview, which is built atop the earlier strong V2.5 base model. Like OpenAI, they showed predictable accuracy improvements on AIME with a greater test-time compute budget. Note that DeepSeek-R2 is quite late (delayed) due to Huawei AI chip problems. (Currently awaiting Gemini 3, Grok 5, Claude Opus 4.5 and DeepSeek-R2.)

It’s All AI History Now 🌊

-

As of late 2025, China has taken the lead in the open-source (open-weight) stack, not with DeepSeek but with Alibaba Qwen.

Parallel Compute Routing with MoE Drastically Increased Demand for Compute

OpenAI models remain at the frontier of intelligence, but for how much longer? Anthropic models win in Coding and Google Gemini is catching up in consumer adoption.

-

OpenAI’s first-mover advantage is narrowing quickly in the 2024-2025 window.

-

Three days ago Google announced Gemini Enterprise. Meanwhile Anthropic is pushing global with its new funding and xAI is doubling down on AI Infrastructure. Meta did an AI talent push and Qwen iterated very quickly especially in the last nine months.

2025 saw AI-augmented mathematics

-

Math is a verifiable domain: systems can plan, compute, and check every step, and publish artifacts others can audit. So 2025 saw competitive math and formal proof systems jump together: OpenAI, DeepMind and Harmonic hit IMO gold-medal performance, while auto-formalization and open provers set new records. More AI startups related to pushing the frontiers of Science got funding.

Reasoning Models in the World in Action (Test-time fine-tuning) ⚗️

The scaling paradigm is shifting from static pre-training to dynamic, on-the-fly adaptation. Test-time fine-tuning (TTT) adapts a model’s weights to a specific prompt at inference, a step towards continuous learning. This on-demand learning consistently outperforms in-context learning, especially on complex tasks. It creates a new performance vector independent of pre-training scale.
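The idea can be sketched with a deliberately tiny stand-in. This is an illustration only: the "model" is a one-variable linear function rather than an LLM, and `predict`, `adapt_at_test_time`, the learning rate and the step count are all hypothetical choices, not anything from the report. It shows the core move of test-time fine-tuning: take the examples that arrive with a prompt, run a few gradient steps on a copy of the weights, answer with the adapted copy, and leave the base weights untouched.

```python
def predict(params, x):
    """Toy 'model': a line with weight w and bias b."""
    w, b = params
    return w * x + b


def adapt_at_test_time(params, demos, lr=0.1, steps=300):
    """Return weights adapted to the prompt's demos via gradient descent on MSE."""
    w, b = params  # local copies; the caller's base weights are not modified
    n = len(demos)
    for _ in range(steps):
        # Gradients of the mean squared error over the prompt's examples.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in demos) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in demos) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b)


base_params = (1.0, 0.0)                               # "pre-trained" weights
prompt_demos = [(0.0, 1.0), (1.0, 4.0), (2.0, 7.0)]    # examples following y = 3x + 1
adapted = adapt_at_test_time(base_params, prompt_demos)

print("base prediction for x=3:", predict(base_params, 3.0))
print("adapted prediction for x=3:", round(predict(adapted, 3.0), 3))
```

In-context learning would keep `base_params` fixed and rely on the demos being in the prompt; here the demos change the weights themselves for this one request, which is why the approach costs extra compute at inference time.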

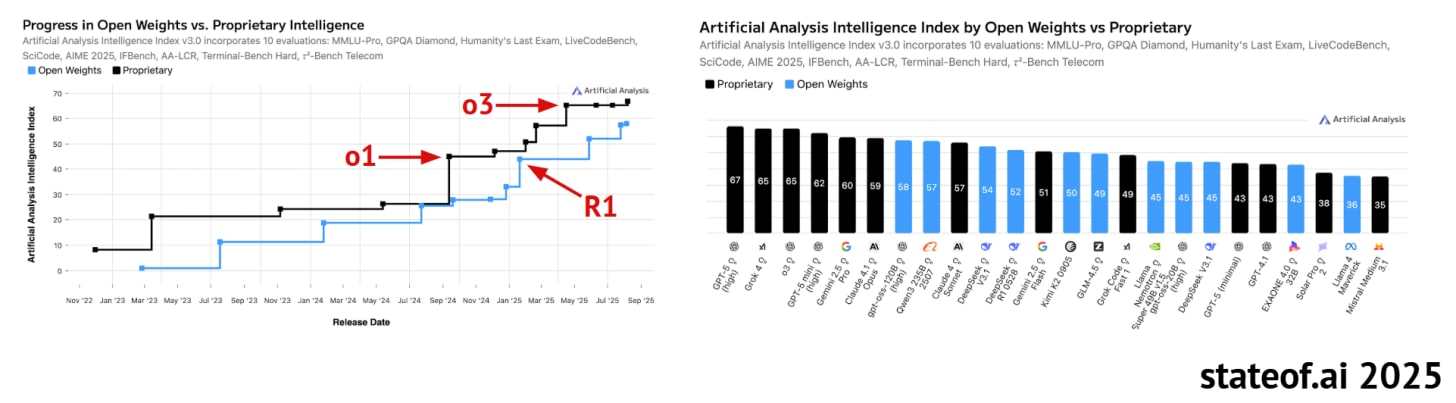

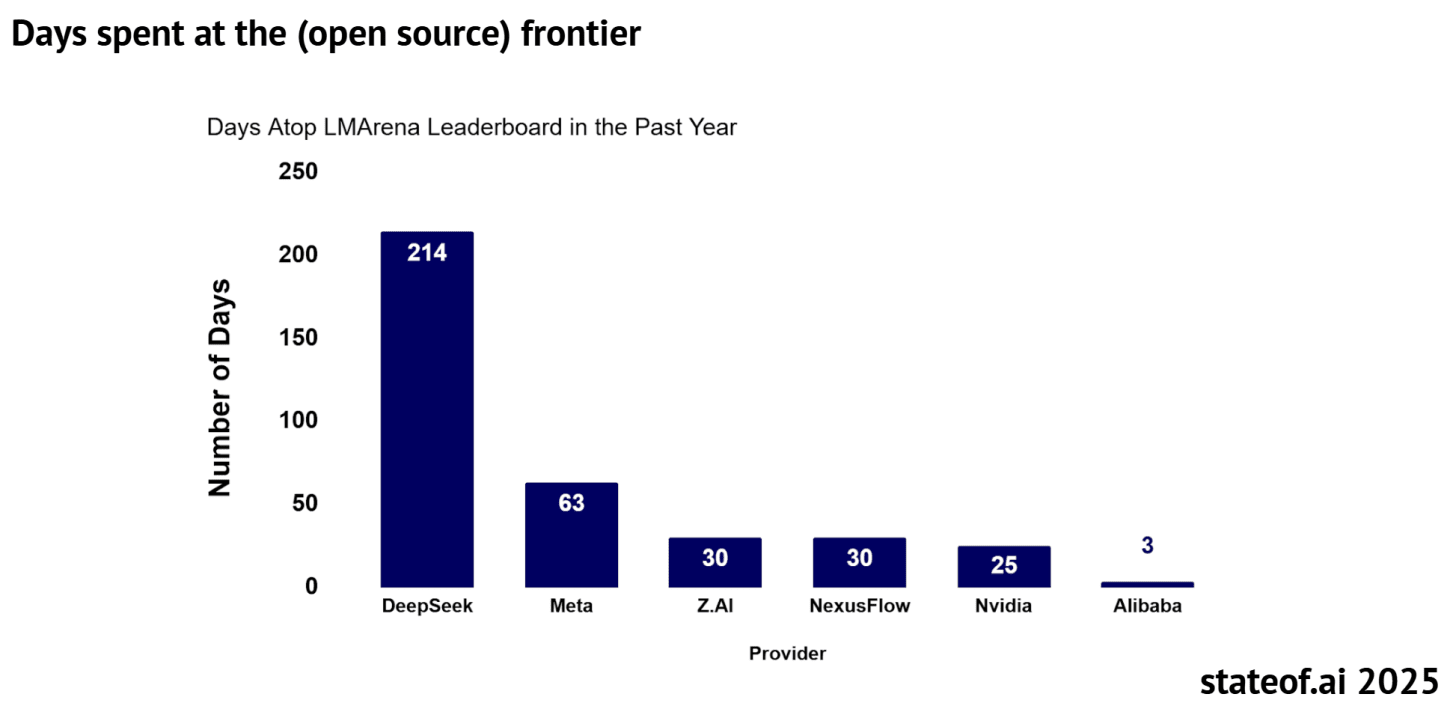

Open Weight vs. Proprietary Closed Source

A confusing timeline.

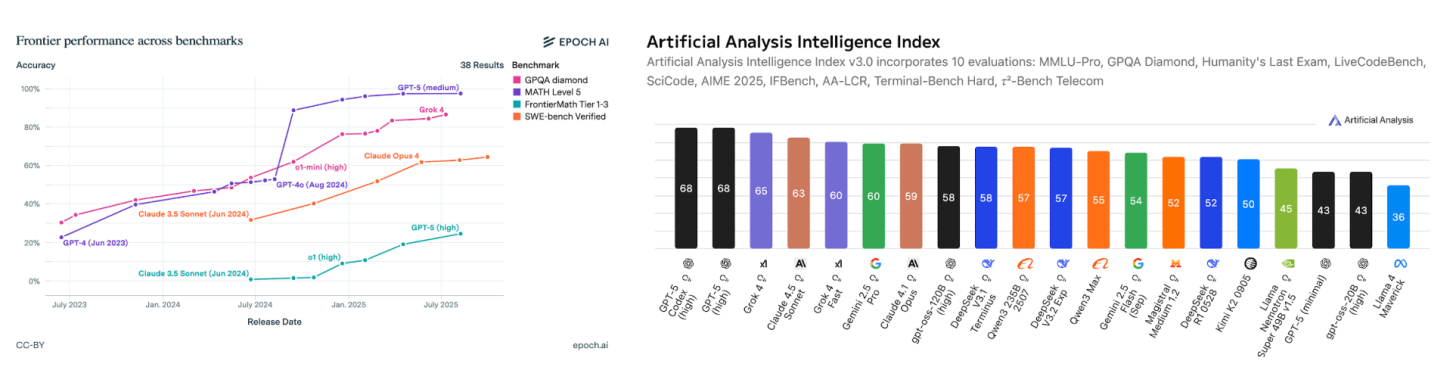

There was a brief moment around this time last year when the intelligence gap between open and closed models seemed to have narrowed a bit. Then o1-preview dropped and the intelligence gap widened significantly until DeepSeek R1, and o3 after it. Today, the most intelligent models remain closed: GPT-5, o3, Gemini 2.5 Pro, Claude 4.1 Opus, and new entrant Grok 4. Besides gpt-oss, the strongest open-weights model is Qwen. Pictured is the aggregate Intelligence Index, which combines multiple capability dimensions across 10 evaluations. It is also doubtful whether Meta AI (likely to go closed model) or Thinking Machines and Reflection AI, among others in the U.S., can catch China in open-weight models.

OpenAI pivots from the “wrong side of history” to aligning with “America-first AI”

The Donald Trump administration has brought with it high hopes for U.S.-first AI with its comprehensive “Winning the Race” U.S. Action Plan. With mounting competitive pressure from strong open-weight frontier reasoning models from DeepSeek, Alibaba Qwen and Google DeepMind’s Gemini, and the US Government pushing for America to lead the way across the AI stack, OpenAI released their first open models since GPT-2, gpt-oss-120b and gpt-oss-20b, in August 2025. These adopt the MoE design, using only 5.1B (of 120B) and 3.6B (of 20B) active parameters per token, along with grouped multi-query attention.
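As a rough illustration of why only a fraction of parameters is active per token in an MoE design, here is a minimal top-k routing sketch. The experts, router scores and `k=2` below are hypothetical toy values; this is not OpenAI's implementation, and the report does not describe gpt-oss routing in detail.

```python
import math


def top_k_route(router_logits, k):
    """Pick the k highest-scoring experts and softmax-normalise their weights."""
    top = sorted(range(len(router_logits)),
                 key=lambda i: router_logits[i], reverse=True)[:k]
    peak = max(router_logits[i] for i in top)
    exps = [math.exp(router_logits[i] - peak) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


def moe_forward(x, experts, router_logits, k=2):
    """Run only the selected experts and mix their outputs by router weight."""
    routes = top_k_route(router_logits, k)
    output = sum(weight * experts[i](x) for i, weight in routes)
    return output, [i for i, _ in routes]


# Four toy "experts", each just scaling its input by a different factor.
experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]
logits = [0.1, 2.0, -1.0, 2.0]   # hypothetical router scores for one token

y, used = moe_forward(1.0, experts, logits, k=2)
print("experts used:", used, "output:", y)

# Active share implied by the parameter counts quoted above.
print("gpt-oss-120b active share:", round(5.1 / 120, 4))
print("gpt-oss-20b active share:", round(3.6 / 20, 4))
```

The two experts that are not selected are never evaluated for this token, which is where the per-token compute saving comes from; all experts still have to be held in memory.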

-

The reality? The U.S. still lags behind China at the energy layer and Trump’s attack on Solar, Wind and renewable energy isn’t helping. Many AI Infrastructure projects in other countries are dogmatic and exploitative.

The Alibaba “Qwen” Moment (September, 2025)

-

Once a “Llama rip-off”, developers are increasingly building on China’s Qwen. Alibaba has decided to increase capex into AI Infrastructure with datacenter projects in many countries planned.

-

China’s Open-weight LLMs approach seems to be hitting a new level as a National strategy.

Meta’s Llama used to be the open source community’s darling model, racking up hundreds of millions of downloads and plentiful finetunes. In early 2024, Chinese models made up just 10 to 30% of new finetuned models on Hugging Face. Today, Qwen alone accounts for >40% of new monthly model derivatives, surpassing Meta’s Llama, whose share has dropped from circa 50% in late 2024 to just 15%.

In October 2025, it’s fair to say China is 3-6 months ahead of the U.S. in open-source LLMs and their agentic capabilities. Meta’s failure to make a decent Llama model spelled disaster, as China (with plentiful engineers) worked ahead in spite of a handicap in AI chips, capital and compute.

Why the appeal?

China’s RL tooling and permissive licenses are steering the open-weight community. ByteDance Seed, Alibaba Qwen and Z.ai are leading the charge with verl and OpenRLHF as go-to RL training stacks, while Apache-2.0/MIT licenses on Qwen, GLM-4.5 and others make adoption frictionless.

China’s Momentum in Video Models

We think of the best video models being Google’s Veo 3 or OpenAI’s Sora but that may soon not be true.

From late‑2024, Chinese labs split between open‑weight foundations and closed‑source products. Tencent seeded an open ecosystem with HunyuanVideo, while Kuaishou’s Kling 2.1 and Shengshu’s Vidu 2.0 productize on speed, realism and cost. Models tend to use Diffusion Transformers (DiT), which replace convolutional U-Nets with transformer blocks for better scaling and to model joint dependencies across frames, pixels, and tokens.

China has more labs working on video models that show some decent capabilities. They sprang up seemingly out of nowhere, showing the AI talent density that China has across a wide range of labs.

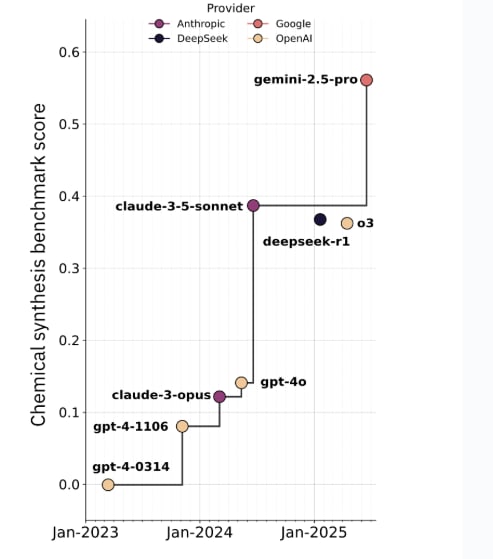

2024-2025 Saw advances in “World Models” and AI for Science

Chemical modeling has shifted from task-specific predictors to general LLMs that reason about synthesis strategy and mechanisms. The strongest results now come from large, general-purpose transformers used as “reasoning engines” and paired with classical search, rather than chemistry-specific generators.

Researchers are optimistic about LLMs for everything from materials to drug-discovery and development.

Work from the University of Liverpool and North Carolina State University shows that autonomous chemistry platforms can plan, execute, and analyze experiments in closed loops. i.e. a “Robot chemist”. Tech Optimists envision automated agentic science labs or Robot AI Labs putting innovation on auto-pilot. Specific papers and examples are given, though difficult to generalize into the real world. Everything from biotech to genomics is on the table:

Next-token modeling learns real biological dependencies from DNA, and Evo’s scaling study shows smooth, compute-predictable loss improvements with more data, parameters, and context, plus clear architecture effects.

AI “Benchmarks” are Flawed and Subject to Manipulation 👀

Researchers revealed systematic manipulation of LMArena as Meta tested 27 private Llama-4 variants before cherry-picking the winner: testing 10 model variants yields a 100-point boost.

-

Even as new benchmarks are created, most benchmarks are gamed. OpenAI and Google are hoovering up 40% of all Arena data while 83 open models fight over 30% of the scraps.

For example, Big Tech gets 68 times more data access than academic labs, with API-hosted models seeing every test prompt while third-party models only glimpse 20%. So how much can we trust or pay attention to these benchmarks and graphs when a BigTech model launches?
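The selection effect behind "test many private variants, publish the best" can be sketched with a small simulation. Every number here (the true score of 1200, the noise level, the trial count) is an assumption chosen for illustration, and the sketch does not attempt to reproduce the 100-point figure quoted above. All variants are given the same true skill, so any gain in the reported score comes purely from picking the luckiest measurement.

```python
import random


def best_of_n_score(true_score, noise_sd, n_variants, rng):
    """Measure n equally skilled variants with noise and report only the best."""
    return max(rng.gauss(true_score, noise_sd) for _ in range(n_variants))


def average_reported_score(n_variants, trials=2000, true_score=1200.0,
                           noise_sd=30.0, seed=0):
    """Average the 'published' score over many repeated launches."""
    rng = random.Random(seed)
    total = sum(best_of_n_score(true_score, noise_sd, n_variants, rng)
                for _ in range(trials))
    return total / trials


for n in (1, 10, 27):
    print(f"variants tested: {n:2d}  average reported score: "
          f"{average_reported_score(n):.1f}")
```

The more variants are tested privately, the higher the published number drifts above the true score, even though no variant is actually better. That is one reason to read launch-day leaderboard positions with caution.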

The “Sycophancy” Backlash of OpenAI

Agreeable chatbots contribute to new kinds of problems as their adoption proliferates. But….

“‘Sycophancy’ isn’t a bug, it’s exactly what human feedback optimisation produces. A study of five major LLMs shows they consistently tell users what they want to hear rather than the truth.”

LLMs are professional yes-men, and we trained them to be that way! This apparent agreeableness has led to many lawsuits against OpenAI, including cases involving AI-assisted suicide of minors. What sells commercial products can also be dangerous to users. GPT-4o was even brought back after GPT-5 was released, because attached ChatGPT customers missed it. Chatbots can act like drugs, and there are lots of debates around their use in education, with young people, and in settings like therapy or advice giving. These are societal impacts not seen in the Tech optimists’ slant.

Generative AI pushing Robotics Training

Merging the virtual and physical worlds: pre-training on unstructured reality – intersection of 3D worlds, robotics training and simulated training environments.

- NVIDIA’s GR00T 1.5 represents a significant advance in data efficiency. It uses neural rendering techniques to construct implicit 3D scene representations directly from unstructured 2D videos. This allows it to generate a massive stream of training data for its policy, effectively learning from observations of humans.
- ByteDance’s GR-3 applies the next-token prediction paradigm to robotics. By treating vision, language, and action as a unified sequence, they can pre-train end-to-end. This approach is proving particularly effective when using 2D spatial outputs (e.g., action heatmaps) as an auxiliary loss, helping to ground the model’s understanding of physical space.

Are robotics and humanoid robotics the next frontier of U.S. vs. China competition? Advances in 2025 would suggest so. Funding for robotics has increased substantially in 2025 so far:

VC investment in robotics is bouncing back in 2025, with deal value up 170.5% QoQ and 263.2% YoY at the close of Q2.

Small Language Models are Becoming more Capable 📚

Another meta trend in 2025 is how well SLMs are doing on Agentic AI tasks.

Researchers from NVIDIA and Georgia Tech argue that most agent workflows are narrow, repetitive, and format-bound, so small language models (SLMs) are often sufficient, more operationally suitable, and much cheaper. They recommend SLM-first, heterogeneous agents that invoke large models only when needed. Read the paper.

- Agents mostly fill forms, call APIs, follow schemas, and write short code. New small models (1–9B) do these jobs well: Phi-3-7B and DeepSeek-R1-Distill-7B handle instructions and tools competitively.
- A ~7B model is typically 10-30x cheaper to run and responds faster. You can fine-tune it overnight with LoRA/QLoRA and even run it on a single GPU or device.
- One can use a “small-first, escalate if needed” design: route routine calls to an SLM and escalate only the hard, open-ended ones to a big LLM. In practice, this can shift 40-70% of calls to small models with no quality loss.
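The “small-first, escalate if needed” design above can be sketched as a simple router. The two model functions below are hypothetical stand-ins (no real model API is called), and the acceptance check, "is the output a valid JSON object", is just one assumed way to decide whether the small model's draft is good enough.

```python
import json


def small_first(prompt, small_model, large_model, is_acceptable):
    """Try the small model first; escalate to the large one if the draft fails."""
    draft = small_model(prompt)
    if draft is not None and is_acceptable(draft):
        return draft, "small"
    return large_model(prompt), "large"


def toy_small_model(prompt):
    """Hypothetical SLM: good at form filling, unsure about anything else."""
    if prompt.startswith("fill_form:"):
        return json.dumps({"name": prompt.split(":", 1)[1].strip()})
    return "not sure"


def toy_large_model(prompt):
    """Hypothetical large model: always returns a structured answer."""
    return json.dumps({"answer": f"detailed response to: {prompt}"})


def is_valid_json_object(text):
    """Accept a draft only if it parses as a JSON object (schema-following)."""
    try:
        return isinstance(json.loads(text), dict)
    except json.JSONDecodeError:
        return False


for task in ("fill_form: Ada", "Compare three data center financing strategies"):
    reply, model_used = small_first(task, toy_small_model, toy_large_model,
                                    is_valid_json_object)
    print(f"{model_used:5s} <- {task!r}")
```

The share of calls that stay on the small model depends entirely on the workload and on how strict the acceptance check is, so the 40-70% figure should be read as something to measure rather than assume.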

So not only do we have Closed-source LLMs vs. Open-weight LLMs but increasingly video specific Models and SLMs geared for AI agentic tasks, among others.

What is driving more demand for compute?

- More adoption of LLMs and more AI products
- Higher Capex by BigTech leading to more datacenters
- More Hyperscalers running at upper limits of “capacity”
- More compute-intensive products, e.g. “reasoning” chatbots
- Trends like ‘vibe coding’ and ‘AI agents’ are increasing commercial, consumer, developer and enterprise usage.
- More global growth in the use of Chatbots and more versions: Gemini, Grok, ChatGPT, Claude, DeepSeek, Mistral Le Chat, etc.

Thus the accelerating demand for compute is itself the meta trend of the 2020s. It is leading to imminent power demands beyond the capacity of the U.S. energy grid (and those of many other countries).

Vastly higher energy (and utility bill) prices will make this a political debate. While stock market bubbles and bruises might occur2, the demand for compute should keep accelerating for a variety of drivers not to mention National competition between the U.S. and China for global dominance.

Demand for Compute will keep going higher.

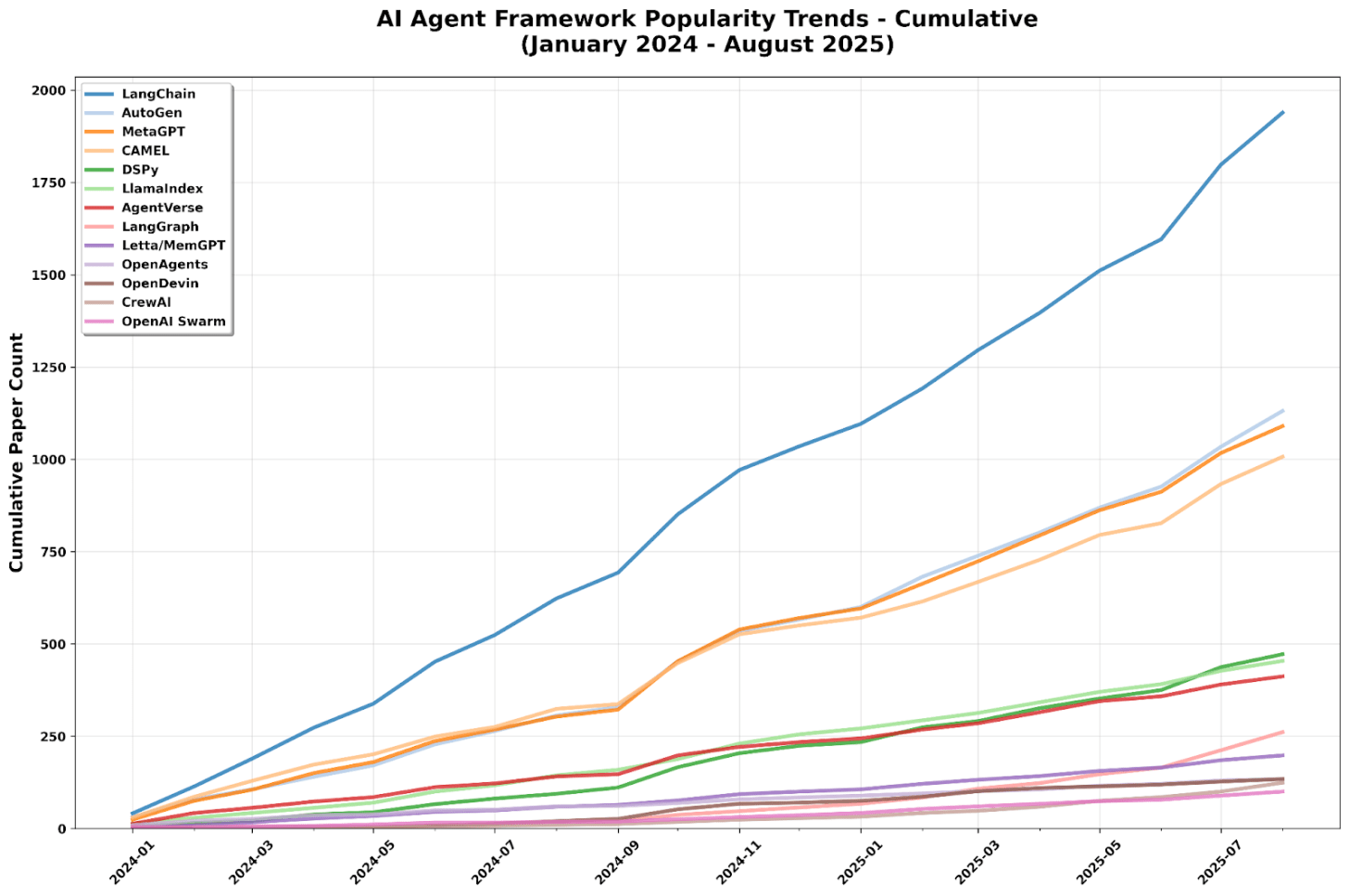

AI Agents Frameworks Exploded in 2025

Instead of consolidating, the agent framework ecosystem has proliferated into organized chaos. Dozens of competing frameworks coexist, each carving out a niche in research, industry, or lightweight deployment.

Meanwhile, Anthropic’s MCP and Google’s Agent Payments Protocol (AP2) look like they are going to stick.

Stripe’s agentic commerce solutions also look fairly impressive. Meanwhile recent developments in App SDKs in ChatGPT mean it could evolve into a Super app. A lot of Generative AI’s real-world integration rests on the promise of these AI agents:

Tens of thousands of research papers per year are exploring a range of frontiers for AI agents as they move from ideas into production, including:

- Tools: From plugins to multi-tool orchestration via shared protocols.
- Planning: Task decomposition, hierarchical reasoning, self-improvement.
- Memory: State-tracking, episodic recall, workflow persistence, continual learning.
- Multi-agent systems: Collaboration, collective intelligence, adaptive simulations.
- Evaluation: Benchmarks for open-ended tasks, multi-modal tests, cost and safety.
- Coding agents: Bug fixing, agentic PRs, end-to-end workflow automation.
- Research agents: Literature review, hypothesis generation, experiment design.
- Generalist agents: GUI automation, multi-modal input and output.

While Cursor and Lovable (AI coding and vibe coding respectively) have shown great commercial progress in 2025, it’s all these AI agent experiments and pilots that could lead somewhere more tangible for society.

Agentic Memory started in 2025

Memory is no longer a passive buffer, it is becoming an active substrate for reasoning, planning, and identity.

INDUSTRY

SECTION TWO

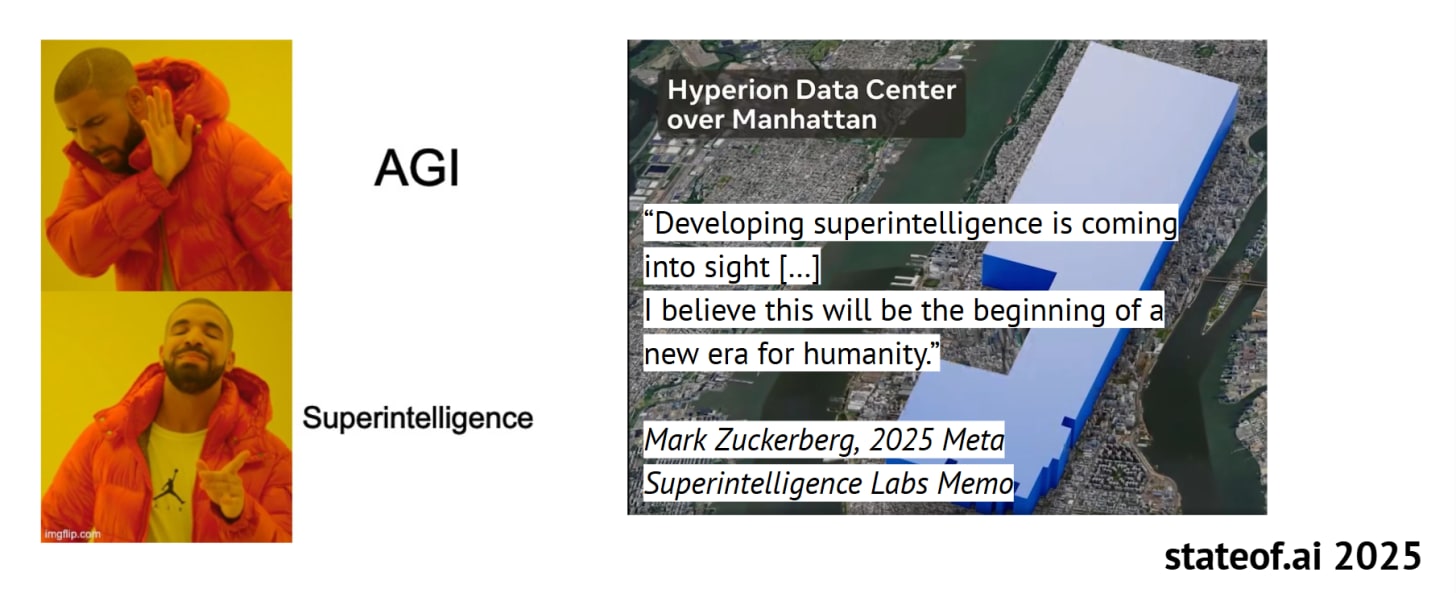

RIP AGI, long live Superintelligence

Tech executives are dropping terms like AGI in favor of terms like Superintelligence to justify their AI capital expenditures and odd capital allocation choices. Companies like Meta and Alibaba seem to use Superintelligence now. Alibaba Cloud is massive, so getting developers to use their open-weight Qwen models makes some sense, but Meta? The value play isn’t as clear.

- The vocab of the vibe of AI is changing.
- But the datacenters keep getting larger and more numerous (the main trend of 2025 in AI is a Semiconductor boom caused by an AI Infrastructure building frenzy). It all seems to be in preparation for something uncertain that AI might become at some relatively distant point in time, e.g. 2035.

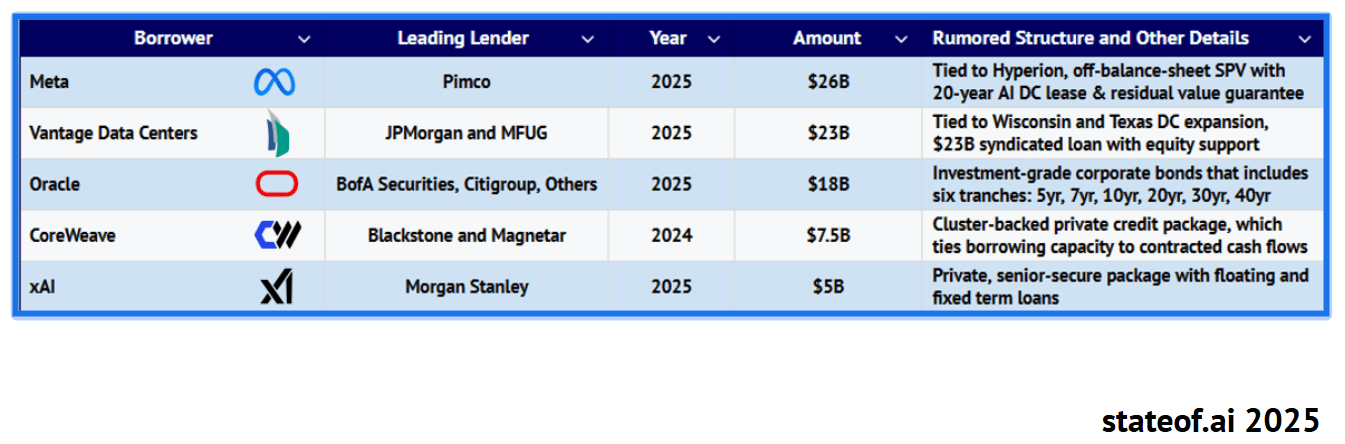

That’s fine if you are Google or Meta with billions in free cash from Ads, but a more pressing concern if you are OpenAI, xAI or Anthropic, which might never achieve profitability. OpenAI expects to spend trillions of dollars it doesn’t have on AI Infrastructure, and that can only mean one thing: more debt. That includes debt-like structures such as the Special Purpose Vehicle (SPV), a legal entity created to hold assets and liabilities for a specific purpose. This structure allows investors, including Venture Capital (VC) firms and others, to pool their money to invest in high-value AI companies that may be difficult to invest in directly. The clearest examples doing this at scale are OpenAI, xAI, Softbank (funding OpenAI), Oracle, Alibaba and CoreWeave. High-risk, high-reward.

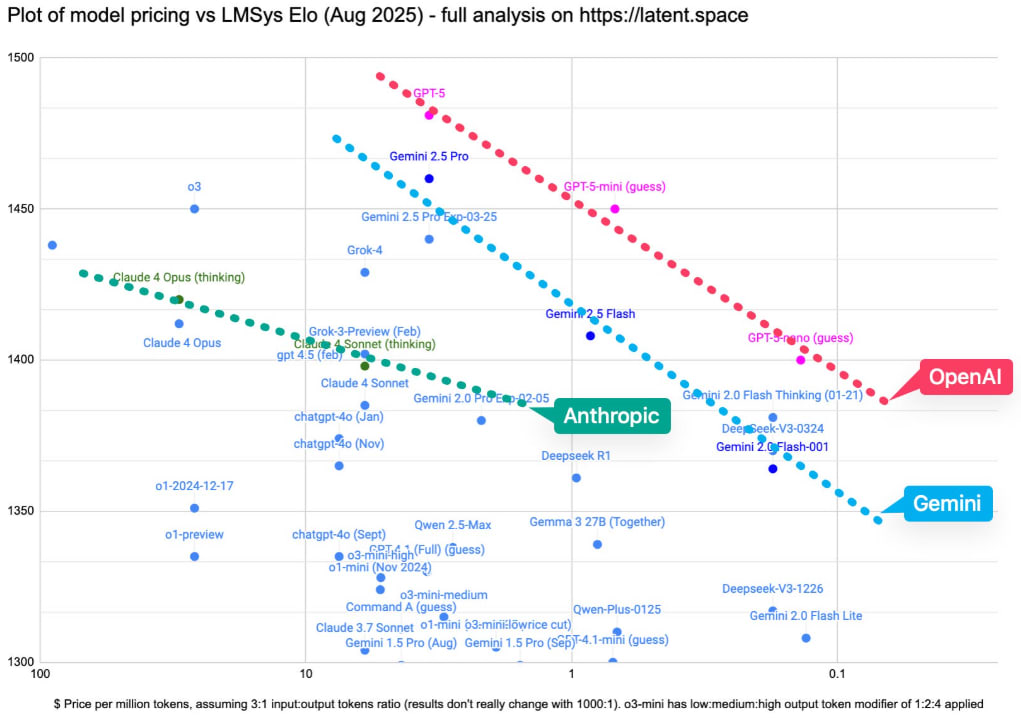

What does SOTA or Frontier Model Makers even mean any longer in 2025?

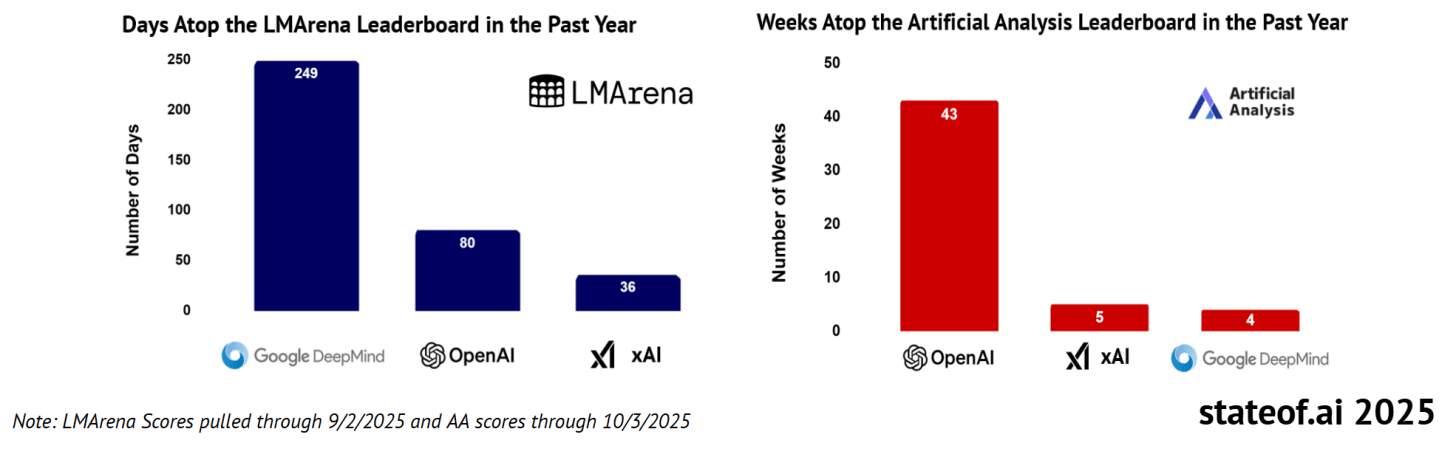

The absolute frontier remains contested as labs continuously leapfrog one another. However, along two of the most prominent metrics, some labs have been on top longer than others in the past year.

- 2025 has seen Google Gemini make tremendous progress vs. OpenAI and ChatGPT.
- It’s seen Anthropic solidify its reputation as the best at making AI coding models, thereby growing its developer and API market share and its Enterprise AI momentum.
- xAI, Meta and others are still largely unproven. So if I had to pick four leaders it would be Google, OpenAI, Anthropic and Qwen. If Grok 5 performs well, xAI could get a bit more credibility.

What is the Open-Weight Frontier?

- Is it Qwen iterating on models so fast, or Meta imploding its Capex budget poaching AI talent for a Llama that is likely not even to be a major priority for them moving forward? Or is it what Thinking Machines or Reflection AI are doing behind closed doors?

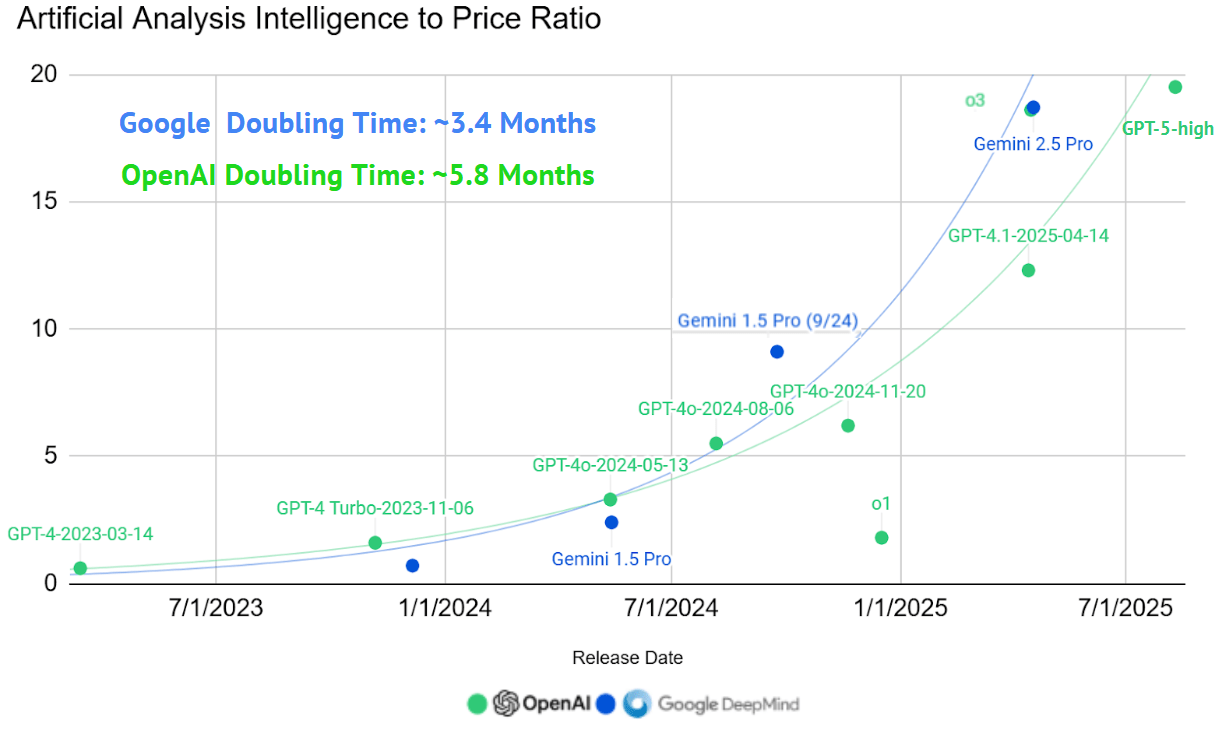

The Real Exponential view? Maybe More for less: trends in capability to cost ratios

The absolute capabilities achieved by flagship models continue to climb reliably while prices fall precipitously.

More or less the most impressive infographic each year from this report:

-

View more on Artificial Analysis.

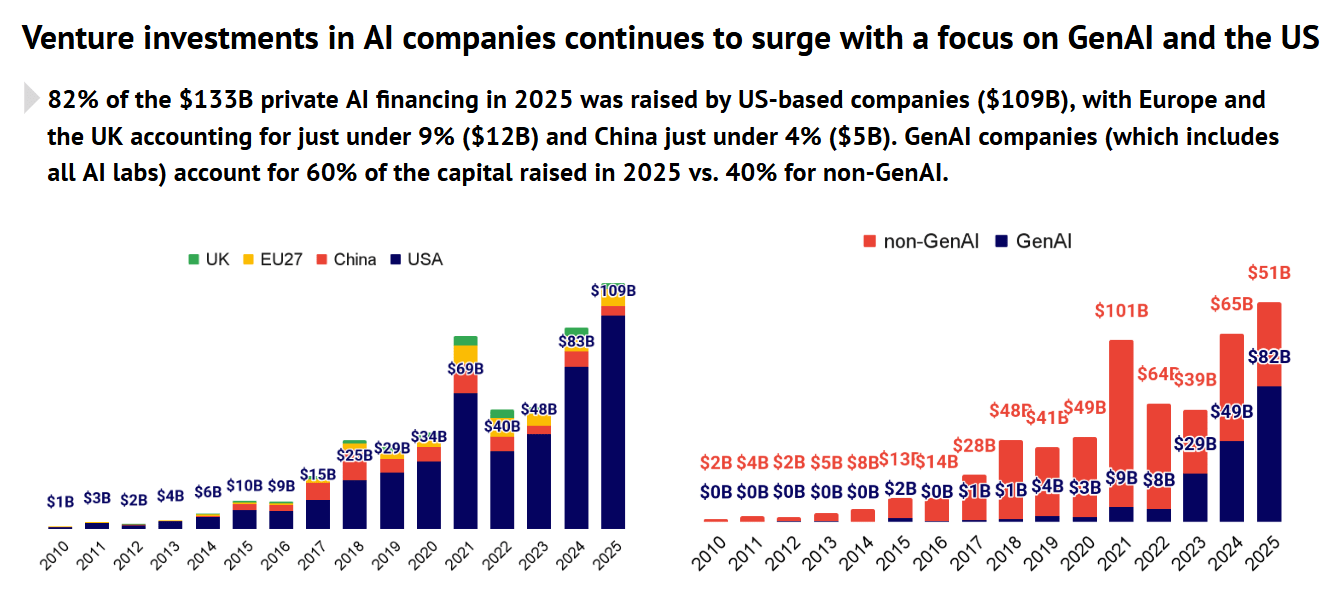

Venture Capital Funds are Splurging on AI startups

In another blockbuster quarter for AI funding, $45 billion (around 46% of global venture funding) went to the sector, with 29% invested in a single company, Anthropic. As more capital goes to AI, there is less to go around for innovation in other industries. This is exceedingly risky if Generative AI doesn’t deliver tangible ROI and benefits.

The problem here is Venture Capital’s rising influence on media and social media: VCs have incentives to exaggerate how well Generative AI products and startups are actually doing. a16z itself owns a huge stake in this platform. Many of the AI reports I read are now from VCs (including this one). This is reflected in a lot of the statements in such reports that can be construed as misleading:

“AI has clearly shifted from niche to mainstream in the startup and investing world. On Specter’s rank of 55M+ private companies, which tracks 200+ real-time signals across team growth, product intelligence, funding, financials and inbound attention, AI companies now make up 41% of the Top-100 best companies (vs. 16% in 2022).”

- This also artificially inflates the perceived capability of U.S. hyperscalers to build datacenters in other countries, and of Nvidia to sell more AI chips through schemes like Sovereign AI. VC firms pumping these narratives is a bit like Nvidia participating in vendor financing, you see.

“The AI bubble is now 17 times the size of the dot-com bubble and four times bigger than the 2008 global real-estate bubble, per MarketWatch.3”

The problem is that a lot of the assets in a datacenter lose value quickly and need to be replaced. So the ROI needs to come almost immediately for the investment to bear fruit.

BigAI has $10s of Billions in Revenue but where are the New Jobs for Society?

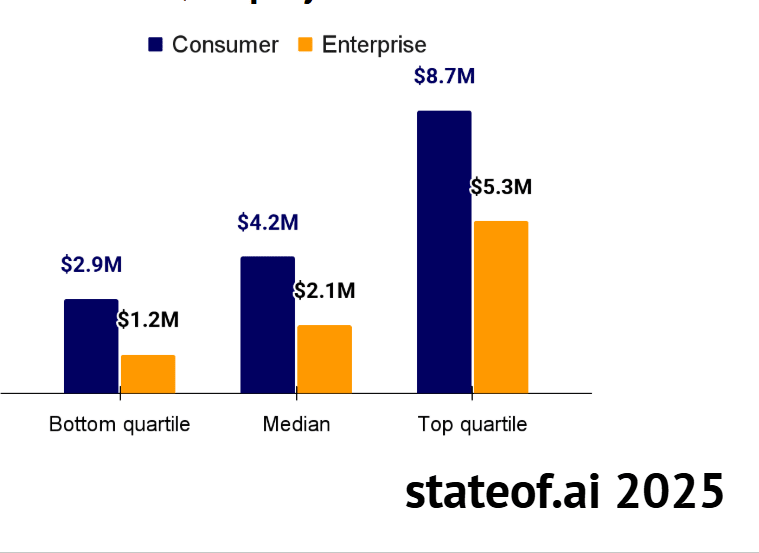

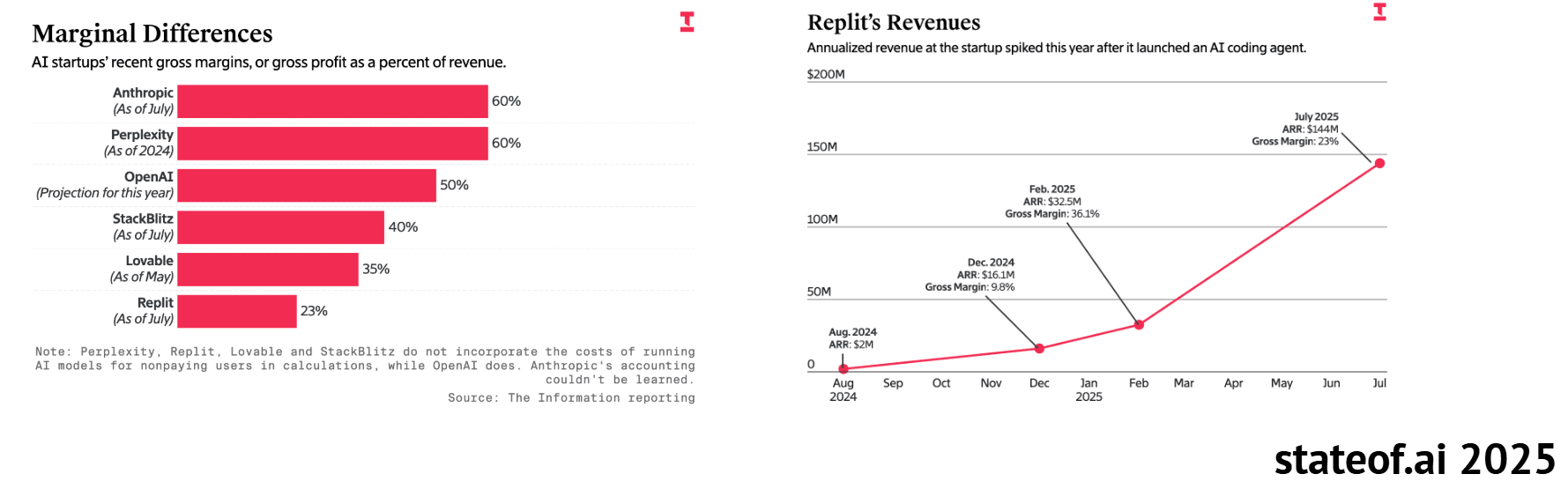

Venture Capital firms are boasting, but what is the reality of the ROI? A leading cohort of 16 AI-first companies are now generating $18.5B of annualized revenue as of Aug ‘25 (left). Meanwhile, an a16z dataset suggests that the median enterprise and consumer AI apps now reach more than $2M ARR and $4M ARR in year one, respectively.

- There certainly are some “winners” in terms of revenue generation. Don’t ask about the margins though: due to the AI compute costs, they might be worse than traditional SaaS.
- A few BigAI firms show tremendous ARR growth:
  - OpenAI
  - Anthropic
  - Anysphere
  - xAI, etc.
- They are also burning billions on AI Infrastructure, AI talent and growth, and don’t seem to be creating many new jobs or much ROI for society. They are possibly helping BigTech be more efficient in their Earnings.

Sure, AI-first companies are now generating tens of billions of revenue per year. But what is the net benefit to society? With AI Infrastructure, talent and model training so expensive, how do they even become profitable?

They conveniently leave that part out.

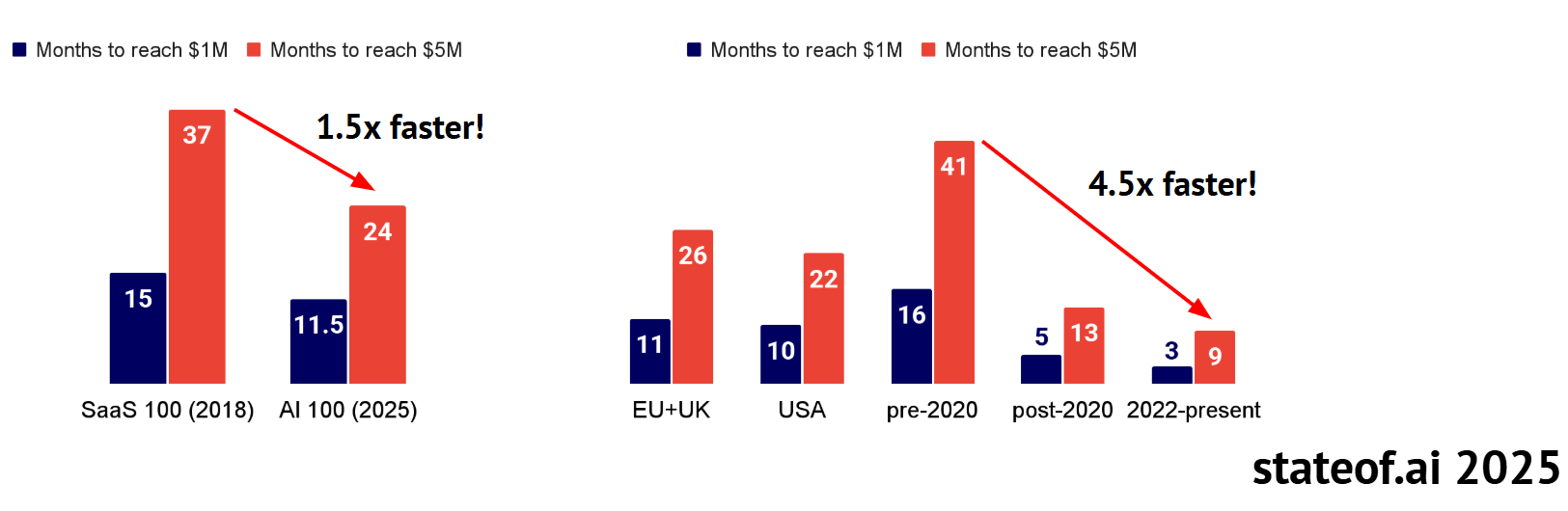

AI-first companies accelerate their early revenue growth faster than SaaS peers

- They cherry pick Revenue growth as the go-to metric to track, without talking about margins (that’s a red flag!).
- I get it, it’s supposed to make BigAI and VCs look good!

So you want to disrupt traditional SaaS but with worse margins than traditional SaaS? 😕

Trends like Cursor and Lovable (AI coding and vibe coding) also make Anthropic look good. So again you see the circular partner dynamics in BigAI as well, and not just with BigTech trying to boost AI Infrastructure, which certainly accelerates the demand for compute. OpenAI doesn’t want to share the spoils; instead it thinks ChatGPT can be a super-app. Its Agentic Commerce Protocol isn’t just taking on Google but the likes of Stripe.

With so much funding and partner benefits:

AI-first companies continue to “outperform” other sectors as they grow according to VCs.

Analysis of 315 AI companies with $1-20M in annualized revenue and 86 AI companies with $20M+ in annualized revenue, which constitute the upper quartile of AI companies on Standard Metrics, shows that both groups have outpaced the all-sector average since Q3 2023. In the last quarter, $1-20M revenue AI companies were growing their quarterly revenue at 60% while $20M+ revenue AI companies grew at 30%, in both cases 1.5x greater than all-sector peers.

They grow faster because we are in an adoption cycle, but this also accelerates consolidation and exits, with just a few winners emerging. There is huge capital destruction, not just in datacenters with GPUs that become outdated in months to a few years, but also on the AI startup side, where real demand supports just a few winners that BigTech often acquires through reverse acquihires. These deals are really just “takeovers” for quick access to IP and talent that skirt antitrust rules.

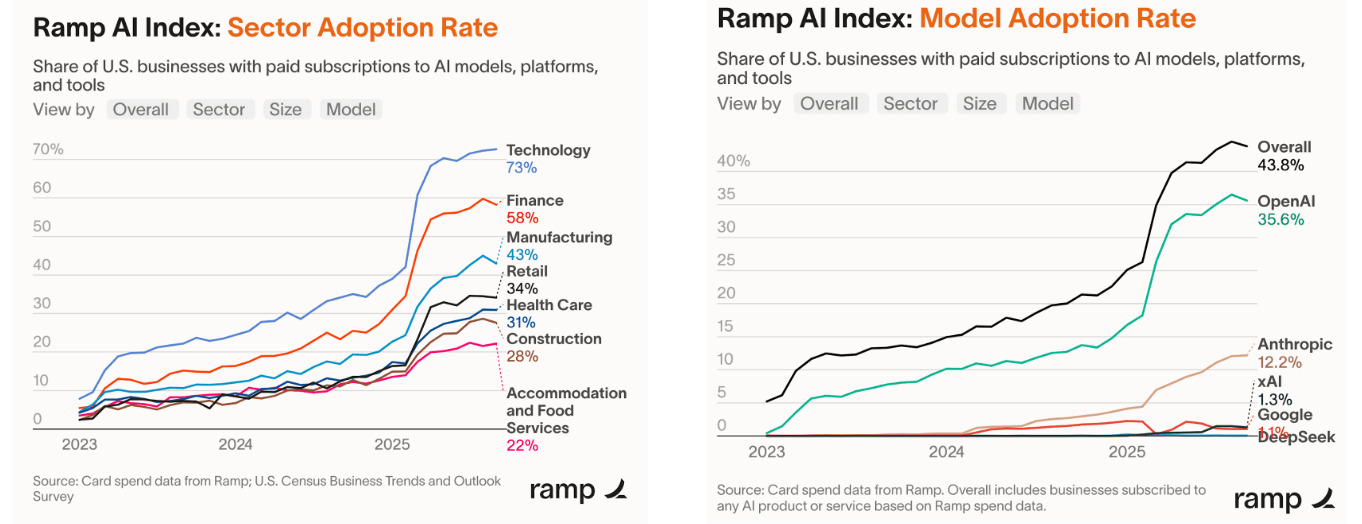

VCs thus partner with data providers in this case like Ramp, who show a stellar report card:

Ramp’s AI Index (card/bill-pay data from 45k+ U.S. businesses) shows the technology sector unsurprisingly leading in paid AI adoption (73%) with the finance industry not far behind (58%). Across the board, adoption jumped significantly in Q1 2025. Moreover, Ramp customers exhibit a strong proclivity for OpenAI models (35.6%) followed by Anthropic (12.2%). Meanwhile, there is very little usage of Google, DeepSeek and xAI.

To make matters worse this kind of VC-sponsored media is proliferating everywhere in 2025:

-

You might have noticed a horde of “VC” media now dominating on LinkedIn, Substack, X and so forth. Well guess what, this isn’t journalistic coverage, it’s a form of sponsored PR. It’s all cherry-picked data.

-

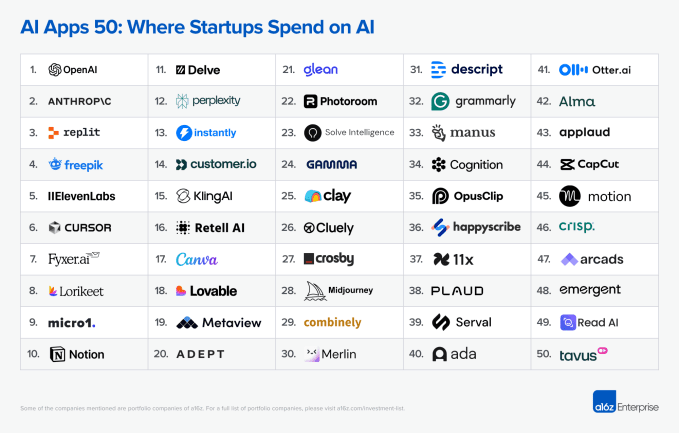

So that being said, what are the emerging AI startup sectors that are showing the most promise?

Audio, avatar and image generation companies see their revenues accelerate wildly

Market leaders ElevenLabs, Synthesia, Black Forest Labs are all well into hundreds of millions of annual revenue. Moreover, this revenue is of increasingly high quality as it’s derived from enterprise customers and a long tail of >100k customers and growing.

-

ElevenLabs grew annual revenue 2x in 9 months to $200M and announced a $6.6B valuation commensurate with a $100M employee tender offer. Customers have created >2M agents that have handled >33M conversations in 2025.

-

Synthesia crossed $100M ARR in April ‘25 and has 70% of the Fortune 100 as customers. Over 30M avatar video minutes have been generated by customers since launch in 2021 (right chart).

-

Black Forest Labs is said to be at ~$100M ARR (up 3.5x YoY) with 78% gross margin including a large deal with Meta worth $140M over two years. Separately, Midjourney has also entered into a licensing deal with Meta, the terms of which aren’t known.

Some of those first-mover advantaged AI startups of 2023 and early 2024 are showing good traction in their pioneering industries. We should also note good growth in particular in the intersection of Gen AI in legal and video models. I would actually consider those better than either audio or avatar generation.

-

Legal AI startups

-

Video Models (text to video)

-

Avatar

-

Audio

-

Image

Winners in SmallAI are Emerging

To get a better look at some of the trending winners in Generative AI:

GPT-5’s Launch was Poorly Executed

A worrying sign for OpenAI, as Gemini 3, Grok 5 and DeepSeek-R2 show up, is how poorly it handled the launch.

GPT-5 leads the intelligence-per-dollar frontier with best-in-class benchmarks at 12x lower cost than Claude, but user backlash over the sudden removal of GPT-4o and o3 and concerns/teething problems about opaque router decisions that users aren’t used to overshadowed its technical achievements.

I tried to cover as it was happening:

I call BigAI the bigger players like Anthropic, OpenAI, xAI and Thinking Machines along with other model makers such as Mistral, Qwen, DeepSeek and Reflection AI – while I call SmallAI the domain-specific AI startups. That’s not to say that someone like Cursor couldn’t evolve into a big player making their own AI coding models one day. But it’s still essentially leveraging Claude Code’s coding supremacy as things stand today (it’s a wrapper).

-

As players like Google expand their list of AI products, token demand is surging which is accelerating the demand for compute like crazy.

Leading AI providers continue to record extraordinary demand at inference time

As Google Gemini and Alphabet’s AI products ramp up in 2025, so are xAI and Anthropic pushing through. This is leading to an incredible demand to build more datacenters globally.

-

This trend should continue into 2026 and into the 2030s. I think analysts and writers usually overestimate the impact of AI on society and underestimate the demand for compute, generally speaking.

The report adds:

-

“The demand for tokens has been largely supercharged by improved latency, falling inference prices, reasoning models, longer user interactions, and a growing suite of AI applications. Enterprise adoption has also continued to pick up in 2025.

-

Surging inference demand will place additional pressure on AI supply chains, particularly power infrastructure.

-

But given that all tokens are not created equal, we’d caution against deriving too much signal from aggregate token processing figures.”

Alphabet can flex token figures to make it appear that consumers are adopting its products, and look good as well: [great LinkedIn posts by Gennaro here]

Google’s internal data shows monthly token processing has exploded from under 10 trillion in early 2024 to over 1.3 quadrillion by late 2025, a 130x increase in 18 months. This reflects the rapid integration of generative AI across Google Search, Workspace, and Android.
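To put that 130x figure in perspective, here is a quick back-of-the-envelope sketch. It only uses the two endpoints quoted above (both are rough bounds, "under" and "over") and assumes smooth compounding over the 18 months, which real usage almost certainly did not follow:

```python
# Endpoints quoted in the paragraph above (approximate bounds, not exact data).
start_tokens = 10e12   # "under 10 trillion" tokens per month, early 2024
end_tokens = 1.3e15    # "over 1.3 quadrillion" tokens per month, late 2025
months = 18

growth_multiple = end_tokens / start_tokens

# Assumption: a constant month-over-month rate that yields the same multiple.
monthly_rate = growth_multiple ** (1 / months) - 1

print(f"growth multiple: {growth_multiple:.0f}x")
print(f"implied compound monthly growth: {monthly_rate:.1%}")
```

Under that smoothing assumption, the implied growth is a little over 30% per month, every month, for a year and a half.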

Now multiply that by the demand and growth going into Anthropic, xAI and others too. BigAI is just ramping up at the beginning of its adoption curve in 2025; it’s just OpenAI that is a bit further ahead thanks to the ChatGPT catalyst. The AI Infrastructure antics OpenAI is pulling with circular financing and partnership (SPV debt) deals are something others in BigAI like Meta, Alibaba, xAI and Anthropic will now have to mimic just to keep up!

That will make the demand for compute + energy of 2025 look very tame and small compared to what’s coming. That does not mean AI isn’t a bubble, it means the AI Infrastructure demand for Cloud capacity pushes the U.S. into an Energy bottleneck faster than anticipated.

-

The report goes on to praise GPT-5 Codex in coding (slide 107), but I guess Claude Sonnet 4.5 hadn’t dropped yet. OpenAI hasn’t been ahead in AI coding for quite some time (the report was incorrect on this!). The reality is a bit closer to this graph:

I actually anticipate Gemini 3 and Grok 5 to make enormous strides in AI coding too. I’m not sure GPT-5 Codex will age well, as OpenAI has been losing API market share among developers all through 2025. The signs are pointing to OpenAI losing its SOTA grip in some domains. OpenAI is pivoting to being a consumer apps AI company, so it’s very unlikely they will be global leaders in LLMs as a research lab for much longer. Especially when they are focusing so much on apps and things like AI Infrastructure, and are spread thin across experiments like consumer hardware and building clones of X and LinkedIn. Recognizing this, to call them leaders in AI coding is really ignorant.

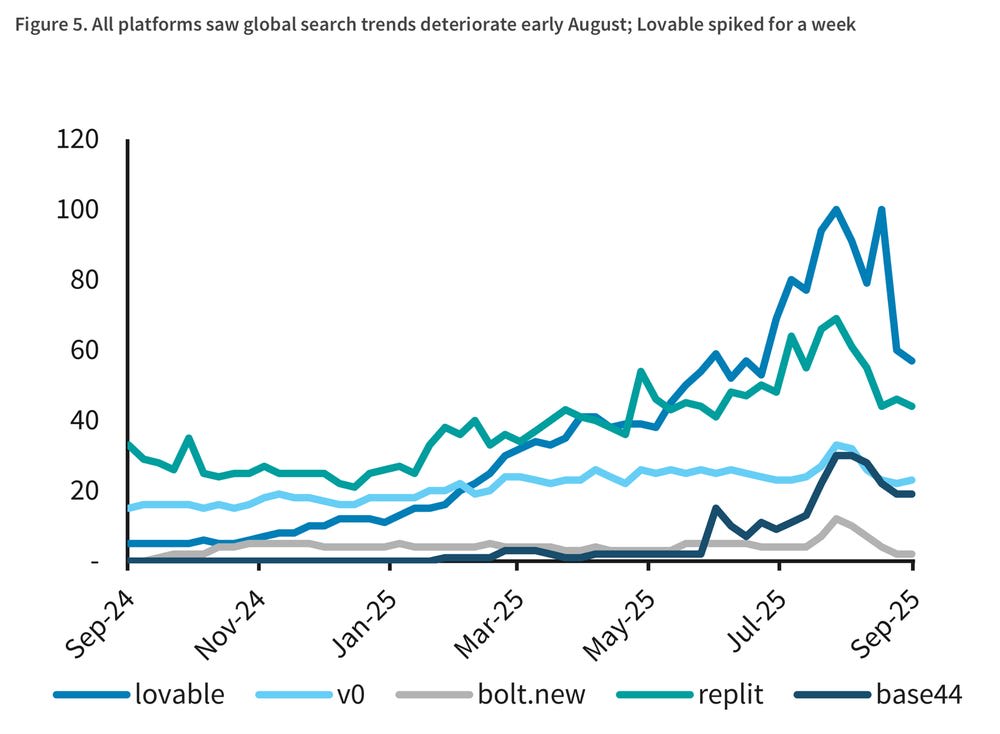

Vibe Coding Emerges as Significant Gimmick Trend of 2025 AI adoption

Vibe coding hits the big time. At least for the likes of Lovable. I don’t consider this a sustainable trend.

We might actually be at the start of a Vibe Coding winter, as the summer time of vibe-coding gives way to school:

Still, that’s not to say that vibe coding didn’t have a great 2025:

The problem is that in 2025 there are so many vibe coding tools and AI agents that consolidation is going to be brutal, as only a few will see real traction.

Still some great adoption stories to be sure:

-

Swedish vibe coding startup Lovable became a unicorn just 8 months after launch, with a $1.8B valuation.

-

Using AI to write 90% of code, Maor Shlomo sold Base44 to Wix for $80M after 6 months.

-

Garry Tan says that for 25% of their current fastest growing batch, 95% of their code was written by AI.

Now will an internet full to the brim with AI agent pilots work out well too? 😂

Skipping the part about the security vulnerabilities in vibe-coded products and how half-baked they tend to be. At least it was an entertaining moment in 2025:

If Cursor thinks it’s worth $30 Billion as a wrapper, how much does Lovable think it’s worth? Something around $5 Billion most likely.

It’s always about the margins though:

How good can Cursor’s or Lovable’s margins possibly be?

Coders love Claude Code and Cursor, but margins are fragile. The tension is stark: Cursor is a multi-billion dollar company whose unit economics are hostage to upstream model prices and rate limits set by its own competitors.

-

Some users are costing upwards of $50k/month for a single seat of Claude Code. Cursor and Claude have introduced much stricter usage limits to try to crack down on the costs of power users.

-

Cursor’s pricing power is limited because its core COGS are the API prices of Anthropic/OpenAI. When those providers change prices, rate limits, or model defaults, Cursor’s gross margin compresses unless it caps usage or shifts workloads off upstream APIs.
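The margin squeeze described above is easy to see with a toy calculation. This is only a sketch: the seat price, upstream costs and usage cap below are hypothetical numbers picked for illustration, not Cursor’s or Anthropic’s actual figures.

```python
def gross_margin(seat_price: float, upstream_cost: float) -> float:
    """Gross margin as a fraction of revenue for one seat in one month."""
    return (seat_price - upstream_cost) / seat_price


# All values below are hypothetical, for illustration only.
SEAT_PRICE = 20.0                      # flat monthly subscription price
baseline_cost = 8.0                    # upstream API spend for a typical user
repriced_cost = baseline_cost * 1.5    # same usage after a 50% upstream price rise
power_user_cost = 200.0                # upstream spend for a heavy user
USAGE_CAP = 15.0                       # max upstream spend allowed per seat

scenarios = {
    "baseline": baseline_cost,
    "upstream price +50%": repriced_cost,
    "uncapped power user": power_user_cost,
    "power user with cap": min(power_user_cost, USAGE_CAP),
}

for name, cost in scenarios.items():
    print(f"{name:>22}: {gross_margin(SEAT_PRICE, cost):+.0%}")
```

With a flat seat price, every change upstream lands directly on the wrapper’s margin, and an uncapped heavy user can push a single seat deep into negative territory. That is why usage limits show up so quickly.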

Life won’t always be good as a wrapper, even as Claude Code itself is emerging as a legit destination. When does a customer that is a partner become a competitor?

As AI coding gets better for Google, xAI and others, how do the wrappers compete even vs. Claude Code and GPT-5 Codex in the end? Amazon is doing so much capex investment in 2025 that it will obviously result in fairly good AI coding tools too, among others. Microsoft will certainly be doing all it can to save the fate of GitHub Copilot (may it rest in peace). A kind of pointless endeavor given that Claude Code now powers GitHub Copilot too.

The Problem of Margins for BigAI and SmallAI

The margins at Gen AI native companies are likely to be worse than you think. GenAI won’t have the margins of digital Ads, or Cloud or even mobile apps in many respects.

“Gross margins are generally commanded by the underlying model API and inference costs and strained by token-heavy usage and traffic acquisition.”

This is on Slide 111.

Surprisingly, several major AI companies don’t include the costs of running their service for non-paying users when reporting their GM. Coding agents are under pressure even when revenue grows quickly. The primary levers to improve margins are moving off third-party APIs to owned or fine-tuned models, aggressive caching and retrieval efficiency, and looking to ads or outcomes-based pricing.

This is where the ROI problem thesis of the AI bubble also really gains traction. Neither is this difficult to verify or reason out:

-

AI labs mirror the foundry business: staggering investments are needed for each successive generation, where labs bear the front-loaded training expense. While recent models allegedly recoup this cost during deployment, training budgets surge. Pressure then mounts to drive inference revenue across new streams.

-

Inference pays for training: labs strive to allocate more of a model’s lifecycle compute to revenue-generating inference at the steepest margin possible. Our table* below illustrates the expected return on compute costs across varying inference margins and compute allocations.
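The report’s table isn’t reproduced here, but the underlying logic can be sketched with a very simple model. The assumptions are mine, not the report’s: all revenue comes from inference, the only cost counted is compute, and "margin" means gross margin on inference revenue. The function name and the grid values are hypothetical.

```python
def return_on_compute(inference_share: float, inference_margin: float) -> float:
    """Net return per $1 of total lifecycle compute (training + inference).

    inference_share:  fraction of lifecycle compute spent serving inference.
    inference_margin: gross margin on inference revenue, so that
                      revenue = inference_cost / (1 - margin).
    """
    if not 0.0 <= inference_share <= 1.0:
        raise ValueError("inference_share must be between 0 and 1")
    if not 0.0 <= inference_margin < 1.0:
        raise ValueError("inference_margin must be in [0, 1)")
    inference_revenue = inference_share / (1.0 - inference_margin)
    return inference_revenue - 1.0


# Illustrative grid: rows are inference share, columns are inference margin.
for share in (0.3, 0.5, 0.7):
    row = "  ".join(
        f"{return_on_compute(share, margin):+6.0%}" for margin in (0.25, 0.5, 0.75)
    )
    print(f"inference share {share:.0%}: {row}")
```

In this toy model a lab that spends half its compute on training needs a 50% inference margin just to break even on compute alone, before salaries, data or free-tier users. That is the pressure behind the push for new revenue streams.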

Hence why OpenAI has to wildly pivot to apps and ads to ever become profitable, even betting on an Apple-esque hardware device and multiple risky acquisitions. That sort of revenue diversification smells like desperation to me. OpenAI and xAI will burn so much cash in the 2026-2030 period that business models with more of an Enterprise AI focus, like Anthropic, Databricks and Cohere, will do much better, I think.

Nor do I think the AI browser battleground is a big deal in the big picture. Maybe for Perplexity’s future it is though.

Is OpenAI really going to compete with Google in Search?

So far in 2025 I’m surprised how little impact OpenAI has had in search to be honest.

-

By August 2025, ChatGPT served 755M monthly users, giving it ~60% of the AI search market.

-

Google has implemented AI Mode, AI Overviews and new kinds of search so well that it’s hard to think search in ChatGPT is going to be a major player here. In 2024 that didn’t look like the case.

The drop wasn’t drastic enough to hurt Google’s bottom line:

-

Semrush data shows Google’s global search traffic fell ~7.9% year-over-year, the first significant dip in decades, even as it retained ~90% global share. Similarweb data shows Google search visits down 1-3% YoY throughout H1 2025, with Bing (-18%) and DuckDuckGo (-11%) also declining.

Given how Google has been able to scale Gemini and how popular tools like NotebookLM have become, Alphabet is looking more like the Generative AI winner than an incumbent now at the close of 2025. OpenAI had a window of opportunity but seems to have squandered it (again I disagree with the slant of the UK-based report). I’m of the opinion that even Grok might have a better chance of becoming a legit Search competitor in the medium term.

If a U.S. recession hits in 2026, paid AI adoption is likely to keep decreasing. OpenAI’s traffic lead may not look as substantial a couple of years down the line as it did in 2024 if just under 2% of users are converting to paid ChatGPT plans. They will also have more reasons to churn in the years ahead.

Why did AI spending fall in September, 2025?

The economic impacts of massive capital allocation in AI Infrastructure, AI talent and a weakening labor market and booming stock market might actually not be a great combination.

Is Social Commerce on Chatbots really going to be a thing?

OpenAI is introducing apps and a payments protocol, thinking it’s the next TikTok of social commerce. But it’s not a consumer-apps-native company; it comes from the engineering background of being a research lab. It doesn’t understand a lot of the mechanics of what it is attempting to do and, like Meta and Google, which have sought to become relevant in social commerce, may end up falling flat. There are reasons why social commerce and live streaming work in Asia but not so well in the West.

This will mean OpenAI isn’t just competing with Google, but now with Meta and Amazon directly too. Competing with companies with cash cow business models. Even competing with Apple in AI hardware. This is not how you make friends.

Again Nathan’s report is oddly bullish about OpenAI’s approach and chances:

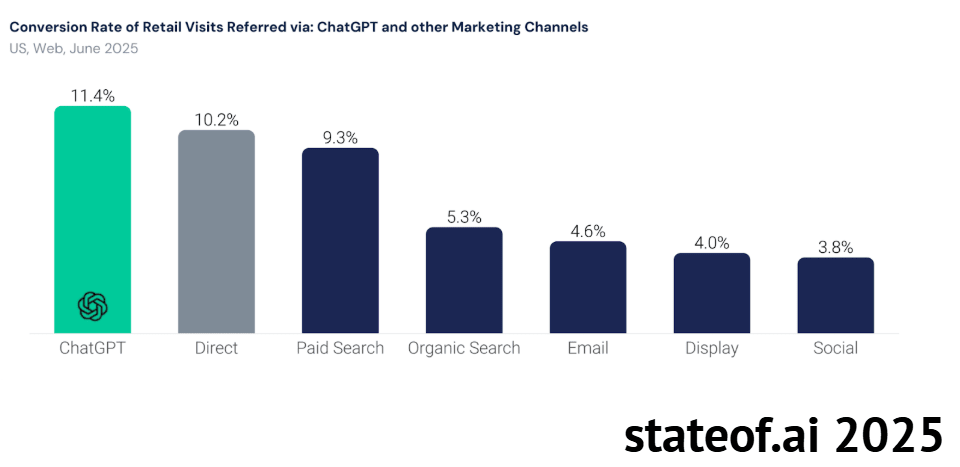

According to Similarweb data, retail visits referred by ChatGPT now convert better than every major marketing channel measured. Conversion rates rose roughly 5pp YoY, from ~6% (Jun ’24) to ~11% (Jun ’25). Although AI referrals are still a smaller slice of traffic, they arrive more decided and closer to purchase. Retailers must adapt by exposing structured product data, price and delivery options, and landing pages tailored to AI-driven intents. In fact, ChatGPT recently implemented Instant Checkout with Etsy and Shopify, and open-sourced their Agentic Commerce Protocol built with Stripe, to enable developers to implement agentic checkout.

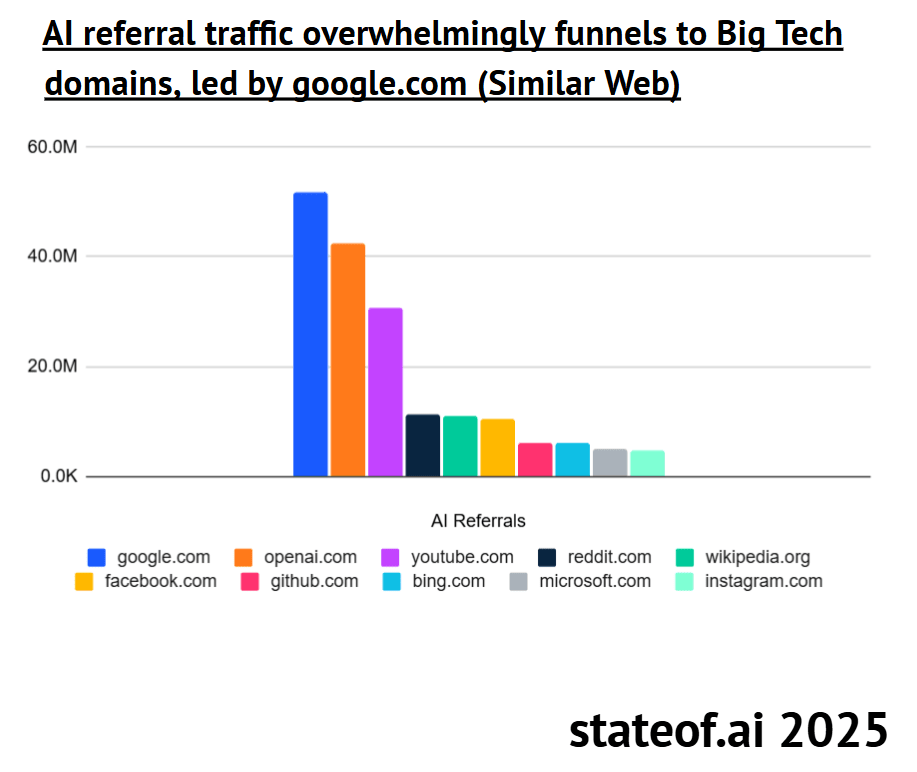

AI Referral Traffic Hints at OpenAI already becoming BigAI

But as new chatbot competitors emerge and scale their offerings, is that traffic relevance in AI referral traffic going to be sustainable for OpenAI? As Gemini and Grok increase in popularity what does that do to ChatGPT’s retail hub and app (Super App) experiments? You can have traffic like a Super app and still have no clue how to build a WeChat. In fact the world is against you being able to pull it off.

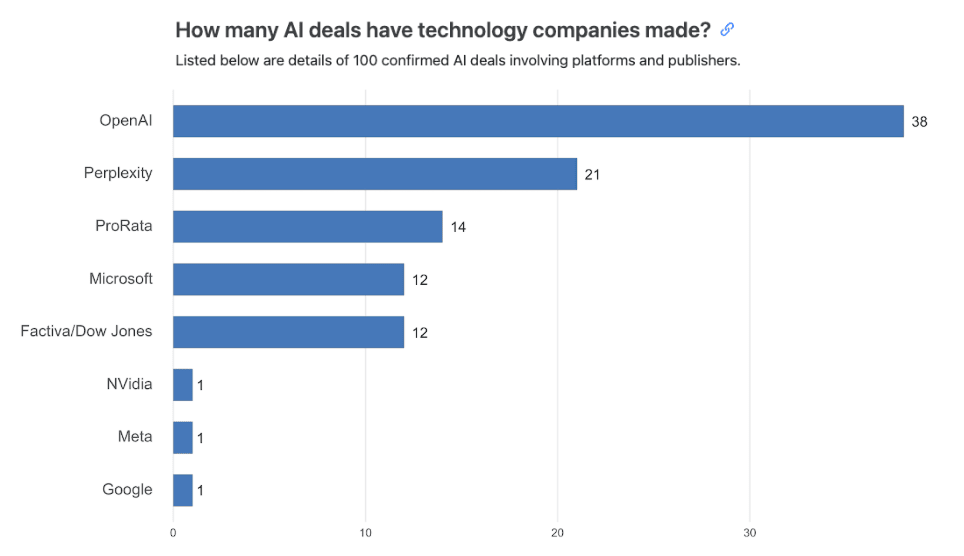

Journalism has Caved to OpenAI

The lure of traffic though does crazy things to businesses and industries just trying to survive.

-

News: Over 700 news brands have signed AI deals, including the Washington Post, WSJ, Guardian, FT, The Atlantic, Condé Nast, and even NYT ($20-25M Amazon deal) (as they continue to sue OpenAI).

-

Music: Hallwood pens deal with top-streaming “creator” on Suno, Grammy winner Imogen Heap releases AI style-filters for fans to remix.

-

Video: AMC Networks formally embraces Runway AI for production (first major cable network to do so).

-

Publishing: Microsoft & HarperCollins deal for AI training (with author opt-outs).

Sam Altman is taking a Donald Trump approach to the art of the deal:

If media publishers are relying on another tech company for fairness and steady traffic, as the Ads turn up they might have another thing coming if Internet history is anything to go by.

OpenAI’s Inflated AI Infrastructure Scheme

Stargate: may the FLOPS be with you

Some history:

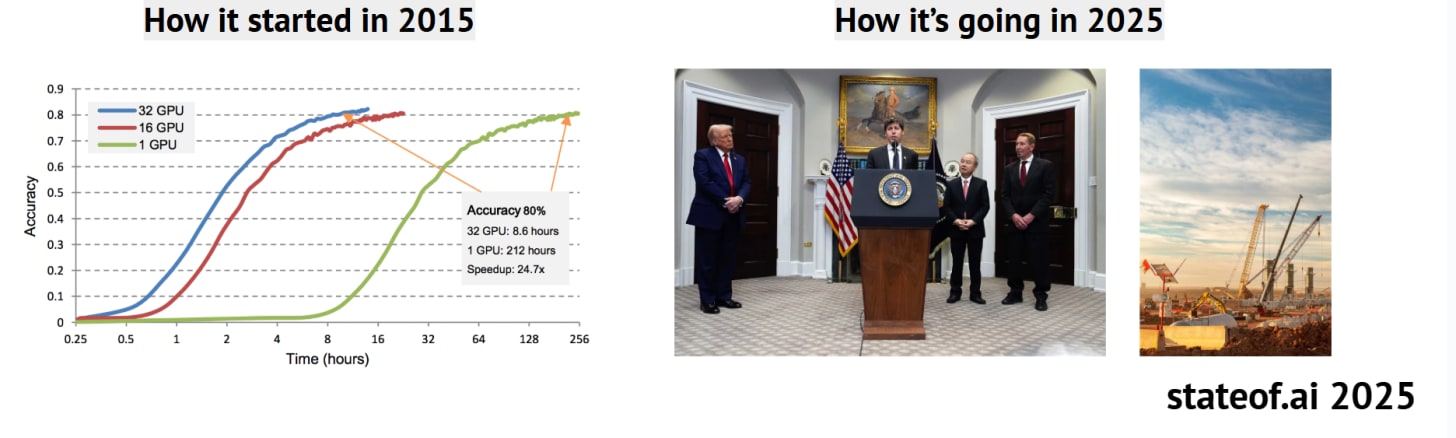

10 years ago, Baidu, Google and others had shown early scaling laws whereby deep learning models for speech and image recognition converged faster as more GPUs were used for training. Back then, this meant using up to 128 GPUs. In January this year, Sam Altman, Masayoshi Son, Larry Ellison and President Trump announced the Stargate Project, a gigantic 10GW-worth of GPU capacity to be built with $500B in the US over 4 years. This equates to over 4 million chips! The buildout is to be funded by SoftBank, MGX, Oracle, and OpenAI.
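For a sense of scale, the three Stargate headline numbers above can be divided into each other. This is a rough sketch that takes the quoted figures at face value. Since the chip count is "over 4 million", the per-chip results are upper bounds, and the power figure is all-in facility power (cooling, networking, overhead), not the rating of a single chip.

```python
# Headline figures quoted above, taken at face value.
total_power_w = 10e9        # 10 GW of capacity
total_budget_usd = 500e9    # $500B over 4 years
chip_count = 4e6            # "over 4 million chips" (lower bound)

# Per-chip figures are upper bounds because chip_count is a lower bound.
watts_per_chip = total_power_w / chip_count
capex_per_chip = total_budget_usd / chip_count
capex_per_gw = total_budget_usd / (total_power_w / 1e9)

print(f"all-in facility power per chip: {watts_per_chip:,.0f} W")
print(f"all-in capex per chip: ${capex_per_chip:,.0f}")
print(f"capex per GW: ${capex_per_gw / 1e9:,.0f}B")
```

So the announcement implies roughly $50B per gigawatt, which is a useful yardstick when reading the smaller sovereign Stargate deals quoted in megawatts.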

OpenAI is going to need more AI Infrastructure and compute than they can afford, which means a lot of debt. Still, it’s impressive how far they have come in a decade:

They will need to keep raising absurd amounts of cash and debt to even allow for their plans to materialize. Which makes their approach to Stargate feel more WeWork and Napster than anything that has come around since.

U.S. allied nations are being sold this with a plate of Sovereign AI.

Energy-rich nations are grabbing their ticket to superintelligence by partnering with OpenAI’s astute sovereign offering: a formalized collaboration to build in-country AI data center capacity, offer custom ChatGPT to citizens, raise and deploy funds to kickstart domestic AI industries and, of course, raise capital for Stargate itself.

It’s like countries giving donations for Nvidia GPUs hoping to keep up in the AI arms race. Somehow OpenAI is being carried by that National geopolitical FOMO. It’s getting painful to watch:

-

Stargate UAE is the first deployment of a 1GW campus with a 200 MW live target in 2026. Partners include G42, Oracle, NVIDIA, Cisco, and SoftBank.

-

Stargate Norway comes second and is a 50/50 joint venture between UK’s Nscale and Norway’s Aker to deliver 230 MW capacity (option to +290 MW) and 100,000 GPUs by end-2026.

-

Stargate India is reportedly in the works for 1 GW as OpenAI expands and offers a cheaper “ChatGPT Go”.

The report goes on to praise the transformation of the likes of Broadcom, Oracle and others in the AI Infrastructure and Semiconductor boom. Both are performing along with TSMC as if they were part of Mag 7 this year as well. We won’t even get into OpenAI’s debt and circular financing or Nvidia’s involving Oracle, AMD and others since we’ve covered it at length.

Still, the U.S. and its hyperscalers are always at capacity, so more datacenters is the order of the day in 2025.

AI labs target 2028 to bring online 5GW scale training clusters

Anthropic shared expectations that training models at the frontier will require data centers with 5GW capacity by 2028, in line with the roadmaps of other labs. The feasibility of such endeavors will depend on many factors:

-

How much generation can hyperscalers bring behind-the-meter? At such scale, islanding will likely not be practical, requiring data centers to tap into grid assets.

-

How quickly can players navigate the morass of permitting and interconnection? While reforms are underway, connection timelines for projects of this magnitude can take many years. Hyperscalers may skip queues through lobbying efforts and demand response programs, where they curtail draw during peak periods (a recent Duke study projects 76GW of new availability with curtailment rates at 0.25%).

-

What level of decentralization can be achieved? Many labs continue to pursue single-site campuses, yet distributed approaches are also advancing rapidly.

-

How well can hyperscalers navigate talent and supply chain shortages? Attempts to alleviate power infrastructure and skilled labor bottlenecks through the massive mobilizations of capital can overload the risk-appetite of supporting parties.

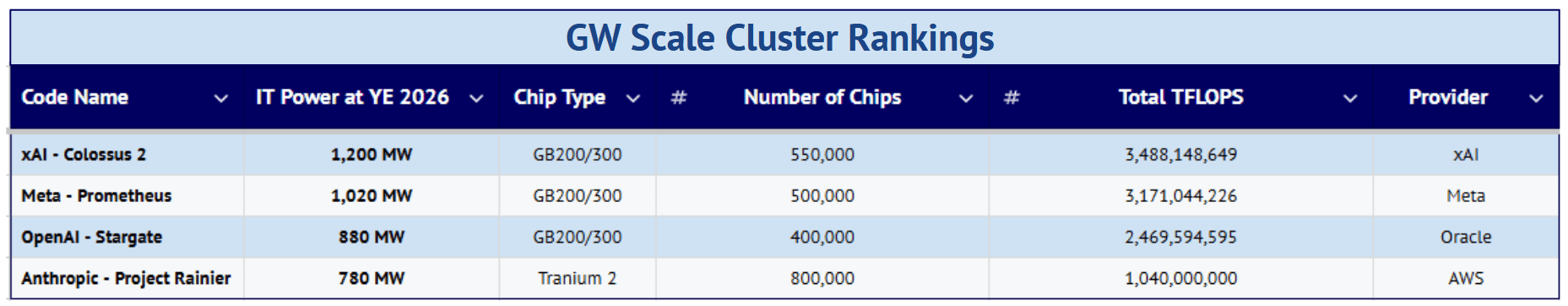

We do know that AI datacenters are also getting bigger, and xAI’s project is beyond the norm here in speed and size. Most of the $20 Billion funding round, around $18 Billion, goes directly to this:

Measuring contest: planned ~1GW scale clusters coming online in 2026

-

Thus the demand for compute is enormous and only getting bigger.

This is the central theme of AI in 2025: the AI Infrastructure side is dominating.

-

xAI – Colossus 2

-

Meta – Prometheus

-

OpenAI – Stargate

-

Anthropic – Project Rainier (this is really Amazon’s)

-

Microsoft – Fairwater

The size and scope of the AI datacenters coming online, plus the GPUs required to keep them up to date, is a huge investment that will need to be ongoing. It is likely to keep raising capex until even the richest corporations have to take on debt.

Indeed, a certain amount of xAI’s, OpenAI’s, Oracle’s and even Meta’s AI Infrastructure is funded by debt, SPVs and third-party debt deals.

It would appear that by 2028 the U.S. already hits its energy wall with regards to datacenters.

SemiAnalysis projects a 68 GW implied shortfall by 2028 if forecasted AI data center demand fully materializes in the US.

-

As an emerging pattern, this will force firms to increasingly offshore the development of AI infrastructure. Since many of the US’ closest allies also struggle with electric power availability, America will be forced to look toward other partnerships – highlighted by recent deals in the Middle East.

-

Projects that are realized on American soil will place further strain on the US’ aging grid. Supply-side bottlenecks and rapid spikes in AI demand threaten to induce outages and surges in electricity prices. ICF projects residential retail rates could increase up to 40% by 2030. These factors could further contribute to the public backlash directed at frontier AI initiatives in the US.

The U.S. grid is really a huge bottleneck for American leadership in AI continuing into the foreseeable future. The capex is thus a global endeavor, hence the geopolitical leverage of trade and other kinds of pressure. I will note the Trump Administration’s presser on Taiwan here.

China’s Open-weight LLM and Agentic AI push is real in 2025

Although the report seems to downplay it.

Chinese labs have yet to advance the frontier, but compete in the open-weight domain

Chinese labs like Alibaba, DeepSeek, Moonshot, and MiniMax continue to release impressive open-weight models. A capability gap emerges between these models and most American open-source alternatives.

-

US open model efforts have disappointed. OpenAI’s open-weight models underwhelmed with performance trailing far behind GPT-5.

-

The restructuring of Meta’s “Superintelligence” team has cast doubts on their commitment to open-sourcing at the frontier. Other teams like Ai2 lag far behind in terms of funding. Although they recently landed $152M from NVIDIA and NSF, that figure pales in comparison to even OpenAI’s initial grant from 2015.

-

Conversely, Chinese organizations continue to push the envelope, while publishing troves of new algorithmic efficiency gains.

In mid-August another Nathan, Nathan Lambert, tried to rank some of these Chinese open-weight LLM players.

-

Qwen

-

DeepSeek

-

Moonshot AI

-

Zhipu AI (now called just Z.AI)

-

MiniMax

-

Tencent

-

ByteDance

-

Baidu, and so forth.

The report makes an interesting comparison of the pros and cons of Chip exports to China.

It doesn’t really matter, since China decided to accelerate Huawei, SMIC and its semiconductor efforts while rather easily smuggling chips via Singapore and Malaysia, or just renting them cheaply in the U.S. itself from the likes of CoreWeave, Nebius, etc.

The U.S. has huge advantages in capital, top-tier AI talent (some of whom were poached from China), corporate backing, superior semiconductor supply chains, better AI chips, Venture Capital funding and the Trump Administration. However, China has plentiful engineers, a harder-working culture, more AI talent overall, more experience building and scaling AI apps, more data, a better energy grid and other advantages of the CCP’s long-term planning and economic leverage.

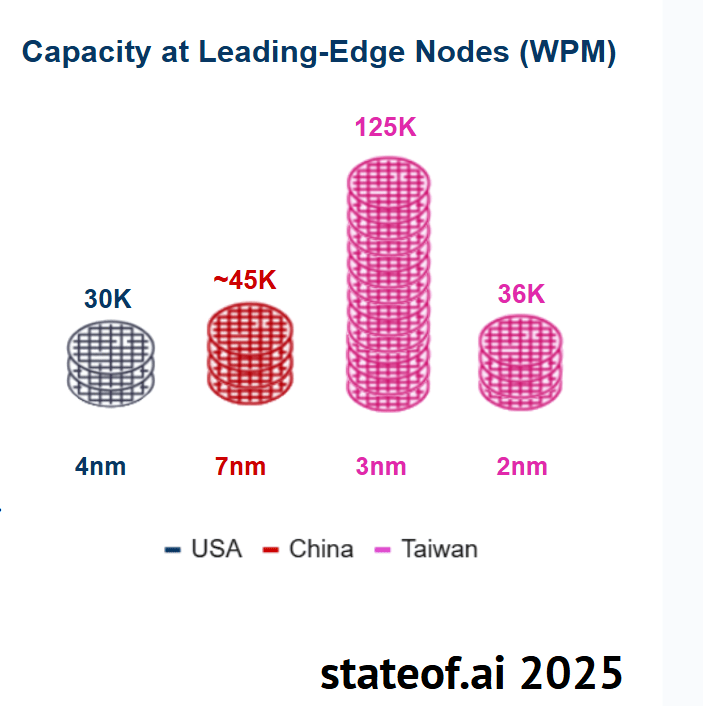

Taiwan is the key AI Supremacy Bluechip Asset

But by far the biggest advantage America has is TSMC and Taiwan, which enable Nvidia to thrive and sell so many AI chips to the world.

Without Taiwan’s semi supply-chain and TSMC, this all falls apart. [see Slide 146].

Taiwan continues to reign supreme in terms of leading-edge manufacturing capacity, maintaining massive advantages in both generation and volume.

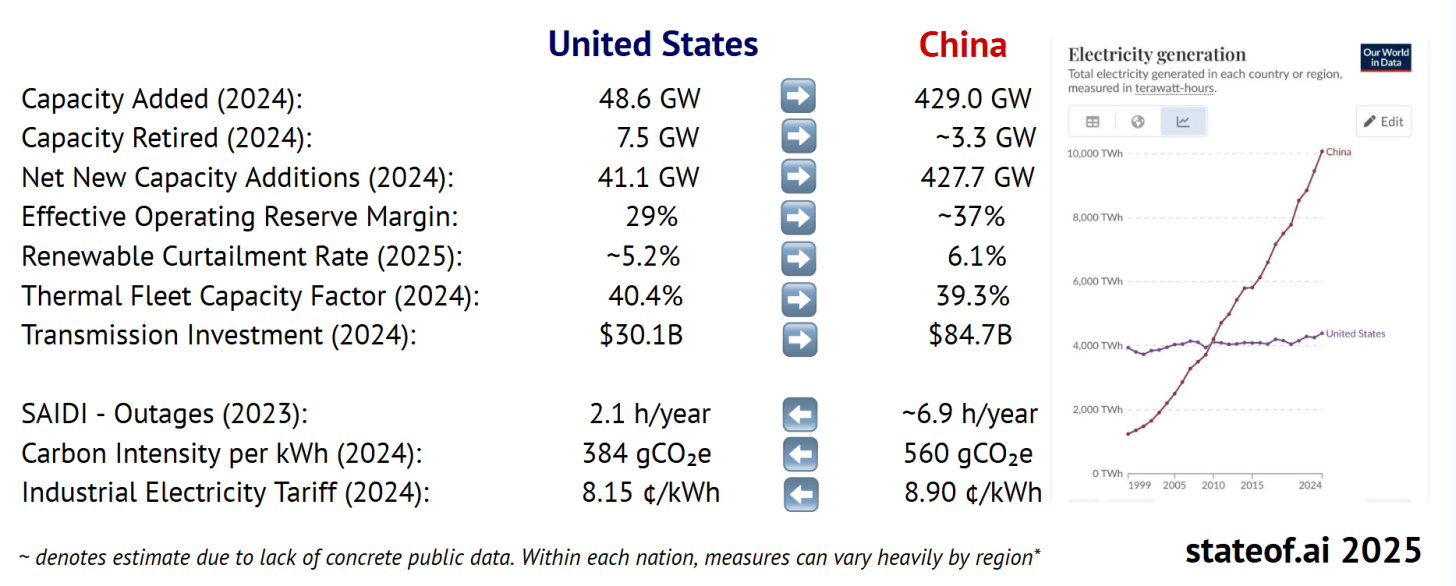

China’s energy grid is easily a decade ahead of the U.S. too. For all the showboating of the U.S. in AI Infrastructure, why the rush when you can’t even power the future?

China’s Power Grid and Green Energy Supply is a Huge Advantage in a Few Years

-

China’s energy advantage is their AI Supremacy blue chip card.

-

So can China catch up in semiconductors and AI chips faster than the U.S. can catch up in power and energy? That is the question for the 2030s.

-

China’s Open-weight models are likely now just 3-6 months behind America’s leading closed-source models. In 2024 it was closer to 9 months.

Nvidia’s “Sovereign AI” Ploy really is U.S. Nationalism

Building your datacenters in their countries is not empowering them to be independent of your technology. Getting them to buy your AI chips and weapons is well, part of the American way now.

The U.S. has become way more coercive and geopolitically imperialistic here in Trump’s second term. Promising allies and countries their own AI stack is fairly deceptive (and places like the Middle East and the UK are dumb enough to fall for it):

Nations are seeking “sovereignty” for the same reason they have domestic utilities, manufacturing borders, armies, and currencies: to control their own destiny. Yet, there is a real danger of “sovereignty-washing.” Investing in AI projects to score political points may not always advance strategic independence.

The idea that even Europe “controls its own destiny” in AI is getting absurd as Germany and France lag behind. As do Japan and India of course; it’s really China and the U.S. that count in this.

The ploy is big business for Nvidia though, and now they are so aligned with Trump that it’s a dangerous duo (maybe the most shocking thing that occurred in 2025 in the geopolitics of AI):

Jensen Huang continues to plead with nations to increase their “Sovereign AI” investments. Already, this global campaign has been rewarded. During their recent Q2 FY 2026 earnings call, CFO Colette Kress claimed NVIDIA was on “track to achieve over $20B in sovereign AI revenue this year, more than double that of last year.”

Let’s remember that 2025 is early days of this meta trend.

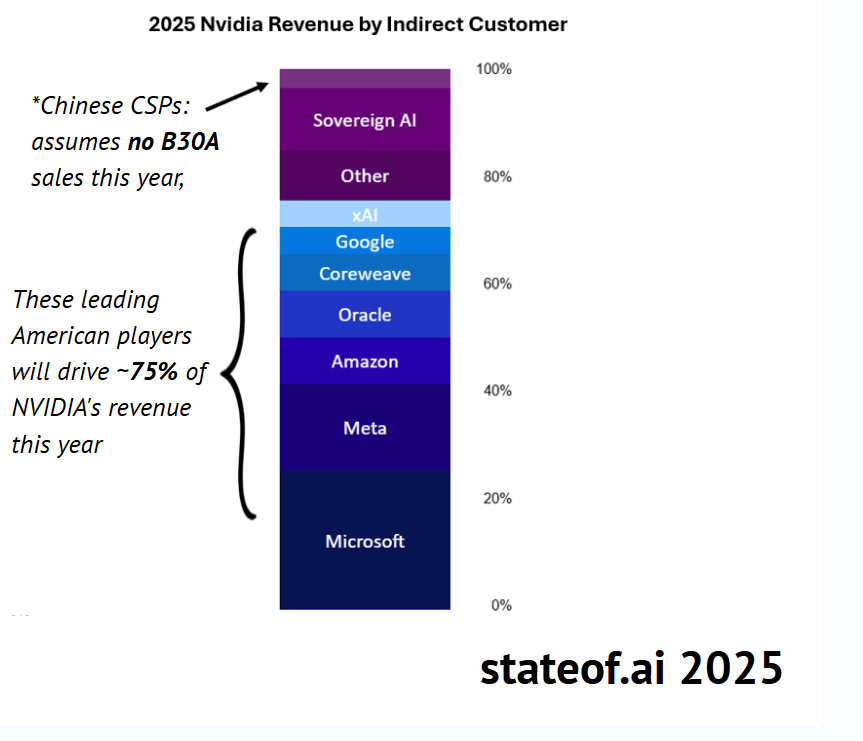

Nvidia’s customers at the end of the day are just BigTech (in particular Microsoft). NVIDIA’s data center revenue continues to be dominated by American cloud and AI giants, who now make up nearly 75% of NVIDIA’s total data center sales.

-

NVIDIA’s data center revenue projection for the calendar year 2025 floats between ~$170B-$180B, depending largely on the stringency and timing of upcoming export control decisions from both the US and China.

-

While hyperscalers ordered more chips in 2025, the two clouds with custom chips programs, Amazon and Google, dedicated a smaller percentage of capital expenditures toward NVIDIA purchases.

-

Direct purchases overwhelmingly flow to OEM partners such as Dell, SuperMicro, Lenovo, and HPE.

Who are the Neo Clouds that Rent GPUs?

-

CoreWeave

-

Nebius (Netherlands, really a Russian company)

-

Crusoe

-

Lambda

-

Nscale (UK)

-

About a dozen crypto miners pivoting to GPU renting

NVIDIA’s circular GPU revenue loops

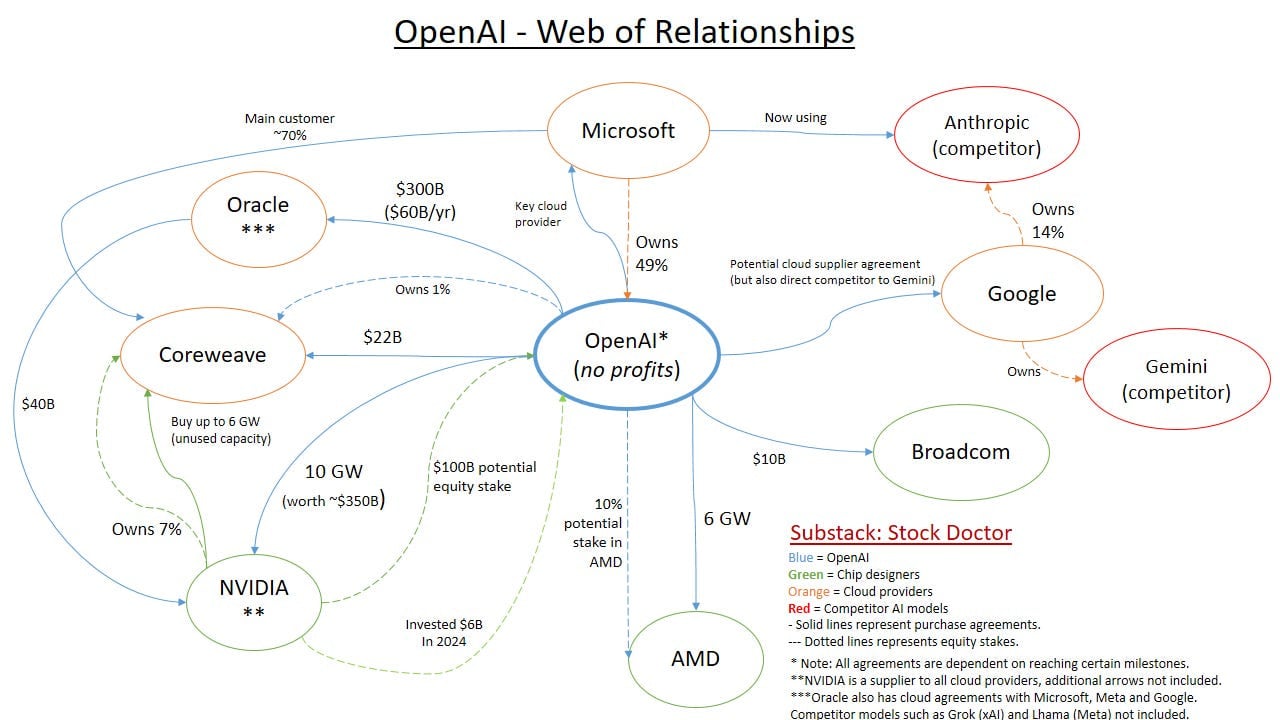

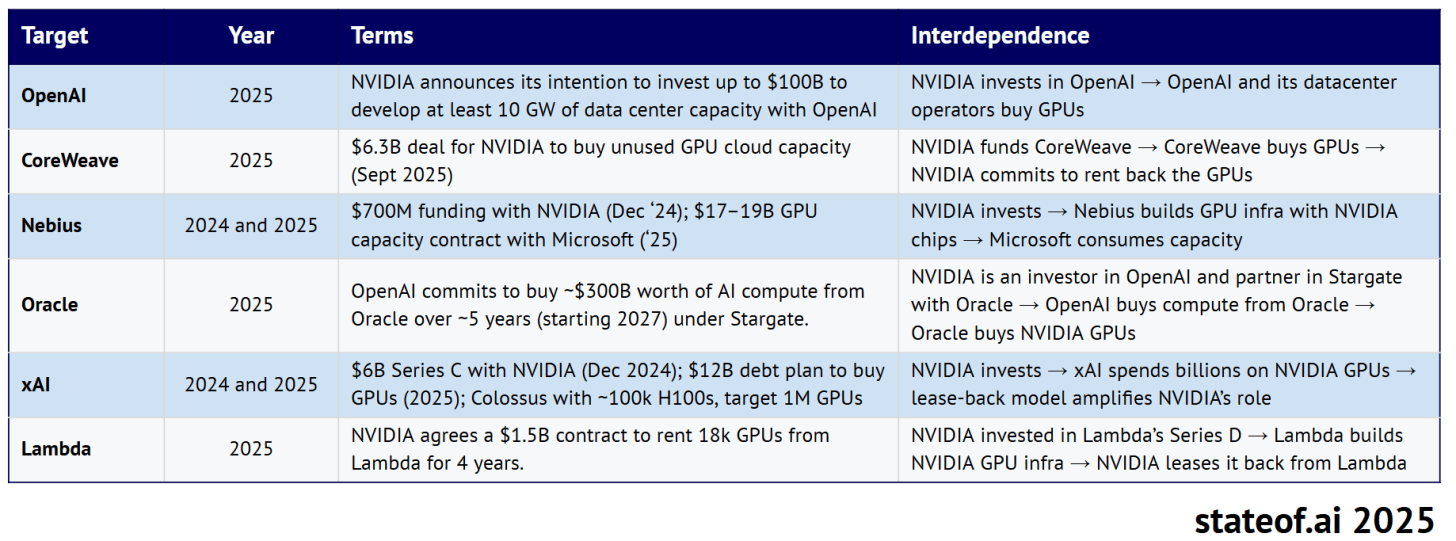

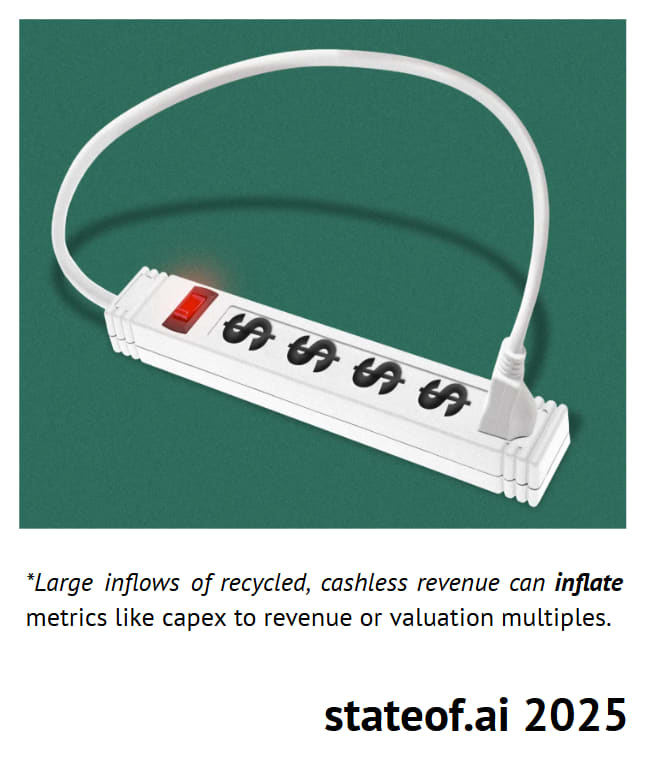

With OpenAI and CoreWeave you really see Nvidia’s circular and vendor financing scheme (that also accelerates the demand for compute).

Which is part of the vibe of 2025, a time of debt, SPV deals, and partner financing like we’ve never seen before.

-

The middle of the State of AI report is really where the interesting parts are found for me.

What it also does is raise the shareholder anticipation and capex frenzy which is all being rewarded by the U.S. AI driven bull-market.

Between U.S. capitalism’s own mechanics and the push from the U.S. administration, trying to “win in AI” has become political. If they are wrong, the capital allocation to lead in science, innovation and future technology is also compromised.

Full extent of AI Infrastructure Debt and Leverage is not fully known in 2025

We do know that debt will accelerate as the AI arms race itself accelerates with major power and AI Infrastructure constraints.

Some of the biggest risk-takers in this regard appear to be:

-

OpenAI with Softbank

-

Oracle

-

Meta

-

xAI

-

CoreWeave

These include major compromises by U.S. firms to the Middle East and the oil money of the UAE and Saudi Arabia, including selling them weapons and first access to AI chips.

Petrodollars are bankrolling the American AI dream

From the position of Europe, the Americans are degrading themselves in an effort to keep ahead of China.

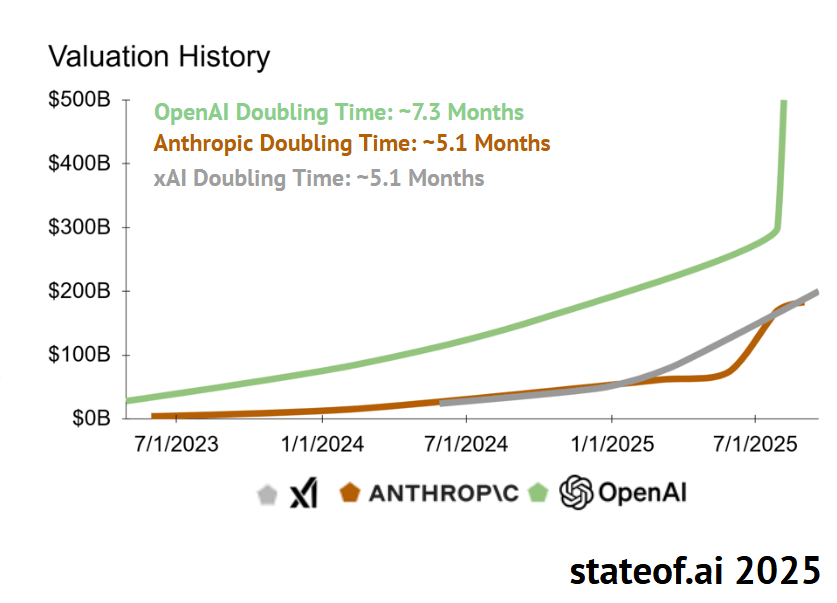

According to Dealroom:

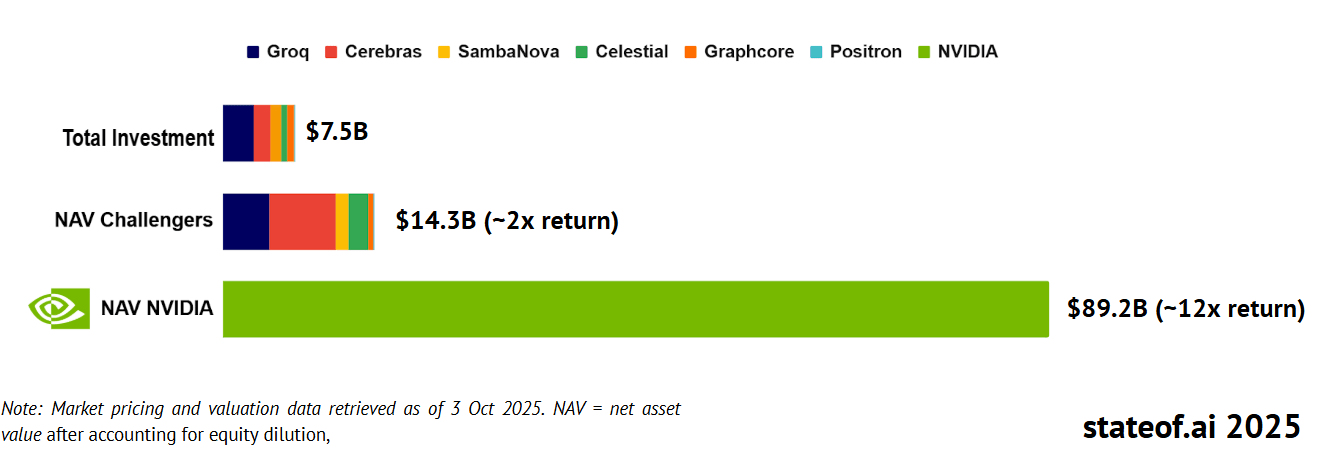

Nvidia’s monopoly in AI chips, and its dependence on TSMC, continues in 2025:

-

AMD is generally considered Nvidia’s only real competitor and it doesn’t have much marketshare at all.

-

China’s speculative competitor is considered to potentially be Huawei.

Nvidia’s younger “challengers” are generally considered to be:

-

Cerebras (which was supposed to IPO but put on the brakes recently).

-

Groq

-

Graphcore

-

SambaNova

-

Cambricon

-

Habana

-

Moore Threads

-

Biren Technology

-

MetaX

-

Enflame

The AI Chip Monopoly of Nvidia sets the U.S. tone for the 2020s

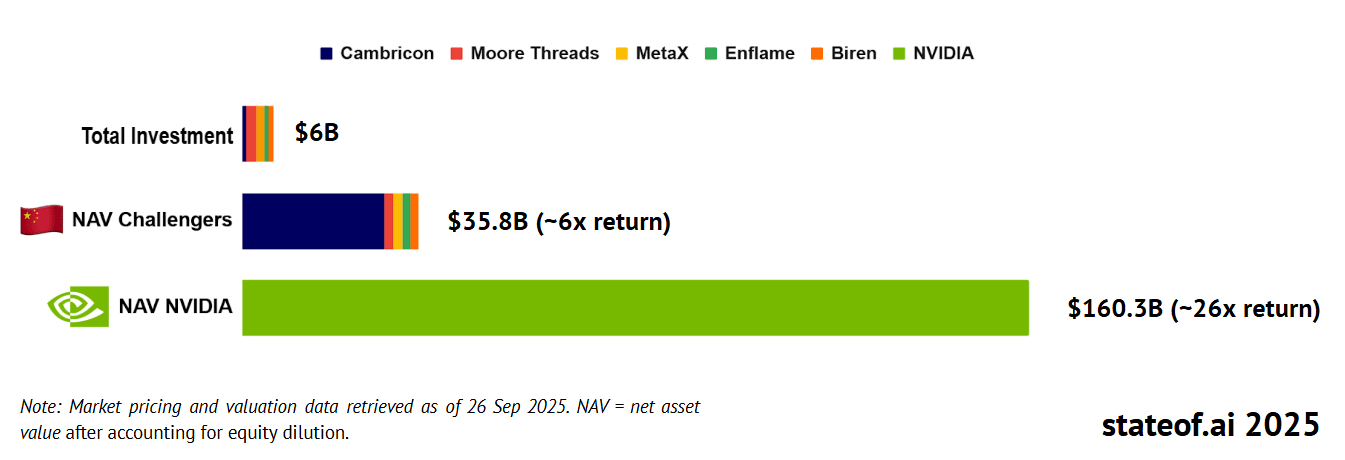

China’s AI chip startups aren’t faring any better:

Cambricon Shows Momentum

Cambricon Technologies is a partially state-owned publicly listed Chinese technology company headquartered in Beijing. So if Huawei doesn’t remain competitive, China has some other options.

Building on explosive 4,348% revenue growth in the first half of 2025, Cambricon has tallied strong orders through 2026. Cambricon, which reportedly sold just ~10K GPUs in 2024, will ship ~150K GPUs in 2025, with rumors that 2026 orders could reach 500K GPUs.

-

Huawei will be responsible for just ~62% of the XPU volume produced by Chinese firms in 2025. For reference, NVIDIA still controls 90%+ of the global XPU market.
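Percentage growth figures this large are easy to misread, so here is a small sketch converting the Cambricon numbers quoted above into plain multiples. The unit counts are the reported and rumored approximations from the text, not verified shipment data, and the helper name is my own.

```python
def pct_growth_to_multiple(pct_growth: float) -> float:
    """Convert 'grew by X%' into 'is now Y times the starting value'."""
    return 1 + pct_growth / 100


# Revenue growth quoted above for the first half of 2025.
revenue_multiple = pct_growth_to_multiple(4348)

# Approximate unit volumes from the text (the 2026 figure is a rumor).
units = {2024: 10_000, 2025: 150_000, 2026: 500_000}
unit_multiple_2025 = units[2025] / units[2024]
unit_multiple_2026 = units[2026] / units[2025]

print(f"H1 2025 revenue: {revenue_multiple:.2f}x the prior-year period")
print(f"2025 units vs 2024: {unit_multiple_2025:.1f}x")
print(f"2026 units vs 2025 (rumored): {unit_multiple_2026:.1f}x")
```

The takeaway is that the eye-catching percentage comes from a tiny base, and the rumored 2026 step-up is a far more modest multiple than the 2025 one.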

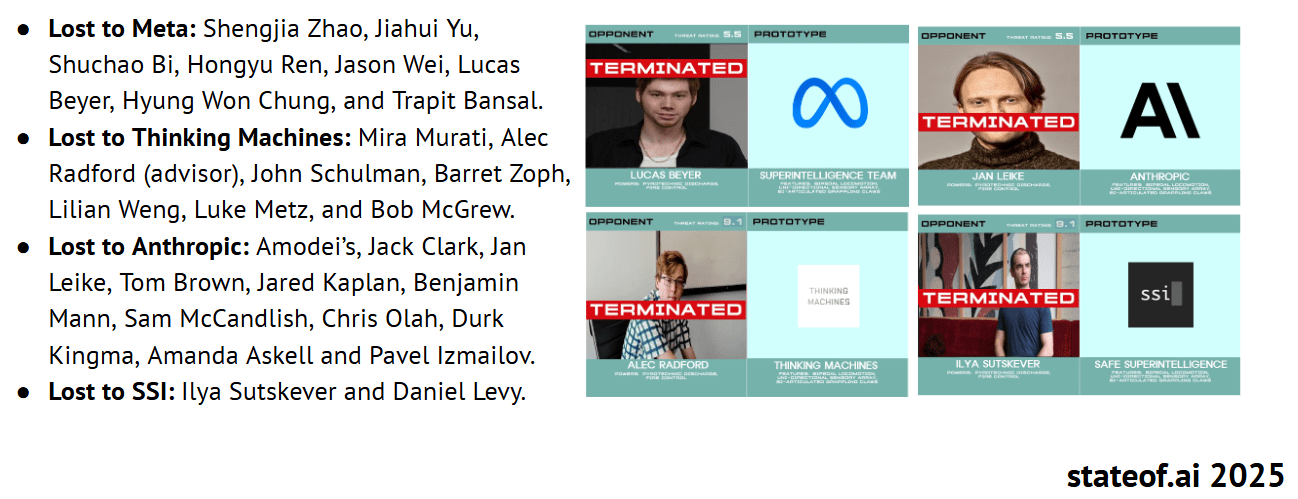

The Mass Migration Talent Wars

Meta’s poaching of AI talent was certainly one of the highlights of 2025 in Silicon Valley.

Amid the OpenAI mafia starting so many new AI startups, the churn of talent at OpenAI is starting to add up. (Ironically, its Chinese talent was easily poached by Meta with monetary tactics.)

Humanoids Became part of the AI Race in 2025

China flexed its robotics muscles here a lot:

-

China’s Unitree launched their R1 humanoid at $5,566, has >$140M annual revenue and is kicking off a ~$7B onshore IPO.

-

Hong Kong-listed UBTech booked ~$180M in 2024 revenue and targets 500–1,000 Walker-S deliveries in 2025 to automakers, Foxconn, and SF Express.

-

Outside China, Agility’s Digit is in a paid multi-year RaaS deployment with GXO Logistics, while Figure has raised $2.34B and Apptronik raised $350M to reach pilots. Tesla’s Optimus and 1X are still demo’ing.

U.S. Venture Capital’s Massive Allocation into AI

This is mostly what Air Street Capital does, so they would know a thing or two about it. Not that I’m bullish on Europe’s or the UK’s contribution to the cohort. This is all about U.S. capital outflows.

This isn’t just about capex. It is also about BigTech investing directly in AI startups, acquiring some, and quickly getting their IP and talent too.

Capital allocation on Generative AI is massive because it’s hitting on so many levels, even if capex in AI chips and AI Infrastructure is the one that stands out. There are many layers to this. Which AI startups Nvidia invests in actually matters in 2025 to shape the future, not just the partners or vendors it’s financing.

Nvidia has extremely important stakes in:

-

Reflection AI

-

Nscale

-

CoreWeave

-

Mistral

-

xAI and OpenAI

-

Crusoe

-

Cohere (basically the mini Anthropic of Canada)

-

Poolside

-

Lambda

-

Perplexity

You’ll notice Open-weight AI models, robotics and datacenter related AI startups really stand out.

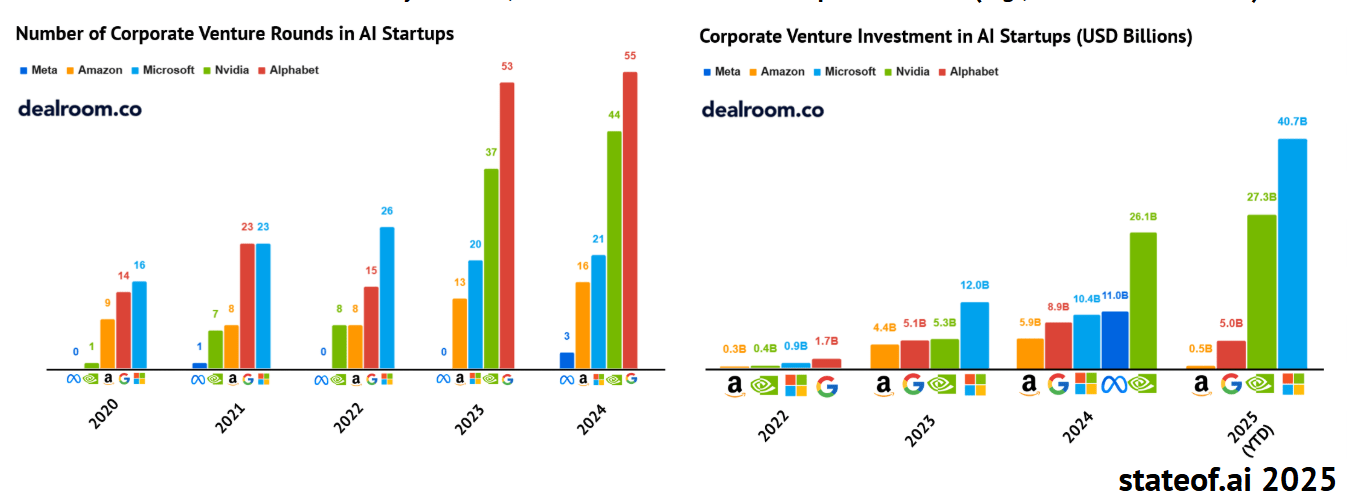

Gen AI Megarounds Make Up 90% of All VC Funding

Mega $250M+ rounds eat the lion’s share of private capital invested into GenAI.

So there isn’t just concentration in the markets, in BigTech, BigAI and who is funding them, but in the mega rounds and leverage BigAI have with incumbents pushing them higher. This is why the demand for compute is bound to keep going up at alarming rates. It’s engineered by capital dynamics and U.S. shareholder capitalism to go up, not because it is a legit AI boom, but because there’s so much capital.

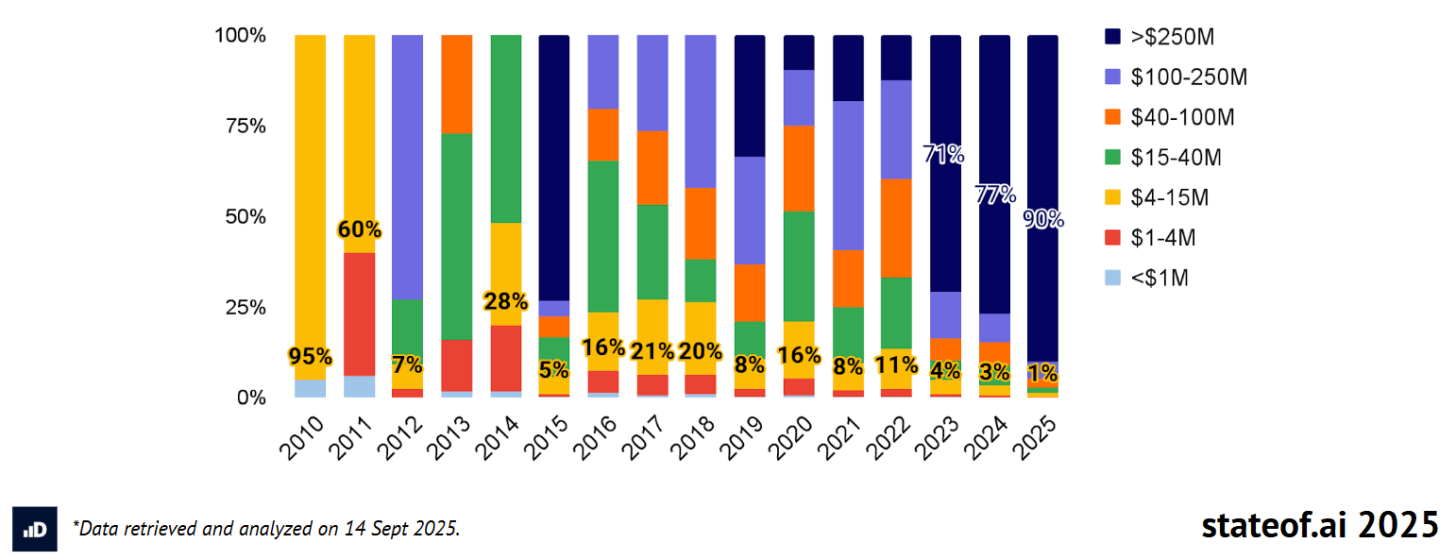

Private market valuations are also a major sign we’re in an AI bubble:

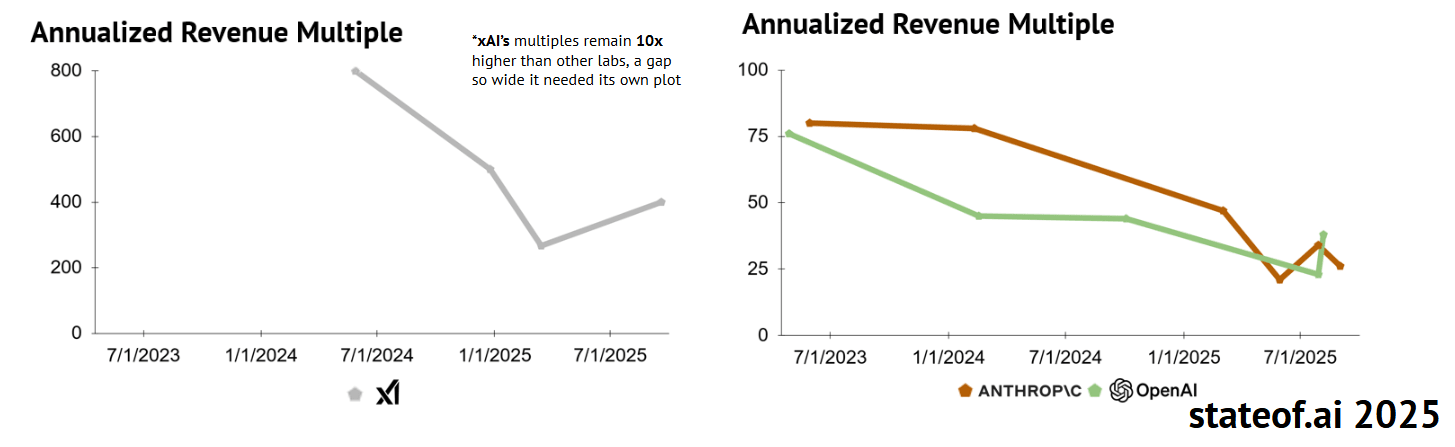

BigAI Private Market Valuation Insanity

At this rate BigAI will all be over $1 Trillion in market cap, much more if they rush to IPO in a bull-market. These are the BigThree of BigAI but there could be others who will join them.

2025 was the Year BigAI started to Scale

However bad their margins and AI Infrastructure needs for the future:

Anthropic’s annualized revenue again looks poised to ~10x YoY, while the slightly more mature OpenAI appears on track to ~3x YoY.

BigAI are growing as if they were “generational companies”. But then again, so are Google Gemini and Google’s other AI products. Few companies had a better 2025 in AI than Google or Anthropic. Alibaba with Qwen is certainly in the picture in terms of LLM innovation.

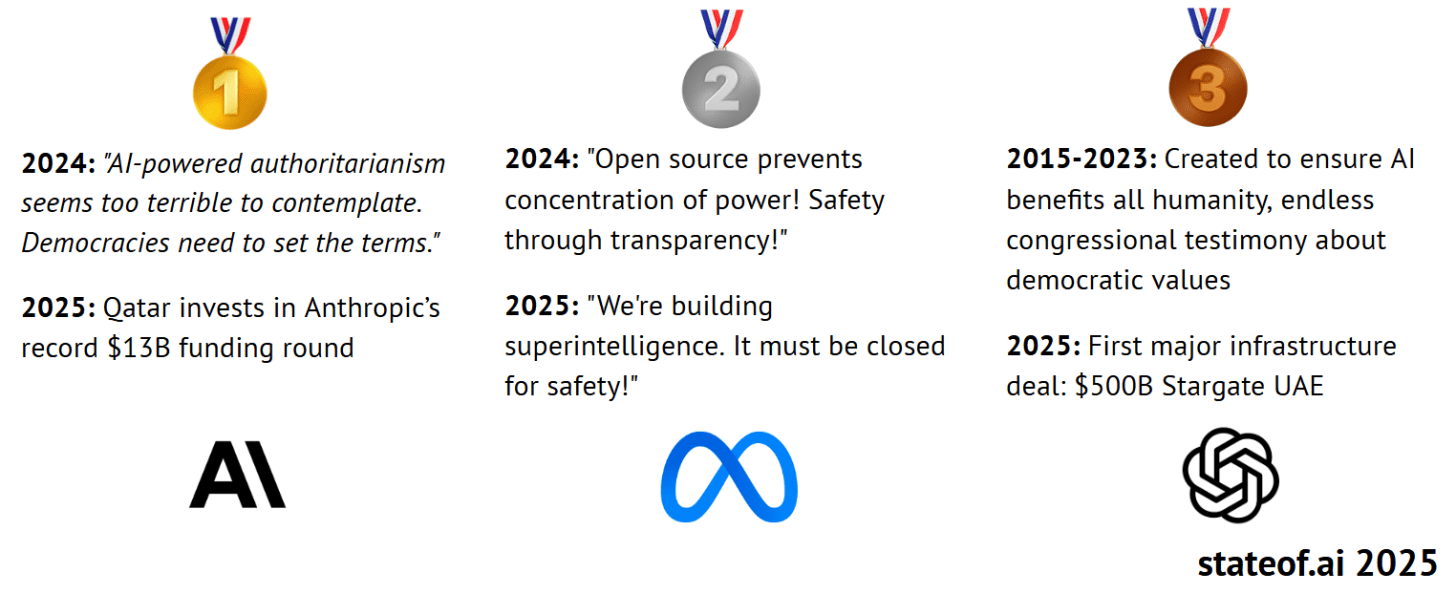

The Report Does Poke Some Fun at the Ideological Vibe Shifts of 2025

Never mind that what OpenAI has become is barely recognizable from its former mission, and that Sam Altman is pandering to global elites.

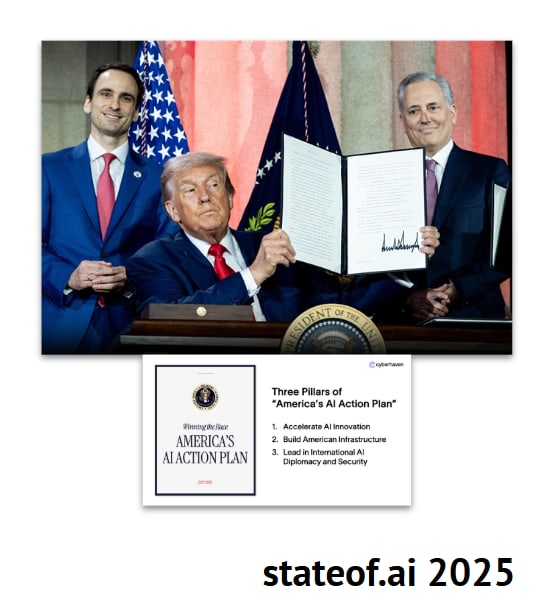

The AI Action Plan: America’s Grand AI Strategy

Trying to untangle U.S. politics and geopolitics and its impact on AI is a bit more difficult.

Suffice it to say it got a bit cringe, with an AI Action Plan that looked like it was written by ChatGPT (big surprise there).

The 23-page plan in some areas didn’t make a lot of sense in its objectives:

-

US tech stack exports: Executive Order 14320 establishes American AI Exports Program, an AI stack package (hardware, model, software, applications, standards) for allies and others.

-

AI infra build-out: Plan calls for streamlining permitting, upgrading the national grid, and making federal lands available to support and build data centers and AI factories.

-

Open source model leadership: US open source leadership is viewed as vital to US national security interests.

-

Rollback of AI regulations: Federal agencies may withhold discretionary AI spending from states with “onerous” AI regulations.

-

Protecting “free speech” in deployed models: Federal procurement policies updated – US will only procure frontier LLMs “free from top-down ideological bias.”

There is a lot of build-baby-build and “we must win” rhetoric from supposed China hawks, which makes Trump’s Friday statement so predictable (threats and tariffs).

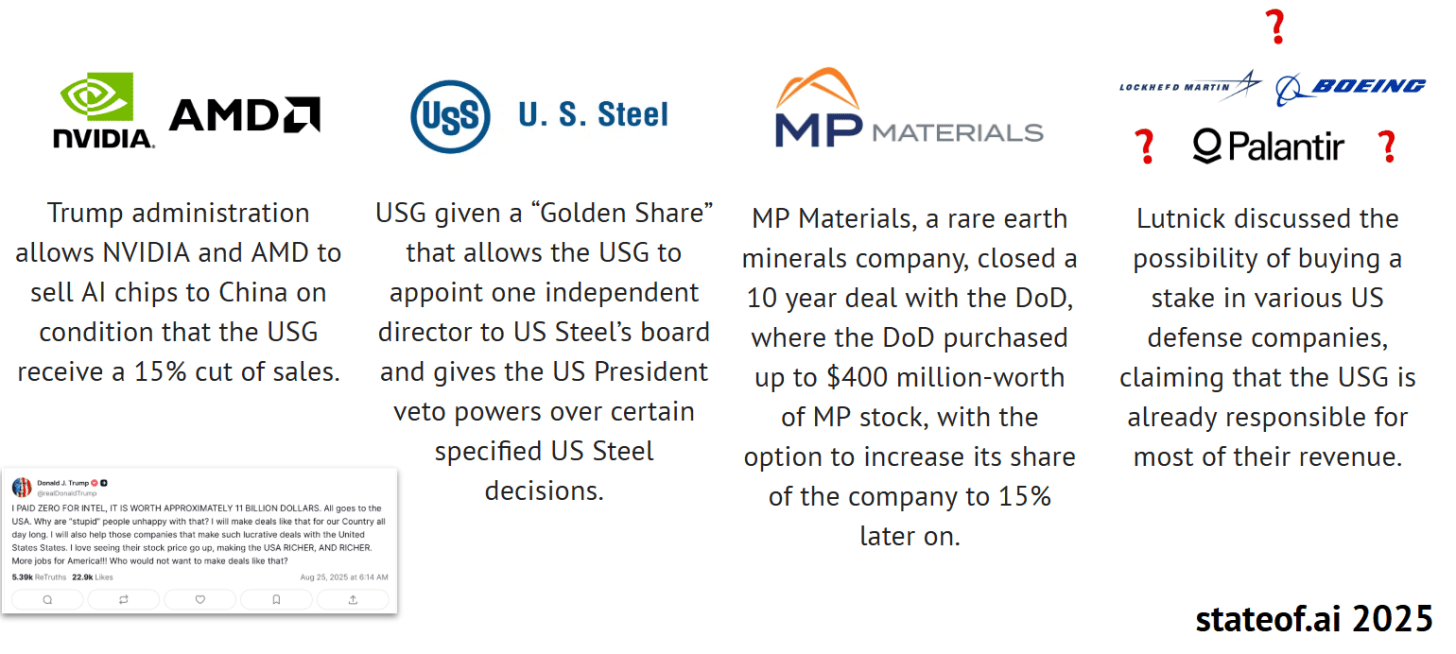

Trump’s Massive Industrial Policy Interventions

-

From the Intel stake to plans to build a U.S. Sovereign Wealth Fund, a Golden Dome, and a T-Dome with Taiwan, things are getting a bit weird.

The AI Action Plan mentions the need to fund “basic science” in AI, but “core” AI R&D is far below the $32B that experts have recommended the US invest by ‘26. This is foundational, non-commercial research – the type of research that is sometimes argued to be more transformative than that of the private sector.

The Trump Administration has also defunded science and curtailed immigration, which is how the U.S. gets the majority of its AI talent. Has this Administration done more harm than good? We’ll know in five or ten years. Or maybe a lot sooner than that.

In 2025, the U.S. basically vetoed International AI Governance, AI Treaties, and Global AI Safety Alignment. The Trump Administration walks to the beat of its own drum. The weakening of democracies could be an AI risk unto itself the way things are going.

The so-called U.S. federal agencies like the DOJ, FTC, and SEC have largely assumed the AI regulatory oversight role. Their main priority is making sure that companies do not overstate their AI claims (“AI-Washing”). But it’s hard to take them seriously, too, given recent history. The FTC requested information from Meta, OpenAI, and Google, but also from Snapchat, xAI, and Character.AI.

The Middle East Gulf Enters the AI Power Game with Trillion-Dollar Bets

The Trump Administration certainly seems to have friends in this part of the world. OpenAI, Oracle and Nvidia also seem to have the same network:

-

The UAE will import up to 500k Blackwell GPUs worth $15B through 2027. G42 will get 20% of the chips and 80% will go to the regional data centers of US tech giants, with each watching out for unintended Chinese usage.

-

In Saudi Arabia, the US agreed deals worth $600B: $142B of US defense equipment sales, $20B for US AI data centers, and $80B of investments into the region from Google, DataVolt, Oracle, Salesforce, AMD and Uber.

-

Beyond domestic projects, UAE sovereign funds pledged to finance a 1GW AI datacenter in France (costing €30-50B).

Trump’s trips to the Middle East and the U.K. in 2025 felt like AI geopolitical events of some importance.

U.S. Department of Defense (Department of War) Pivots to AI

In 2025 we saw a noticeable inclination of the DoD to support Peter Thiel-backed companies like Palantir and Anduril, as well as others including OpenAI, with major contracts. BigAI has been welcomed inside the U.S. military in a way that would have been unthinkable a few years ago.

-

US Army signed a 10-year enterprise contract with Palantir worth up to $10B, consolidating dozens of prior software deals into one platform.

-

Project Maven expanded to $1.3B through 2029, now supporting 20,000+ individual warfighters, double the base earlier in the year.

-

NATO procured Palantir’s Maven Smart System in just six months for all members despite feeling frosty with loosening US security guarantees.

-

DoD signed $200M ceiling contracts with each of OpenAI, Anthropic, Google, and xAI to explore frontier AI for command, cyber, and planning missions. This marked quite a vibe shift for AI groups that previously took a strong view that their models should not be used for defense purposes.

NATO fast-tracked its first alliance-wide AI system, Palantir’s Maven Smart System, as a central pillar of the Western defense industrial base. This caused Palantir’s stock to go up another 133% so far in 2025 without much of a revenue jump. Trump’s pet projects like the Golden Dome are an easy way for him to support the companies of his friends and political backers here.

Changing the name of the DoD to the DoW was, after all, Peter Thiel’s idea via the Anduril founder. It makes you wonder just who Trump and the VP represent exactly. The vibe shift in the military sounds like a made-for-Anduril homecoming (Anduril being the sort of company that a16z and Peter Thiel’s VC fund back):

The U.S. has moved from DARPA’s AlphaDogfight in 2020 and live AI-flown F-16 tests in 2022 to embedding autonomy into doctrine. Collaborative drones, swarming initiatives, and multi-domain contracts are now framed as essential to offset China’s numerical advantage, making uncrewed systems a core pillar of force design.

Thus 2025 was also a dangerous year for how AI is being used in the United States, both in politics and in national defense. I won’t go into the rise of fascism and authoritarianism implicit in this administration.

On the VC front, the wins were predictable:

-

The Air Force’s first Collaborative Combat Aircraft (CCA) prototypes (General Atomics’ YFQ-42A and Anduril’s YFQ-44A) took flight in 2025, with 1,000+ drones planned at ~$25-$30M each. FY25/26 budgets total $4B+ for Next Generation Air Dominance and CCAs.

-

The Replicator initiative targets thousands of cheap autonomous systems across air, land, sea within 24 months, backed by $1.8B FY24 funding as a direct hedge against Chinese mass.

-

Anduril scored major autonomy wins, including $250M for Roadrunner/Pulsar cUAS, $200M Marine cUAS contract, and an $86M SOCOM deal for autonomy software. The company is expanding its maritime autonomy XL UUV and hypersonic missile work.

-

Saronic too has a $392M OTA with the US Navy for autonomous boats.

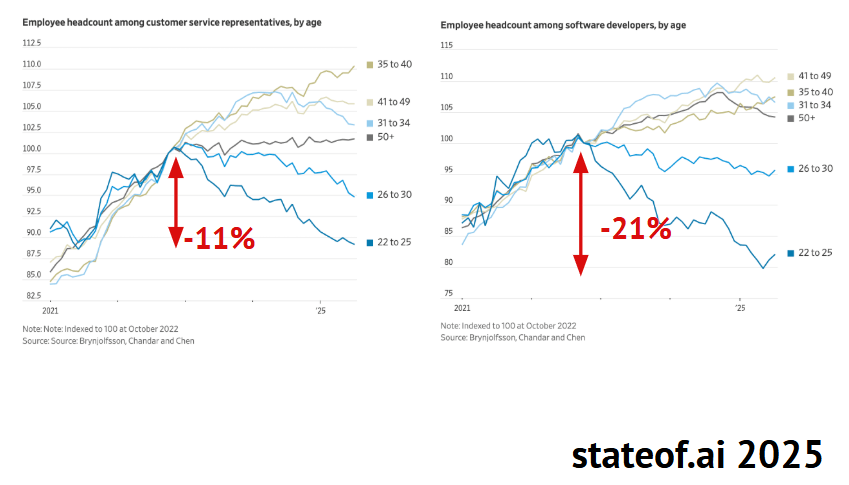

The Myth that AI is Squeezing Entry Level Jobs Persists

We certainly do have a tightening labor market as of September and October, 2025. But the impact of AI outside of SWEs is fairly limited.

That covers the time we have for this report. You can read the Safety section and the AI research section at the beginning, which we more or less skipped over.

Of course my opinion differs from the slant of the AI report on some points, but overall it was the best State of AI Report we’ve seen yet in eight years.

I encourage you obviously to read the original source.

❓ Feedback 🗳️

Thank you to on Ghost for the idea:

Kindly comment if something stood out for you.

For more dialogues, check out my Chat:

Stuart Winter-Tear and Henrik Göthberg on LinkedIn.

This LinkedIn post came up in search on this topic.

“}]] Read More in AI Supremacy