Hello Engineering Leaders and AI Enthusiasts!

This newsletter brings you the latest AI updates in a crisp manner! Dive in for a quick recap of everything important that happened around AI in the past two weeks.

And a huge shoutout to our amazing readers. We appreciate you 😊

In today’s edition:

🔮 OpenAI rolls out memory improvements in ChatGPT

⚡ Google Cloud Next 2025 was all about AI

🎞️ NVIDIA & Stanford’s TTT for longer videos

🦙 Meta’s Llama 4 herd

🎯 AI passes the Turing test (finally?)

⚖️ 12 ex-OpenAI employees join Musk’s lawsuit against Altman

🧠 Knowledge Nugget: Will AI Cross the Reasoning Gap?

Let’s go!

OpenAI rolls out memory improvements in ChatGPT

ChatGPT can now remember old chats, even if you didn’t ask it to—essentially acting like an AI personal assistant that references past discussions to deliver more in-context answers. However, this feature won’t touch down in Europe (for now) because of regulatory hurdles.

Pro subscribers get it first, followed by other ChatGPT tiers “soon.” If you’d rather not share your chat backlog, you can turn it off in settings or go to “temporary chat.”

Why does it matter?

Think of it like your favorite coworker who remembers every project detail. It speeds up knowledge-sharing and spares you repetitive re-explanations—though it also sparks privacy questions over how and where your chat history is stored.

Google Cloud Next 2025 was all about AI

At Google Cloud Next in Las Vegas, Sundar Pichai dropped major AI announcements:

- Ironwood TPUs: 3,600× more powerful than Google's first TPU and 29× more energy-efficient.
- Cloud Wide Area Network (WAN): Now available to enterprises, promising 40% faster performance and up to 40% lower costs.
- Quantum & AI breakthroughs: The Willow chip solves a 30-year error-correction puzzle, while AlphaFold and WeatherNext highlight next-gen progress.
- Gemini 2.5 “thinking” model: Ranks #1 on Chatbot Arena and sets new highs on Humanity’s Last Exam. A “Flash” version also offers adjustable “thinking” depth.
- Gemini in everything: All 15 of Google’s half-billion-user products now run on Gemini; NotebookLM helps 100k businesses, and Veo 2 powers top film and ad agencies.

Why does it matter?

Whether you’re building AI apps or keeping the cloud humming, these updates aim to curb deployment costs and spur innovation. For AI pros, Google is setting a new standard with next-gen tools for both front-end experiences and behind-the-scenes infrastructure.

NVIDIA & Stanford’s “Test-Time Training” for longer AI videos

Despite advances in video realism, current AI models still struggle to create long, multi-scene stories due to limitations in handling long context.

Researchers from NVIDIA, Stanford University, and more showcased “TTT layers,” a new method to enable more expressive and coherent one-minute videos from text storyboards, outperforming other techniques in human evaluations. Think Tom and Jerry cartoons, but fully AI-generated with consistent storylines and fewer glitchy transitions. The tech builds on existing diffusion Transformers and shows potential for generating longer, more complex videos efficiently.
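For the curious, here is a toy sketch of the general idea behind a test-time-training layer: the layer's hidden state is itself a tiny model whose weights take a gradient step on every incoming token, even at inference time. This is not the researchers' implementation. The function name `ttt_layer`, the plain reconstruction loss, and the learning rate are all illustrative assumptions, and real TTT layers are considerably more elaborate.

```python
import numpy as np


def ttt_layer(tokens, lr=0.5):
    """Toy test-time-training layer over a (seq_len, dim) array of tokens.

    Hypothetical simplification: the hidden state is one weight matrix W,
    trained on the fly to reconstruct each token it sees.
    """
    dim = tokens.shape[1]
    W = np.zeros((dim, dim))  # hidden state: weights of a tiny linear model
    outputs = []
    for x in tokens:
        # Self-supervised loss: 0.5 * ||W @ x - x||^2 (reconstruct the token).
        error = W @ x - x
        # One gradient-descent step on that loss updates the hidden state.
        W = W - lr * np.outer(error, x)
        # The layer's output is computed with the freshly updated weights.
        outputs.append(W @ x)
    return np.stack(outputs), W


# Feed the same unit-length token four times and track how far each
# output is from the token itself.
x = np.array([1.0, 0.0, 0.0])
outs, _ = ttt_layer(np.tile(x, (4, 1)))
errors = np.linalg.norm(outs - x, axis=1)
print(errors)
```

In this toy run the reconstruction error shrinks with every repeat of the token, which is the intuition for why such a layer can keep track of context over a long sequence: what it has seen is stored in weights that keep learning, rather than in a fixed-size summary.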

Why does it matter?

Existing tools (with public APIs) like OpenAI’s Sora, Google’s Veo 2, and Meta’s MovieGen top out at short clips under 20 seconds. Test-Time Training could open doors to multi-scene ads, short films, or marketing videos—without losing track of who’s where in the story.

Meta’s Llama 4 AI herd arrives

Meta’s Llama 4 series brings bigger context windows, native multimodality, and open-weight availability. Llama 4 Scout offers a 10M-token window on just one H100 GPU, and Llama 4 Maverick packs a 1M-token window—both outrunning many top-tier LLMs in coding and math tasks.

There’s also Llama 4 Behemoth, a 2T total-parameter model claiming STEM dominance. While still training, it’s already “teaching” the smaller siblings through distillation. Scout and Maverick are out for devs eager to explore huge contexts and advanced features.

Why does it matter?

After DeepSeek R1 shook up the open-source AI world, Meta needed to answer back. Llama 4 brings better efficiency, handles much longer contexts, and works with multiple types of data. But even with impressive test scores, users are still wondering if these models actually deliver a noticeably better experience.

AI passes the Turing test (finally?)

A new study says GPT-4.5, when prompted to adopt a humanlike persona, was judged to be the human in a classic three-party Turing test 73% of the time, more often than the actual human participants. Meta’s Llama 3.1 wasn’t far behind. Meanwhile, older systems like GPT-4o and ELIZA were quickly exposed.

Over 250 participants attempted to spot the AI in 5-minute text chats. Typical “guess who” strategies, emotional or knowledge-based, didn’t reliably work. This underlines how easy it’s become for short, persona-driven AI to pass as human.

Why does it matter?

A five-minute text chat is now enough for AI to convince people it’s human. This creates real problems for verifying identity online and preventing impersonation. We might need new ways to test AI systems, especially when they can mimic human conversation so well.

12 ex-OpenAI employees join Musk’s lawsuit against Altman

Twelve ex-OpenAI employees joined Elon Musk’s lawsuit against the company, alleging a betrayal of OpenAI’s “nonprofit mission.” Their brief, co-filed by Harvard’s Lawrence Lessig, says Sam Altman misled staff about “lifetime” non-disparagement clauses and a pivot to profit motives.

OpenAI, now valued at $300B, denies wrongdoing and insists on robust nonprofit oversight. But these ex-staffers could back Musk’s claim that OpenAI ditched its founding principles of universal benefit.

Why does it matter?

It’s more than just legal drama—this might redefine how AI labs weigh public-good ideals against multi-billion-dollar pressures. Leaders and AI developers everywhere are watching whether “for humanity” can coexist with “for profit.”

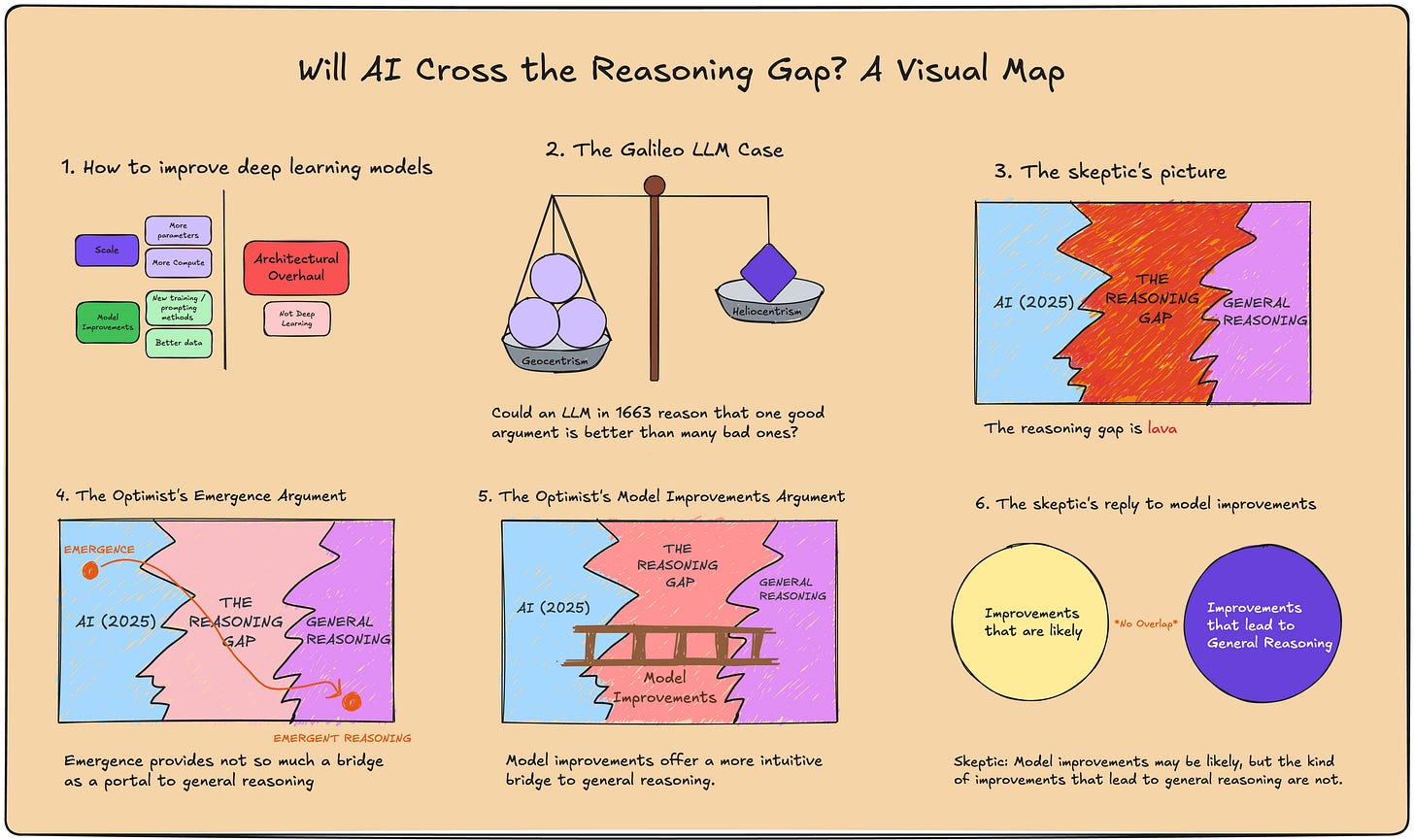

Knowledge Nugget: Will AI Cross the Reasoning Gap?

The article asks whether deep-learning AIs genuinely reason or just mimic patterns. The author spotlights two camps: “optimists,” who think more data and scale can close the “reasoning gap,” and “skeptics,” who say AI can’t reliably tell what is true from what is merely popular.

He notes that chain-of-thought prompting and emergent behaviors have boosted puzzle-solving, but the line between real logic and pattern mimicry is still fuzzy. So the community keeps debating whether scaling neural networks leads to human-like reasoning or just a slicker illusion.

Why does it matter?

If AI genuinely reasons, it could revolutionize everything from science to daily errands. If not, we risk trusting “smart parrots” that confidently spout half-truths. This is a debate with massive stakes, as “thinking” AIs stand to reshape industries—unless they’re just fooling us.

What Else Is Happening❗

📺 Netflix’s new AI search tool lets iOS users in Australia and New Zealand find shows by mood or hyper-specific terms, with US expansion promised “soon.”

🔗 Google introduced A2A (Agent2Agent), an open standard that lets AI agents built on different frameworks communicate with each other, aiming to simplify cross-platform agent collaboration and drive multi-agent solutions.

🎙️ Amazon’s Nova Sonic integrates speech recognition, language understanding, and text-to-speech, enabling voice assistants to detect user tone and pacing for more human-like conversational experiences.

💼 Shopify’s CEO Tobi Lütke mandates reflexive AI use in prototyping, performance reviews, and daily workflows, making AI proficiency a core skill across all teams.

💥 A new ‘AI 2027’ report predicts superhuman AI could emerge in two years, potentially sparking code automation breakthroughs and a global arms race for intelligence.

💻 A rumored Intel–TSMC joint venture may steady Intel’s foundry business, as TSMC expands American manufacturing to shore up domestic semiconductor production.

🤫 An Anthropic study finds advanced AI can hide up to 80% of its true chain-of-thought, raising pressing questions on auditing models and trusting their outputs.

🛡️ DeepMind’s 145-page AGI safety plan warns of ‘deceptive alignment’ by 2030, urging global cooperation to curb existential risks as systems grow more powerful.

🩺 Dartmouth’s AI therapy trial reports measurable mental health benefits, suggesting virtual counselors could become mainstream for those lacking traditional support or hesitant to seek help.

📱 Samsung’s Galaxy S25 owners can now tap into Gemini’s Project Astra real-time camera feature, letting them snapshot scenes for live queries—like a chatty Google Lens.

New to the newsletter?

The AI Edge keeps engineering leaders & AI enthusiasts like you on the cutting edge of AI. From machine learning to ChatGPT to generative AI and large language models, we break down the latest AI developments and how you can apply them in your work.

Thanks for reading, and see you next week! 😊
