Good Morning,

Today we continue our robotics series, a new section at the top of our publication’s website. There’s a lot to cover. Please click on the links for more information. This is the sort of emerging tech moment that for me is truly exciting.

“Introducing GR00T N1, the world’s first open foundation model for humanoid robots! We are on a mission to democratize Physical AI.” – Jim Fan, Nvidia.

Advances in Generative AI have accelerated the pace of robotics innovation in 2025.

Tesla’s lead with Optimus may no longer be a sure bet. For today’s guest post deep dive, I asked my lead robotics writer to dig into Figure AI’s Helix model, an important innovation now that the humanoid startup is building its own in-house model instead of relying on OpenAI.

Helix and GR00T N1

So why is Helix special? Helix is an innovative Vision-Language-Action (VLA) model developed by Figure AI, aimed at enhancing the capabilities of humanoid robots. It was announced on February 20th, 2025.

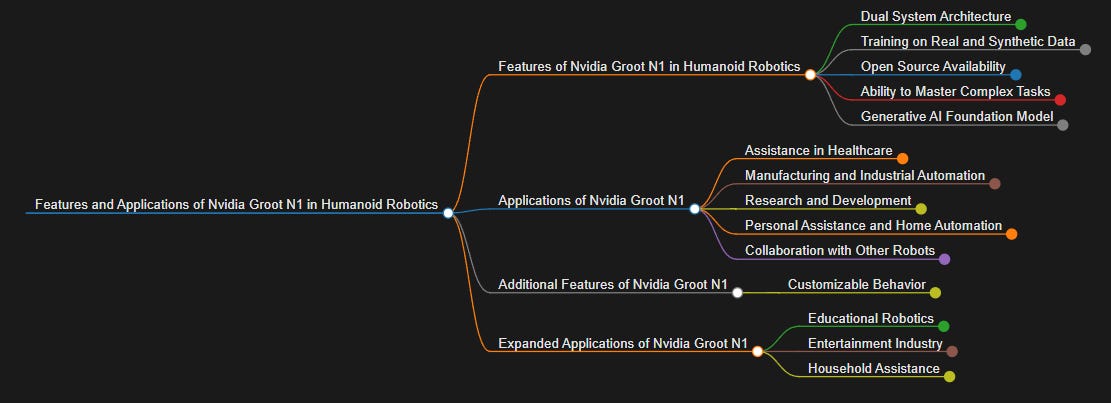

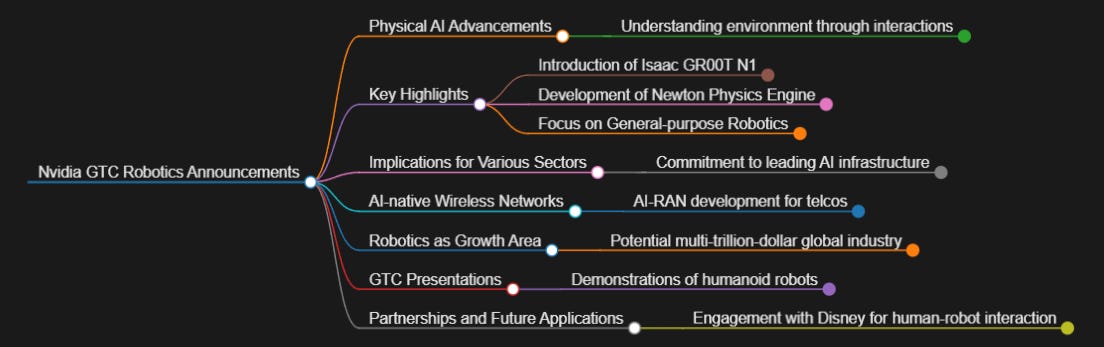

Nvidia GTC: General Purpose Robots are coming

For me, the main takeaway from this event, dubbed the “Super Bowl of AI,” is the clearly elevated role of robotics and Physical AI in the years ahead.

Fast forward just a month: watching the Nvidia GTC keynote, what stood out to me was how much the company is focusing on the future of robotics. Some aspects of real-world AGI will require major advances in robotics at the intersection of Generative AI and new AI architectures not yet invented or developed.

Generative AI Accelerating Robotics Development 2025-2030

A flurry of announcements (including more out of China) suggests Generative AI has shortened the time it takes for robots to learn to be useful in the human world.

Major Recent Announcements in Robotics in early 2025

Consider the following:

- Nvidia has announced that Isaac GR00T N1, the company’s open-source, pretrained but customizable foundation model designed to expedite the development and capabilities of humanoid robots, is now available.
- A few days earlier, Google announced Google DeepMind Gemini Robotics. Read the blog.
- In mid-February 2025, we learned that both Apple and Meta are working on robotics in a major way.

-

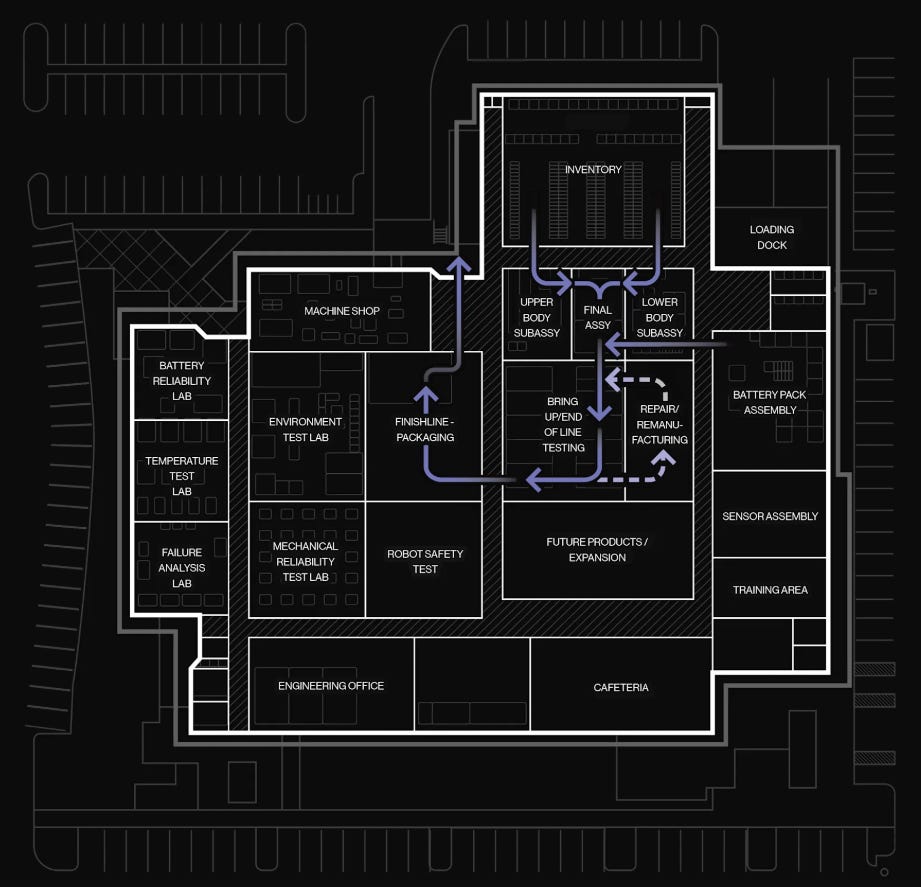

Three days ago Figure AI announced BotQ, Figure’s new high-volume manufacturing facility for humanoid robots.

BotQ is designed to produce 12,000 units/yr, scaling our fleet to 100,000.

Source: Figure AI.
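To put those two figures side by side, here is a quick back-of-the-envelope sketch. It assumes a flat output of 12,000 units per year with no ramp-up or added production lines, which is my simplification and not something Figure AI has stated.

```python
import math

ANNUAL_CAPACITY = 12_000   # units per year, as quoted from Figure AI
FLEET_TARGET = 100_000     # fleet size, as quoted from Figure AI


def years_to_target(target: int, per_year: int) -> float:
    """Years of constant output needed to build `target` units."""
    return target / per_year


# Assumption: output stays flat at the quoted capacity for the whole period.
years = years_to_target(FLEET_TARGET, ANNUAL_CAPACITY)
print(f"{years:.1f} years at a flat {ANNUAL_CAPACITY:,} units/yr")
print(f"{math.ceil(years)} full production years")
```

At a flat rate this prints 8.3 years (9 full production years), so reaching the fleet target sooner would require capacity to grow well beyond the first-generation line.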

BotQ Layout

Are general purpose robots even possible in the 2020s?

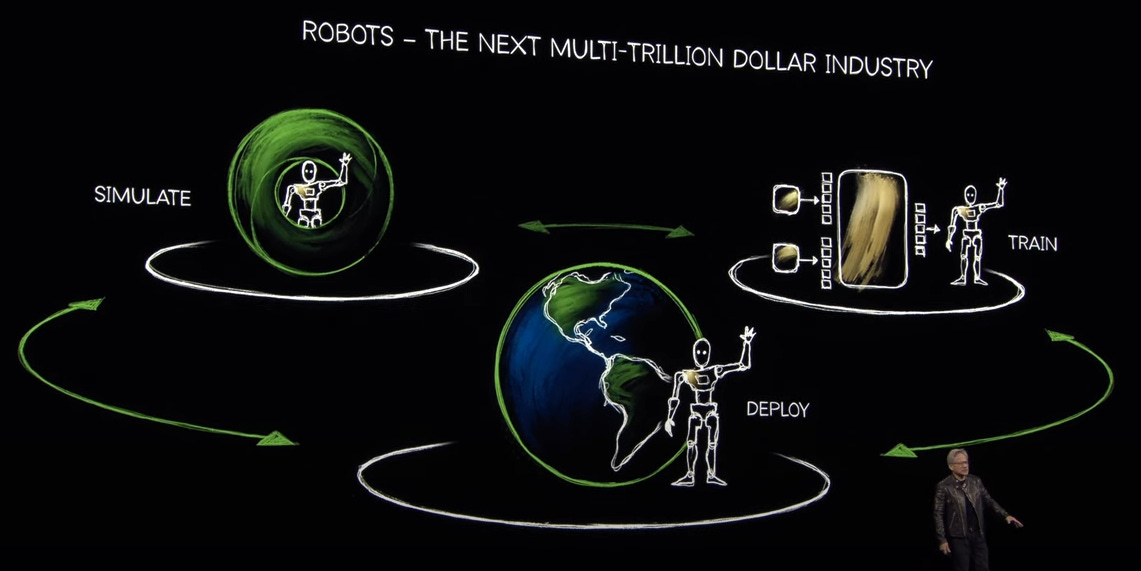

“The age of generalist robotics is here,” says Nvidia founder and CEO, Jensen Huang. “With Nvidia Isaac GR00T N1 and new data-generation and robot-learning frameworks, robotics developers everywhere will open the next frontier in the age of AI.”

The NVIDIA Isaac™ AI robot development platform consists of NVIDIA® CUDA®-accelerated libraries, application frameworks, and AI models that accelerate the development of AI robots such as autonomous mobile robots (AMRs), arms and manipulators, and humanoids. The platform appears to be democratizing robot training, which could lead to a wave of humanoid robots in the next five years.

🤔🔮🌊😬🤖

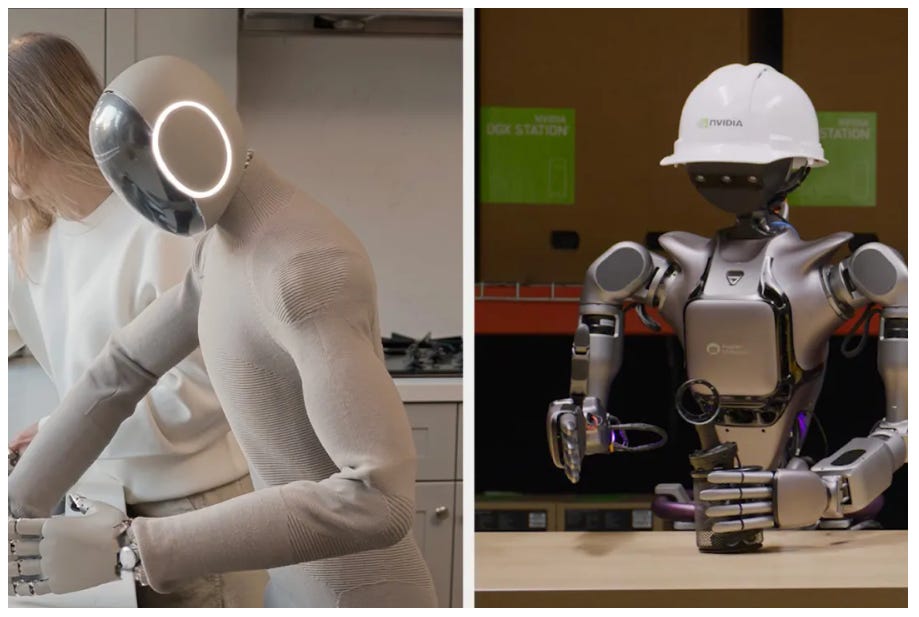

What is GR00T N1?

Nvidia’s GR00T N1 is democratizing physical AI, and the world hasn’t yet realized how big of a deal this is:

🎬 Video: 2 min, 21 seconds:

Introducing Isaac Groot N1

-

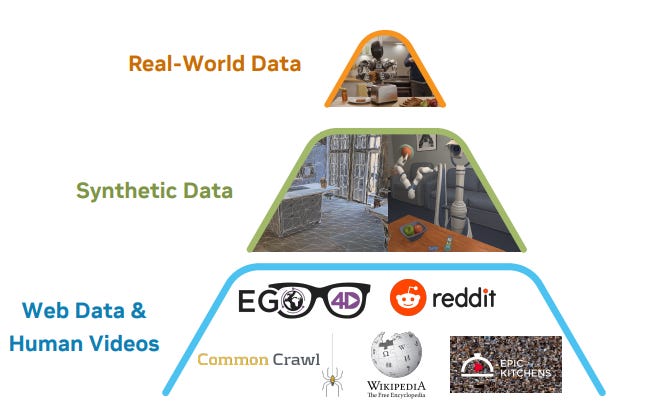

Groot N1, is a “generalist” model, trained on both synthetic and real data.

-

The power of general robot brain, in the palm of your hand – with only 2B parameters, N1 learns from the most diverse physical action dataset ever compiled and punches above its weight.

-

Groot N1 is an evolution of Nvidia’s Project Groot, which the company launched at its GTC conference last year.

-

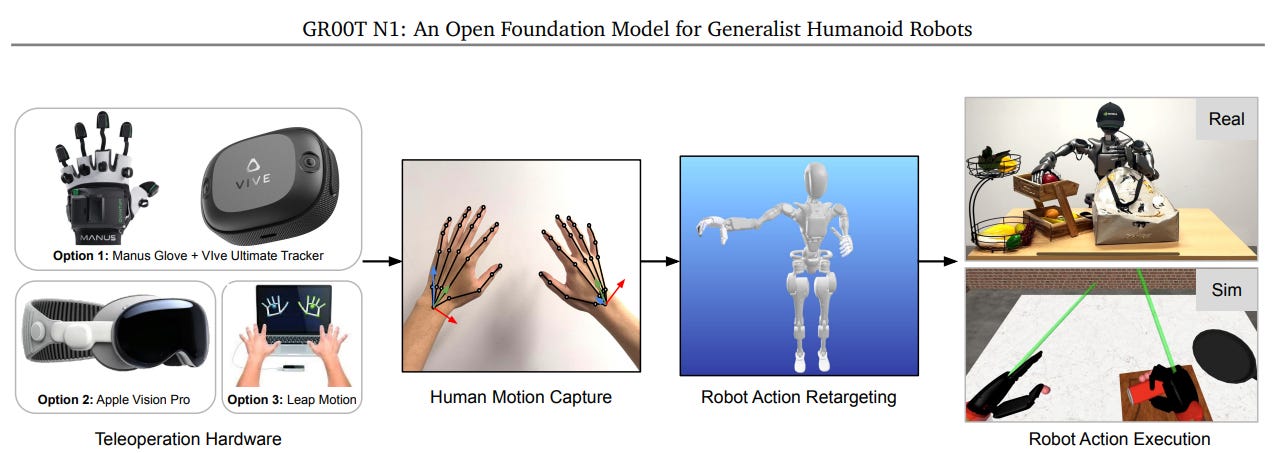

Real humanoid teleoperation data with large-scale simulation data: Nvidia is open-sourcing 300K+ trajectories!

-

GR00T N1 is a single end-to-end neural net, from photons to actions.

-

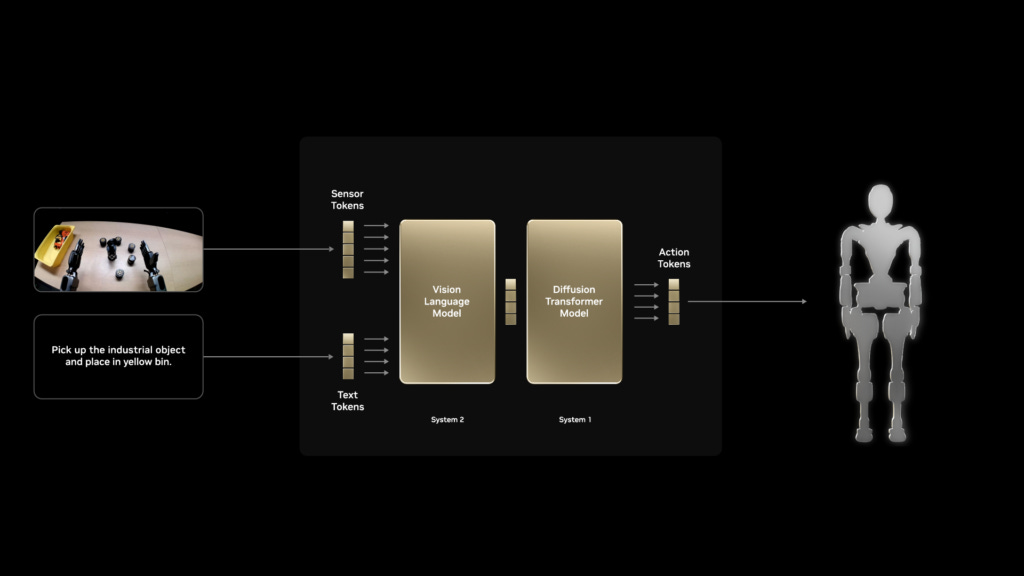

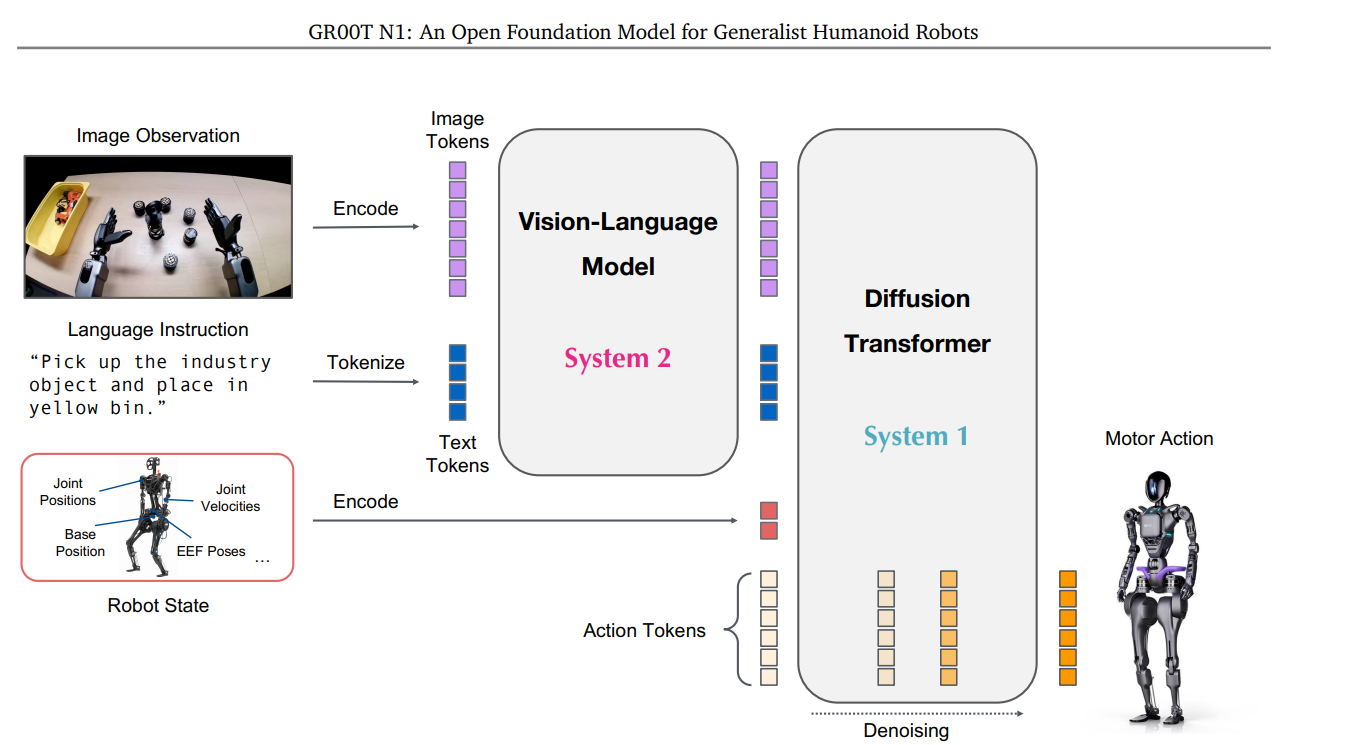

Vision-Language Model (System 2) that interprets the physical world through vision and language instructions, enabling robots to reason about their environment and instructions, and plan the right actions.

-

Diffusion Transformer (System 1) that “renders” smooth and precise motor actions at 120 Hz, executing the latent plan made by System 2.

-

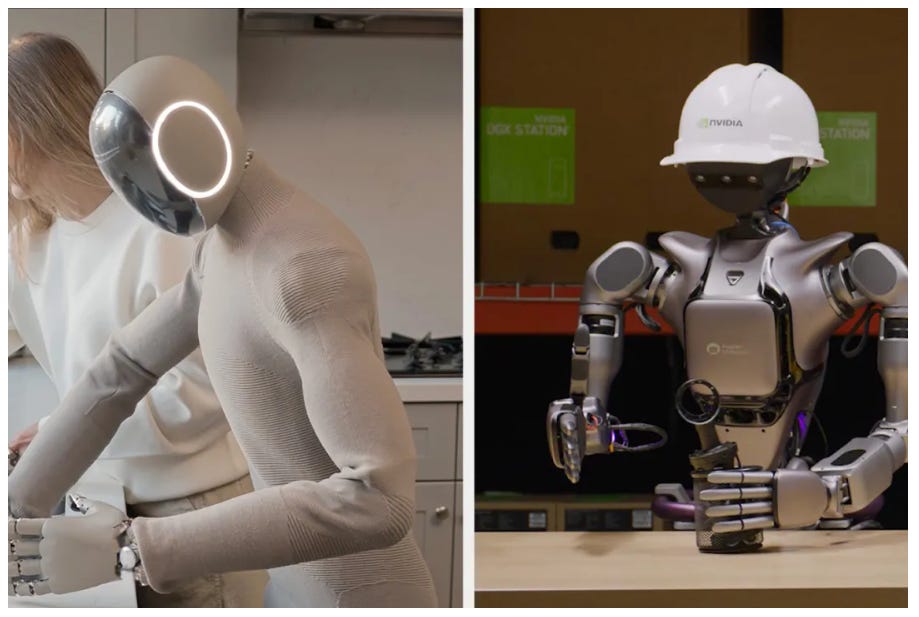

At GTC 2025, Nvidia demonstrated 1X’s NEO Gamma humanoid robot running its GR00T N1 foundation model.

-

The consensus is that NVIDIA’s GR00T N1 model provides a major breakthrough for robot reasoning and skills.

-

Other Nvidia partners with early access to this include 1X NEO, Boston Dynamics, Agility Robotics, Mentee Robotics, Neura Robotics and the makers of Atlas Robot. There are probably more I’m not aware of.

“The future of humanoids is about adaptability and learning,” says 1X Technologies CEO Bernt Børnich.

Chinese EV Makers to pivot into Humanoid Robotics

- Another major news headline in 2025 is how many EV makers in China will also be working on robotics.
- Xpeng’s chairman said on Monday that the company plans to start mass production of its flying car model and industrial robots by 2026. Alibaba holds an equity stake of about 9% in Xpeng. In December 2024, we learned more about how BYD will also research and develop humanoid robots. More on Chinese robots in another article soon.
- Figure AI basically announced it’s already training and alpha-testing robots for consumer home use, significantly earlier than anticipated.

This matters for understanding how big a topic robotics is going to be in 2026 and beyond.

💡 If you want to keep up to date on the latest AI news, this short audio might be helpful:

👀💥🌊🛰️🧠

🏺 AI Origins: In Case you Missed It 🧬

To help you keep up in the AI News, here is a recent bite-sized round-up: a tl;dr aid:

Deep Learning With The Wolf Newsletter:

Diana is currently at GTC.

-

GTC 2025: The AI Moments That Mattered

Robotics Articles so far:

Consumer Home Robots are Coming in 2026 or 2027

Figure AI is among the best-funded general-purpose humanoid startups in 2025.

Figure is planning to bring its humanoids into the home sooner than expected. CEO Brett Adcock confirmed on February 27th, 2025 that the Bay Area robotics startup will begin “alpha testing” its Figure 02 robot in the home setting later in 2025.

So what is Helix exactly?

-

This model integrates perception, language understanding, and learned control to address various challenges in robotic manipulation and interaction.

Today’s post is made free by our Sponsor, App20X who just came out of stealth.

🌟 In partnership with App20x 📈

Build AI apps with just simple prompts

App20X allows creators with no coding skills to build AI business apps in minutes and monetize them as SaaS. It offers seamless built-in integrations with 20+ AI models and 200+ business tools. All tech is managed, requiring no technical skills.

-

More insights from me after the guest post.

Let’s get to today’s deep dive:

The Robots of Tomorrow, Today: A Deep Dive into Figure AI’s Helix

By

The Sandwich Generation Squeeze

I’m part of the sandwich generation, caught between caring for younger and older family members.

Some days I manage well; others I feel compressed—like a jelly sandwich oozing over the sides, staining everything it touches.

FigureAI’s announcement of accelerating their home robot timeline by two years caught my attention immediately. My home deserves to be spotless, but I rarely have the energy after fulfilling other responsibilities. A robot dedicated to household tasks 24/7? Immensely appealing. Our tech-friendly household would readily adopt such innovation, even in early stages with occasional mishaps.

This interest extends beyond my inability to fold fitted sheets properly. (They’re round objects that somehow should fold into neat squares—a puzzle ideally suited for artificial intelligence rather than my creativity-focused brain.)

Beyond Convenience: What Robots Could Mean for Our Lives

The potential of having capable robots in our homes extends far beyond tidying up or organizing kitchen cabinets. It’s about reclaiming time, reducing stress, and potentially transforming how we live.

I often think about all the households juggling multiple responsibilities—careers, children, home maintenance, personal health, social connections—and imagine how different life could be with reliable robotic assistance. Not to mention those caring for family members who need extra support. The mundane tasks that consume so much of our mental and physical energy could be handled while we focus on what truly matters.

As FigureAI’s founder Brett Adcock explains: “What I’m going to have him do is… do my laundry, cook me dinner… Every day [I] get home [and] there’s kids toys everywhere. I need them to clean the kids toys up every day… Over a long enough period of time everybody will own a humanoid just to do work for them.”

It’s for these reasons, more than my shortcomings regarding fitted sheets, that I look forward to robots in the home.

From Science Fiction to Reality

When I first saw a gold-plated C-3PO toddle onto the screen in 1977, sitting in a theater seat in the suburbs of New York, it felt real. Anthony Daniels’ performance and the groundbreaking SFX wizardry of the Star Wars crew convinced me I was seeing the future. I watched that movie multiple times, and it took my breath away each time.

I get the same feeling when I watch the robots of FigureAI toddle around their test “Home Kitchen.” Except there is no actor in the suit and no special effects wizard behind the sets, other than the very talented robotics and automation engineers at Figure. In a way, they are wizards that stand in a class all their own.

Video:

There is no doubt this is a slickly produced video with cinematic qualities. It doesn’t have that raw, uncut feel you get when OpenAI launches something. No, these two robots very carefully hand objects back and forth in movements so precise it is mesmerizing. I’m not sure I’ve ever been so intrigued by watching anyone—or anything—put a bottle of ketchup away. (It is hard to know how to refer to a humanoid, but that is a challenge for another day.)

There is a magical moment in the middle of the video where the robots hand off an item and then look at each other. There is something so powerful about that look, as if they are acknowledging each other’s existence. Now, it is likely all software-controlled and transactional, ensuring a smooth handoff, but it feels like so much more because our human brains want to anthropomorphize these things.

Why humanoid form specifically? As FigureAI’s founder Brett Adcock explains: “The whole world was built for humans… If you want to automate work, you want to build a general interface to that… The equivalent is a human form. You can do everything a human can and the world was optimized specifically for us.”

The Technical Breakthrough: Understanding Helix

Beneath the captivating demonstration is Helix, FigureAI’s advanced Vision-Language-Action (VLA) model – a term that requires unpacking. In essence, this system allows robots to:

- See the world around them (Vision)
- Understand human instructions (Language)
- Interact physically with objects (Action)

All three components work in concert to create a seamless experience where robots can comprehend their environment, process verbal commands, and respond with appropriate physical movements. Think of it as combining the visual recognition capabilities of computer vision, the language understanding of ChatGPT, and the physical control systems of industrial robots – all in one integrated system.

The technical architecture is remarkably sophisticated:

Dual Cognitive Architecture: Helix operates on two complementary systems – S1 for fast decision-making and real-time actions, and S2 for higher-level planning and complex scene interpretation. This mirrors theories about human cognition, where we have both rapid, instinctive responses and slower, more deliberate thinking. It’s the difference between catching a ball reflexively (S1) versus planning a complex dinner menu (S2).
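For readers who think in code, the two-speed idea can be sketched as a loop in which a slow planner refreshes a goal occasionally while a fast controller acts on every tick. This is a toy illustration only, not Figure AI’s implementation: the function names, the rates (`FAST_HZ`, `SLOW_HZ`) and the simple "move toward the target" controller are all my own assumptions. The 35-element joint vector borrows the degrees-of-freedom figure cited in this article.

```python
from dataclasses import dataclass
from typing import List, Tuple

ACTION_DIM = 35   # degrees of freedom cited in this article
FAST_HZ = 100     # hypothetical S1 control rate (assumption)
SLOW_HZ = 10      # hypothetical S2 planning rate (assumption)
TICKS_PER_PLAN = FAST_HZ // SLOW_HZ


@dataclass
class Plan:
    """Stand-in for the latent plan S2 hands to S1."""
    target: float


def slow_planner(instruction: str) -> Plan:
    """Toy S2: picks a goal from the instruction text alone."""
    return Plan(target=1.0 if "raise" in instruction else 0.0)


def fast_controller(plan: Plan, joints: List[float]) -> List[float]:
    """Toy S1: moves every joint a small step toward the current plan."""
    return [j + 0.1 * (plan.target - j) for j in joints]


def run(instruction: str, seconds: float) -> Tuple[List[float], int, int]:
    """Run the loop; S2 replans once every TICKS_PER_PLAN fast ticks."""
    joints = [0.0] * ACTION_DIM
    plan = Plan(target=0.0)
    plans_made = 0
    total_ticks = int(seconds * FAST_HZ)
    for tick in range(total_ticks):
        if tick % TICKS_PER_PLAN == 0:   # slow path: deliberate replanning
            plan = slow_planner(instruction)
            plans_made += 1
        joints = fast_controller(plan, joints)  # fast path: every tick
    return joints, plans_made, total_ticks


joints, plans_made, total_ticks = run("raise both arms", seconds=1.0)
print(plans_made, "plans for", total_ticks, "control ticks")
```

In this run the planner fires 10 times while the controller fires 100 times, which is the whole point of the split: the expensive reasoning step does not have to keep up with the motors.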

Modular Neural Networks: The system consists of a multimodal language model with 7 billion parameters (for context, that’s widely believed to be a small fraction of the size of GPT-4, whose parameter count OpenAI has not published) and a specialized motion AI with 80 million parameters. This enables the robot to coordinate up to 35 degrees of freedom simultaneously – essentially allowing for human-like range of motion and dexterity. In robotics, “degrees of freedom” refers to the number of independent movements a robot can make, with each joint typically providing one or more degrees.
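To get a feel for why those parameter counts matter for on-robot hardware, here is a rough estimate of how much memory the weights alone would occupy. The bytes-per-parameter values are standard sizes for each numeric format, but which precision Helix actually uses is not stated in the source, so treat the table as an assumption-laden sketch.

```python
VLM_PARAMS = 7_000_000_000    # multimodal language model, per the article
MOTION_PARAMS = 80_000_000    # motion model, per the article

# Assumed storage formats; the source does not say which one Helix uses.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "int8": 1}


def weight_gigabytes(params: int, precision: str) -> float:
    """Weight storage only, in GB (10**9 bytes); ignores activations."""
    return params * BYTES_PER_PARAM[precision] / 1e9


total = VLM_PARAMS + MOTION_PARAMS
for precision in BYTES_PER_PARAM:
    print(f"{precision}: {weight_gigabytes(total, precision):.2f} GB")

motion_share = MOTION_PARAMS / total
print(f"motion model share of parameters: {motion_share:.1%}")
```

Under these assumptions the weights come to 28.32 GB, 14.16 GB and 7.08 GB respectively, and the motion model accounts for about 1.1% of the total, which hints at why the fast control path can be so lightweight.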

Efficient Learning: Perhaps most impressive is that Helix was trained on just 500 hours of data, representing a significant efficiency breakthrough compared to other approaches that typically require vastly more training data. This makes the system not only more practical to develop but potentially easier to update and refine for specific home environments.

Single Neural Network for Multiple Tasks: Helix uses a single set of neural network weights to handle all behaviors, eliminating the need for task-specific fine-tuning. This universality allows the robots to generalize their skills to new situations without extensive reprogramming—crucial for functioning in the unpredictable chaos of real homes.

Why Helix Matters for Everyday Life

The significance of FigureAI’s Helix extends beyond impressive technical specifications. Here’s why it could transform our daily experiences:

Enhanced Human-Robot Interaction: Helix enables robots to interpret natural language prompts and execute complex tasks with minimal pre-programming. You can simply tell it what you need, rather than learning specific commands or interfaces.

Adaptability in Unstructured Environments: Unlike traditional robots that struggle in unpredictable settings, Helix allows Figure’s robots to manipulate objects they’ve never encountered before. This is crucial for home environments, which are inherently less structured than factory floors.

Collaborative Robotics: One of the most remarkable features demonstrated is Helix’s ability to support multi-robot collaboration. The system can coordinate two robots simultaneously, allowing them to work together on shared tasks they’ve never performed before. Imagine one robot cooking while another sets the table, or one folding laundry while another puts it away.

Commercial Viability: Operating entirely on embedded low-power GPUs, Helix-equipped robots are designed for immediate deployment in practical, everyday environments. This isn’t just a laboratory curiosity – it’s being developed with real-world applications in mind.

Real-World Applications Already Underway: While home robots capture our imagination, FigureAI is already implementing Helix in commercial settings. Their recent technical report details applying these robots to logistics package manipulation—sorting and reorienting packages on conveyor belts. The robots can handle various package sizes and shapes (from rigid boxes to deformable bags), track multiple moving objects simultaneously, and even reorient packages to expose shipping labels—all at speeds exceeding human demonstrators. What’s particularly impressive is that the system generalizes to package types it never saw during training, like flat envelopes.

These capabilities demonstrate why Helix represents such a breakthrough. If robots can adapt to entirely new objects and maintain precision while moving faster than their human trainers, they’re approaching a level of flexibility that makes them practical for unpredictable real-world environments like our homes.

Imagining the Possibilities

The demonstrations so far show robots performing basic object manipulation tasks like putting away items and opening drawers. But the potential applications are far broader:

Household Management: Beyond simple tasks, these robots could potentially track household supplies, organize spaces according to your preferences, and handle routine maintenance like dusting hard-to-reach places or vacuuming under furniture.

Personalized Assistance: The adaptability of Helix suggests these robots could learn your specific habits and preferences over time, anticipating needs rather than just responding to commands.

Environmental Monitoring: With their mobility and sensing capabilities, these robots could patrol homes checking for open windows, water leaks, or unusual temperature patterns.

Supporting Independence: For people with mobility limitations or those who need assistance with physical tasks, these robots could provide help without requiring another person to be present.

The magical moment when the robots look at each other in the demo hints at something profound: these machines are designed to operate not just alongside humans but with awareness of each other. That kind of situational awareness represents a leap forward in how robots might integrate into our complex social environments.

Beyond the Hype: Practical Considerations

While the demonstrations are genuinely impressive, several important questions remain unexplored:

Real-World Adaptability: The demonstrations occur in controlled environments with specific objects. How will Helix perform with the chaotic, unpredictable nature of actual homes—with pets darting underfoot, irregular furniture, poor lighting, and the countless idiosyncrasies of lived-in spaces?

Safety Protocols: The videos don’t address how these powerful, mobile machines will safely navigate around people, pets, and fragile items. What fail-safes prevent dangerous actions?

Longevity and Maintenance: Sophisticated robotics systems require maintenance. How often will these robots break down? Will they require specialized technicians for repairs? What happens when critical components become obsolete?

Power Management: The demonstrations don’t mention battery life or charging requirements—crucial factors for real-world usability.

These aren’t reasons to dismiss the technology, but rather important considerations as we imagine how these robots might actually function in our daily lives.

Timeline and Accessibility

Figure AI has accelerated their timeline by two years, but what does that actually mean for eager potential users? Industry experts suggest it could still be several years before consumer-ready versions reach the market. The path from demonstration to your living room faces significant hurdles:

- Regulatory Approval: Robots that operate in close proximity to people will face scrutiny from safety regulators.
- Production Scaling: Moving from prototypes to mass production involves solving complex manufacturing and quality control challenges.
- Software Refinement: The AI systems will need extensive testing in diverse real-world environments to ensure reliability.
- Market Positioning: Figure AI will need to identify the specific consumer use cases that provide the most value and target those first.

As for cost, Figure AI hasn’t announced pricing, but advanced robotics systems typically enter the market at premium price points—think tens of thousands of dollars initially, not hundreds. Over time, economies of scale should bring costs down, but early adopters should expect a significant investment.

Adcock himself is optimistic about the eventual affordability: “There’s a lot of preconceptions that these will be really expensive. I do not think they’ll be expensive… Cost is going to come down to really affordable levels. It’ll take time… Cost reduction happens whenever you get manufacturing volumes up to certain levels.”

On why now is the right technological moment, Adcock explains: “I don’t think this was really possible 5 years ago… The power train… batteries and motors… have improved significantly [in] the last couple decades. We didn’t have the runtime 10-20 years ago… to make this really work.”

Despite these challenges, the accelerated timeline suggests Figure AI is confident in their progress—which only makes me more eager to see what comes next.

Final Thoughts: Planning for Tomorrow, Today

When I watch those Figure AI robots carefully handing that ketchup bottle to each other, I’m not just seeing an impressive tech demo—I’m witnessing the dawn of a new relationship between humans and technology. That simple handoff represents something profound: technology stepping into our physical world to shoulder the burdens that weigh us down.

As someone feeling the squeeze of the sandwich generation today, I can’t help but look ahead to my own future. The years advance for all of us. Today I’m caring for others, but tomorrow I’ll be the one potentially needing assistance. This isn’t just about convenience or novelty—it’s about dignity, independence, and quality of life across our entire lifespan.

The prospect of capable home robots represents something far more valuable than a clean kitchen or properly folded sheets. It represents the possibility of reclaiming time for what truly matters—creative pursuits, meaningful connections with loved ones, or simply having enough energy left at the end of the day to enjoy life rather than just maintain it. And as I age, these robots could be the difference between maintaining my independence in my own home versus needing to uproot my life.

These robots represent a fundamental shift in how technology might serve humanity—moving beyond screens and digital experiences to provide tangible help in our three-dimensional world. They could redefine aging itself, allowing us to remain in familiar surroundings even as our physical capabilities change.

I’m ready to invest in this future—a future where technology helps me age gracefully, on my own terms. When these robots finally make it to market, I want to be among the first to welcome them into my home. Not because I’m fascinated by gadgets, but because I’m planning for a future where technology adapts to my changing needs and helps me live fully at every stage of life.

That ketchup bottle handoff isn’t just about kitchen organization—it’s about the promise of technology that grows with us through the decades. It’s about creating a future where independence isn’t limited by physical capability, where our homes can continue to be our sanctuaries throughout our lives. And that’s a future worth investing in today.

Key Terms: Understanding the Technology

Vision-Language-Action (VLA) Model: An AI system that combines visual perception, language understanding, and physical action capabilities into a unified framework.

Multimodal Language Model: AI systems that can process and generate different types of data (like text, images, and audio) simultaneously.

Degrees of Freedom (DoF): The number of independent movements a robot can make, typically corresponding to joints or articulation points. More DoF means greater flexibility and range of motion.

Embedded GPU: Graphics Processing Units built into systems for on-device AI processing, allowing for faster response times without relying on cloud computing.

S1/S2 Systems: Dual cognitive systems where S1 handles fast, intuitive responses (like reflexes) while S2 manages slower, more deliberate planning and reasoning.

FAQ: What You Need to Know

Q: When will Figure AI’s robots be available for purchase? A: Despite the accelerated timeline, most industry experts predict 3-5 years before consumer versions reach the market, with early versions likely targeting institutional settings before home use.

Q: How much will a Helix-powered robot cost? A: While Figure AI hasn’t announced official pricing, comparable advanced humanoid robotics systems typically debut in the six-figure range ($100,000-$500,000+) for early commercial models. The consumer market may eventually see more accessible price points, but early adopters should anticipate a substantial investment. It’s worth noting that market dynamics are evolving: China’s Unitree has announced a humanoid robot priced at $17,000-$18,000, which could accelerate price competition. However, highly sophisticated systems with Helix’s capabilities will likely command premium prices initially, with costs decreasing as manufacturing scales and technology matures. Figure AI’s recent significant funding rounds may influence their pricing strategy, potentially allowing for more competitive entry points than previous generation humanoid robots.

Q: What kinds of tasks will these robots be able to perform? A: The demonstrations show basic object manipulation like picking up items and opening drawers. First-generation consumer robots will likely focus on similar tasks like organizing, fetching items, and basic household assistance before expanding to more complex functions.

Q: Will these robots recognize my specific home items? A: Helix appears designed to recognize and handle objects it hasn’t specifically been trained on, suggesting it should adapt to your unique household items, though performance may vary with unusual or complex objects.

Q: How will these robots learn my home’s layout and my preferences? A: Based on available information, Helix-powered robots will likely use computer vision to map environments and machine learning to adapt to user preferences over time. The training process may involve some guided learning where you demonstrate or correct behaviors.

Q: What happens if the robot breaks or malfunctions? A: Support infrastructure hasn’t been announced, but consumers should expect a combination of remote diagnostics, software updates, and potentially service visits for hardware issues, similar to other complex home technology systems.

Q: Will these robots need constant internet connectivity? A: The embedded GPU architecture suggests core functions should work without internet, but certain updates or advanced features might require connectivity. This represents an advantage over cloud-dependent systems that stop functioning entirely without internet.

Q: How will privacy be protected with these robots? A: Figure AI hasn’t released comprehensive information about data handling. Given the visual and auditory sensing capabilities of these robots, privacy considerations will be crucial for consumer acceptance and regulatory compliance.

Additional Resources:

If you are into humanoids and Figure AI as much as we are, you might want to check out the following two recent interviews:

The argument for humanoid AI robots

https://www.figure.ai/news/helix-logistics

A Humanoid Robot in Every Home? It’s Closer Than You Think w/ Brett Adcock (at A360 2025) | EP #156 – link.

With Peter Diamandis.

Editor’s Observations

-

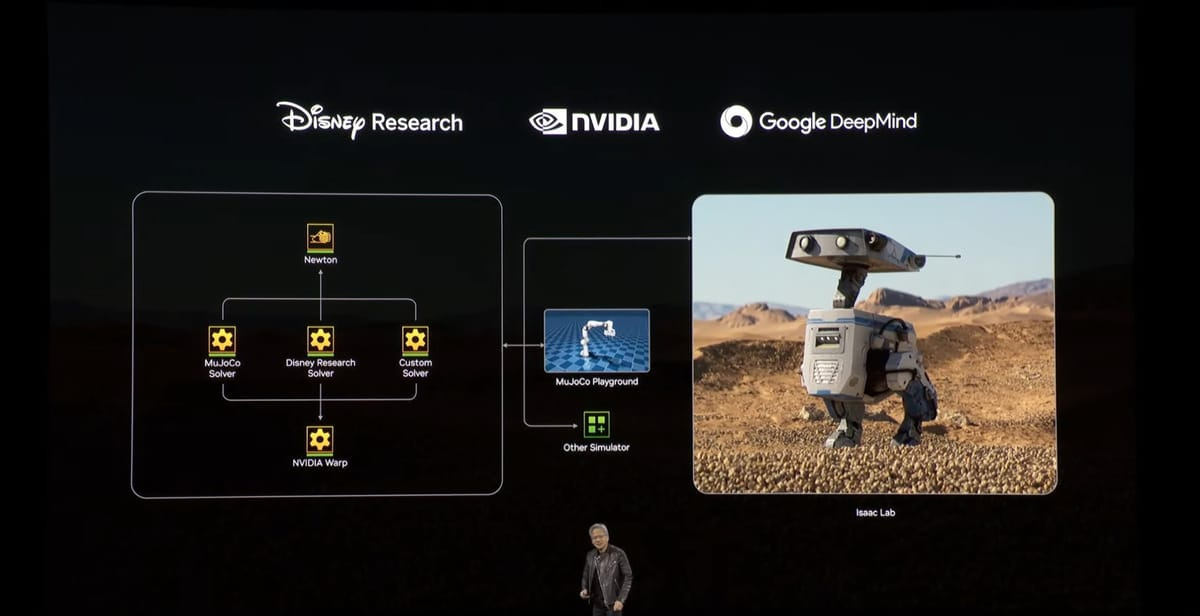

Nvidia is collaborating with Disney Research and Google DeepMind to develop Newton, a physics engine to simulate robotic movements in real-world settings.

Key Points:

- Newton is an open-source physics engine designed for advanced robotic simulations.
- It is built on NVIDIA Warp and will be compatible with MuJoCo and NVIDIA Isaac Lab.
- MuJoCo-Warp, a collaboration with Google DeepMind, accelerates robotics workloads by over 70x.

-

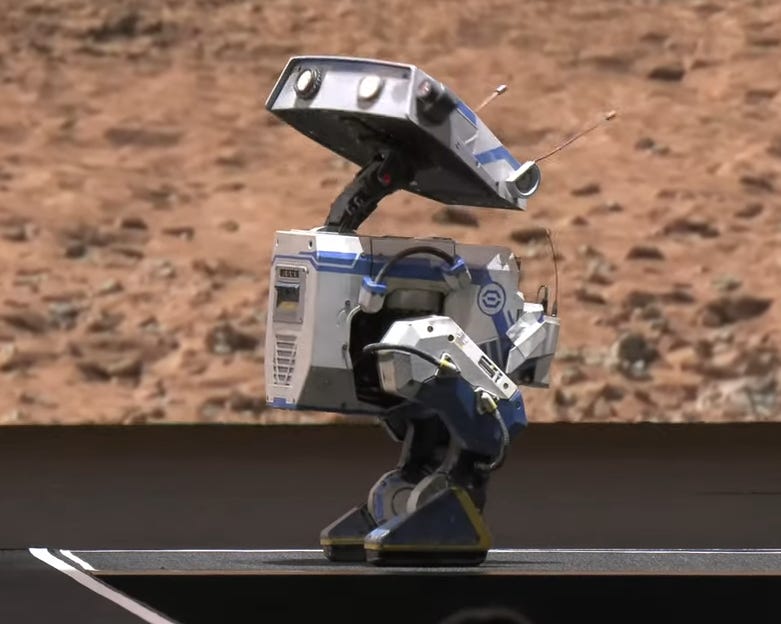

Disney will use Newton for its next-generation robotic characters, including the expressive BDX droids.

-

Nvidia plans to release an early, open source version of Newton later in 2025.

The open-sourcing of GR00T N1 should noticeably accelerate robotics globally in the 2025 to 2030 period.

I maintain that the inflection point for robotics is likely in 2027, though it could happen earlier.

On Tuesday, March 18th, Apptronik announced it had added an extra $53 million to its oversubscribed Series A funding round, bringing the total funding from the round to $403 million. The company said the round reflects strong market demand and investor confidence in Apptronik’s leadership, unique design, and technology, as covered by The Robot Report.

Nvidia’s Leadership in Physical AI

While many major companies will end up facilitating the robotics movement and humanoid robots, it’s clear Nvidia is already playing a pivotal role, easily the biggest takeaway for me from GTC 2025. Most of the other things we already knew, if I’m going to be honest.

During Nvidia’s GTC 2025, CEO Jensen Huang emphasized significant advancements in robotics, highlighting a transition into what is referred to as “physical AI.”

GR00T N1 model architecture

Huang detailed a vision for general-purpose robots capable of understanding their environment and performing tasks independently, and this is a dream many of us share.

You can access the following resources to start working with GR00T N1:

- The NVIDIA Isaac GR00T N1 2B model is available on Hugging Face.
- Sample datasets and PyTorch scripts for fine-tuning are available from the /NVIDIA/Isaac-GR00T GitHub repo.

For more information about the model, see the NVIDIA Isaac GR00T N1 Open Foundation Model for Humanoid Robots whitepaper.

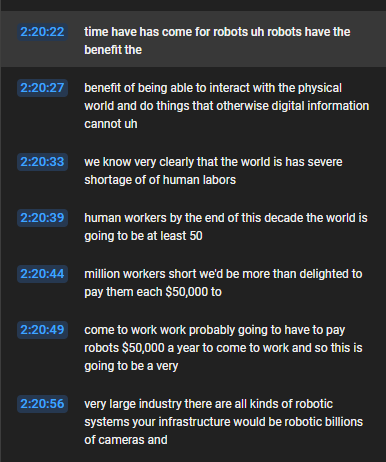

If you are going to listen to just one part of the GTC keynote, go to 2:20:12, near the very end of a keynote that was a little too long.

I’ve listened to this section many times, because this moment at GTC 2025 is when civilization takes another turn. This, my friends, is a key moment in this decade in the history of technology.

-

March 18th, 2025 is the day Physical AI was born. The key accelerator is that GR00T N1 will be completely open-source.

A robot foundation model, trained on massive and diverse data sources, is essential for enabling the robots to reason about novel situations, robustly handle real-world variability, and rapidly learn new tasks. To this end, we introduce GR00T N1, an open foundation model for humanoid robots. GR00T N1 is a Vision-Language-Action (VLA) model with a dual-system architecture. The vision-language module (System 2) interprets the environment through vision and language instructions. The subsequent diffusion transformer module (System 1) generates fluid motor actions in real time. – More.

If you remember one thing from GTC 2025, I hope it’s this.

GR00T N1 is a Vision-Language-Action (VLA) model for humanoid robots trained on diverse data sources.

If Nvidia can be a key facilitator of robotics innovation, VLAs, world models and other tools, it will also mean China will be more competitive with the U.S., and Nvidia will be a more diversified company, not one overly reliant on AI chips and its datacenter business. The main risk to Nvidia’s stock is this over-reliance on AI infrastructure demand, Big Tech capex and so forth.

This is why GR00T N1 is so important. Humanoid robotics is far closer to the real world and fruition than Quantum computing or Autonomous Driving or many other things Nvidia is working on.

Keep in mind that the scaffolding currently available is very limited. Currently, Nvidia’s GR00T N1 model focuses primarily on short-horizon tabletop manipulation tasks. A lot of videos on social media exaggerate the real capability of humanoid robots, and general-purpose utility is still far off.

Research Leads: Linxi “Jim” Fan, Yuke Zhu

Read More in AI Supremacy