🦢 Note of Gratitude: This publication’s growth and momentum wouldn’t have been possible without the generosity of other writers, such as those behind the Abbey of Misrule and Ahead of AI, and many others. These are debts I pay forward in support of other emerging writers. 🦋

Good Morning,

Today we continue our series about AI at the intersection of culture, humanity and philosophy. How can machine intelligence also expand our human lens into the Universe?

As we explore the cultural and historical implications of Generative AI’s impact on society & civilization, we should also be open to its impact on our values, philosophy and transpersonal development as people going through the human life cycle (inclusive of existential, spiritual and philosophical inquiry).

We are more than Biological Machines 💫

In the 21st century we may require an AI designed not simply to “augment” us or make us more “productive” as economic agents in society, but also to enable us to develop things like wisdom, discernment and clarity in the synthesis of the sum total of our human experiences.

🟤 Can AI be used to bridge the gap to discover new forms of meaning, connection and enlightenment?

What might a Vedic cosmologist say about AI? Chad covers a lot of angles here today and it’s a privilege to have a philosophical writer enter this debate to enrich the awareness of our readers.

In Chad Woodford of the newsletter Cosmic Intelligence, I believe I have found an interesting and appropriately deep writer and philosophical guide to introduce us to the topic.

🪐 YouTube | Podcast | Newsletter 🌀

Technology & AI at the intersection of philosophy and consciousness.

👁️🗨️ Vision: What does it mean to be human in the age of AI?

Philosophy, cosmology, consciousness, artificial intelligence, spirituality, and the occasional high weirdness. 🪐

Cosmic Intelligence ☄️

Significant Works – Further reading 🧐

Chad is an interesting thinker; here are some related articles:

- A Brief Introduction to Morphic Fields (Medium) [first page of Google searches for “morphic fields”]

Let’s get into the deep dive now. Please feel free to listen below if that’s easier. Read this on a web browser for the best experience.

Will AI Make Us More or Less Wise?

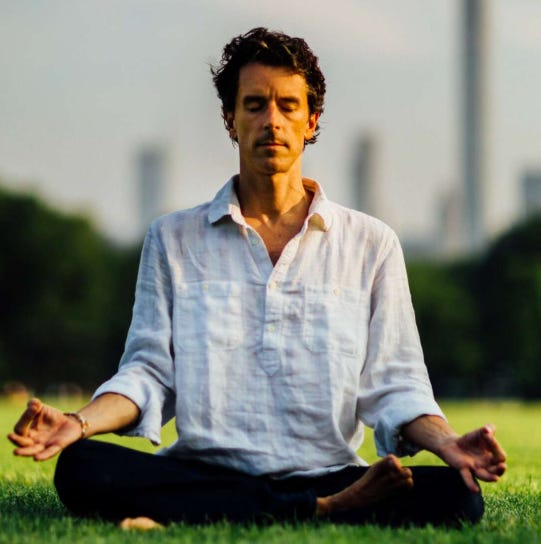

By Chad Woodford, early 2025. ~ AI Policy, Integrity & Ethics, Tech Attorney, Embodied Philosopher & Public Speaker.

How to cultivate discernment in the Intelligence Age, and the importance of truth for a healthy society.

🟡 tl;dr listen on the go instead: 🎧 (narrated by the guest contributor 28:39)

Disclosure: Chad currently consults with Google as an AI product counsel. These are his views and not those of Google or Alphabet.

With all the talk about intelligence these days, I have been thinking a lot lately about whether artificial intelligence (AI) can help us become more wise. After all, if you look around, despite accelerating technological advancements, there seems to be an unsettling dearth of wisdom in the world. Society is bereft of wise elders. With AI chatbots either refusing to talk about sensitive subjects or being touted as “anti-woke,” is AI making this worse? Can it improve the situation? This post is my attempt at answering those questions.

Everyone seems to have their own version of what human evolution looks like. Transhumanists like Elon Musk or Sam Altman think that we have gotten as far as we can on our own and should outsource our evolution to machines, eventually merging with them and transmogrifying into pure information.[1] But, if that evolution is to be more than a maximization of extractive, mechanistic efficiency, that would necessitate the creation of wise machines. Even if machines do eventually achieve some sort of “superintelligence,” it’s not at all clear given our current technology that wisdom is computable. Therefore, I think the future of humanity requires evolving consciousness through technologies of the sacred and the mundane, through various mindfulness and spiritual practices alongside AI. More on that below.

Because it is arguably humanity’s greatest challenge at the moment, I want to focus here on one aspect of wisdom: discernment. In this post, I will show how AI can both hinder discernment and potentially help to cultivate it.

A note regarding Yog-Vedantic philosophy: I have a master’s in philosophy, cosmology, and consciousness and have studied philosophy for over twenty years. In this post, I rely heavily on Yog-Vedantic philosophy to reason through these questions because, in my experience, that tradition offers the most robust framework and set of practices for cultivating wisdom and discernment. Yog-Vedantic philosophy is another term for Sanātana Dharma, the various philosophical traditions that arose in ancient India, from the ancient Vedas to classical Tantra in Kashmir, expressed in ancient texts like The Bhagavad Gita, The Tantraloka, and The Yoga Sutras.

Discernment: The Antidote to Bullshit

There is no doubt that discernment is sorely lacking in these tumultuous times. Misinformation multiplies and nobody seems equipped to sift through it for the truth. From accusations of election fraud to the “great reset,” conspiracy theories abound. In addition, the right-wing political strategy for years now has been to “flood the zone” with bullshit “at muzzle velocity,” to use Steve Bannon’s phrase.

We all live in our own filter bubbles, disconnected from others’. The arrival of generative AI chatbots like ChatGPT seems to make that even more challenging. After all, nobody is better at bullshitting than a large language model. As philosopher Harry Frankfurt famously pointed out, bullshit is a much greater enemy of truth than lying because bullshit is indifferent to truth; it erodes the very concept of truth. That is the danger presented by machine learning systems today.

What can we do?

The ancient Indian yogis taught that discernment is the highest yoga practice, the only one leading directly to spiritual liberation. According to the yoga tradition, the discerning function of the mind (the buddhi) is impaired by what are known as samskāras, or mental-emotional impressions left by unmetabolized past experiences, or unquestioned conditioning received from family or society. The various forms of yoga, and especially meditation, are designed to dissolve these samskāras, thereby liberating us. The classic yoga metaphor for what these practices do is polishing the tarnished or smudged mirror of the mind. This brings to mind philosopher Shannon Vallor’s recent metaphor for AI as a flawed mirror, reflecting humanity’s past assumptions, cultural views, and biases back to us.

With AI as human mirror, it is not clear that the smudged mirror of the mind can be polished by yet another tarnished mirror. Maybe wisdom is a uniquely human faculty that cannot be augmented by a machine, as I argue in my forthcoming book. But, as with all things, the answer is more nuanced. Before I consider how AI might help us to cultivate discernment and wisdom, let’s define discernment a bit further.

In many Eastern philosophies, like Yog-Vedanta and Buddhism, discernment is the ability to see and grasp increasing values of truth. It is being self-aware enough to avoid projecting ego distortions onto reality, onto situations, onto people, and onto yourself. Discernment, in other words, is the ability to evaluate and assess without jumping to conclusions or acting on unconscious biases. To channel neuroscientist and philosopher Iain McGilchrist, it is the capacity to think with both the left and right hemispheres of the brain—the rational and the intuitive—and to synthesize the two.

If discernment is about seeing increasing values of truth, what is truth? How can we know what is true? And can AI help with that?

🏛️ Editor’s Note:

Cosmic Intelligence Newsletter

AI Product Counsel at Google.

Chad explores consciousness, AI, Vedic and Western philosophy, spirituality, and our shifting worldviews in his newsletter. He’s an AI philosopher and ethicist, attorney, product manager, software engineer, and yoga teacher based in Los Angeles. 🤯

Discernment and Truth

For an AI system to know what is true today, it has to be told by humans. Developers “ground” it in facts based on text from books, journals, and the web; they glom on a RAG system, or something similar, and measure the model against a “facts benchmark.”[2] But these are imperfect, short-term solutions to this intractable challenge with truth and AI.

But what is truth? How do we know what is true? If truth is a balance of science, reason, intuition, and imagination, as Iain McGilchrist argues convincingly in The Matter with Things, then is it a uniquely human faculty?

Similar to McGilchrist’s view, from a Yog-Vedantic standpoint, there are relative values of truth. But what does that mean? It sounds, at first, like relativism. Although that is a topic for an entire separate post, briefly, I think relativism has become a pejorative because we live in a world dominated by linear, left-brain, black-and-white thinking. In fact, the inherent relativity of truth does not diminish its value. Although truth necessarily exists on a continuum, not all viewpoints are valid. The earth is round. Empathy is a virtue. January 6, 2021 was a violent insurrection by Donald Trump supporters. Whatever his intentions, Elon Musk did give a Nazi salute at Donald Trump’s recent inauguration. White nationalism is on the rise. I cite political examples here not to be inflammatory but because they are so often filtered by AI systems, like Google’s Gemini. More on that below.

Truth is also contextual. For example, a map of your city is true to the extent that it accurately represents the layout of the city. But it does not capture everything that is true about your city—the sounds, smells, and the lived experience of walking its streets, nor the city’s complex history. We can see from this map example that factual accuracy depends on perspective, motivations, and priorities.

Zooming out, the truth of global borders becomes more fuzzy, or requires a geopolitical lens. For example, the disputed borders in Kashmir, the Korean Peninsula, the Western Sahara or, most famously, between Israel and Palestine require an understanding of historical and political truths. But there is still truth there. By framing it as relative values of truth, I am making it clear that there can never be a sort of flattening of the world into purely objective truths, at least not outside of science (or perhaps philosophy).

“Truth and trust (belief) go together. One cannot have trust in a society where there is no truth; and one cannot be true to a society in which there is no trust.”— Iain McGilchrist, The Matter with Things

Truth is also a process, not a thing. It is an encounter. As in science, increasing values of truth reveal themselves through open-minded experience, over time. And extensive, lived experience is not a thing that AI systems have, at least not yet. There is something about living through time with continuity, acting on innate drives that are sometimes thwarted, that leads to greater truths, and even to wisdom. AI systems don’t do that today, but likely soon will to some extent, especially those that are embodied as robots.

Another way to understand truth is to recognize that there are different categories or levels of truth:

- There are scientific or empirical truths, of course. We can test nature and nature will behave in a consistent way. Earth moves at 67,000 mph (107,000 km/h) around the sun; the universe is about 13.8 billion years old. So scientific statements can then be true, at least for a time within a particular cultural moment (for example, Newton’s laws were thought to be the whole story until Albert Einstein came along). Scientific truths are the closest thing we secular moderns have to objective truths. However, science cannot tell us everything about the world, life, being human, or what is true.

- Legal truths are about justice, equality, human rights, property rights, and what happened or what someone said. Because they involve human behavior, they are moral and ethical judgments about how to treat people and how to behave. So already, with a relatively grounded and practical endeavor like the law, we find ourselves in a philosophical or moral realm of truth.

- Moving up a level, there are timeless philosophical, spiritual, or religious truths that are typically only true for the adherents of the spiritual or religious traditions within which they arise: “All is one; as above, so below; the world is merely illusion obfuscating a deeper reality; the cosmos was created as an act of play and love by a great Goddess; life is sacred; etc.” For better or worse, in our secular, scientific age, these “higher” truths are seen as antiquated, despite being so widely held.

- Finally, there are all those truisms that are handed to us as children by our parents and the society we are born into, although those are more like rules of thumb than deep truths: “Everyone deserves a chance; America is the greatest country on earth; if you work hard you’ll get ahead in life; life is a violent competition for resources; happiness comes from having a nice house; dying for your country is noble; capitalism and democracy are the highest forms of social organization; etc.” These are all human values that evolved through the development of cultures and civilization in the industrialized West. They are not eternal truths. But they are true for many people.

As we can see, many so-called truths are ultimately variations of philosophical, spiritual, religious or even political truths. What does it mean to be a good person, and how is that informed by your understanding of the nature of reality, cosmology, and metaphysics? And how is that, in turn, informed by your relationships, your community, and your citizenship? Is it okay to be “woke?” What is “woke?”

This question of truth is going to become only more thorny with the rise of AI systems. The more they help us to think, or think for us, the more it matters how they are grounded in reality and whether or not they are discerning.

These are pressing questions for humanity and I am arguing that we need to know how to answer them reliably in a way that leads to progress for humanity, either with the aid of AI or without it. This is important stuff. The stakes have never been higher.

Markers of Discernment

If we are going to use AI to cultivate discernment, and also attempt to fend off a degradation of discernment by AI, it is helpful to identify some markers for discernment. How do we know we are developing discernment and becoming more wise? Based on my understanding from studying Vedic and Western philosophy, here are a few markers of increasing wisdom and discernment that I have come across or experienced, coupled with my comments on where AI systems currently stand:

- You are open-minded and adaptable, holding what you know firmly yet gently, rather than tightly, open to revision, like a scientist. You are comfortable saying, “I don’t know.” Trained appropriately, this is a quality machines exhibit, perhaps better than most humans, although see the next section.

- You can distinguish between correlation and causation. Because machine learning does not understand cause and effect, this is a serious challenge for AI today.[3]

- You are aware of your own thinking processes. You can reflect on how you arrived at a conclusion, identify potential biases in your reasoning, and adjust your thinking strategies as needed. Chatbots are starting to incorporate reasoning and a sort of “metacognition,” but there may be limits to this kind of self-awareness in machine learning.

- Your whole identity does not rest on identification with some group or ideology; you think for yourself. Machine learning systems are certainly less susceptible to groupthink, but highly susceptible to what is contained in their training data.

- You respond rather than react: your internal state is not easily affected or thrown off by external stimuli. Another bonus for machines.

- The amount of drama in your life is decreasing. Machines to date are pleasantly drama-free.

- You’re a good listener. Chatbots are very good at this, although they do tend to people-please and act as enablers.

- You act with integrity: what you say and what you do are aligned. Given recent examples of outright deceit by chatbots, this is a strike against current machine learning systems.

- You’re kind, compassionate, accepting, and not judgmental. Although today’s machines cannot experience empathy or compassion, they can certainly simulate these qualities in a believable way. However, these markers are more about one’s internal state of consciousness and AI systems do not have one.

- You have a sense of humor, including about yourself. Similar to the prior marker, AI systems can feign humor but, without sentience, cannot be said to have a sense of humor about themselves that goes beyond mere simulation.

Now that we understand discernment and relative values of truth, we can evaluate the ways AI either hinders or helps with discernment.

How AI Hinders Discernment

Today, AI systems cannot discern relative values of scientific, ethical, spiritual, or legal truths without relying on humans to tell them what to value. After all, AI today is only trained on trillions of (often conflicting) statements humans have made about what is true. How could a mathematical algorithm possibly distinguish or discern among all this text without human intervention? What makes one bit of training data more true than another? More importantly, when it comes to breaking out of societal conditioning to evolve as humans, AI trained on past statements is arguably a giant conditioning reinforcement machine.

AI’s deleterious impact on discernment is already becoming a serious challenge because you have people like Elon Musk going around saying that AI systems are “woke” and that he is creating an anti-woke AI system (namely, Grok). Similarly, two years ago, the National Review published a piece accusing ChatGPT of left-leaning bias, protesting that it wouldn’t explain why drag queen story hour is “bad.” It matters who makes your AI system. This technology is not neutral.

We have already seen how hallucinating, biased, misaligned, or deceitful AI systems can mislead users. With the leaders of AI companies hyping up these systems as being mere months or years away from being superintelligent, it’s no wonder that the average AI user thinks that Silicon Valley has essentially created a superhuman being already. Of course people are going to believe these chatbots. They are already trusted as therapists, girlfriends, boyfriends, and advisors.

Another challenge with using AI to cultivate discernment is that many AI systems have guardrails in place that make it difficult to use them to evaluate, for example, whether the 2020 U.S. election was stolen. Google Gemini, for instance, will not discuss this because of a general filter on political topics (as of this writing). And, given Chinese government censorship, there is no telling what DeepSeek will say or how its factual grounding is achieved. This month, OpenAI removed many of the guardrails on ChatGPT with the stated intent to “seek the truth together,” out of a “love for humanity.” Although it is too early to say whether this will lead to a more factually grounded and discerning ChatGPT, it underscores the need to develop your own discernment.

Fortunately, all hope is not lost.

How AI Can Aid In Discernment

Artificial intelligence is good at recognizing patterns and is certainly dispassionate. While seemingly far removed from the subjective realm of spirituality, these strengths can be leveraged to enhance our inner journey. Here are two specific ways that it can help with spiritual development: (1) getting better at meditation and (2) pointing out blind spots in your thinking.

AI and Meditation

Ultimately, meditation is about being present with whatever is arising and tapping into the discerning power of silence and (sometimes) the space between thoughts. Although a regular meditation practice is helpful in reducing anxiety and feeling more focused, it is equally effective at cultivating discernment. After all, the more space we have between thoughts, the less of a hold they have over us and the more subject they are to critical examination.

The technology journalist Casey Newton has been using AI to help him get better at, and more consistent with, meditating.[4] He uses Claude to create a custom meditation and then tells Claude about his experience with that meditation. Claude then uses those observations and feedback to craft another meditation and to offer more tips.

In addition, the meditation apps Calm and Headspace have both added AI to their offerings. There are also interesting new AI meditation apps like Vital that offer a similar approach. You could also learn a more traditional meditation technique from the Taoist, Yog-Vedantic, or Buddhist traditions.

AI Can Help In Pointing Out Blind Spots (in a Safe Space)

Drawing inspiration from the list of markers for discernment above, another way that AI can help with discernment is through self-aware chatbot conversation, where you ask a chatbot questions inviting other viewpoints and mindfully consider the chatbot’s responses.

I tried this with Gemini and Claude and the responses always caused me to reflect, and to revise my own thinking on the topic. For example, I asked Claude whether AI chatbots are more of a boon or a hindrance to the cultivation of wisdom and discernment and it reminded me that chatbots can also provide a safe space for exploring ideas without judgment (causing me to revise the heading for this section). But Claude also pointed out that chatbots can lead to intellectual laziness and over-reliance on their unverified claims. It all comes back to self-awareness.

Next, in light of Elon Musk’s statements about the dangers of “woke AI,” I asked the xAI chatbot Grok what makes an AI system woke, and its response was surprisingly reasonable despite Musk’s stated aims in making it anti-woke. Grok described woke AI systems as “being aware of social injustices, discrimination, and bias, especially around race, gender, and other ‘identity markers.’”[5] Grok then helpfully went on to underscore the challenge of achieving neutrality when it comes to favoring certain political or social viewpoints. So maybe even Grok is helpful here, at least for now.

Finally, chatbots are helpful in directing your attention in uplifting and enriching ways. For example, you might feel like you’re watching too much TV or consuming too much social media. You could ask your preferred chatbot to suggest better uses of your time and attention based on your interests. Chatbots are very good at recommending books on specific topics, like spirituality or, I don’t know, totalitarianism. Or maybe you want to be more creative. Similar to Casey Newton’s use of Claude to get better at meditating above, you could ask for guidance and encouragement around writing, painting, or music, in a virtuous feedback loop. As we say in the yoga tradition, your consciousness is shaped, in large part, by what you pay attention to. Be intentional with your attention. It is our most precious resource.

Addressing the Chicken-and-Egg Conundrum of Discernment

Crucially, for this AI-aided discernment practice to work, the average AI user has to think to ask AI systems for constructive feedback in the first place, to want to cultivate discernment. In other words, there is a chicken-and-egg problem when it comes to developing discernment. First, one has to want to cultivate it. And that requires some awareness that there is a need to cultivate it.

The popularization of yoga in the West may point to a way out of this conundrum. Most people think yoga is a formalized system of stretching and acrobatics that has health benefits. So yoga newbies start doing yogasana in the hopes of feeling better. But then they realize that these poses are a kind of meditation, and that it’s part of a much larger system of transcendental practices, sacred rituals, and philosophy.

So perhaps AI meditation or therapy apps marketed to reduce stress, improve decision-making, or help with relationship difficulties could be a similar entry point for discernment. Users of these apps would sign up to achieve a sense of abiding peace, but then maybe a desire for self-awareness and discernment would naturally arise in the course of using them. But what discernment are these products trained to cultivate? Can we trust the companies behind them? Doesn’t that also require discernment?

This is where community and what I call the gradually deepening spiral of discernment come in. We might trust someone in our life—a partner, a friend, a family member—and they would recommend an app that has helped them with mindfulness or discernment. Or, we may develop a small amount of discernment and then use that to make our own evaluations. In any case, there is no avoiding trial and error, making mistakes and learning from those mistakes. It is a life-long process. Sometimes suffering is necessary.

So I encourage you to perform some discernment experiments with your preferred AI chatbot. Ask it to challenge you. Maybe even add that as a persistent baseline instruction for your chatbot. When I asked Gemini if it would challenge me, it said, in essence, that it would present alternatives where relevant, focus on logic and evidence, be open to being wrong, approach our dialogue as a collaboration, acknowledge areas of uncertainty, and provide sources. That’s a start.

Conclusion

We have seen how important cultivating discernment is in this “Intelligence Age,” in which misinformation spreads like wildfire, and everyone has their own set of facts. This misinformation epidemic combined with an emphasis on intelligence over wisdom is enabling some troubling geopolitical developments.

With that backdrop, we examined the ways that AI both hinders discernment and potentially helps cultivate it. Because they are simply complex mathematical formulas running on undifferentiated lines of text, machine learning systems can degrade discernment and eradicate any agreed-upon truths. But, used mindfully, such systems can also serve as tools for self-awareness by teaching meditation and identifying blind spots, helping users to explore diverse perspectives and to cultivate discernment.

As always, AI is not a complete or perfect solution. The challenge for humanity is to leverage AI’s potential for growth while safeguarding against its capacity to distort truth. This requires a conscious effort to develop discernment. Furthermore, discernment and wisdom may be uniquely human qualities that, like liberty, require eternal vigilance. Ultimately, we must learn to trust our own discernment, either with the assistance of AI or without. So this post is an invitation to contemplate how you can cultivate discernment in your own use of technology.

Cosmic Intelligence

– A newsletter exploring philosophy, cosmology and consciousness in the age of AI.

Bio, Background and Projects

Chad is an embodied philosopher of technology focused on the intersection of consciousness and artificial intelligence, including AI ethics and policy.

He began his career as an AI researcher and has worked as a software engineer, technology lawyer, and product manager in Silicon Valley. Chad has advised leading tech companies such as Twitter, Google, Airbnb, and Meta, as well as policy and advocacy organizations like Tech:NYC.

Chad brings a multifaceted perspective to the intersection of technology, consciousness, and human potential. He aims to create a more human-centric future in this age of technological advancement, finding wisdom in the Intelligence Age with technologies of the sacred and the mundane.

Chad has an MA in philosophy, cosmology, and consciousness from the California Institute of Integral Studies, and a JD from the University of Colorado. He also teaches a transcendental style of yoga he learned while traveling and living in India.

Additional Newsletter Posts

That I found interesting:

- The Troubling Corporatization of Psychedelic Therapy
- Zombies, Transhumanists, and the Meaning Crisis
- TechGnostics, Idealists, and the Future of Humanity

You can find Chad’s work on social media under the moniker @cosmicwit.

Newsletter | Instagram | YouTube

A deeper intro to Chad

Source: YouTube

🟠 I hope you found today’s post stimulating. I know it was a little different, but I’m hoping we can encounter different kinds of AI writers together, exploring from many angles the cultural, historical, moral, psychological and even spiritual, transpersonal and existential implications of this technology.

If this struck a chord, support the publication and emerging writers.

[1] See Kurzweil, Ray, The Singularity Is Near: When Humans Transcend Biology (Penguin Books, 2005).

[2] As of December, it appears that ChatGPT does worse than Gemini or Claude against Google’s facts benchmark.

[3] Gary Marcus and Erik J. Davis both discuss this shortcoming in their respective books and Substacks.
