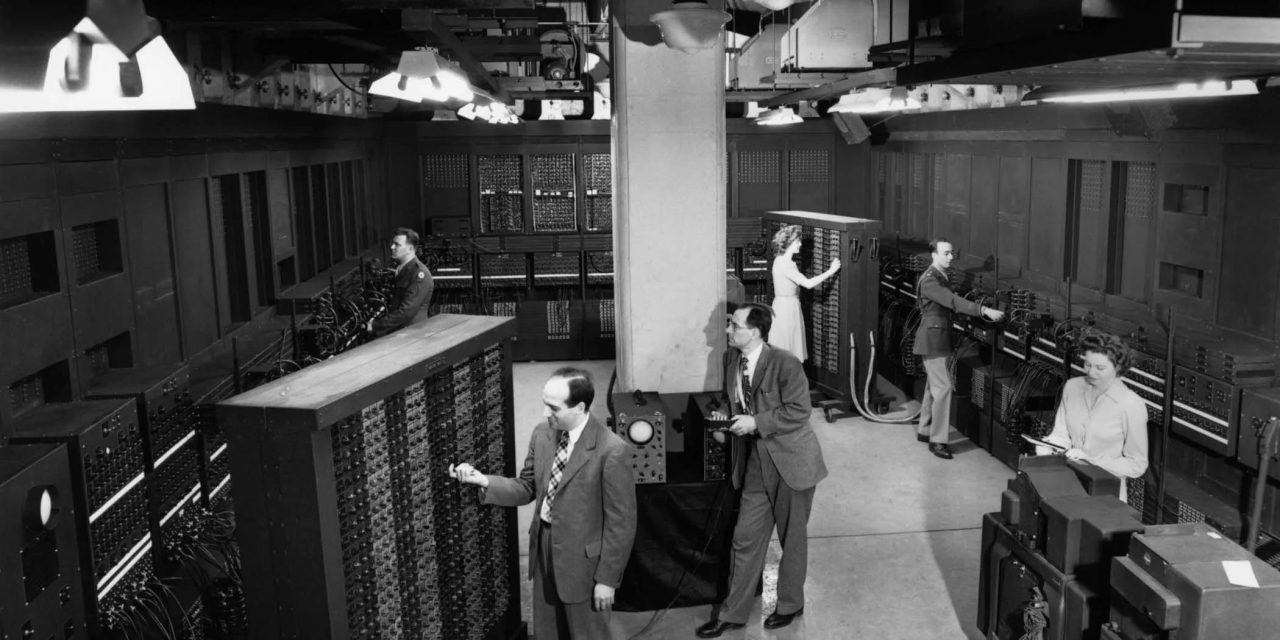

Caption: The Evolution of Data Centers

We know that BigTech capex is likely to increase from $200 billion in 2024 to perhaps $300 billion in 2025, per Morgan Stanley projections. Where will the energy and water required come from?

It’s becoming impossible to think about the future of AI without deeply understanding the semiconductor supply chain and the future of datacenters.

How generative AI and the great datacenter boom (what I term the GDB) impact environmental policy, and the local regions around the world where these datacenters will be built, is set to get fairly interesting in the decade ahead.

Rising BigTech Capex Guarantees Controversy

While Google and Meta intend to invest $62.6 billion and $52.3 billion, respectively, Amazon and Microsoft are expected to lead in capex, devoting $96.4 billion and $89.9 billion, respectively. Cloud providers such as Microsoft, Amazon, Google and Oracle are accelerating the pace at which they build new datacenters, not just at home in the U.S. but abroad, from Microsoft in Southeast Asia to Google in South America.
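As a quick consistency check, a few lines of Python can total the four per-company figures above and compare the result with the roughly $300 billion projection in the opening paragraph. This is a minimal sketch that reuses only the quoted numbers. The dictionary name and output formatting are illustrative.

```python
# Projected capex figures quoted above, in billions of USD.
capex_billion_usd = {
    "Amazon": 96.4,
    "Microsoft": 89.9,
    "Google": 62.6,
    "Meta": 52.3,
}

total = sum(capex_billion_usd.values())

# Rank companies from largest to smallest planned spend.
ranking = sorted(capex_billion_usd, key=capex_billion_usd.get, reverse=True)

print(f"Combined capex: ${total:.1f} billion")
for name in ranking:
    share = capex_billion_usd[name] / total
    print(f"{name:<10} ${capex_billion_usd[name]:>5.1f}B  ({share:.0%} of total)")
```

The four figures sum to about $301.2 billion, which is in line with the ~$300 billion estimate. Amazon and Microsoft together account for roughly 62% of that total.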

Data centers are anticipated to consume a substantial portion of electricity in the coming years. Goldman Sachs estimates that global data center power demand will increase by 160% by 2030, rising from approximately 1-2% of overall power consumption to about 3-4%. How this is navigated involves many interesting subplots, and together they will reshape the future of energy, geopolitics and cloud hyperscalers’ CSR (corporate social responsibility) policies.

Between 2024 and 2030, electricity demand for data centers in the U.S. is projected to grow by about 400 terawatt-hours (TWh), with a compound annual growth rate (CAGR) of approximately 23% according to McKinsey.
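The two McKinsey figures, an increase of about 400 TWh and a CAGR of about 23%, together imply a starting level. The sketch below is a back-of-the-envelope calculation that assumes six full compounding years between 2024 and 2030. The baseline it produces is derived here and is not a number McKinsey reports in this passage.

```python
def implied_start_and_end(increase: float, cagr: float, years: int) -> tuple[float, float]:
    """Solve start * ((1 + cagr) ** years - 1) = increase for the start value."""
    growth_factor = (1 + cagr) ** years
    start = increase / (growth_factor - 1)
    return start, start * growth_factor

# Figures quoted above: ~400 TWh of added U.S. demand, ~23% CAGR, 2024 -> 2030.
# ASSUMPTION: six compounding years between the two endpoints.
start_twh, end_twh = implied_start_and_end(increase=400.0, cagr=0.23, years=6)
print(f"Implied 2024 demand: {start_twh:.0f} TWh")
print(f"Implied 2030 demand: {end_twh:.0f} TWh")
```

Under those assumptions, the numbers imply U.S. data center demand of roughly 160 TWh in 2024, rising to roughly 560 TWh in 2030.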

The rapid growth in data center capacity poses challenges for existing power infrastructure. Huge capex increases in 2025 signal that these challenges are likely to accelerate, especially in the 2025 to 2035 period.

Which Countries Have the Most Data Centers?

Data by Visual Capitalist.

As of December 2023, over 10,000 data centers were operating worldwide. Of these, 86% were in the top 10 nations, and over half were in the U.S. This distribution is set to shift dramatically, and that shift will literally transform our world in ways we cannot yet fully understand in 2024.

The speed of GPU improvements; energy, water and power requirements; CO2 emissions; corporate control; and the intricate geopolitical and national security implications of AI’s growing infrastructure are all part of the AI arms race I try to cover in AI Supremacy Newsletter. In the future I’m looking at, AI is as much about economic and cultural control over the world as it is about consumer products or boosting productivity for certain industries or professions.

As an emerging tech analyst, I follow the semiconductor industry, robotics and quantum computing closely. In the convergence of civilization with AI, and the exponential shifts we will begin to see in the coming years, hardware is as important as software.

It is estimated that over $500 billion will be required for data center infrastructure alone by the end of the decade. Increasing reliance on cloud computing and artificial intelligence is driving this demand higher. As a result, the carbon footprint of datacenters may add significantly more to global carbon emissions than is being projected today. BigTech’s heavy investment in GPUs will have odd and crucial spillover effects:

AI may produce millions of tonnes of electronic waste by 2030.

Taiwan’s soaring energy prices and growing outages (the price of energy could become a problem). While Taiwan is investing heavily in offshore wind power and aims to generate 27 to 30 per cent of its electricity from renewables by 2030, it started very late. The power price increases do not pose a significant financial problem for TSMC but they illustrate what the world can expect in the years ahead.

Nuclear power plans by companies like Meta and Amazon are experiencing some early challenges.

Microsoft and Constellation plan to restart Three Mile Island in Pennsylvania; Amazon took a stake in advanced reactor company X-energy; Google linked up with Kairos Power.

The Federal Energy Regulatory Commission late Friday rejected a proposal that would have allowed an Amazon data center to co-locate with an existing nuclear power plant in Pennsylvania.

Can this wave of Datacenters lead to AI mediated Sustainability solutions?

I asked Aysu Keçeci of Road to Earth 2.0 for a deep dive on her take on the environmental impacts of this movement and what some of the solutions might look like. Her work tends to focus on sustainability, AI and systemic change. She draws on themes from history, sociology, economics and philosophy as building blocks for a more holistic macro-level understanding.

As we explore emerging technologies, several questions become important: their environmental impact, how we think about sustainability, energy (power), water, carbon footprints, the future of nuclear and other forms of energy, and the well-being of people who actually live near datacenters.

If you think environmental concerns are important as they relate to AI and datacenters, consider sharing this article.

Read more by Aysu

Recent titles:

What If Humanity’s Role is to Parent the Next Intelligent Species?

The Potential Role of AI in Enhancing Sustainability Across Sectors

What If Humans Are No Longer Among the Living?

The Sirens of Inaction: Urgency, Fear, and Hopelessness

By Aysu Keçeci, October 2024.

The first data center was created in 1945. In less than a century, data centers have quietly shaped many fields behind the scenes and have even given rise to new ones.

From humble beginnings to today’s digital palaces – data centers have gone from ‘nerdy closet’ to ‘coolest kid on the block’ faster than you can say ‘upload complete’.

In the near future, data centers will become something we all hear about much more frequently, and they will permeate the way we think. Let’s dive deep to understand them better.

1. The Data Center Boom

1.1 The Digital Backbone of AI

Data centers are the physical backbone of our digital world, housing thousands of computer servers that run continuously to support everything from cloud storage and financial transactions to social media and government operations. When we talk about data being stored in the “cloud,” it’s actually being housed in these massive facilities.

Just a few decades ago, data centers were simple server warehouses, often tucked away in remote areas. The internet boom of the late ’90s changed that, with companies like Exodus Communications leading the charge. But by the early 2000s, overinvestment led to a crash, leaving many centers underused.

Now, in 2024, data centers are booming again—this time fueled by a 24/7 digital world and the skyrocketing demand for AI-driven computing power. The rapid advancement of AI is reshaping our world in countless ways, yet it’s also having a profound and often overlooked impact: an unprecedented surge in energy consumption.

Caption: statista.com

1.2 AI’s Insatiable Appetite for Energy

The rise of generative AI and other advanced technologies has led to a dramatic increase in data center construction and expansion. According to the International Energy Agency (IEA), global data center electricity demand is projected to more than double between 2022 and 2026, with AI playing a major role in this increase.

Research has shown that the cost of computational power required to train AI models is doubling every nine months, with no signs of slowing down. This exponential growth in AI capabilities comes at a significant energy cost, as these models require substantially more processing power and energy compared to traditional computing tasks.
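A nine-month doubling is easier to grasp as a growth factor over a fixed period. The sketch below converts a doubling time into such a factor and sets it beside the roughly two-year doubling traditionally associated with Moore’s Law. It is a minimal sketch, and the function name is illustrative.

```python
def growth_over(months: float, doubling_months: float) -> float:
    """Growth factor after `months` for a quantity that doubles every `doubling_months`."""
    return 2 ** (months / doubling_months)

for label, doubling in [("AI training compute cost (9-month doubling)", 9),
                        ("Moore's Law transistor count (24-month doubling)", 24)]:
    per_year = growth_over(12, doubling)
    per_five_years = growth_over(60, doubling)
    print(f"{label}: x{per_year:.2f} per year, x{per_five_years:.0f} over five years")
```

A nine-month doubling works out to about 2.5x per year, or roughly 100x over five years. A two-year doubling gives about 1.4x per year.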

For example, according to an Arm executive, ChatGPT requires 15 times more energy than a traditional web search. As Harry Handlin, U.S. Data Segment Leader at ABB, noted, “A decade ago, a 30-megawatt data center was considered huge. Now when a company announces plans to build a 300-megawatt data center, no one blinks an eye. And with AI, 1-gigawatt data centers are the norm.” It’s like we went from ‘large fries’ to ‘would you like a server farm with that?’
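Data center sizes are quoted in megawatts of capacity, while electricity statistics are quoted in terawatt-hours, so a simple conversion helps compare the two. The sketch below assumes the facility draws its full rated capacity around the clock, which makes the result an upper bound. The `utilization` parameter is a hypothetical knob for more realistic averages.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours; ignores leap years

def annual_energy_twh(capacity_mw: float, utilization: float = 1.0) -> float:
    """Annual energy in TWh for a facility drawing `capacity_mw` at the given average utilization."""
    mwh = capacity_mw * utilization * HOURS_PER_YEAR
    return mwh / 1_000_000  # 1 TWh = 1,000,000 MWh

for capacity_mw in (30, 300, 1_000):
    print(f"{capacity_mw:>5} MW -> up to {annual_energy_twh(capacity_mw):.2f} TWh/year")
```

At full load, each facility would use at most the following each year:

- 30 MW: about 0.26 TWh
- 300 MW: about 2.6 TWh
- 1 GW: about 8.8 TWh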

Leading tech giants, other companies, and utilities are expected to spend about $1 trillion on capex in the coming years, including significant investments in data centers, chips, other AI infrastructure, and the power grid. This aggressive expansion is fueling an unprecedented surge in electricity demand.

2. The Environmental Cost

2.1 Electricity Consumption

Data centers now account for more than 1% of global electricity use, according to the IEA. This figure is expected to rise dramatically. By 2026, the IEA projects, data centers could add electricity demand equivalent to that of an entire country, somewhere between Sweden and Germany.

To put this into perspective, in 2022, data centers used more than 4% of all electricity in the U.S., with 40% of that energy being spent to keep equipment cool. As demand on data centers increases, even more energy will be required to maintain their operations.
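One way to read the 40% cooling figure is through power usage effectiveness (PUE). PUE is the standard industry metric, defined as total facility energy divided by the energy delivered to IT equipment. The sketch below is minimal and assumes that all energy not spent on cooling reaches IT equipment, which makes the result a lower bound. The function name is illustrative.

```python
def min_pue_from_cooling_share(cooling_share: float) -> float:
    """Lower bound on PUE (total facility energy / IT energy) given cooling's share of the total.

    Assumes every non-cooling kWh reaches IT equipment; lighting, power conversion
    losses and other overhead would push the real PUE higher.
    """
    if not 0 <= cooling_share < 1:
        raise ValueError("cooling_share must be in [0, 1)")
    it_share = 1 - cooling_share
    return 1 / it_share

print(f"PUE >= {min_pue_from_cooling_share(0.40):.2f}")
```

If 40% of the energy goes to cooling, IT equipment receives at most 60%. The implied PUE is therefore at least about 1.67.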

2.2 Water Usage

The environmental impact extends beyond electricity. Researchers at UC Riverside estimated that global AI demand could cause data centers to consume over 1 trillion gallons of fresh water by 2027. 1 trillion gallons of water is equivalent to filling approximately 1.5 million Olympic-sized swimming pools. This staggering figure highlights the often-overlooked water consumption associated with cooling these massive facilities.
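A unit conversion checks the pool comparison. The sketch assumes U.S. gallons and a nominal Olympic pool of 50 m x 25 m x 2 m, or 2,500 cubic meters. Pools deeper than 2 m would lower the count.

```python
LITERS_PER_US_GALLON = 3.785411784   # exact by definition
OLYMPIC_POOL_M3 = 50 * 25 * 2        # ASSUMPTION: 50 m x 25 m x 2 m deep = 2,500 m^3

def olympic_pools(us_gallons: float) -> float:
    """Number of nominal Olympic pools that a volume in U.S. gallons would fill."""
    cubic_meters = us_gallons * LITERS_PER_US_GALLON / 1000
    return cubic_meters / OLYMPIC_POOL_M3

print(f"{olympic_pools(1e12) / 1e6:.2f} million pools")
```

The result is about 1.51 million pools, consistent with the figure above.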

By one count, of the roughly 8,000 data centers that exist globally, about a third are in the U.S., compared to 16% in Europe and almost 10% in China. The Hong Kong-based think tank China Water Risk estimates that data centers in China consume 1.3 billion cubic meters of water per year. That is nearly double the volume that the city of Tianjin, home to 13.7 million people, uses for households and services.

2.3 Carbon Footprint

The global data center industry is projected to emit about 2.5 billion tons of CO2-equivalent through 2030, according to Morgan Stanley, as rising energy demand drives carbon emissions sharply higher. That cumulative total amounts to about 40% of the U.S.’s annual greenhouse gas emissions.
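The two figures in this paragraph are consistent if the 2.5 billion tons is read as the total emitted through 2030 and compared with a single year of U.S. emissions. The sketch below checks that reading. The U.S. total of about 6.3 billion tonnes CO2-equivalent per year is an assumption (a rough recent figure), not a number from the article.

```python
data_center_cumulative_gt = 2.5   # Morgan Stanley figure quoted above, read as cumulative through 2030
us_annual_gt = 6.3                # ASSUMPTION: approximate recent U.S. annual GHG emissions (Gt CO2e)

ratio = data_center_cumulative_gt / us_annual_gt
print(f"Data center emissions ~= {ratio:.0%} of one year of U.S. emissions")
```

2.5 / 6.3 comes to roughly 40%, which matches the comparison above.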

For instance, Microsoft, a major player in AI development, reported that its greenhouse gas emissions had risen by about 30% compared with its 2020 baseline, primarily due to its ambitious AI pursuits. This trend isn’t unique to Microsoft, as other tech giants are also grappling with similar challenges in balancing their AI ambitions with their sustainability goals.

3. Challenges and Concerns

3.1 Strain on Infrastructure

The explosive growth of data centers is putting enormous pressure on local infrastructure, especially power grids and water resources. In regions like Northern Virginia’s “Data Center Alley,” concerns are growing about potential brownouts as data center expansion threatens to outpace power generation capacity. Similar issues are seen in hubs such as Santa Clara, California, and Phoenix, where delays in securing new power connections are becoming common. Internationally, cities like Amsterdam, Dublin, and Singapore have imposed moratoriums on new data center construction due to power infrastructure limitations.

The industry is also grappling with constraints across the power value chain, including shortages and bottlenecks that slow progress. With the industry approaching physical limits on node sizes and transistor densities, coupled with long lead times for new connections, scaling efforts have become increasingly difficult.

3.2 Local Opposition

Local opposition to data center construction is intensifying, particularly in places like Dublin, where data centers already consume nearly 20% of Ireland’s electricity. In Northern Virginia, historically residential areas are being rezoned for industrial use to accommodate new data centers, raising concerns about quality of life and infrastructure strain. Virginia state delegate Ian Lovejoy highlights that data centers have become the top issue for constituents, with concerns about threats to electricity and water access, and fears that taxpayers may be left to foot the bill for future infrastructure upgrades.

3.3 Competition

The race for clean energy is turning into a high-stakes game of musical chairs. When the music stops, let’s hope we’re not the ones left standing with a coal-powered server.

For example, CarbonCapture, a California-based climate tech company, had to cancel plans for its Project Bison, a direct air capture (DAC) facility in Wyoming, due to a lack of sufficient access to clean energy. Launched in 2022, Project Bison aimed to capture 5 million tons of carbon annually by 2030, but the competition for clean energy with industries like data centers and cryptocurrency mining hindered progress.

At the same time, OpenAI has pitched the Biden administration, highlighting the need for massive data centers that could each consume as much power as entire cities. OpenAI argues that this unprecedented expansion is crucial for developing advanced artificial intelligence models and staying competitive with China. It’s like an arms race, but instead of missiles, we’re stockpiling electricity bills.

Caption: Sam Altman in New York on Sept 23. Photographer: Bryan R. Smith/AFP/Getty Images

3.4 Transparency Issues

The exact energy consumption of many AI models remains opaque. Major tech companies have become increasingly secretive about data sources, training time, hardware, and energy usage, especially since the release of ChatGPT. This lack of transparency makes it difficult to accurately assess and address the environmental impact of AI development.

Adding to this concern are questions about the influence of major tech players on organizations that set corporate climate standards. For instance, there are worries about the impact of Amazon and the Bezos Earth Fund on entities like the Science Based Targets initiative (SBTi). The Bezos Earth Fund is a significant funder of SBTi, and Amazon’s separate climate initiative allows for unrestricted use of carbon credits. This has led to fears that SBTi’s rules might be swayed to favor more lenient carbon offsetting practices, a particularly troubling prospect as companies like Amazon face increased emissions from their expanding data center operations.

3.5 Moore’s Law and Its Limitations

Moore’s Law, which posited that the number of transistors in computer chips doubles roughly every two years, has been a driving force in the tech industry for decades. However, we may be reaching the physical limitations of silicon-based CPUs. Without a practical alternative, engineers can no longer increase the computing power of chips as rapidly or as cheaply as they did in years past, potentially impacting the efficiency gains that have helped offset increased energy demands.

4. Sustainable Future Solutions

A 2023 McKinsey & Company study projects that we’re going to need about 35GW of cloud data center power capacity by 2030 to meet AI demand, compared to approximately 17GW required at the end of 2022. This means many more data centers must be built and brought online in a short timeframe. That creates a need for numerous innovative solutions to build data centers, make them sustainable, and optimize their operations.
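The two McKinsey data points imply an average annual growth rate. The sketch below is minimal and assumes eight compounding years from the end of 2022 to 2030.

```python
def cagr(start: float, end: float, years: float) -> float:
    """Compound annual growth rate between two values."""
    return (end / start) ** (1 / years) - 1

# McKinsey figures quoted above: ~17 GW at end-2022, ~35 GW by 2030.
# ASSUMPTION: eight compounding years between the two points.
rate = cagr(17.0, 35.0, 8)
print(f"Implied growth: {rate:.1%} per year")
```

Roughly doubling capacity over eight years corresponds to about 9.4% growth per year.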

4.1 Beating the Heat: Cool Smarter

In the world of data centers, heat is the enemy. Traditional cooling methods devour energy, often accounting for a substantial portion of a facility’s power consumption. Today, data centers are generally cooled either by fans that move air or by liquid that carries heat away from computer racks. Here are a few solutions that stand out:

Futuristic Cooling Systems: University of Missouri researchers, led by Professor Chanwoo Park, are developing a two-phase cooling system that efficiently dissipates heat using phase change, requiring little to no energy.

Caption: Chanwoo Park is devising a new type of cooling system that promises to dramatically reduce energy demands on data centers.

Ultra-Energy-Efficient Temperature Control: Oregon State and Baylor University researchers have developed a method to reduce energy for photonic chip cooling by a factor of more than 1 million, using gate voltage to control temperature with almost no electric current.

Higher Operating Temperatures: A study published in Cell Reports Physical Science suggests that keeping data centers at 41°C (105°F) could save up to 56% in cooling costs worldwide. This approach challenges the conventional wisdom of keeping data centers cold and proposes new temperature guidelines for more efficient operations.

Metal foam technology: Swiss company Apheros secured $1.9 million to advance its metal foam technology, boosting cooling efficiency by 90% and addressing data centers’ growing energy consumption. Apheros aims to provide a more sustainable thermal management solution.

So, cooling data centers is becoming an exercise in thermodynamic acrobatics. We’re one step away from invoking Maxwell’s demon to sort the hot bits from the cold ones.

4.2 Leaving Carbon-Heavy Power

Nuclear Power Options: A Controversial Comeback

As data centers’ appetite for energy grows, nuclear power offers strong potential. Here are some notable developments:

On a life-cycle basis, nuclear power emits just a few grams of CO2 equivalent per kWh of electricity produced. Whilst estimates vary, the United Nations (UN) Intergovernmental Panel on Climate Change (IPCC) has provided a median value among peer-reviewed studies of 12g CO2 equivalent/kWh for nuclear, similar to wind, and lower than all types of solar. (source)

Nuclear Advocacy from Tech Titans: For example, Microsoft and Constellation Energy signed a 20-year power purchase agreement to restart Pennsylvania’s Three Mile Island Unit 1 nuclear reactor. Microsoft will use energy from the plant to power its data centers with carbon-free electricity, supporting its goal to be carbon-negative by 2030 and to match 100% of its electricity consumption with clean energy.

🟢A relaunch of Three Mile Island, which had a separate unit suffer a partial meltdown in 1979 in one of the biggest industrial accidents in the country’s history, still requires federal, state, and local approvals.

Caption: The Three Mile Island nuclear power plant, where the U.S. suffered its most serious nuclear accident in 1979, is seen across the Susquehanna River in Middletown, Pennsylvania in this night view taken March 15, 2011. REUTERS/Jonathan

Major tech executives, including OpenAI CEO Sam Altman and Microsoft co-founder Bill Gates, have touted nuclear energy as a solution to the growing power needs of data centers.

Altman has backed and is the chairman of nuclear power startup Oklo (OKLO.N), which went public through a blank-check merger in May, while TerraPower – a startup Gates co-founded – broke ground on a nuclear facility in June.

Small Reactors, Big Impact: Micro-nuclear developer Last Energy is taking a new approach with its 20 MWe microreactors. After raising $40 million in Series B funding, the D.C.-based company aims to create modular, mass-manufacturable reactors that can be deployed in just 24 months.
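The IPCC life-cycle median quoted above (12 g CO2-equivalent per kWh for nuclear) can be turned into annual emissions for a given load. The sketch uses a hypothetical 1 GW data center running at full load all year. It adds coal at about 820 g/kWh (the IPCC AR5 median for pulverized coal) purely as an assumed point of comparison that does not appear in the article.

```python
HOURS_PER_YEAR = 8760

# Life-cycle intensities in g CO2-eq per kWh.
# Nuclear: IPCC median quoted above. Coal: ASSUMPTION, IPCC AR5 median for
# pulverized coal (~820 g/kWh), included only for comparison.
INTENSITY_G_PER_KWH = {"nuclear": 12, "coal": 820}

def annual_tonnes_co2e(load_mw: float, intensity_g_per_kwh: float) -> float:
    """Annual life-cycle emissions in tonnes for a constant load in MW."""
    kwh = load_mw * 1000 * HOURS_PER_YEAR    # MW -> kW, times hours in a year
    return kwh * intensity_g_per_kwh / 1e6   # grams -> tonnes

for source, intensity in INTENSITY_G_PER_KWH.items():
    tonnes = annual_tonnes_co2e(1000, intensity)   # hypothetical 1 GW load, full year
    print(f"{source:<8} {tonnes:>12,.0f} t CO2-eq/year")
```

Under these assumptions, a 1 GW load would account for roughly 105,000 tonnes of CO2-equivalent a year on nuclear power. On coal, the same load would account for roughly 7.2 million tonnes.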

Clean Energy Options: Harnessing Nature’s Power

While nuclear energy draws attention, renewable sources remain vital for sustainable data center power. Key developments include:

Google’s Renewable Energy Addendum: To address a 48% rise in GHG emissions over five years from AI-driven data centers, Google launched this initiative, urging major suppliers to shift to 100% renewable energy by 2029.

Solar Power Partnership: Google partnered with BlackRock to develop solar energy in Taiwan, investing in New Green Power (NGP) to supply up to 300 MW of solar energy for its data centers and suppliers.

Power Solutions Acquisition: Blackstone Energy Transition Partners acquired Trystar to support its growth amid the energy transition and AI-driven data center expansion. Blackstone plans to invest $100 billion in energy transition projects over the next decade.

Solar Thermal Storage System: Exowatt, backed by Andreessen Horowitz, Sam Altman and others, raised $20 million to launch a modular solar energy platform for data centers. The Exowatt P3 system aims to offer electricity at $0.01 per kWh, providing a cost-effective alternative to fossil fuels and other renewable energy sources.

4.3 Maximize Data Storage

Innovative approaches to data storage not only allow us to accommodate the ever-growing volume of information but also promise to dramatically reduce the physical footprint and energy consumption of data centers. Here are some groundbreaking approaches that could revolutionize how we store and manage data:

World’s Smallest Electro-Optic Modulator: Oregon State University researchers have designed and fabricated the world’s smallest electro-optic modulator, which could mean major reductions in energy used by data centers and supercomputers. This new modulator is 10 times smaller and potentially 100 times more energy efficient than the best previous devices. It is about the size of a bacterium, measuring 0.6 by 8 microns.

An electro-optic modulator controls light with electrical signals, crucial for modern communication systems. In data centers, where data moves via fiber-optic cables, these modulators convert electrical signals into optical ones (light), allowing fast and efficient data transmission over long distances, improving both speed and energy efficiency.

DNA Data Storage: Storing data in DNA sounds like science fiction, yet it may be only a few years away. Professor Tom de Greef expects the first DNA data center to be up and running within five to ten years. Data won’t be stored as zeros and ones on a hard drive but in the base pairs that make up DNA: A-T and C-G. Such a data center would take the form of a lab, many times smaller than today’s data centers.

De Greef can already picture it all. In one part of the building, new files will be encoded via DNA synthesis. Another part will contain large fields of capsules, each capsule packed with a file. A robotic arm will remove a capsule, read its contents and place it back.

🟢The concept of DNA data storage is a prime example of biomimicry, where human technology imitates solutions found in nature. I wonder whether this is a transition toward everything becoming completely organic, just when we assume everything is going to be increasingly inorganic.

Caption: Professor Tom de Greef expects the first DNA data center to be up and running within five to ten years. Photo: Bart van Overbeeke
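The idea of storing zeros and ones in DNA bases can be illustrated with the simplest possible mapping: two bits per nucleotide (A, C, G, T). This is a hypothetical teaching sketch, not the encoding used by de Greef’s group. Practical schemes add error correction, avoid long runs of a single base, and split files across many short strands.

```python
# Hypothetical 2-bits-per-base mapping; real DNA storage schemes add error
# correction and avoid long runs of the same base, which this sketch ignores.
BITS_TO_BASE = {"00": "A", "01": "C", "10": "G", "11": "T"}
BASE_TO_BITS = {base: bits for bits, base in BITS_TO_BASE.items()}

def encode(data: bytes) -> str:
    """Turn each byte into 8 bits, then each pair of bits into one base."""
    bits = "".join(f"{byte:08b}" for byte in data)
    return "".join(BITS_TO_BASE[bits[i:i + 2]] for i in range(0, len(bits), 2))

def decode(strand: str) -> bytes:
    """Reverse the mapping: bases back to bits, then every 8 bits back to a byte."""
    bits = "".join(BASE_TO_BITS[base] for base in strand)
    return bytes(int(bits[i:i + 8], 2) for i in range(0, len(bits), 8))

strand = encode(b"Hi")
print(strand)
assert decode(strand) == b"Hi"
```

Each byte becomes four bases, so the two-byte message `Hi` encodes to the eight-base strand `CAGACGGC`, and decoding reverses the mapping exactly.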

4.4 Energy-Efficient Computing

Innovative computing paradigms are emerging as game-changers in the quest for sustainability. Making computers more energy efficient is crucial: if the worldwide energy consumption of on-chip electronics were ranked alongside nations, it would place fourth, and it is increasing exponentially each year, fueled by applications such as artificial intelligence. Let’s explore some of the most promising innovations in this space:

Quantum Computing: Quantum computers have the potential to perform complex calculations far more efficiently than classical computers. Current exascale and petascale supercomputers typically require about 15 to 25 MW to operate, compared with a typical power draw of about 25 kW for quantum computers.

Neuromorphic Computing: The human brain remains unrivaled in one crucial area: energy efficiency. Even the most advanced computers today require about 10,000 times more energy than the brain to perform tasks such as image processing and recognition. Unlike traditional computers that keep memory and processing separate, neuromorphic systems combine data storage and computation, similar to how brain cells function. This “processing in memory” design reduces energy consumption and enhances performance for AI and machine learning tasks.

Green AI: AI itself can be used to optimize the energy consumption of data centers and computational processes, potentially offsetting its own energy costs and more. For example:

In 2016, Google’s DeepMind AI helped reduce the energy used to cool Google’s data centers by up to 40%.

Machine learning algorithms can study vast amounts of data, uncovering patterns that are usually too complex for human operators to spot, leading to more efficient energy management.

🟢AI Model Optimization: The Scaling Laws for Neural Language Models paper from OpenAI demonstrates that by optimizing factors like model size, dataset size, and compute usage in line with power-law scaling, it’s possible to enhance the efficiency of these models. Specifically, larger models can be more sample-efficient, requiring fewer optimization steps and less data to achieve comparable performance, which ultimately reduces the overall computational load and energy use.
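The scaling-laws paper summarizes its findings as power laws. The sketch below shows the form of its model-size law, L(N) = (N_c / N)^α_N. It uses the fitted constants reported by Kaplan et al. (2020): α_N ≈ 0.076 and N_c ≈ 8.8 × 10^13 non-embedding parameters. It also applies the common rule of thumb that training takes about 6 FLOPs per parameter per token. The fit assumes models trained to convergence on ample data. The 20-billion-token dataset size is a hypothetical value chosen for illustration.

```python
ALPHA_N = 0.076     # exponent reported by Kaplan et al. (2020) for L(N)
N_C = 8.8e13        # fitted constant, in non-embedding parameters

def loss_vs_params(n_params: float) -> float:
    """Power-law fit L(N) = (N_c / N) ** alpha_N, test loss in nats per token."""
    return (N_C / n_params) ** ALPHA_N

def training_flops(n_params: float, n_tokens: float) -> float:
    """Rule-of-thumb training compute: ~6 FLOPs per parameter per training token."""
    return 6 * n_params * n_tokens

HYPOTHETICAL_TOKENS = 2e10  # illustrative dataset size, not from the paper

for n in (1e8, 1e9, 1e10):
    print(f"N={n:.0e}: L~{loss_vs_params(n):.2f}, "
          f"C~{training_flops(n, HYPOTHETICAL_TOKENS):.1e} FLOPs")
```

Each tenfold increase in parameters multiplies the fitted loss by the same factor, about 0.84. Meanwhile, training compute at a fixed dataset size grows tenfold. That tradeoff is why the paper frames efficiency as a balance of model size, data and compute.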

5. The Road Ahead

As we stand at the threshold of an AI-driven era, our path forward demands a reimagining of the relationship between technological advancement and environmental stewardship. The road ahead is both challenging and promising, requiring us to understand and effectively manage what comes with it.

This technological leap forward, however, comes with significant challenges in energy consumption and environmental impact. Yet, these very challenges are catalyzing a unique phenomenon: A Renaissance in Sustainable Solutions. Unlike previous waves of environmental innovation, this renaissance is characterized not only by the rapid development of new technologies but also by an unprecedented urgency in their execution and implementation.

The digital revolution need not come at the cost of our environment; instead, it can be the catalyst for a more sustainable, hyper-efficient, and intelligently managed world. The synergy between tech innovators, environmental scientists, policymakers, and communities will be crucial in crafting a digital ecosystem that enhances rather than depletes our planet’s resources.

It’s time to embrace the seeds of change, learning not only from the past but also from the future that is eager to emerge. Join me, Aysu Keçeci, on my publication Road to Earth 2.0, where I explore these themes in more detail and dive into the possibilities for a more sustainable future.

Aysu Keçeci

About the author

Aysu is an AI enthusiast who works in sustainability consulting. She is currently based mostly in Istanbul, Turkey, and is in the process of relocating to the Netherlands. “Road to Earth 2.0” is a space she created where you can explore the possibilities of the Post-Anthropocene era. What if Earth 2.0 is infinitely better than we can imagine?

Aysu’s journey in sustainability began four years ago, when her project was recognized as one of the Global Top 50 projects in the Google Solution Challenge, an international sustainability competition. Her story was published exclusively on Google’s official blog. She was previously an entrepreneur, founding an IoT hardware startup that developed innovative smart recycling solutions for corporate environments and partnered with the region’s top industrial corporations. She is also the founder of GateZero, a gamification-powered SaaS platform designed for corporate sustainability.

She currently takes on consulting roles with businesses that need sustainability business development, and she also provides content creation support around sustainability, AI and green innovation. For serious business inquiries, her email is aysu@gatezero.co.

Read More in AI Supremacy